Bunt H., Beun R.-J., Borghuis T. (eds.) Multimodal Human-Computer Communication. Systems, Techniques, and Experiments


Developing Multimodal Interfaces 171

date with the speech modality while editing an object with two other modalities (keyboard and mouse). The temporal coincidence between these two independent commands is not enough for the system to integrate them. As mentioned earlier, redundancy also enables faster interaction in COMIT (cf. the 'quit' example in Fig. 4).

In COMIT, modalities may also cooperate to improve recognition. Although speech recognition systems have become much more robust in recent years with respect to both speaker and acoustical variability, the performance of even the best state-of-the-art systems tends to deteriorate when:

- the user speaks before the system is ready,
- speech is transmitted over telephone lines,
- the signal-to-noise ratio is extremely low,
- the speaker's native language is not the one with which the system was trained,
- the speaker has a cold,
- the speaker has produced an out-of-vocabulary utterance.

(see Stern, 1995; Yankelovich et al., 1995). Thus, any chance of improving speech recognition by taking into account events detected in other modalities is of interest. COMIT is so far only a test application, with a small speech vocabulary (30 words). The speech recognition system is a VECSYS-datavox. The branching factor is also small (between 1 and 3), except for the first word, which has 10 possible values. Yet recognition errors still happen. Moreover, we are also building multimodal interfaces to more complex applications (Fig. 2), such as itinerary description (Briffault, 1996), where the vocabulary (including street and building names) and the branching factor are greater. Multimodal interfaces thus have to cope with speech recognition errors.

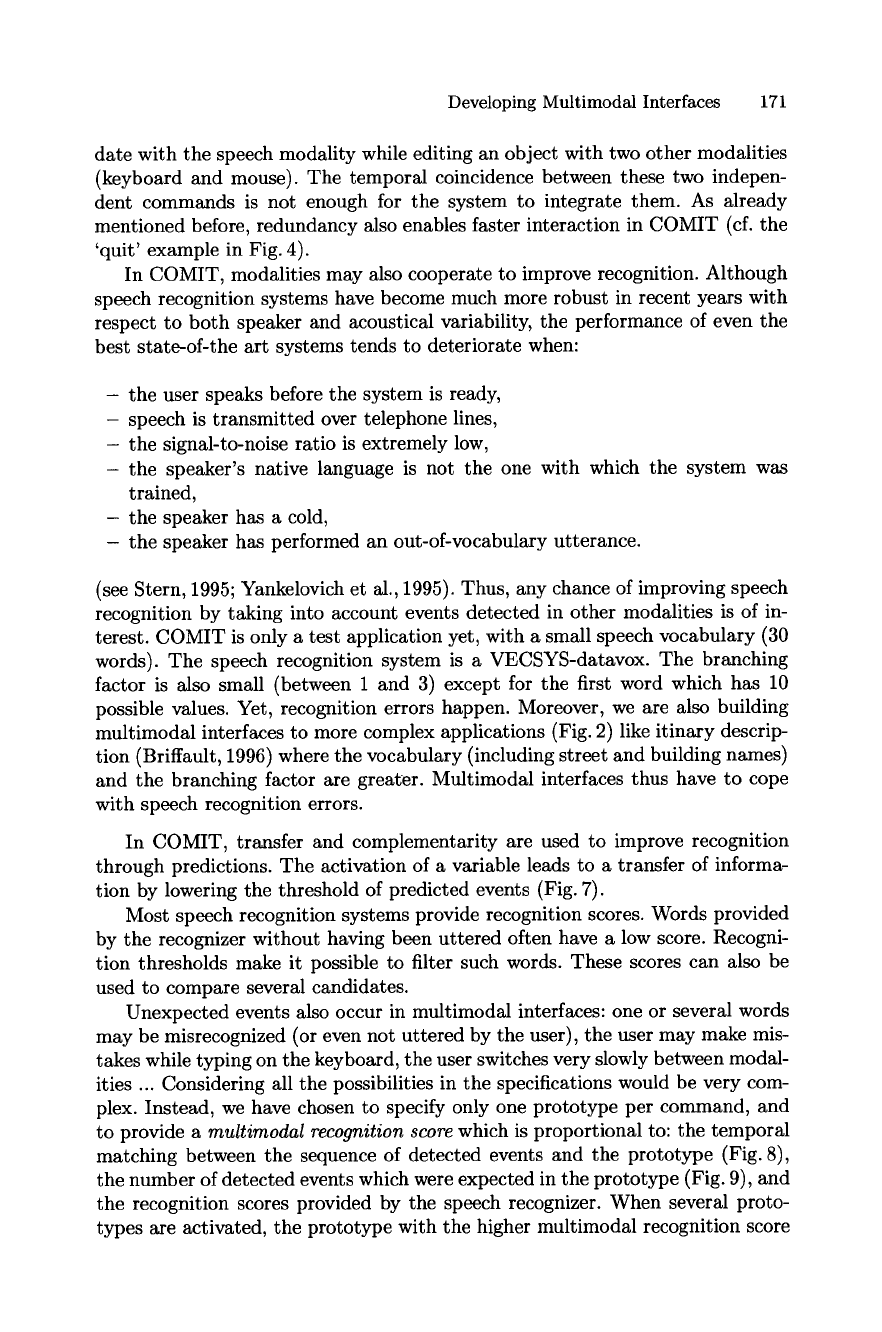

In COMIT, transfer and complementarity are used to improve recognition through predictions. The activation of a variable leads to a transfer of information by lowering the threshold of predicted events (Fig. 7).

Most speech recognition systems provide recognition scores. Words that the recognizer reports although they were not uttered often have a low score. Recognition thresholds make it possible to filter out such words. These scores can also be used to compare several candidates.
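The threshold mechanism can be illustrated with a minimal Python sketch. It is not COMIT's implementation: the class `PredictiveFilter`, the default threshold of 450 and the 300-unit prediction window are assumptions made for illustration. Only the lowered threshold (271), the score (402) and the time stamps are taken from the trace described in Fig. 7.

```python
from dataclasses import dataclass, field


@dataclass
class PredictiveFilter:
    """Filters recognizer output with thresholds that predictions can lower."""
    default_threshold: int = 450   # assumed value, not given in the source
    lowered_threshold: int = 271   # value printed in the Fig. 7 trace
    window: int = 300              # assumed duration of a prediction
    _predicted: dict = field(default_factory=dict)  # word -> expiry time

    def predict(self, words, now):
        """Temporarily lower the threshold of the words expected next."""
        for word in words:
            self._predicted[word] = now + self.window

    def threshold(self, word, now):
        """Return the threshold that applies to `word` at time `now`."""
        expiry = self._predicted.get(word)
        if expiry is not None and now <= expiry:
            return self.lowered_threshold
        return self.default_threshold

    def accept(self, word, score, now):
        """True if the recognizer score exceeds the current threshold."""
        return score > self.threshold(word, now)


filt = PredictiveFilter()
print(filt.accept("here", 402, 3850))   # no prediction yet
filt.predict(["here", "that"], 3854)    # triggered by the mouse click
print(filt.accept("here", 402, 3903))   # threshold lowered by the prediction
```

Under these assumed settings, the word "here" with a score of 402 is rejected before the mouse click and accepted once the click has lowered its threshold.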

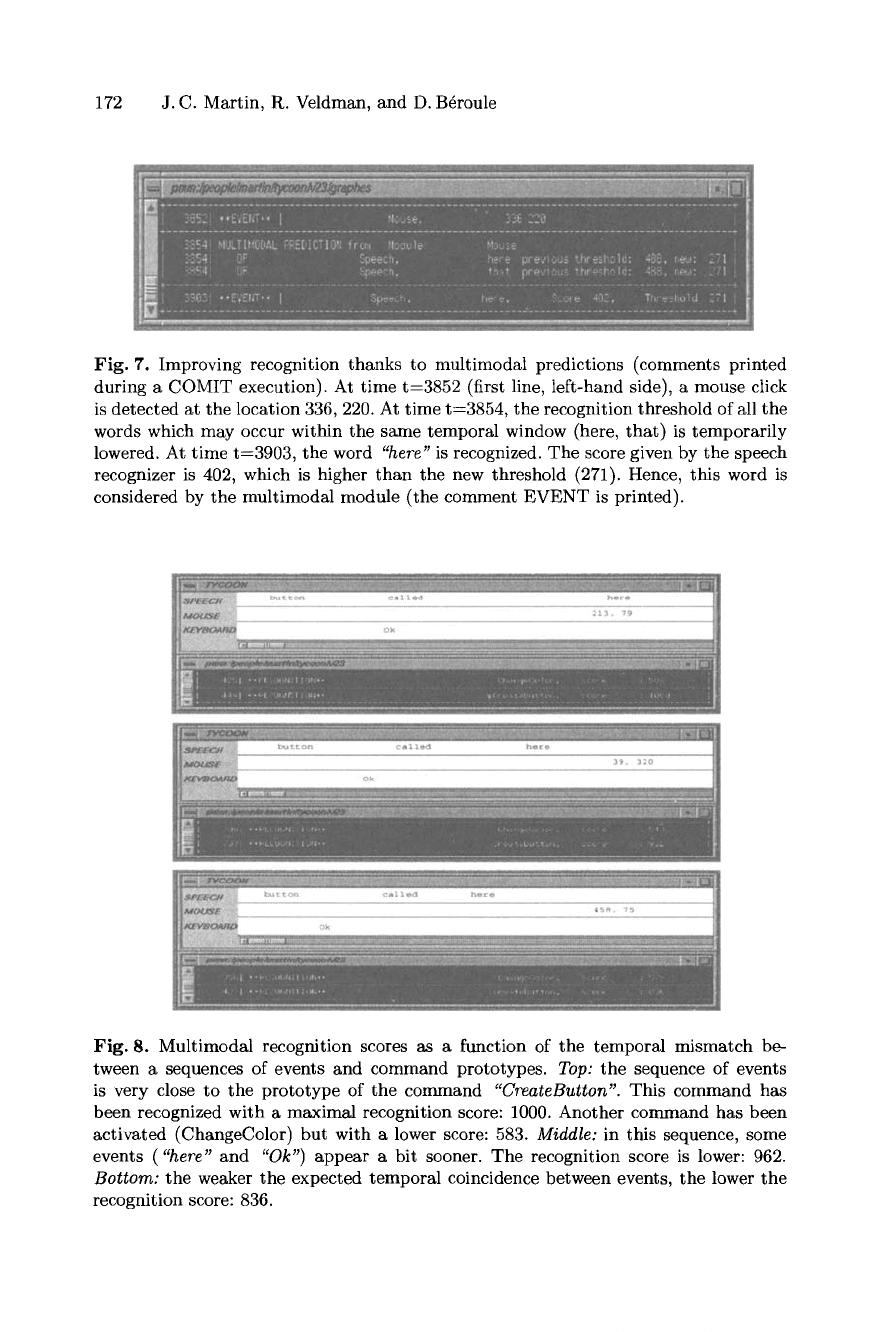

Unexpected events also occur in multimodal interfaces: one or several words

may be misrecognized (or even not uttered by the user), the user may make mis-

takes while typing on the keyboard, the user switches very slowly between modal-

ities ... Considering all the possibilities in the specifications would be very com-

plex. Instead, we have chosen to specify only one prototype per command, and

to provide a

multimodal recognition score

which is proportional to: the temporal

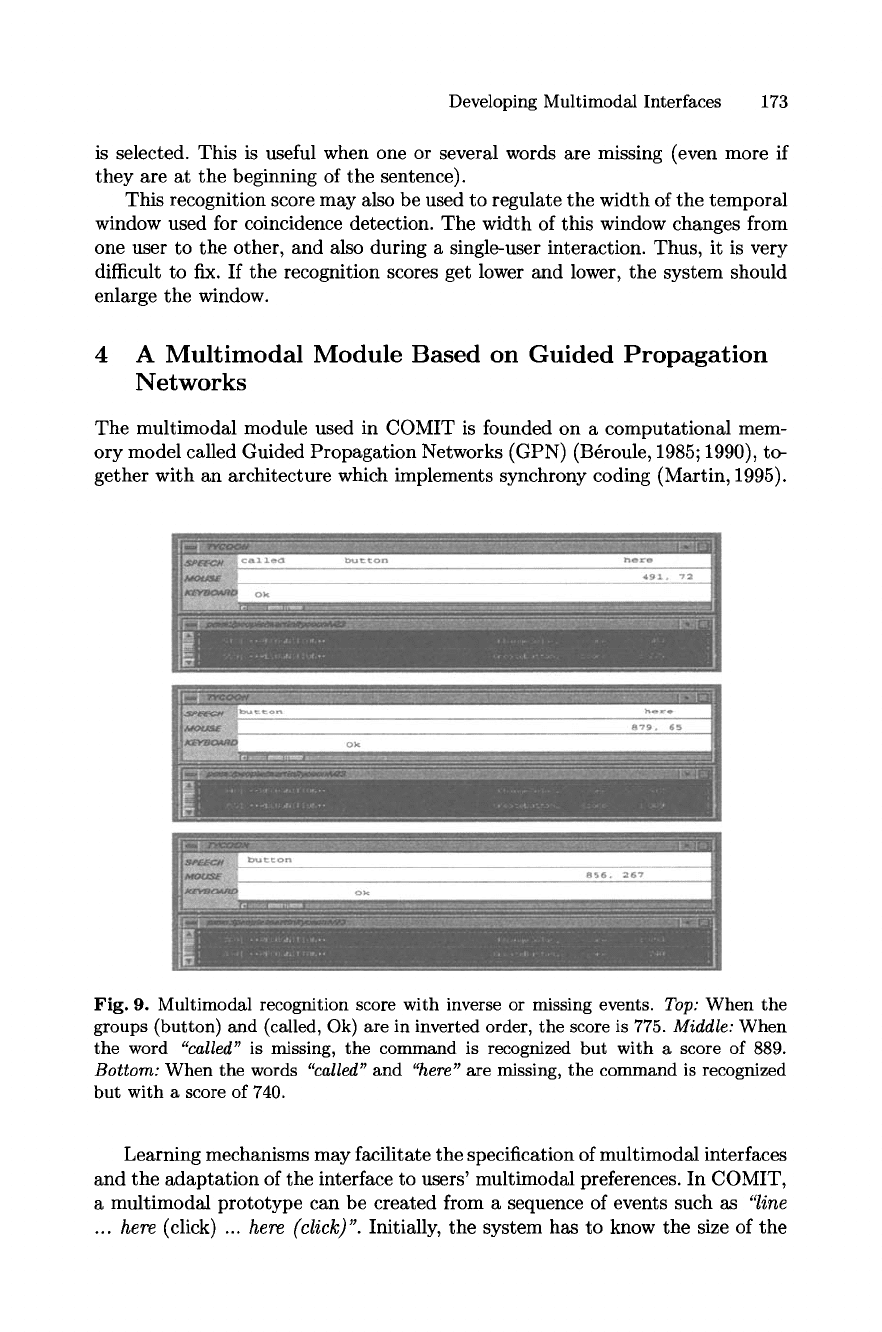

matching between the sequence of detected events and the prototype (Fig. 8),

the number of detected events which were expected in the prototype (Fig. 9), and

the recognition scores provided by the speech recognizer. When several proto-

types are activated, the prototype with the higher multimodal recognition score

172 J.C. Martin, R. Veldman, and D. B~roule

Fig. 7. Improving recognition thanks to multimodal predictions (comments printed

during a COMIT execution). At time t=3852 (first line, left-hand side), a mouse click

is detected at the location 336,220. At time t=3854, the recognition threshold of all the

words which may occur within the same temporal window (here, that) is temporarily

lowered. At time t--3903, the word

"here"

is recognized. The score given by the speech

recognizer is 402, which is higher than the new threshold (271). Hence, this word is

considered by the multimodal module (the comment EVENT is printed).

Fig. 8. Multimodal recognition scores as a function of the temporal mismatch be-

tween a sequences of events and command prototypes.

Top:

the sequence of events

is very close to the prototype of the command

"CreateButton".

This command has

been recognized with a maximal recognition score: 1000. Another command has been

activated (ChangeColor) but with a lower score: 583.

Middle:

in this sequence, some

events ("here" and

"Ok")

appear a bit sooner. The recognition score is lower: 962.

Bottom:

the weaker the expected temporal coincidence between events, the lower the

recognition score: 836.

Developing Multimodal Interfaces 173

is selected. This is useful when one or several words are missing (even more if

they are at the beginning of the sentence).
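As a rough illustration of how the three factors of the multimodal recognition score might be combined, the following Python sketch multiplies a temporal-match term, the fraction of expected events detected, and the mean recognizer confidence. The product form, the linear temporal penalty, the 300-unit window and the event offsets are all assumptions; the text only states that the score is proportional to these three quantities, and COMIT's actual computation is done by the network described in Section 4.

```python
def multimodal_score(prototype, detected, window=300.0, max_score=1000):
    """Score a sequence of detected events against one command prototype.

    prototype: dict mapping event name -> expected time offset
    detected:  dict mapping event name -> (observed offset, confidence in [0, 1])
    The multiplicative combination below is a hypothetical choice.
    """
    matched = [name for name in prototype if name in detected]
    if not matched:
        return 0
    # Fraction of the expected events that were actually detected.
    coverage = len(matched) / len(prototype)
    # Temporal match: 1.0 when on time, decreasing linearly to 0 at `window`.
    temporal = sum(
        max(0.0, 1.0 - abs(detected[n][0] - prototype[n]) / window)
        for n in matched
    ) / len(matched)
    # Mean confidence of the matched events (1.0 for keyboard or mouse events).
    confidence = sum(detected[n][1] for n in matched) / len(matched)
    return round(max_score * coverage * temporal * confidence)


def best_prototype(prototypes, detected):
    """Return the name of the best-scoring prototype and all the scores."""
    scores = {name: multimodal_score(p, detected) for name, p in prototypes.items()}
    return max(scores, key=scores.get), scores


# Command names follow Fig. 8; the event offsets are invented for the example.
prototypes = {
    "CreateButton": {"button": 0, "called": 100, "Ok": 150},
    "ChangeColor": {"color": 0, "Ok": 150},
}
detected = {"button": (0, 1.0), "called": (130, 1.0), "Ok": (150, 1.0)}
print(best_prototype(prototypes, detected))
```

With this formulation a command whose first word is missing still receives a non-zero score, so it can be selected as long as no competing prototype scores higher.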

This recognition score may also be used to regulate the width of the temporal window used for coincidence detection. The appropriate width of this window changes from one user to another, and also during a single user's interaction, so it is very difficult to fix in advance. If the recognition scores get lower and lower, the system should enlarge the window.
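One possible way to regulate the window from the recognition scores is sketched below in Python. The class name `AdaptiveWindow`, the step size, the upper bound and the rule "enlarge after three strictly decreasing scores" are assumptions chosen for illustration; the text does not specify how COMIT would perform this regulation.

```python
from collections import deque


class AdaptiveWindow:
    """Widens the coincidence window when recent scores keep decreasing."""

    def __init__(self, width=300, step=50, max_width=1000, history=3):
        self.width = width            # current window width
        self.step = step              # assumed increment per adjustment
        self.max_width = max_width    # assumed upper bound
        self.scores = deque(maxlen=history)

    def observe(self, score):
        """Record one recognition score and return the (possibly new) width."""
        self.scores.append(score)
        recent = list(self.scores)
        full = len(recent) == self.scores.maxlen
        falling = all(later < earlier for earlier, later in zip(recent, recent[1:]))
        if full and falling:
            self.width = min(self.width + self.step, self.max_width)
        return self.width


window = AdaptiveWindow()
for score in (1000, 962, 836):   # scores quoted in Fig. 8
    width = window.observe(score)
print(width)
```

A symmetric rule that narrows the window again when scores recover could be added in the same way.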

4 A Multimodal Module Based on Guided Propagation Networks

The multimodal module used in COMIT is founded on a computational memory model called Guided Propagation Networks (GPN) (Béroule, 1985; 1990), together with an architecture which implements synchrony coding (Martin, 1995).

Fig. 9. Multimodal recognition score with inverted or missing events. Top: When the groups (button) and (called, Ok) are in inverted order, the score is 775. Middle: When the word "called" is missing, the command is recognized but with a score of 889. Bottom: When the words "called" and "here" are missing, the command is recognized but with a score of 740.

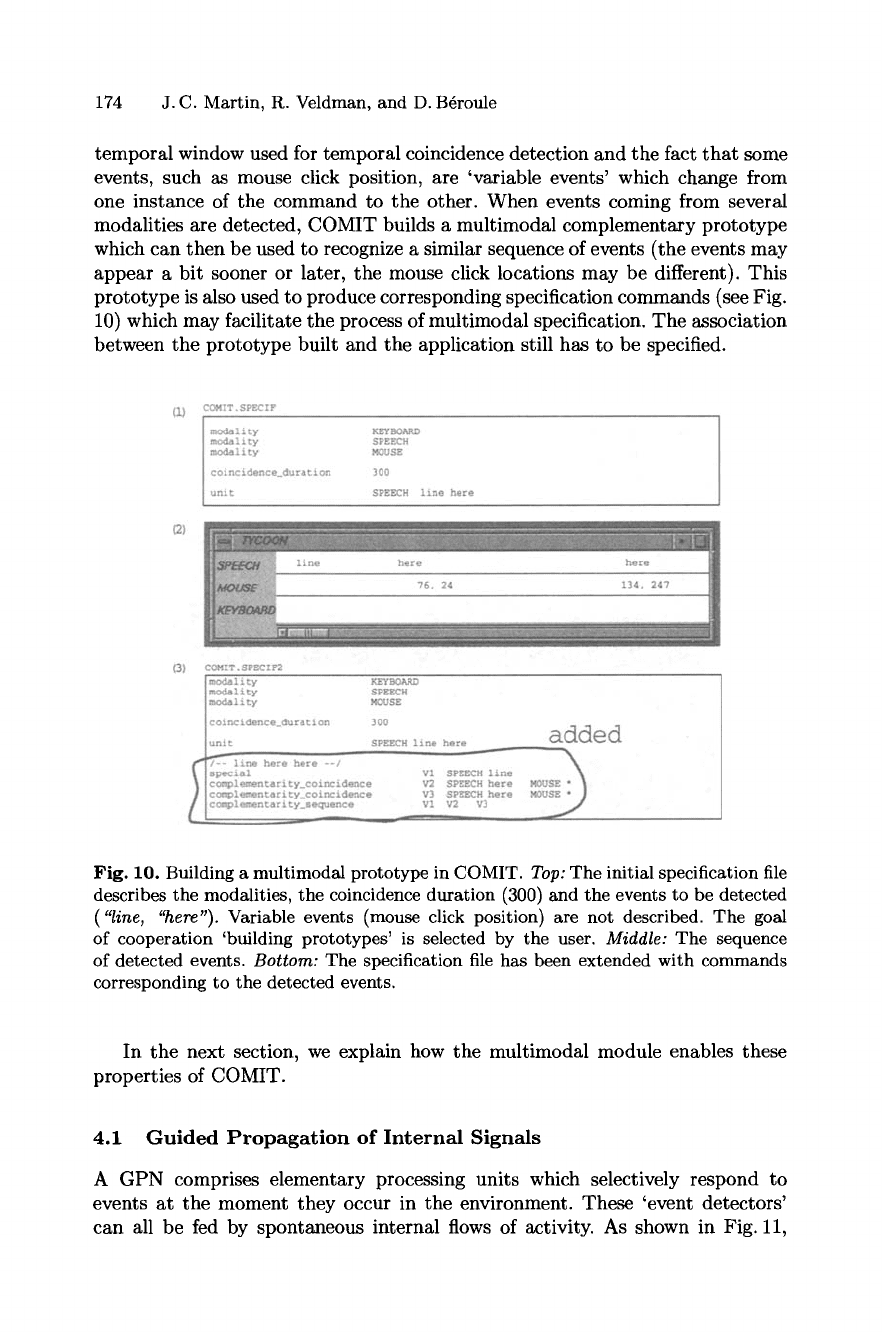

Learning mechanisms may facilitate the specification of multimodal interfaces and the adaptation of the interface to users' multimodal preferences. In COMIT, a multimodal prototype can be created from a sequence of events such as "line ... here (click) ... here (click)". Initially, the system has to know the size of the temporal window used for temporal coincidence detection and the fact that some events, such as mouse click position, are 'variable events' which change from one instance of the command to the other. When events coming from several modalities are detected, COMIT builds a multimodal complementary prototype which can then be used to recognize a similar sequence of events (the events may appear a bit sooner or later, the mouse click locations may be different). This prototype is also used to produce corresponding specification commands (see Fig. 10) which may facilitate the process of multimodal specification. The association between the prototype built and the application still has to be specified.

Fig. 10. Building a multimodal prototype in COMIT. Top: The initial specification file describes the modalities, the coincidence duration (300) and the events to be detected ("line", "here"). Variable events (mouse click position) are not described. The goal of cooperation 'building prototypes' is selected by the user. Middle: The sequence of detected events. Bottom: The specification file has been extended with commands corresponding to the detected events.

In the next section, we explain how the multimodal module enables these properties of COMIT.

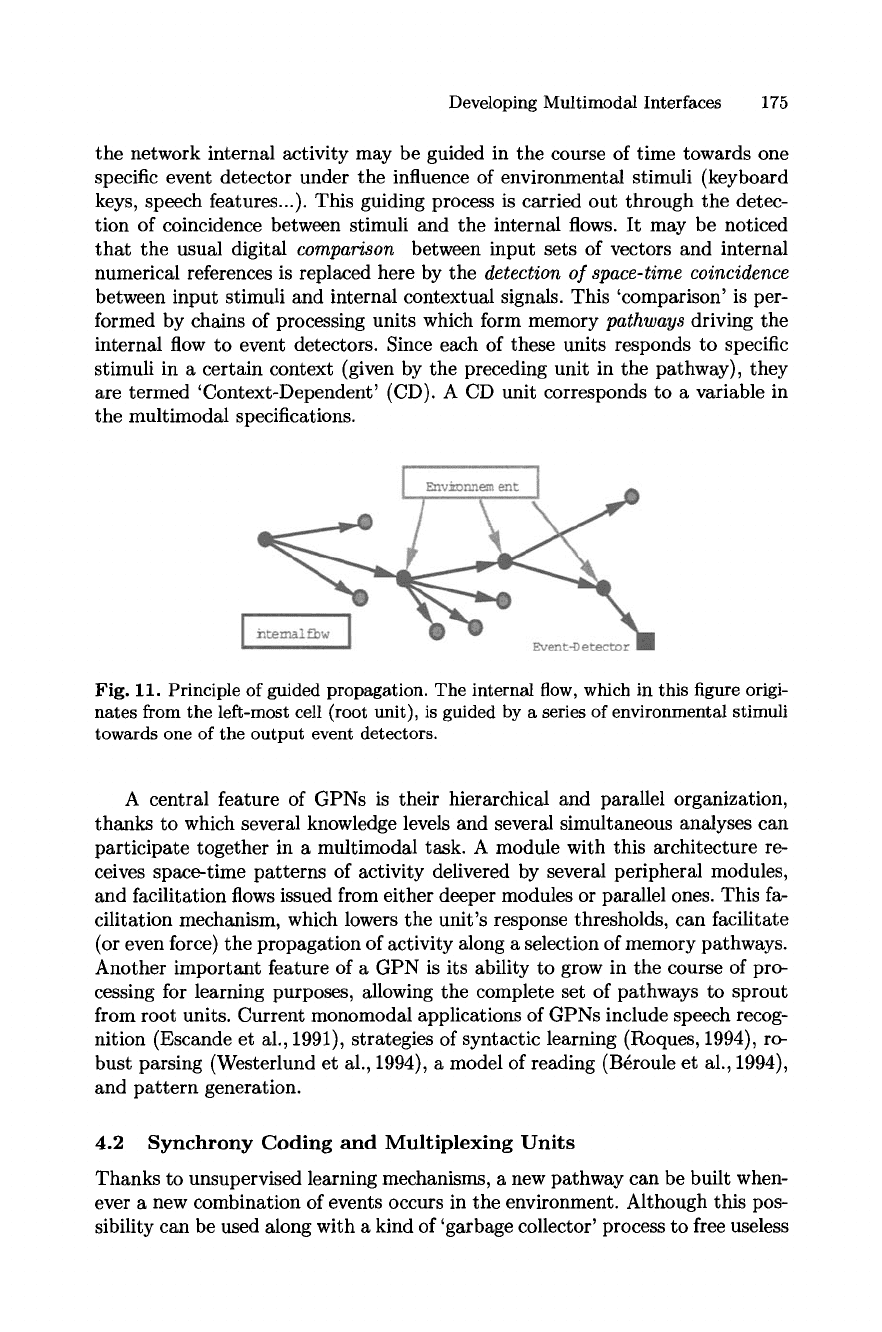

4.1 Guided Propagation of Internal Signals

A GPN comprises elementary processing units which selectively respond to events at the moment they occur in the environment. These 'event detectors' can all be fed by spontaneous internal flows of activity. As shown in Fig. 11, the network's internal activity may be guided in the course of time towards one specific event detector under the influence of environmental stimuli (keyboard keys, speech features...). This guiding process is carried out through the detection of coincidence between stimuli and the internal flows. Note that the usual digital comparison between input sets of vectors and internal numerical references is replaced here by the detection of space-time coincidence between input stimuli and internal contextual signals. This 'comparison' is performed by chains of processing units which form memory pathways driving the internal flow to event detectors. Since each of these units responds to specific stimuli in a certain context (given by the preceding unit in the pathway), they are termed 'Context-Dependent' (CD). A CD unit corresponds to a variable in the multimodal specifications.
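The guiding principle can be illustrated with a deliberately simplified Python sketch. Real GPN units carry graded activity, thresholds and time delays; here each CD unit simply fires when its expected stimulus coincides with an internal flow that has reached it recently. The class `Pathway`, the helper `run`, the 300-unit window and the event sequences are hypothetical.

```python
class Pathway:
    """A chain of context-dependent (CD) units ending in an event detector."""

    def __init__(self, name, expected, window=300):
        self.name = name
        self.expected = list(expected)  # stimulus each CD unit responds to
        self.window = window            # assumed coincidence window
        self.position = 0               # CD unit currently reached by the flow
        self.last_time = None           # time at which the previous unit fired

    def stimulate(self, stimulus, time):
        """Feed one stimulus; return True when the end detector is reached."""
        if self.last_time is not None and time - self.last_time > self.window:
            # The internal flow has faded: it starts again from the root unit.
            self.position, self.last_time = 0, None
        if stimulus == self.expected[self.position]:
            # Coincidence between the stimulus and the flow: propagate.
            self.position += 1
            self.last_time = time
            if self.position == len(self.expected):
                self.position, self.last_time = 0, None
                return True
        return False


def run(pathways, events):
    """Feed every stimulus to all pathways in parallel."""
    recognized = []
    for time, stimulus in events:
        for pathway in pathways:
            if pathway.stimulate(stimulus, time):
                recognized.append((time, pathway.name))
    return recognized


pathways = [
    Pathway("DrawRectangle", ["rectangle", "click", "click"]),
    Pathway("AskDate", ["date"]),
]
events = [(0, "rectangle"), (100, "date"), (200, "click"), (350, "click")]
print(run(pathways, events))
```

Because every stimulus is offered to all pathways at once, a stimulus that is irrelevant to one command leaves that command's flow untouched while it advances another.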

Fig. 11. Principle of guided propagation. The internal flow, which in this figure originates from the left-most cell (root unit), is guided by a series of environmental stimuli towards one of the output event detectors.

A central feature of GPNs is their hierarchical and parallel organization, thanks to which several knowledge levels and several simultaneous analyses can participate together in a multimodal task. A module with this architecture receives space-time patterns of activity delivered by several peripheral modules, and facilitation flows issued from either deeper modules or parallel ones. This facilitation mechanism, which lowers the units' response thresholds, can facilitate (or even force) the propagation of activity along a selection of memory pathways. Another important feature of a GPN is its ability to grow in the course of processing for learning purposes, allowing the complete set of pathways to sprout from root units. Current monomodal applications of GPNs include speech recognition (Escande et al., 1991), strategies of syntactic learning (Roques, 1994), robust parsing (Westerlund et al., 1994), a model of reading (Béroule et al., 1994), and pattern generation.

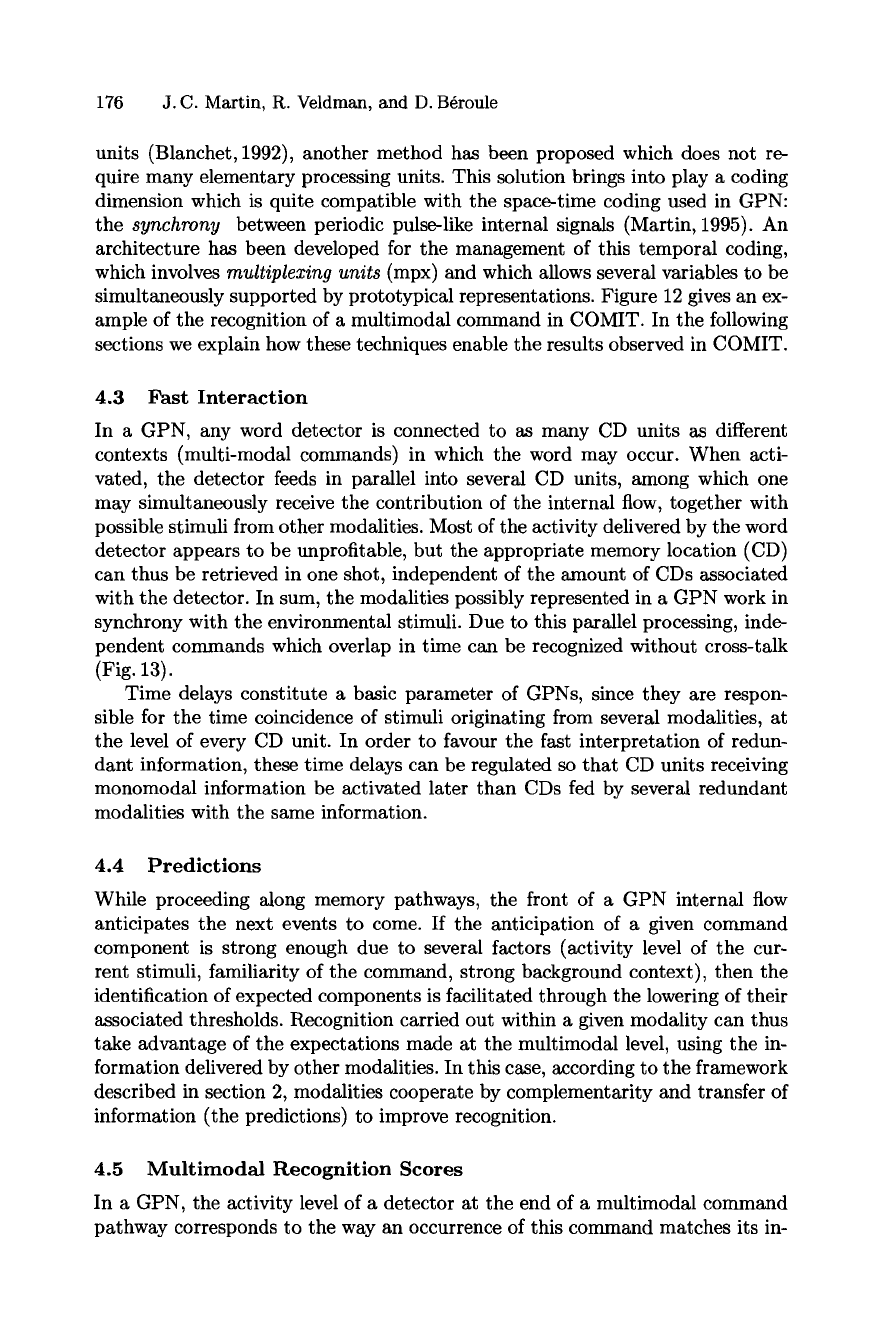

4.2 Synchrony Coding and Multiplexing Units

Thanks to unsupervised learning mechanisms, a new pathway can be built whenever a new combination of events occurs in the environment. Although this possibility can be used along with a kind of 'garbage collector' process to free useless units (Blanchet, 1992), another method has been proposed which does not require many elementary processing units. This solution brings into play a coding dimension which is quite compatible with the space-time coding used in GPN: the synchrony between periodic pulse-like internal signals (Martin, 1995). An architecture has been developed for the management of this temporal coding, which involves multiplexing units (mpx) and which allows several variables to be simultaneously supported by prototypical representations. Figure 12 gives an example of the recognition of a multimodal command in COMIT. In the following sections we explain how these techniques enable the results observed in COMIT.

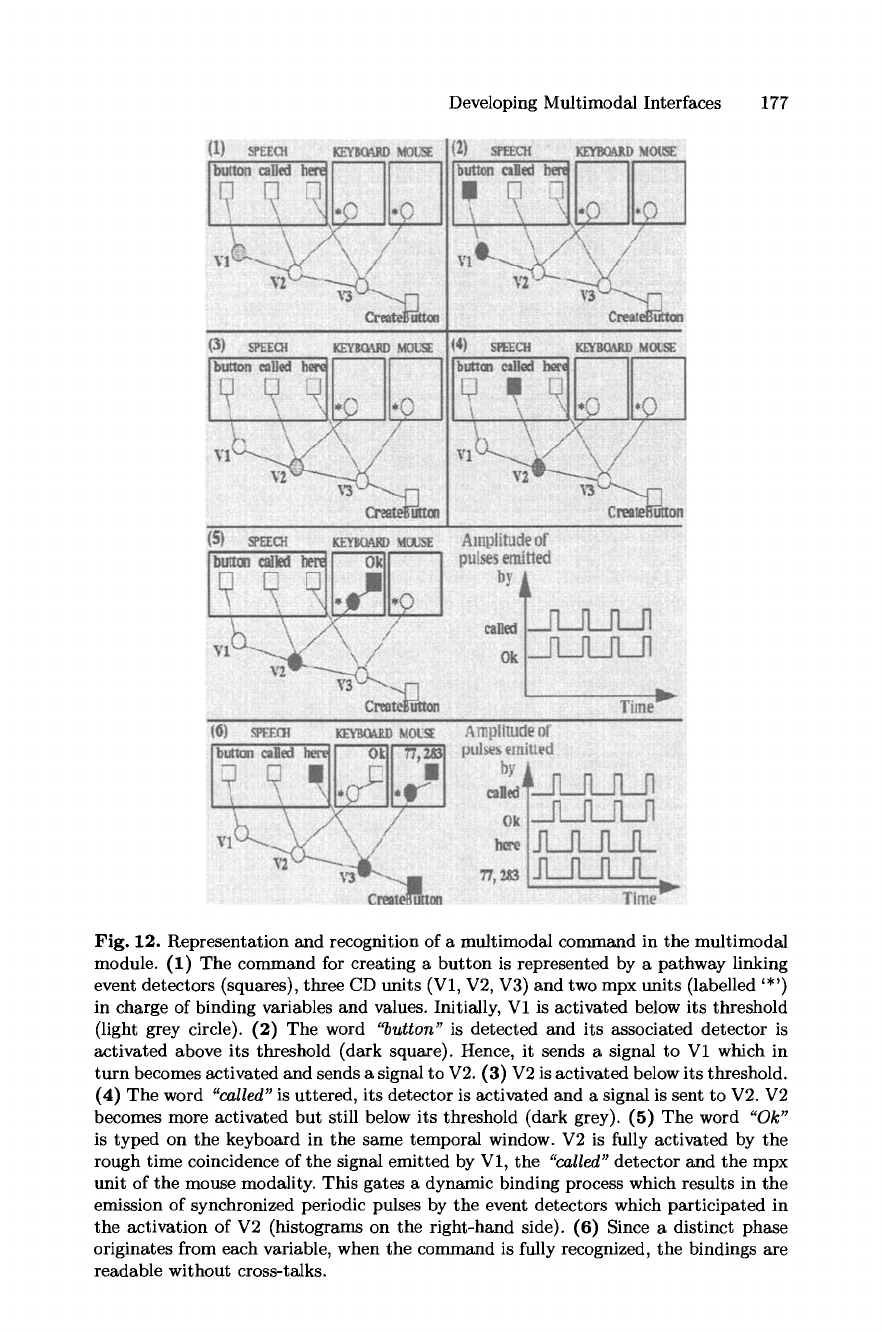

4.3 Fast Interaction

In a GPN, any word detector is connected to as many CD units as there are different contexts (multimodal commands) in which the word may occur. When activated, the detector feeds in parallel into several CD units, among which one may simultaneously receive the contribution of the internal flow, together with possible stimuli from other modalities. Most of the activity delivered by the word detector appears to be unprofitable, but the appropriate memory location (CD) can thus be retrieved in one shot, regardless of the number of CD units associated with the detector. In sum, the modalities possibly represented in a GPN work in synchrony with the environmental stimuli. Due to this parallel processing, independent commands which overlap in time can be recognized without cross-talk (Fig. 13).

Time delays constitute a basic parameter of GPNs, since they are responsible for the time coincidence of stimuli originating from several modalities, at the level of every CD unit. In order to favour the fast interpretation of redundant information, these time delays can be regulated so that CD units receiving monomodal information are activated later than CD units fed by several redundant modalities with the same information.

4.4 Predictions

While proceeding along memory pathways, the front of a GPN internal flow anticipates the next events to come. If the anticipation of a given command component is strong enough due to several factors (activity level of the current stimuli, familiarity of the command, strong background context), then the identification of expected components is facilitated through the lowering of their associated thresholds. Recognition carried out within a given modality can thus take advantage of the expectations made at the multimodal level, using the information delivered by other modalities. In this case, according to the framework described in section 2, modalities cooperate by complementarity and transfer of information (the predictions) to improve recognition.

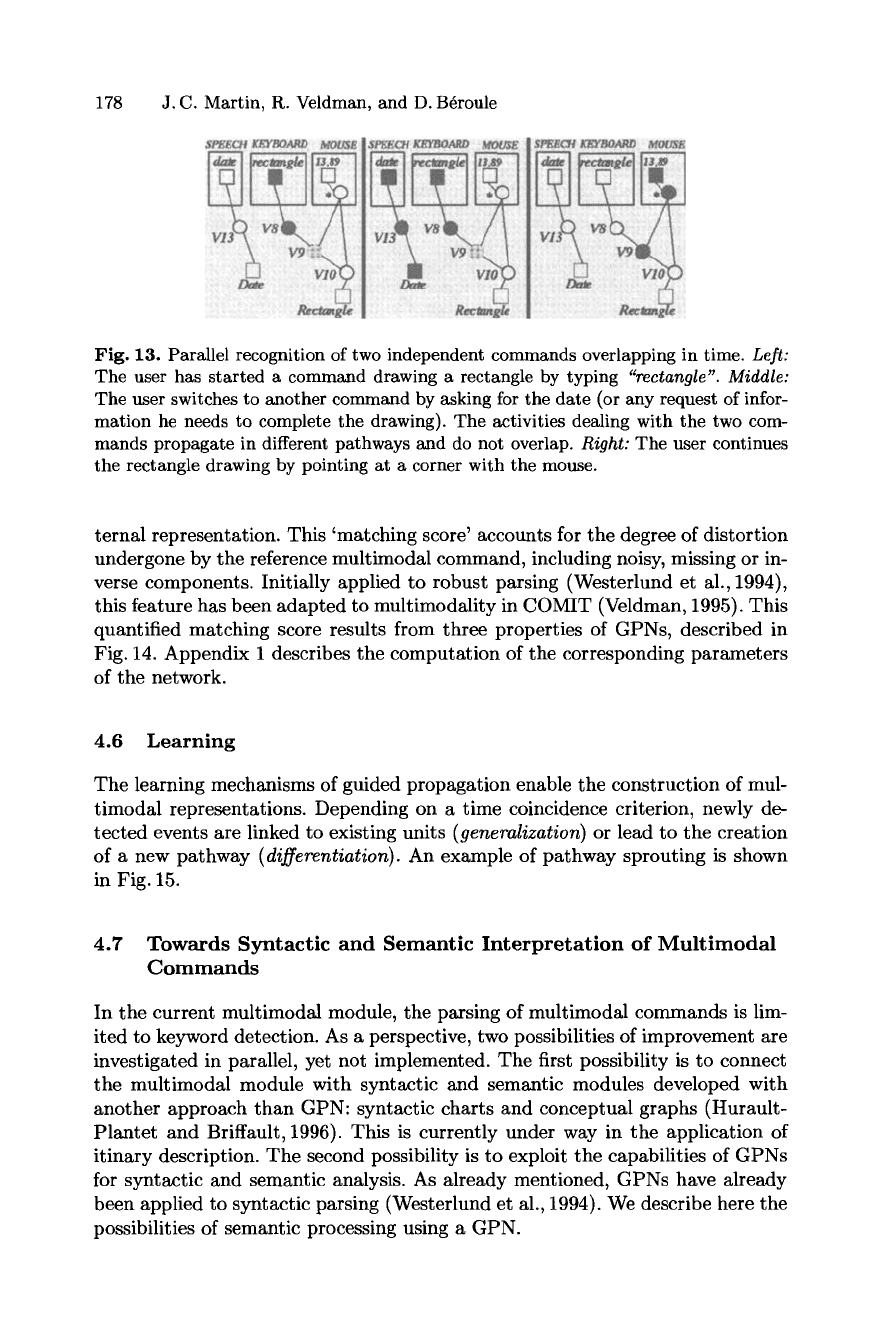

4.5 Multimodal Recognition Scores

In a GPN, the activity level of a detector at the end of a multimodal command pathway corresponds to the way an occurrence of this command matches its internal representation. This 'matching score' accounts for the degree of distortion undergone by the reference multimodal command, including noisy, missing or inverted components. Initially applied to robust parsing (Westerlund et al., 1994), this feature has been adapted to multimodality in COMIT (Veldman, 1995). This quantified matching score results from three properties of GPNs, described in Fig. 14. Appendix 1 describes the computation of the corresponding parameters of the network.

Fig. 12. Representation and recognition of a multimodal command in the multimodal module. (1) The command for creating a button is represented by a pathway linking event detectors (squares), three CD units (V1, V2, V3) and two mpx units (labelled '*') in charge of binding variables and values. Initially, V1 is activated below its threshold (light grey circle). (2) The word "button" is detected and its associated detector is activated above its threshold (dark square). Hence, it sends a signal to V1 which in turn becomes activated and sends a signal to V2. (3) V2 is activated below its threshold. (4) The word "called" is uttered, its detector is activated and a signal is sent to V2. V2 becomes more activated but still below its threshold (dark grey). (5) The word "Ok" is typed on the keyboard in the same temporal window. V2 is fully activated by the rough time coincidence of the signal emitted by V1, the "called" detector and the mpx unit of the mouse modality. This gates a dynamic binding process which results in the emission of synchronized periodic pulses by the event detectors which participated in the activation of V2 (histograms on the right-hand side). (6) Since a distinct phase originates from each variable, when the command is fully recognized, the bindings are readable without cross-talk.

Fig. 13. Parallel recognition of two independent commands overlapping in time. Left: The user has started a command drawing a rectangle by typing "rectangle". Middle: The user switches to another command by asking for the date (or making any other request for information needed to complete the drawing). The activities dealing with the two commands propagate in different pathways and do not overlap. Right: The user continues the rectangle drawing by pointing at a corner with the mouse.

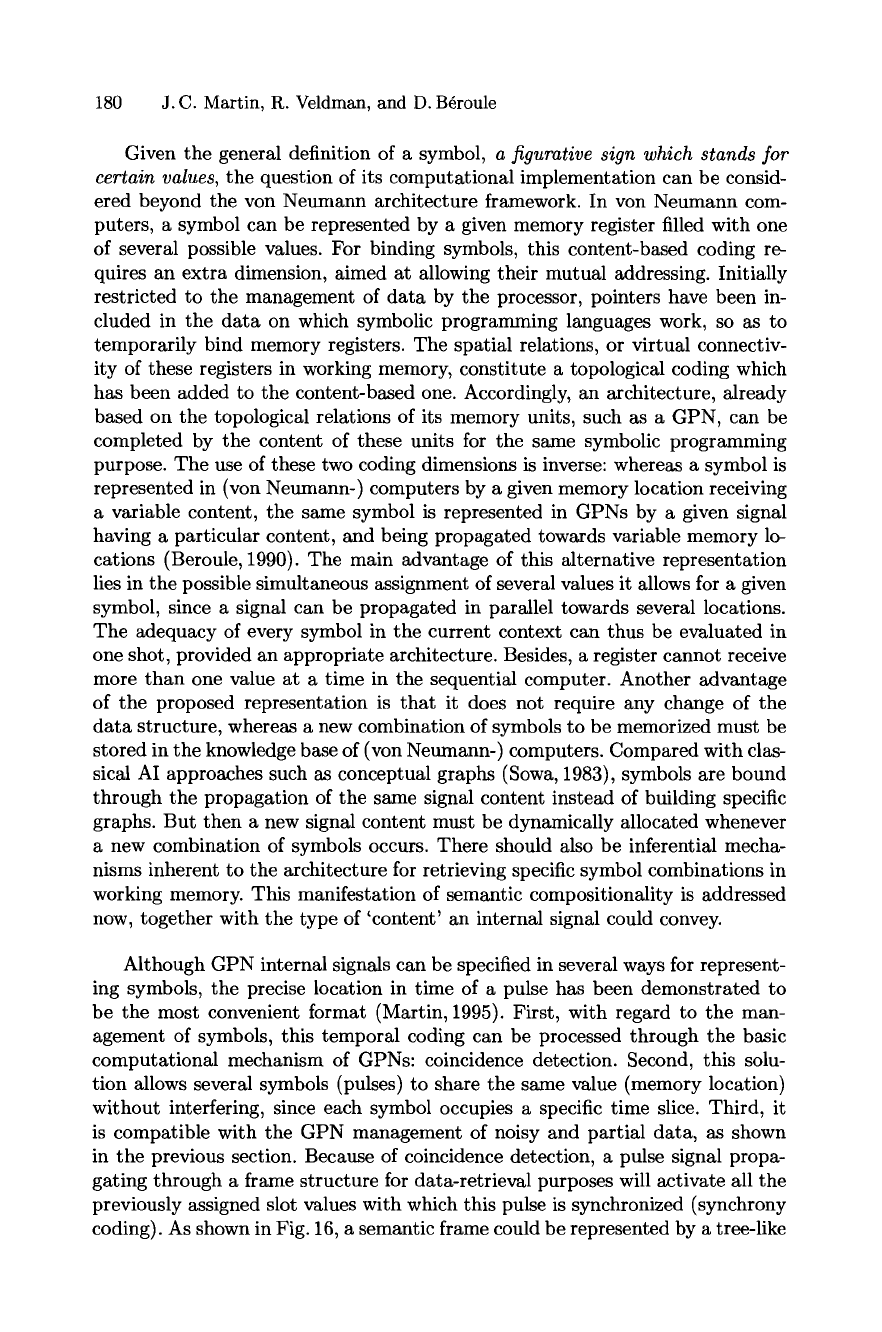

4.6 Learning

The learning mechanisms of guided propagation enable the construction of multimodal representations. Depending on a time coincidence criterion, newly detected events are linked to existing units (generalization) or lead to the creation of a new pathway (differentiation). An example of pathway sprouting is shown in Fig. 15.
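The time coincidence criterion can be sketched in Python as follows. This is a simplified reading of the mechanism, not the GPN learning algorithm itself: the function `build_prototype`, the tuple layout of the events, and the use of `None` as a placeholder for variable events are assumptions. The coincidence duration of 300 is the value given in the specification file of Fig. 10; the event times and click positions are invented.

```python
def build_prototype(events, window=300, variable_modalities=("mouse",)):
    """Group a time-ordered sequence of events into prototype units.

    events: list of (time, modality, value) tuples sorted by time.
    Returns a list of units; each unit is a list of (modality, value) pairs.
    """
    units = []
    unit_start = None
    for time, modality, value in events:
        # Variable events (e.g. click positions) change between instances
        # of a command, so only a placeholder is stored in the prototype.
        stored = None if modality in variable_modalities else value
        if unit_start is not None and time - unit_start <= window:
            # Same temporal window: link the event to the existing unit.
            units[-1].append((modality, stored))
        else:
            # Another temporal window: create a new unit in the pathway.
            units.append([(modality, stored)])
            unit_start = time
    return units


events = [
    (0, "speech", "line"),
    (500, "speech", "here"),
    (600, "mouse", (336, 220)),
    (1200, "speech", "here"),
    (1250, "mouse", (400, 300)),
]
for unit in build_prototype(events):
    print(unit)
```

Each mouse click ends up attached to the unit created for the preceding word "here", which mirrors the sequence "line ... here (click) ... here (click)" used as the learning example.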

4.7 Towards Syntactic and Semantic Interpretation of Multimodal Commands

In the current multimodal module, the parsing of multimodal commands is limited to keyword detection. Looking ahead, two possible improvements are being investigated in parallel but are not yet implemented. The first possibility is to connect the multimodal module with syntactic and semantic modules developed with an approach other than GPN: syntactic charts and conceptual graphs (Hurault-Plantet and Briffault, 1996). This is currently under way in the itinerary description application. The second possibility is to exploit the capabilities of GPNs for syntactic and semantic analysis. As mentioned above, GPNs have been applied to syntactic parsing (Westerlund et al., 1994). We describe here the possibilities of semantic processing using a GPN.


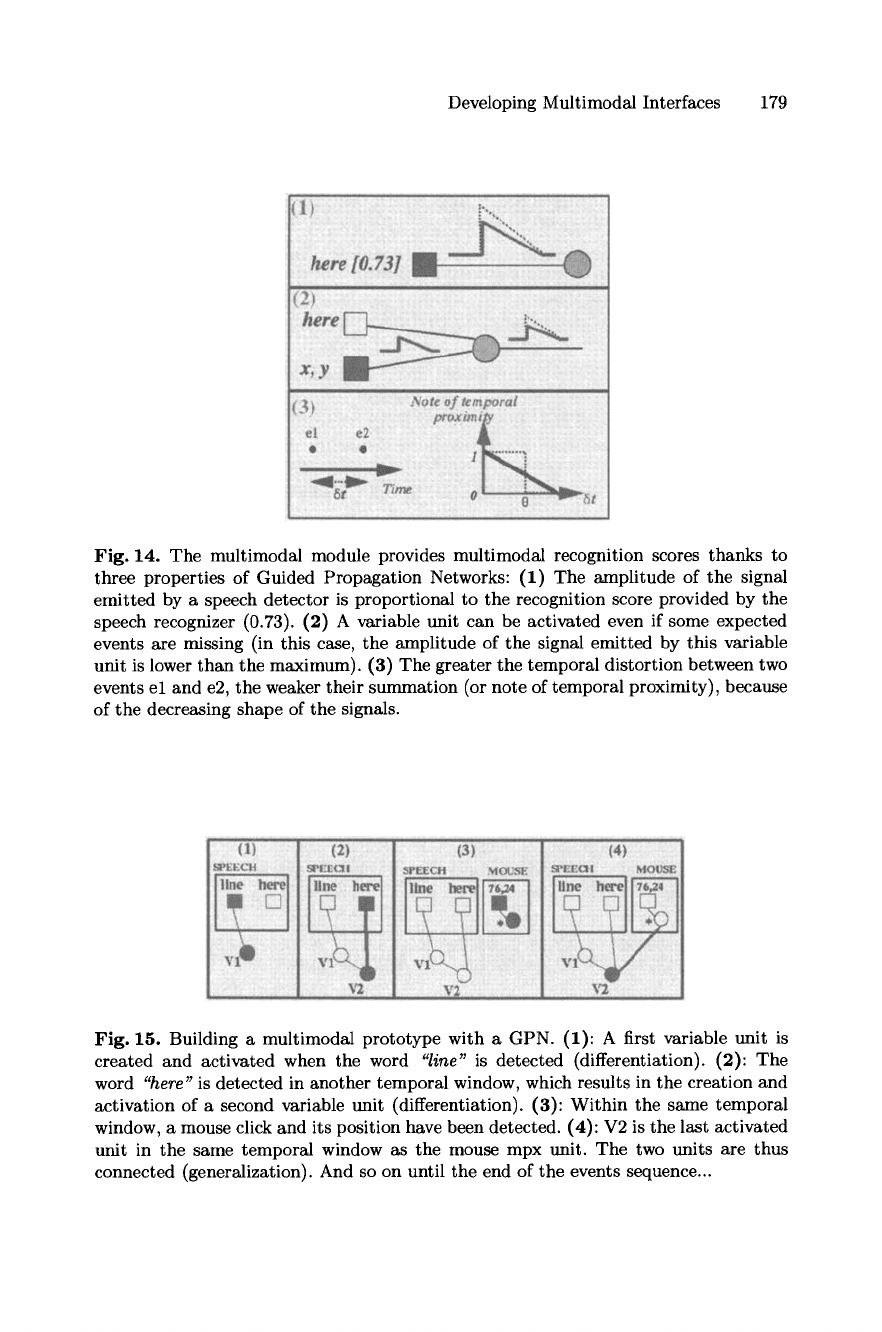

Fig. 14. The multimodal module provides multimodal recognition scores thanks to three properties of Guided Propagation Networks: (1) The amplitude of the signal emitted by a speech detector is proportional to the recognition score provided by the speech recognizer (0.73). (2) A variable unit can be activated even if some expected events are missing (in this case, the amplitude of the signal emitted by this variable unit is lower than the maximum). (3) The greater the temporal distortion between two events e1 and e2, the weaker their summation (or note of temporal proximity), because of the decreasing shape of the signals.

Fig. 15. Building a multimodal prototype with a GPN. (1): A first variable unit is created and activated when the word "line" is detected (differentiation). (2): The word "here" is detected in another temporal window, which results in the creation and activation of a second variable unit (differentiation). (3): Within the same temporal window, a mouse click and its position have been detected. (4): V2 is the last activated unit in the same temporal window as the mouse mpx unit. The two units are thus connected (generalization). And so on until the end of the event sequence...


Given the general definition of a symbol, a figurative sign which stands for certain values, the question of its computational implementation can be considered beyond the von Neumann architecture framework. In von Neumann computers, a symbol can be represented by a given memory register filled with one of several possible values. For binding symbols, this content-based coding requires an extra dimension, aimed at allowing their mutual addressing. Initially restricted to the management of data by the processor, pointers have been included in the data on which symbolic programming languages work, so as to temporarily bind memory registers. The spatial relations, or virtual connectivity, of these registers in working memory constitute a topological coding which has been added to the content-based one. Accordingly, an architecture already based on the topological relations of its memory units, such as a GPN, can be completed by the content of these units for the same symbolic programming purpose. The use of these two coding dimensions is inverted: whereas a symbol is represented in von Neumann computers by a given memory location receiving a variable content, the same symbol is represented in GPNs by a given signal having a particular content and being propagated towards variable memory locations (Béroule, 1990). The main advantage of this alternative representation lies in the possible simultaneous assignment of several values to a given symbol, since a signal can be propagated in parallel towards several locations. The adequacy of every symbol in the current context can thus be evaluated in one shot, provided an appropriate architecture is available. By contrast, a register cannot receive more than one value at a time in a sequential computer. Another advantage of the proposed representation is that it does not require any change of the data structure, whereas a new combination of symbols to be memorized must be stored in the knowledge base of von Neumann computers. Compared with classical AI approaches such as conceptual graphs (Sowa, 1983), symbols are bound through the propagation of the same signal content instead of building specific graphs. But then a new signal content must be dynamically allocated whenever a new combination of symbols occurs. There should also be inferential mechanisms inherent to the architecture for retrieving specific symbol combinations in working memory. This manifestation of semantic compositionality is addressed now, together with the type of 'content' an internal signal could convey.

Although GPN internal signals can be specified in several ways for representing symbols, the precise location in time of a pulse has been demonstrated to be the most convenient format (Martin, 1995). First, with regard to the management of symbols, this temporal coding can be processed through the basic computational mechanism of GPNs: coincidence detection. Second, this solution allows several symbols (pulses) to share the same value (memory location) without interfering, since each symbol occupies a specific time slice. Third, it is compatible with the GPN management of noisy and partial data, as shown in the previous section. Because of coincidence detection, a pulse signal propagating through a frame structure for data-retrieval purposes will activate all the previously assigned slot values with which this pulse is synchronized (synchrony coding). As shown in Fig. 16, a semantic frame could be represented by a tree-like