Jaeger G. Quantum Information: An Overview



$$\sum_{j=1}^{n} p_j \prod_{i=1}^{2} \mathrm{tr}\left(\rho_j^{(i)} A^{(i)}\right) . \tag{3.33}$$

Any system with a density matrix of the form shown in Eq. 3.32 (with j ≥ 2) is said to be classically correlated, even if formed by mixing entangled states [450]. The greatest two-particle interference visibility that can be obtained with classically correlated states in the arrangement of Fig. 3.2 is 0.5 [353]. Nonclassically correlated states (i.e., entangled states) can give rise to higher values of visibility of two-particle interference; the CHSH Bell-type inequality of Eq. 3.10 can be violated once the visibility surpasses $1/\sqrt{2} \approx 0.71$.
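The visibility threshold can be checked numerically. The following is a minimal sketch rather than anything taken from the text: it assumes a correlation function of the form E(φ_A, φ_B) = V cos(φ_A + φ_B) with visibility V, and the standard four-setting CHSH combination with classical bound 2 (Eq. 3.10 itself is not reproduced in this section). The function names and phase settings are illustrative choices.

```python
import math

def correlation(phi_a, phi_b, visibility):
    """Assumed model: E = V * cos(phi_A + phi_B)."""
    return visibility * math.cos(phi_a + phi_b)

def chsh_value(visibility):
    """CHSH combination S for one standard choice of four phase settings."""
    a, a2 = 0.0, math.pi / 2          # two settings in wing A
    b, b2 = -math.pi / 4, math.pi / 4  # two settings in wing B
    return abs(
        correlation(a, b, visibility)
        + correlation(a, b2, visibility)
        + correlation(a2, b, visibility)
        - correlation(a2, b2, visibility)
    )

threshold = 1 / math.sqrt(2)
for v in (0.5, threshold, 0.9, 1.0):
    s = chsh_value(v)
    # A small tolerance keeps the exact-threshold case from counting as a violation.
    print(f"V = {v:.3f}  S = {s:.3f}  exceeds 2: {s > 2 + 1e-9}")
```

Under these assumptions S = 2√2 V, so the bound S ≤ 2 is exceeded only when V is greater than 1/√2, while the classical limit V = 0.5 gives S well below 2.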

3.7 The Franson interferometer

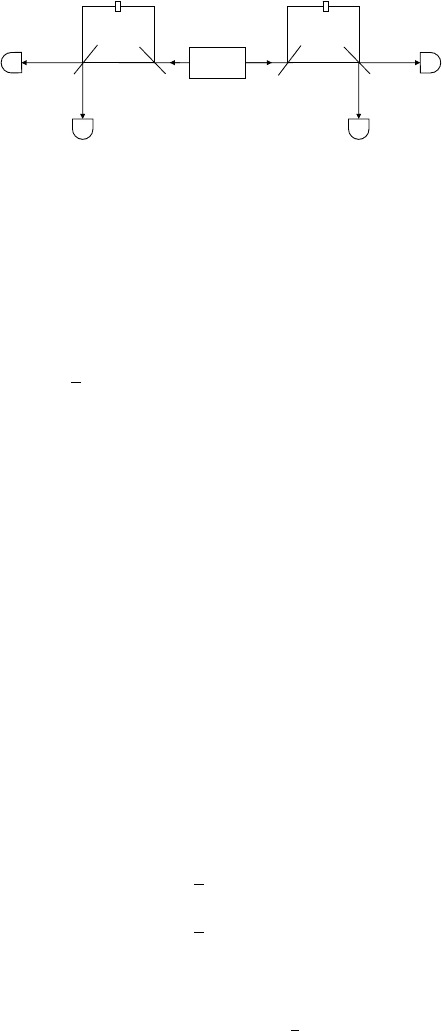

Similarly to the above two-spatial-qubit interferometer, the Franson interferometer is a distributed interferometer composed of two single-qubit Mach–Zehnder interferometers. In the Franson interferometer, the interference of particle pairs with themselves is possible because there are alternative paths of different length in both sub-interferometers, giving rise to a “temporal” (time-bin, or phase) qubit in each due to the corresponding pair of alternative times of arrival at each final beam-splitter; see Fig. 3.3. This interferometer corresponds to a (constrained) temporal-qubit version of the two-spatial-qubit interferometer considered above and shown in Fig. 3.2, with only the relative phase between two-qubit alternative processes free to be altered (rather than the general pair of local unitary transformations of Eq. 3.23) in order to produce an interferogram in coincidence counts; it is designed to realize the limiting case of maximal two-particle interference visibility. The path-length difference, $\Delta l = d_{\text{long}} - d_{\text{short}}$, in each of the two single-qubit interferometers, arranged to be the same for both, corresponds to the transit-time difference between paths $\Delta T = \Delta l/c$ in each and is arranged to be greater than the single-particle coherence length, $\delta l$, corresponding to a single-particle coherence time $\tau_1 = \delta l/c$, precluding single-particle self-interference in either wing. In practice, the particles used in this interferometer are now typically photons produced by spontaneous parametric down-conversion.²⁰

Thus, conditions are imposed so that there is no fixed phase-relation between amplitudes for single-photon passage along short and long paths at the final beam-splitter of either interferometer as described above, ensuring that the entanglement of the two qubits is as strong as possible, in accordance with the interferometric complementarity relations described by Eqs. 3.28–29.²¹ The transit-time difference $\Delta T$ is also kept shorter than the correlation time $\tau_2$ of the two photons, still allowing two-photon interference to be

²⁰ This process is described in detail in Sect. 6.16. When James Franson introduced this interferometer, he envisioned a source based on an atomic cascade [174].

²¹ Explicit entanglement measures beyond the visibility of entanglement are discussed in Chapter 6, below.

62 3 Quantum nonlocality and interferometry

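[Figure 3.3 diagram labels: source; short (S) and long (L) path segments in each wing; phase shifters $\phi_A$, $\phi_B$; detectors $D^A_1$, $D^A_2$, $D^B_1$, $D^B_2$.]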

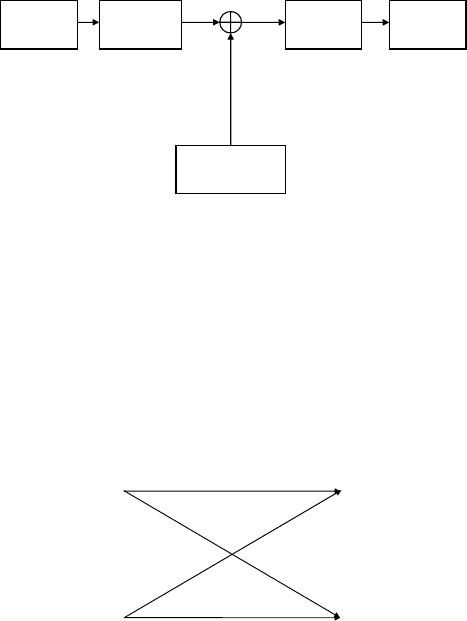

Fig. 3.3. The Franson interferometer for two phase-time qubits. The unique portions of the short and long paths of each wing are indicated by “S” and “L,” respectively. The $\phi_A$, $\phi_B$ indicate phase shifts in labs A and B, respectively [174].

observed. The effective quantum state in the interferometer is

$$|\Psi\rangle = \frac{1}{2}\Big(|\text{short}\rangle|\text{short}\rangle - e^{i(\phi_A+\phi_B)}|\text{long}\rangle|\text{long}\rangle\Big) , \tag{3.34}$$

where “short” and “long” are shorthand notation for the alternative (multiple segment) photon paths available and $\phi_A$ and $\phi_B$ are the variable phase shifts of the two interferometers, because this temporal constraint ensures that there are no other contributions to the state, such as $e^{i\phi_A}|\text{short}\rangle|\text{long}\rangle$ and $e^{i\phi_B}|\text{long}\rangle|\text{short}\rangle$, that would otherwise be present. $\Delta T$ is kept significantly longer than the photon detector resolution $dt$ (which is generally on the order of 1 ns duration) allowing for the observation of the possibilities of passage of pairs of photons in pairs of paths $|\text{short}\rangle|\text{short}\rangle$ and $|\text{long}\rangle|\text{long}\rangle$ above, and their interference. Overall, then, one requires that

$$\tau_2 > \Delta T > \tau_1 , \tag{3.35}$$

with $\Delta T$ arranged to be, for example, of an order greater than that of 1 ns. This results in the selection of the large, central interferometric feature of the three features that can appear in the temporal coincidence interferogram in an experimental configuration such as that shown in Fig. 3.3 [174].

Joint detection in distant wings A and B of the interferometer of Fig. 3.3, at pairs of detectors $D^X_i$ (where X = A, B), thus occurs with the probabilities

$$P(D^A_i D^B_j) = \frac{1}{4}\left[1 + \cos(\phi_A + \phi_B)\right] , \tag{3.36}$$

$$P(D^A_k D^B_k) = \frac{1}{4}\left[1 - \cos(\phi_A + \phi_B)\right] , \tag{3.37}$$

where $i, j, k = 1, 2$ and $i \neq j$, similarly to those appearing in the two-spatial-qubit interferometer discussed in the previous section. The probabilities $P(D^X_i)$ of single-photon counts are just $\frac{1}{2}$, being marginal probabilities obtained by adding the joint probabilities (3.36) and (3.37) in which the corresponding detector appears; the positive and negative cosine modulation terms simply cancel each other out. That is, there is no interference of single-photon possibilities, the counts of which occur at random, regardless of how the individual phases $\phi_A$ and $\phi_B$ (or the joint phase $\phi_A + \phi_B$, for that matter) are varied.
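The cancellation of the cosine terms in the marginals can be illustrated with a short numerical sketch. It simply tabulates Eqs. 3.36 and 3.37 for a given pair of phases; the function names and the dictionary layout are illustrative choices, not part of the text.

```python
import math

def joint_probabilities(phi_a, phi_b):
    """Return {(i, j): P(D^A_i, D^B_j)} following Eqs. 3.36 and 3.37."""
    c = math.cos(phi_a + phi_b)
    probs = {}
    for i in (1, 2):
        for j in (1, 2):
            # Unlike detector indices take the '+' sign (3.36), like indices the '-' sign (3.37).
            sign = 1.0 if i != j else -1.0
            probs[(i, j)] = 0.25 * (1.0 + sign * c)
    return probs

def marginal(probs, wing, index):
    """Single-photon count probability at detector `index` in wing 'A' or 'B'."""
    position = 0 if wing == "A" else 1
    return sum(p for key, p in probs.items() if key[position] == index)

for phi_a, phi_b in [(0.0, 0.0), (0.3, 1.1), (math.pi / 2, math.pi / 2)]:
    probs = joint_probabilities(phi_a, phi_b)
    singles = [marginal(probs, w, k) for w in ("A", "B") for k in (1, 2)]
    print(f"phi_A + phi_B = {phi_a + phi_b:.3f}",
          "joint:", {k: round(v, 3) for k, v in probs.items()},
          "singles:", [round(s, 3) for s in singles])
```

The joint probabilities vary with the phase sum, while every single-detector marginal stays at 1/2.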

The Franson interferometer has been used for entangled-photon QKD,

where component interferometers lie one each in the separated laboratories of

Alice or Bob; for example, see [188, 422].

3.8 Two-qubit quantum gates

The transformations carried out by the general single-qubit transducers (local unitary gates) $T_i$ of Section 3.6 have been used in the various above-described situations to realize independent pairs of local, single-qubit operations described by Eq. 1.31, each acting on one of two qubits. Such local unitary transformations allow the exhibition of the effect of existing entanglement in quantum interferometers. More general two-qubit operations also exist, which play an essential role in quantum information processing under the quantum circuit model and can in some cases create entangled states rather than merely aid in exhibiting existing entanglement.

Let us now consider the creation of entanglement through the use of the

very important two-qubit example of the C-NOT (or XOR) quantum gate.

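[Circuit diagram: inputs $|b_1\rangle$ (top) and $|b_2\rangle$ (bottom); outputs $|b_1\rangle$ (top) and $|b_1 \oplus b_2\rangle$ (bottom).]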

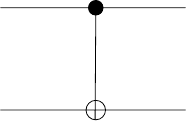

Fig. 3.4. Action of the C-NOT gate. The computational-basis states are labeled by binary values $b_i$ ($i = 1, 2$); in the ket at lower-right, ⊕ signifies addition mod 2.

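The quantum C-NOT gate corresponds to a unitary operation of the form $|0\rangle\langle 0| \otimes I + |1\rangle\langle 1| \otimes \big(|0\rangle\langle 1| + |1\rangle\langle 0|\big)$. The C-NOT gate can be used to create entanglement between previously unentangled qubits and is represented by the matrix

$$\text{C-NOT} \doteq \begin{pmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 0 & 1 \\ 0 & 0 & 1 & 0 \end{pmatrix} .$$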

The first qubit of a C-NOT gate is called the “control qubit” and the second the “target qubit.” From the point of view of the computational basis, the control bit is fixed and the target bit is changed if and only if the control bit takes the value 1, as can be seen by noting that the lower-right submatrix, corresponding to that condition, is that of the quantum bit-flip operator $\sigma_1$, whereas the upper-left submatrix block, corresponding to the opposite condition, is that of the identity operator, $I$. The gate is usually drawn as shown in Fig. 3.4, with the control bit on top and the target bit on the bottom.
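As a quick check of this description, the sketch below applies the C-NOT matrix to each computational-basis state and reads off the output bits. The ordering of basis states as |00⟩, |01⟩, |10⟩, |11⟩ (first qubit as the most significant bit) and the helper names are assumptions made for illustration.

```python
# C-NOT in the computational basis, ordered |00>, |01>, |10>, |11>.
CNOT = [
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 0],
]

def apply(matrix, vector):
    """Matrix-vector product for plain nested lists."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def cnot_on_bits(b1, b2):
    """Send the basis state |b1>|b2> through the gate and return the output bits."""
    state = [0, 0, 0, 0]
    state[2 * b1 + b2] = 1          # first qubit is the most significant bit
    out_index = apply(CNOT, state).index(1)
    return out_index >> 1, out_index & 1

for b1 in (0, 1):
    for b2 in (0, 1):
        print(f"|{b1}{b2}> -> |{''.join(map(str, cnot_on_bits(b1, b2)))}>")
```

The control bit passes through unchanged and the target bit becomes b1 ⊕ b2, in agreement with Fig. 3.4.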

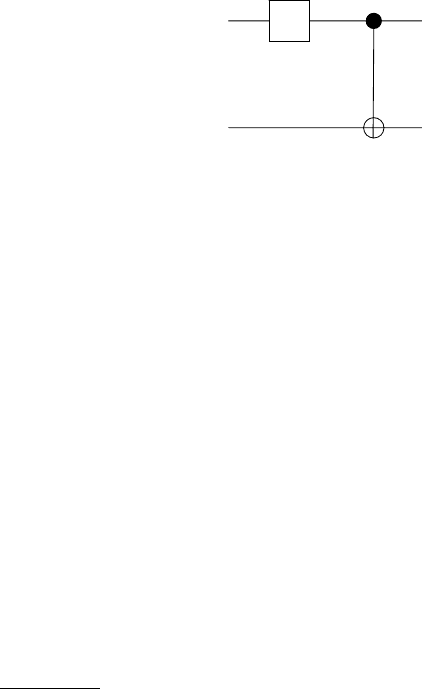

This gate can be used together with the single-qubit Hadamard gate to

create Bell states from two independent qubits initially described collectively

by an unentangled product state, as described by the quantum circuit shown

in Fig. 3.5.

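[Circuit diagram labels: Hadamard gate H; inputs $|b_1\rangle$ and $|b_2\rangle$; output $|B_{b_1 b_2}\rangle$.]

Fig. 3.5. A quantum circuit for the creation of the (entangled) Bell states $|B_{b_1 b_2}\rangle$ defined by Eqs. 6.13–14 from a product state of two qubits, $|b_1\rangle|b_2\rangle$.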
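The circuit can be simulated directly with small matrices. This is a minimal sketch that assumes the Hadamard gate acts on the first qubit before the C-NOT, as is standard for this circuit; the labeling of the resulting Bell states in Eqs. 6.13–14 is not reproduced here, so the code only reports the output amplitudes. The `concurrence` helper, 2|ad − bc| for amplitudes (a, b, c, d), is a standard pure-state entanglement check added for illustration.

```python
import math

S = 1 / math.sqrt(2)
H = [[S, S], [S, -S]]      # single-qubit Hadamard gate
I2 = [[1, 0], [0, 1]]      # single-qubit identity
CNOT = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]]

def kron(a, b):
    """Kronecker (tensor) product of two matrices given as nested lists."""
    return [[x * y for x in ra for y in rb] for ra in a for rb in b]

def matvec(m, v):
    return [sum(x * y for x, y in zip(row, v)) for row in m]

def bell_circuit(b1, b2):
    """Apply H to the first qubit, then C-NOT, to the product state |b1>|b2>."""
    state = [0.0] * 4
    state[2 * b1 + b2] = 1.0
    state = matvec(kron(H, I2), state)
    return matvec(CNOT, state)

def concurrence(state):
    """2|ad - bc| for a pure two-qubit state: 0 for product states, 1 for Bell states."""
    a, b, c, d = state
    return 2 * abs(a * d - b * c)

for b1 in (0, 1):
    for b2 in (0, 1):
        out = bell_circuit(b1, b2)
        print(f"|{b1}{b2}> ->", [round(x, 3) for x in out],
              "concurrence:", round(concurrence(out), 3))
```

Each of the four product inputs yields a distinct maximally entangled output.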

It is important to note that the distinction between the control and target qubits is relative to the choice of basis. Consider the effect of a quantum gate that acts as a controlled gate in the computational basis in a different basis, say the diagonal basis $\{|+\rangle, |-\rangle\}$. For example, the effect of the C-NOT gate in this basis is to invert the role of control and target, leaving the “target qubit” unaffected but interchanging the states $|+\rangle$ and $|-\rangle$ of the “control qubit” in the event that the former enters the gate in the state $|-\rangle$.
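This role reversal can be verified numerically. The sketch below assumes the diagonal basis consists of the states (|0⟩ + |1⟩)/√2 and (|0⟩ − |1⟩)/√2, which are related to the computational basis by the Hadamard matrix, and re-expresses the C-NOT matrix in that basis on both qubits. The helper names are illustrative.

```python
import math

S = 1 / math.sqrt(2)
H = [[S, S], [S, -S]]
CNOT = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]]

def kron(a, b):
    return [[x * y for x in ra for y in rb] for ra in a for rb in b]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

# Change of basis on both qubits: (H x H) C-NOT (H x H).
HH = kron(H, H)
cnot_in_diagonal_basis = matmul(HH, matmul(CNOT, HH))

# A C-NOT whose control is the SECOND qubit and whose target is the FIRST.
REVERSED_CNOT = [[1, 0, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0], [0, 1, 0, 0]]

for row in cnot_in_diagonal_basis:
    print([round(x, 3) for x in row])
```

Up to rounding, the printed matrix coincides with `REVERSED_CNOT`: in the diagonal basis the second qubit conditions a flip of the first.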

Controlled versions of all the single-qubit gates described previously can be similarly implemented; their matrix representations can be obtained from that of the C-NOT gate above by simply replacing the lower-right 2 × 2 submatrix with that describing the single-qubit gate.²² That is, one constructs operators of the form $|0\rangle\langle 0| \otimes I + |1\rangle\langle 1| \otimes U$, where $U$ is the operation to be conditionally performed.
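The construction can be written out in a few lines. This is a minimal sketch using plain nested lists; the function name `controlled` and the choice of the bit-flip and phase-flip matrices as examples for U are illustrative.

```python
I2 = [[1, 0], [0, 1]]
P0 = [[1, 0], [0, 0]]   # projector |0><0|
P1 = [[0, 0], [0, 1]]   # projector |1><1|

def kron(a, b):
    """Kronecker (tensor) product of two matrices given as nested lists."""
    return [[x * y for x in ra for y in rb] for ra in a for rb in b]

def controlled(u):
    """Build |0><0| (x) I + |1><1| (x) U for a 2x2 matrix U."""
    left = kron(P0, I2)
    right = kron(P1, u)
    return [[x + y for x, y in zip(row_l, row_r)] for row_l, row_r in zip(left, right)]

X = [[0, 1], [1, 0]]    # bit flip
Z = [[1, 0], [0, -1]]   # phase flip

for name, gate in (("controlled-X", X), ("controlled-Z", Z)):
    print(name)
    for row in controlled(gate):
        print(" ", row)
```

With U chosen as the bit flip, the result reproduces the C-NOT matrix: the upper-left block is the identity and the lower-right block is U.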

One similarly obtains multiple-qubit controlled gates by generalizing this construction. For example, in order to extend the controlled-NOT gate so as to perform the NOT operation on a target qubit conditional on the state of two control qubits, one performs a unitary operation represented by the matrix

$$\text{C-C-NOT} \doteq \begin{pmatrix}
1 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 1 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 1 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 1 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 1 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 1 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 1 \\
0 & 0 & 0 & 0 & 0 & 0 & 1 & 0
\end{pmatrix} .$$

²² Explicit matrix representations for such gates in the case of path and polarization degrees of freedom are readily worked out, and have been provided in full detail in the literature; for example, see [161, 315].

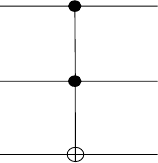

This is the control-control-NOT (or Toffoli) gate, whereby the third (target) bit is flipped if and only if both control bits take the value 1: the upper-left block is the two-qubit-space identity whereas the lower-right block is the matrix representation of a control-NOT. The quantum circuit for this gate is shown in Fig. 3.6.

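[Circuit diagram: inputs $|b_1\rangle$, $|b_2\rangle$ and $|b_3\rangle$; outputs $|b_1\rangle$, $|b_2\rangle$ and $|(b_1 \wedge b_2) \oplus b_3\rangle$.]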

Fig. 3.6. Action of the C-C-NOT (or Toffoli) gate on three qubits. In the ket at

lower-right, ∧ signifies the AND operation and ⊕ indicates addition mod 2.
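The truth table of the gate can be read off from its matrix. The sketch below builds the 8 × 8 matrix as an identity with its last two rows exchanged and checks the action on every basis state; the basis ordering (first qubit as the most significant bit) and the helper names are assumptions for illustration.

```python
def toffoli_matrix():
    """8x8 identity with the last two rows exchanged (|110> <-> |111>)."""
    m = [[1 if r == c else 0 for c in range(8)] for r in range(8)]
    m[6], m[7] = m[7], m[6]
    return m

TOFFOLI = toffoli_matrix()

def toffoli_on_bits(b1, b2, b3):
    """Send |b1>|b2>|b3> through the gate and return the three output bits."""
    index = 4 * b1 + 2 * b2 + b3
    column = [row[index] for row in TOFFOLI]   # image of the basis state
    out = column.index(1)
    return (out >> 2) & 1, (out >> 1) & 1, out & 1

for index in range(8):
    bits = ((index >> 2) & 1, (index >> 1) & 1, index & 1)
    print(bits, "->", toffoli_on_bits(*bits))
```

Only the inputs with both control bits equal to 1 have their third bit flipped, matching the expression (b1 ∧ b2) ⊕ b3 in Fig. 3.6.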

4 Classical information and communication

Information theory has, until relatively recently, almost exclusively focused on what is now considered classical information, namely, information as stored in or transferred by classical mechanical systems. Quantum information theory has also largely consisted of the extension of methods developed for classical information to analogous situations involving quantum systems. Furthermore, because measurements play an essential role in quantum communication and quantum information processing, and measurements of quantum systems produce classical information, traditional information-theoretical methods play an essential role in both. Accordingly, a concise overview of the elements of classical information theory is provided in this chapter. Several specific classical information measures are discussed and related here, as are classical error-correction and data-compression techniques. The quantum analogues of these concepts and methods are discussed in subsequent chapters.

Classical information theory is based largely on the conception of information developed by Claude Shannon in the late 1940s, which uses the bit as the unit of information [378].¹ Entropies hold a central place in this approach to information, following naturally from Shannon’s conception of information as the improbability of the occurrence of symbols in memories or signals. The choice of a binary unit of information naturally leads to the choice of 2 as the logarithmic base for measuring information. Any device having two states stable over the pertinent time scale is capable of storing one bit of information. A number, $n$, of identical such devices can store $\log_2 2^n = n$ bits of information, because there are $2^n$ states available to them as a collective. An example of such a set of devices is a memory register. A variable taking two values 0 and 1 is also referred to as a bit and can be represented by $x \in GF(2)$; similarly, strings of bits can be represented by $x \in GF(2)^n$.

¹ John W. Tukey first introduced the term bit for the binary information unit [12].

Consider first the properties of a string of characters that are produced and sequentially transmitted in a classical communication channel. The Shannon information content of such a string of text can be understood in terms of how improbable the string is to occur in such a channel. The information content (or self-information, or surprisal) associated with a given signal event $x$ is

$$I(x) = \log_2 \frac{1}{p(x)} = -\log_2 p(x) , \tag{4.1}$$

where $p(x)$ is the probability of occurrence of the event; the logarithm appears as a matter of mathematical convenience because the number of available states can be very large. The simplest events are the occurrence of individual symbols, in which case one is concerned with their probabilities, as in the case of bit transmission when one considers $p(0)$ and $p(1)$. The information associated with an event is considered obtained when the event actually occurs. This is consistent with the idea that no information is gained by learning an event that occurs with certainty whereas learning, for example, the outcome of a fair coin-toss provides all the information essential to the toss and entirely eliminates previous uncertainty as to its outcome. One generally considers a number of such events associated with a given signal.
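Eq. 4.1 is easy to evaluate for the two cases just mentioned. The following is a minimal sketch; the function name and the additional example probability of 1/8 are illustrative choices.

```python
import math

def self_information(p):
    """Information content in bits, I(x) = log2(1 / p(x)), for 0 < p <= 1."""
    if not 0.0 < p <= 1.0:
        raise ValueError("probability must lie in (0, 1]")
    return math.log2(1.0 / p)

for label, p in (("certain event", 1.0), ("fair coin toss", 0.5), ("one of eight", 0.125)):
    print(f"{label}: p = {p}, I = {self_information(p)} bits")
```

An event that occurs with certainty carries no information, a fair coin toss carries one bit, and less probable events carry more.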

A finite number of mutually exclusive events together with their probabilities constitutes a finite scheme. To every such scheme there is an associated uncertainty, because only the probabilities of occurrence of these events are known.² This uncertainty is captured by the Shannon entropy, which is introduced below. The information associated with the joint occurrence of two independent events, which happens with a probability given by the product of those of the individual events, can therefore be written as a sum of the associated information values.³
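The additivity just stated can be made explicit in one line. This is a short worked step in the notation of Eq. 4.1, where the symbols $x$ and $y$ for the two independent events are introduced here for illustration:

$$I(x, y) = -\log_2\big[p(x)\,p(y)\big] = -\log_2 p(x) - \log_2 p(y) = I(x) + I(y) .$$

For instance, two independent fair coin tosses each carry 1 bit, and their joint outcome, which has probability 1/4, carries 2 bits.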

4.1 Communication channels

The fundamental task of communication is to obtain, at a remote destination and with the greatest possible accuracy, information sent from a given initial source through a channel joining their locations. One way of defining a classical information source is as a sequence of probability distributions over sets of strings produced in a number of emissions by a transmitter into such a communication channel to a receiver. The output at the end of the channel endows an agent at the destination with a given amount of information about its source, provided the information is not altered during transmission, that is, provided the channel is noiseless. The essential requirement on a means of communication is that any message sent via it belong to a set of possible messages that could have been sent by a source in this way, because the actual message being transmitted is not known a priori. Assuming a finite number of such possible messages, any monotonic function of the number of messages is a good measure of the information produced when one message is selected from this set. The logarithmic function above is a natural choice because, in addition to being mathematically convenient, it can also be retained when the number of possible messages is infinite.

² Various measures of uncertainty are discussed in [427].

³ This follows from Bayes’ theorem; see Sect. A.8.

Communication channels can be attributed capacities for transmitting information. By comparing the entropy of a source with the capacity of a channel, one can determine whether the information produced by the source can be fully transmitted through the channel. A very simple description of a realistic classical communication channel is that of the additive noise channel wherein the transmitted signal, $s(t)$, is influenced by additive random noise, $n(t)$; see Fig. 4.1. Due to the presence of this noise, the resulting signal, $r(t)$, is given by

$$r(t) = s(t) + n(t) . \tag{4.2}$$

[Block diagram labels: source, transmitter, receiver, destination; source of noise.]

Fig. 4.1. The additive noise channel.
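Eq. 4.2 can be illustrated with a sampled signal. The sketch below is only an example under stated assumptions: the text does not specify the noise statistics, so zero-mean Gaussian noise is assumed here, and the sinusoidal test signal, the sample count and the function name `transmit` are all hypothetical.

```python
import math
import random

def transmit(signal, noise_std, seed=0):
    """Return (received, noise) with received[k] = signal[k] + noise[k].

    Assumption: the noise samples are independent zero-mean Gaussians.
    """
    rng = random.Random(seed)          # seeded for reproducibility
    noise = [rng.gauss(0.0, noise_std) for _ in signal]
    received = [s + n for s, n in zip(signal, noise)]
    return received, noise

# Hypothetical test signal: two periods of a sampled sinusoid.
signal = [math.sin(2 * math.pi * t / 20) for t in range(40)]
received, noise = transmit(signal, noise_std=0.1)

worst = max(abs(r - s) for r, s in zip(received, signal))
print(f"largest deviation of r(t) from s(t): {worst:.3f}")
```

Setting `noise_std` to zero recovers the noiseless channel, in which the received signal equals the transmitted one.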

The primary model of a discrete, memoryless noisy channel is the binary symmetric channel (BSC), which is schematized in Fig. 4.2. In this channel there is a probability $p$ that noise can introduce a bit error, an unintended change of value of any given bit during transmission.⁴ Error correction, which is discussed in Section 4.5, is the process of eliminating such errors.

[Diagram: initial bit values 0 and 1 at left, final bit values 0 and 1 at right; the transitions between unlike values are each labeled p.]

Fig. 4.2. Schematic of the binary symmetric channel (BSC). A transition between values of any given bit occurs with probability $p$, known as the symbol-error probability. Initial bit values appear at left, final bit values at right. Bit values therefore remain unchanged with probability $1-p$.
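A binary symmetric channel is straightforward to simulate. The following is a minimal sketch, assuming independent bit flips drawn from a seeded pseudo-random generator; the function name, the seed and the message length are illustrative choices.

```python
import random

def binary_symmetric_channel(bits, p, seed=0):
    """Flip each bit independently with probability p (memoryless channel)."""
    rng = random.Random(seed)
    return [b ^ 1 if rng.random() < p else b for b in bits]

# Hypothetical message: 100000 bits alternating between 0 and 1.
sent = [k % 2 for k in range(100_000)]
received = binary_symmetric_channel(sent, p=0.1)

errors = sum(s != r for s, r in zip(sent, received))
print(f"observed bit-error rate: {errors / len(sent):.4f}")
```

For a long message the observed error rate approaches the symbol-error probability p, while p = 0 leaves every bit unchanged.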

4.2 Shannon entropy

The traditional measure of classical information is the Shannon entropy,

$$H(X) \equiv H[p_1, p_2, \ldots, p_n] = -\sum_{i=1}^{n} p_i \log_2 p_i , \tag{4.3}$$

which is a functional of the probability distribution $\{p_i\}_{i=1}^{n}$, associated with events $x_i$ in a sample space $S = \{x_i\}$, given by the probability mass function as $p_i \equiv p_X(x_i) = P(X = x_i)$ for the random variable $X$ over $n$ possible values that characterizes the information.⁵ The Shannon entropy is thus the average information associated with the set of events, in units of bits.⁶ It can also be thought of as the number of bits, on average, needed to describe the random variable $X$.
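Eq. 4.3 translates directly into code. This is a minimal sketch; the function name is illustrative, and terms with zero probability are skipped on the usual convention that they contribute nothing to the sum.

```python
import math

def shannon_entropy(probabilities):
    """H = sum_i p_i * log2(1 / p_i) in bits, skipping zero-probability terms."""
    if abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * math.log2(1.0 / p) for p in probabilities if p > 0)

examples = {
    "certain outcome": [1.0],
    "fair coin": [0.5, 0.5],
    "biased coin": [0.9, 0.1],
    "four equally likely symbols": [0.25] * 4,
    "three symbols": [0.5, 0.25, 0.25],
}
for label, dist in examples.items():
    print(f"{label}: H = {shannon_entropy(dist):.4f} bits")
```

The entropy vanishes for a certain outcome, equals one bit for a fair coin, and is lower for a biased coin than for a fair one.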

If one considers a sequence of $n$ independent and identically distributed (i.i.d.) random variables, $X^n$, drawn from a probability mass function $p(X = x_i)$, the probability of a typical sequence is of order $2^{-nH(X)}$; there are, accordingly, roughly $2^{nH(X)}$ possible such sequences.⁷ The latter can be seen by noting that typical sequences will contain $p(x_i)n$ instances of $x_i$, so that the number of typical sequences is $n!/\prod_{i}^{n}(np(x_i))!$ which, under the Stirling

⁴ More detailed models of classical communication channels can be found, for example, in [344].

⁵ See Sect. A.2 for pertinent definitions. The events associated with the random variable $X$ here correspond, for example, to different numbers or letters of an alphabet on the sides of a die appearing face-up in a toss.

⁶ Such a measure and a fundamental unit of information were also independently (and previously) introduced by Alan Turing, who chose 10 as a base rather than Shannon’s 2, and so the “ban” rather than the bit as the fundamental information unit, while he was working at Bletchley Park during the Second World War. For a description of Turing’s formulation of information entropy, which involved the “weight of evidence,” see [190].

⁷ This is known as the asymptotic equipartition property (AEP). One can distinguish two sets of sequences, the typical and atypical sequences, being complements of each other, where the typical sequences are those with probability close to $2^{-nH(X)}$; in particular, the typical set is that of sequences $\{x_1, x_2, \ldots, x_n\}$ for the random variable $X$ such that $2^{-nH(X)-\epsilon} \leq p(x_1, x_2, \ldots, x_n) \leq 2^{-nH(X)+\epsilon}$ for all $\epsilon > 0$. The AEP is a consequence of the weak law of large numbers, namely that, for i.i.d. random variables, the average is close to the expected value of $X$ for large $n$. The theorem of typical sequences can also be proven, which supports the notion that in the limit of large sequence length almost all sequences produced by a source belong to this set.
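The counting argument for typical sequences can be checked numerically. The sketch below compares the exact count n!/∏(n p_i)! with 2^{nH(X)} on a logarithmic scale; the example distribution (1/2, 1/4, 1/4), the sequence lengths and the function names are illustrative assumptions, chosen so that every n p_i is an integer.

```python
import math

def log2_sequence_count(counts):
    """log2 of n! / prod(c_i!), the number of sequences with the given symbol counts."""
    value = math.factorial(sum(counts))
    for c in counts:
        value //= math.factorial(c)   # each partial quotient is an exact integer
    return math.log2(value)

def entropy_from_counts(counts):
    """Shannon entropy in bits of the distribution p_i = c_i / n."""
    n = sum(counts)
    return sum((c / n) * math.log2(n / c) for c in counts if c > 0)

for counts in ((50, 25, 25), (500, 250, 250)):
    n = sum(counts)
    rate = log2_sequence_count(counts) / n
    print(f"n = {n}: log2(count)/n = {rate:.4f}, H(X) = {entropy_from_counts(counts):.4f}")
```

The per-symbol exponent of the count approaches H(X) from below as n grows, which is the sense in which there are roughly 2^{nH(X)} typical sequences.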