Meszaros G. xUnit Test Patterns Refactoring Test Code


Chapter 3 Goals of Test Automation

Goal: Simple Tests

To avoid “biting off more than they can chew,” our tests should be small and test one thing at a time. Keeping tests simple is particularly important during test-driven development because code is written to pass one test at a time and we want each test to introduce only one new bit of behavior into the SUT. We should strive to Verify One Condition per Test by creating a separate Test Method (page 348) for each unique combination of pre-test state and input. Each Test Method should drive the SUT through a single code path.⁴
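A minimal sketch of Verify One Condition per Test, assuming Python's unittest (an xUnit-family framework) and a hypothetical `discount_rate` function with an assumed threshold of 100. Each Test Method covers one path through the SUT, including a boundary value of the "discounted" equivalence class.

```python
import unittest


def discount_rate(order_total: float) -> float:
    """Hypothetical SUT: orders of 100 or more get 10% off; others get none."""
    if order_total < 0:
        raise ValueError("order total cannot be negative")
    if order_total >= 100:
        return 0.10
    return 0.0


class DiscountRateTest(unittest.TestCase):
    """One Test Method per path through discount_rate, each verifying one condition."""

    def test_order_below_threshold_gets_no_discount(self):
        self.assertEqual(discount_rate(99.99), 0.0)

    def test_order_at_threshold_gets_discount(self):
        # Boundary value of the "discounted" equivalence class.
        self.assertEqual(discount_rate(100), 0.10)

    def test_negative_order_total_is_rejected(self):
        with self.assertRaises(ValueError):
            discount_rate(-1)
```

Run it with `python -m unittest <module>`; because each test exercises a single path, a failing test name identifies which path broke.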

The major exception to the mandate to keep Test Methods short occurs with customer tests that express real usage scenarios of the application. Such extended tests offer a useful way to document how a potential user of the software would go about using it; if these interactions involve long sequences of steps, the Test Methods should reflect this reality.

Goal: Expressive Tests

The “expressiveness gap” can be addressed by building up a library of Test Utility Methods that constitute a domain-specific testing language. Such a collection of methods allows test automaters to express the concepts that they wish to test without having to translate their thoughts into much more detailed code. Creation Methods (page 415) and Custom Assertions (page 474) are good examples of the building blocks that make up such a Higher-Level Language.
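The following sketch shows what a Creation Method and a Custom Assertion might look like, again assuming Python's unittest. `Customer`, `Invoice`, `bill`, `create_anonymous_customer`, and `assert_invoice_equals` are hypothetical names chosen for illustration; placing the helpers in a shared base class is one common arrangement, not the only one.

```python
import unittest
from dataclasses import dataclass


# Hypothetical SUT types and function, assumed for illustration.
@dataclass
class Customer:
    name: str
    credit_limit: int


@dataclass
class Invoice:
    customer: Customer
    total: int


def bill(customer: Customer, amounts: list) -> Invoice:
    return Invoice(customer, sum(amounts))


class BillingTestCase(unittest.TestCase):
    """Test Utility Methods forming a small Higher-Level Language."""

    def create_anonymous_customer(self, credit_limit=1000):
        # Creation Method: hides constructor details the test doesn't care about.
        return Customer(name="Anonymous Customer", credit_limit=credit_limit)

    def assert_invoice_equals(self, expected, actual):
        # Custom Assertion: compares the attributes that matter, in domain terms.
        self.assertEqual(expected.customer, actual.customer, "wrong customer")
        self.assertEqual(expected.total, actual.total, "wrong total")


class BillTest(BillingTestCase):
    def test_invoice_total_is_sum_of_amounts(self):
        customer = self.create_anonymous_customer()
        invoice = bill(customer, [300, 200])
        self.assert_invoice_equals(Invoice(customer, 500), invoice)
```

The Test Method reads in domain terms; if `Customer` gains a constructor parameter, only the Creation Method needs to change.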

The key to solving this dilemma is avoiding duplication within tests. The DRY principle (“Don’t repeat yourself”) of the Pragmatic Programmers (http://www.pragmaticprogrammer.com) should be applied to test code in the same way it is applied to production code. There is, however, a counterforce at play. Because the tests should Communicate Intent, it is best to keep the core test logic in each Test Method so it can be seen in one place. Nevertheless, this idea doesn’t preclude moving a lot of supporting code into Test Utility Methods, where it needs to be modified in only one place if it is affected by a change in the SUT.

Goal: Separation of Concerns

Separation of Concerns applies in two dimensions: (1) We want to keep test code separate from our production code (Keep Test Logic Out of Production Code) and (2) we want each test to focus on a single concern (Test Concerns Separately) to avoid Obscure Tests (page 186). A good example of what not to do is testing the business logic in the same tests as the user interface, because it involves testing two concerns at the same time. If either concern is modified (e.g., the user interface changes), all the tests would need to be modified as well. Testing one concern at a time may require separating the logic into different components. This is a key aspect of design for testability, a consideration that is explored further in Chapter 11, Using Test Doubles.

4 There should be at least one Test Method for each unique path through the code; often there will be several, one for each boundary value of the equivalence class.
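As an illustration of testing one concern at a time, this sketch (hypothetical names throughout, Python's unittest assumed) keeps a business rule and its presentation in separate functions, so each can be tested without the other.

```python
import unittest


def late_fee_cents(days_overdue: int) -> int:
    """Hypothetical business rule: 50 cents per day, capped at 1000 cents."""
    return min(days_overdue * 50, 1000)


def render_fee_label(fee_cents: int) -> str:
    """Hypothetical presentation code, kept apart from the business rule."""
    return f"Late fee: ${fee_cents / 100:.2f}"


class LateFeeTest(unittest.TestCase):
    # Business logic tested directly; no user interface is involved.
    def test_fee_is_capped(self):
        self.assertEqual(late_fee_cents(30), 1000)


class FeeLabelTest(unittest.TestCase):
    # Formatting tested on its own; a change to the label text affects only this test.
    def test_label_shows_dollars_and_cents(self):
        self.assertEqual(render_fee_label(250), "Late fee: $2.50")
```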

Tests Should Require Minimal Maintenance as the System Evolves Around Them

Change is a fact of life. Indeed, we write automated tests mostly to make change easier, so we should strive to ensure that our tests don’t inadvertently make change more difficult.

Suppose we want to change the signature of some method on a class. When we add a new parameter, suddenly 50 tests no longer compile. Does that result encourage us to make the change? Probably not. To counter this problem, we introduce a new method with the parameter and arrange to have the old method call the new method, defaulting the missing parameter to some value. Now all of the tests compile but 30 of them still fail! Are the tests helping us make the change?
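The delegation step described above might look like this in a minimal Python sketch; `Invoice`, `add_item`, and `add_item_with_discount` are hypothetical. The old method keeps its signature and forwards to the new one with a default value, so existing callers keep working.

```python
import unittest


class Invoice:
    """Hypothetical SUT whose add_item method needed an extra parameter."""

    def __init__(self):
        self.line_items = []

    def add_item(self, product, quantity):
        # Old signature kept so existing callers and tests still work;
        # it delegates, defaulting the missing parameter.
        self.add_item_with_discount(product, quantity, discount_percent=0)

    def add_item_with_discount(self, product, quantity, discount_percent):
        # New method carrying the added parameter.
        self.line_items.append((product, quantity, discount_percent))


class InvoiceTest(unittest.TestCase):
    def test_old_signature_defaults_discount_to_zero(self):
        invoice = Invoice()
        invoice.add_item("widget", 2)
        self.assertEqual(invoice.line_items, [("widget", 2, 0)])
```

In Python, a default argument value on a single method would have the same effect; the separate method mirrors the approach the text describes, which suits languages without default arguments, such as Java.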

Goal: Robust Test

Inevitably, we will want to make many kinds of changes to the code as a project unfolds and its requirements evolve. For this reason, we want to write our tests in such a way that the number of tests affected by any one change is quite small. That means we need to minimize overlap between tests. We also need to ensure that changes to the test environment don’t affect our tests; we do this by isolating the SUT from the environment as much as possible. This results in much more Robust Tests.
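One way to isolate the SUT from its environment is to inject the environmental dependency rather than read it directly. This sketch assumes a hypothetical `GreetingService` whose clock is passed in as a callable; the tests substitute a fixed time so their outcome does not depend on when they run.

```python
import unittest
from datetime import datetime


class GreetingService:
    """Hypothetical SUT. The clock is injected rather than read directly,
    isolating the SUT from the real system time."""

    def __init__(self, clock=datetime.now):
        self._clock = clock

    def greeting(self) -> str:
        return "Good morning" if self._clock().hour < 12 else "Good afternoon"


class GreetingServiceTest(unittest.TestCase):
    # Each test supplies a fixed clock, so the result no longer depends on
    # when the test suite happens to run.
    def test_morning_greeting(self):
        service = GreetingService(clock=lambda: datetime(2024, 1, 1, 9, 0))
        self.assertEqual(service.greeting(), "Good morning")

    def test_afternoon_greeting(self):
        service = GreetingService(clock=lambda: datetime(2024, 1, 1, 15, 0))
        self.assertEqual(service.greeting(), "Good afternoon")
```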

We should strive to Verify One Condition per Test. Ideally, only one kind of change should cause a test to require maintenance. System changes that affect fixture setup or teardown code can be encapsulated behind Test Utility Methods to further reduce the number of tests directly affected by the change.

What’s Next?

This chapter discussed why we have automated tests and specific goals we should try to achieve when writing Fully Automated Tests. Before moving on to Chapter 5, Principles of Test Automation, we need to take a short side-trip to Chapter 4, Philosophy of Test Automation, to understand the different mindsets of various kinds of test automaters.


Chapter 4 Philosophy of Test Automation

About This Chapter

Chapter 3, Goals of Test Automation, described many of the goals and benefits of having an effective test automation program in place. This chapter introduces some differences in the way people think about design, construction, and testing that change the way they might naturally apply these patterns. The “big picture” questions include whether we write tests first or last, whether we think of them as tests or examples, whether we build the software from the inside-out or from the outside-in, whether we verify state or behavior, and whether we design the fixture upfront or test by test.

Why Is Philosophy Important?

What’s philosophy got to do with test automation? A lot! Our outlook on life (and testing) strongly affects how we go about automating tests. When I was discussing an early draft of this book with Martin Fowler (the series editor), we came to the conclusion that there were philosophical differences between how different people approached xUnit-based test automation. These differences lie at the heart of why, for example, some people use Mock Objects (page 544) sparingly and others use them everywhere.

Since that eye-opening discussion, I have been on the lookout for other philosophical differences among test automaters. These alternative viewpoints tend to come up as a result of someone saying, “I never (find a need to) use that pattern” or “I never run into that smell.” By questioning these statements, I can learn a lot about the testing philosophy of the speaker. Out of these discussions have come the following philosophical differences:


• “Test after” versus “test first”

• Test-by-test versus test all-at-once

• “Outside-in” versus “inside-out” (applies independently to design and coding)

• Behavior verification versus state verification

• “Fixture designed test-by-test” versus “big fixture design upfront”

Some Philosophical Differences

Test First or Last?

Traditional software development prepares and executes tests after all software is designed and coded. This order of steps holds true for both customer tests and unit tests. In contrast, the agile community has made writing the tests first the standard way of doing things. Why is the order in which testing and development take place important? Anyone who has tried to retrofit Fully Automated Tests (page 22) onto a legacy system will tell you how much more difficult it is to write automated tests after the fact. Just having the discipline to write automated unit tests after the software is “already finished” is challenging, whether or not the tests themselves are easy to construct. Even if we design for testability, the likelihood that we can write the tests easily and naturally without modifying the production code is low. When tests are written first, however, the design of the system is inherently testable.

Writing the tests first has some other advantages. When tests are written first and we write only enough code to make the tests pass, the production code tends to be more minimalist. Functionality that is optional tends not to be written; no extra effort goes into fancy error-handling code that doesn’t work. The tests tend to be more robust because only the necessary methods are provided on each object based on the tests’ needs.

Access to the state of the object for the purposes of fixture setup and result verification comes much more naturally if the software is written “test first.” For example, we may avoid the test smell Sensitive Equality (see Fragile Test on page 239) entirely because the correct attributes of objects are used in assertions rather than comparing the string representations of those objects. We may even find that we don’t need to implement a String representation at all because we have no real need for it. The ability to substitute dependencies with Test Doubles (page 522) for the purpose of verifying the outcome is also greatly enhanced because substitutable dependency is designed into the software from the start.
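A small sketch of attribute-based assertions that sidestep Sensitive Equality, assuming Python's unittest and a hypothetical `Money` value object. The dataclass supplies equality and a default representation, so no hand-written string format is needed for testing.

```python
import unittest
from dataclasses import dataclass


@dataclass
class Money:
    """Hypothetical value object, written test first: no custom string format."""
    amount_cents: int
    currency: str


def add(a: Money, b: Money) -> Money:
    if a.currency != b.currency:
        raise ValueError("currency mismatch")
    return Money(a.amount_cents + b.amount_cents, a.currency)


class MoneyTest(unittest.TestCase):
    def test_add_same_currency(self):
        total = add(Money(150, "USD"), Money(250, "USD"))
        # Assert on the attributes, not on str(total): a change to the
        # string representation cannot break this test (no Sensitive Equality).
        self.assertEqual(total.amount_cents, 400)
        self.assertEqual(total.currency, "USD")
```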

Tests or Examples?

Whenever I mention the concept of writing automated tests for software before the software has been written, some listeners get strange looks on their faces. They ask, “How can you possibly write tests for software that doesn’t exist?” In these cases, I follow Brian Marick’s lead by reframing the discussion to talk about “examples” and example-driven development (EDD). It seems that examples are much easier for some people to envision writing before code than are “tests.” The fact that the examples are executable and reveal whether the requirements have been satisfied can be left for a later discussion or a discussion with people who have a bit more imagination.

By the time this book is in your hands, a family of EDD frameworks is likely to have emerged. The Ruby-based RSpec kicked off the reframing of TDD to EDD, and the Java-based JBehave followed shortly thereafter. The basic design of these “unit test frameworks” is the same as xUnit but the terminology has changed to reflect the Executable Specification (see Goals of Test Automation on page 21) mindset.

Another popular alternative for specifying components that contain business logic is to use Fit tests. These will invariably be more readable by nontechnical people than something written in a programming language regardless of how “business friendly” we make the programming language syntax!

Test-by-Test or Test All-at-Once?

The test-driven development process encourages us to “write a test” and then “write some code” to pass that test. This process isn’t a case of all tests being written before any code, but rather the writing of tests and code being interleaved in a very fine-grained way. “Test a bit, code a bit, test a bit more”: this is incremental development at its finest. Is this approach the only way to do things? Not at all! Some developers prefer to identify all tests needed by the current feature before starting any coding. This strategy enables them to “think like a client” or “think like a tester” and lets developers avoid being sucked into “solution mode” too early.

Test-driven purists argue that we can design more incrementally if we build the software one test at a time. “It’s easier to stay focused if only a single test is failing,” they say. Many test drivers report not using the debugger very much because the fine-grained testing and incremental development leave little doubt about why tests are failing; the tests provide Defect Localization (see Goals of Test Automation on page 22) while the last change we made (which caused the problem) is still fresh in our minds.

This consideration is especially relevant when we are talking about unit tests because we can choose when to enumerate the detailed requirements (tests) of each object or method. A reasonable compromise is to identify all unit tests at the beginning of a task, possibly roughing in empty Test Method (page 348) skeletons, but coding only a single Test Method body at a time. We could also code all Test Method bodies and then disable all but one of the tests so that we can focus on building the production code one test at a time.
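A sketch of this compromise, assuming Python's unittest: the Test Method skeletons are roughed in upfront and disabled with `unittest.skip` until their turn comes. `parse_quantity` and the test names are hypothetical.

```python
import unittest


def parse_quantity(text: str) -> int:
    """Hypothetical SUT under construction; only the first test drives it so far."""
    return int(text)


class ParseQuantityTest(unittest.TestCase):
    # The single Test Method currently being made to pass.
    def test_parses_plain_number(self):
        self.assertEqual(parse_quantity("3"), 3)

    # Skeletons roughed in at the start of the task, disabled until their turn.
    @unittest.skip("not written yet")
    def test_rejects_negative_quantity(self):
        self.fail("Test not yet written")

    @unittest.skip("not written yet")
    def test_accepts_pieces_suffix(self):
        self.fail("Test not yet written")
```

Removing a `@unittest.skip` decorator enables the next test; the runner reports skipped tests separately, so the outstanding skeletons stay visible.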

With customer tests, we probably don’t want to feed the tests to the developer one by one within a user story. Therefore, it makes sense to prepare all the tests for a single story before we begin development of that story. Some teams prefer to have the customer tests for the story identified (although not necessarily fleshed out) before they are asked to estimate the effort needed to build the story, because the tests help frame the story.

Outside-In or Inside-Out?

Designing the software from the outside inward implies that we think first about black-box customer tests (also known as storytests) for the entire system and then think about unit tests for each piece of software we design. Along the way, we may also implement component tests for the large-grained components we decide to build.

Each of these sets of tests inspires us to “think like the client” well before we

start thinking like a software developer. We focus fi rst on the interface provided

to the user of the software, whether that user is a person or another piece of

software. The tests capture these usage patterns and help us enumerate the vari-

ous scenarios we need to support. Only when we have identifi ed all the tests are

we “fi nished” with the specifi cation. Some people prefer to design outside-in

but then code inside-out to avoid dealing with the “dependency problem.” This

tactic requires anticipating the needs of the outer software when writing the

tests for the inner software. It also means that we don’t actually test the outer

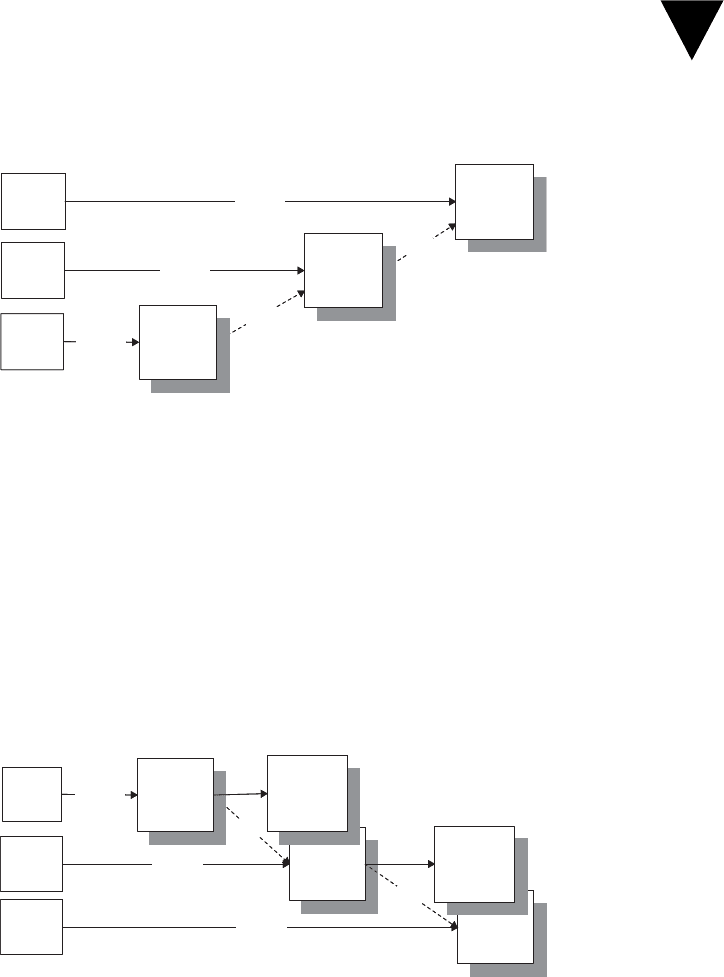

software in isolation from the inner software. Figure 4.1 illustrates this concept.

The top-to-bottom progression in the diagram implies the order in which we write the software. Tests for the middle and lower classes can take advantage of the already-built classes above them, a strategy that avoids the need for Test Stubs (page 529) or Mock Objects in many of the tests. We may still need to use Test Stubs in those tests where the inner components could potentially return specific values or throw exceptions, but cannot be made to do so on cue. In such a case, a Saboteur (see Test Stub) comes in very handy.

Figure 4.1 “Inside-out” development of functionality. Development starts with the innermost components and proceeds toward the user interface, building on the previously constructed components.

Other test drivers prefer to design and code from the outside-in. Writing the code outside-in forces us to deal with the “dependency problem.” We can use Test Stubs to stand in for the software we haven’t yet written, so that the outer layer of software can be executed and tested. We can also use Test Stubs to inject “impossible” indirect inputs (return values, out parameters, or exceptions) into the SUT to verify that it handles these cases correctly.

In Figure 4.2, we have reversed the order in which we build our classes. Because the subordinate classes don’t exist yet, we use Test Doubles to stand in for them.
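The following Python sketch shows a Test Stub standing in for a depended-on component that has not been written yet, plus a Saboteur variant that raises an exception on cue. `PriceConverter`, the rate-service classes, and the choice to return `None` on failure are assumptions for illustration.

```python
import unittest


class RateServiceStub:
    """Test Stub standing in for a rate service that hasn't been written yet;
    it feeds a canned indirect input (a return value) to the SUT."""

    def __init__(self, rate):
        self._rate = rate

    def rate_for(self, currency):
        return self._rate


class RateServiceSaboteur:
    """Saboteur: a Test Stub that raises an exception on cue."""

    def rate_for(self, currency):
        raise ConnectionError("rate service unavailable")


class PriceConverter:
    """Hypothetical outer-layer SUT; its depended-on component is passed in."""

    def __init__(self, rate_service):
        self._rate_service = rate_service

    def convert(self, amount, currency):
        try:
            return round(amount * self._rate_service.rate_for(currency), 2)
        except ConnectionError:
            return None  # assumed policy: no price when rates are unavailable


class PriceConverterTest(unittest.TestCase):
    def test_converts_using_stubbed_rate(self):
        converter = PriceConverter(RateServiceStub(1.5))
        self.assertEqual(converter.convert(10, "EUR"), 15.0)

    def test_returns_none_when_rate_service_fails(self):
        converter = PriceConverter(RateServiceSaboteur())
        self.assertIsNone(converter.convert(10, "EUR"))
```

Because the dependency is passed in, the same SUT code runs unchanged against the real service once it exists.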

Figure 4.2 “Outside-in” development of functionality supported by Test Doubles. Development starts at the outside using Test Doubles in place of the depended-on components (DOCs) and proceeds inward as requirements for each DOC are identified.

[Figures 4.1 and 4.2 diagram labels: Test, Exercise, SUT, Uses; Figure 4.2 adds Test Double.]


Once the subordinate classes have been built, we could remove the Test Doubles from many of the tests. Keeping them provides better Defect Localization at the price of potentially higher test maintenance costs.

State or Behavior Verification?

From writing code outside-in, it is but a small step to verifying behavior rather than just state. The “statist” view suggests that it is sufficient to put the SUT into a specific state, exercise it, and verify that the SUT is in the expected state at the end of the test. The “behaviorist” view says that we should specify not only the start and end states of the SUT, but also the calls the SUT makes to its dependencies. That is, we should specify the details of the calls to the “outgoing interfaces” of the SUT. These indirect outputs of the SUT are outputs just like the values returned by functions, except that we must use special measures to trap them because they do not come directly back to the client or test.

The behaviorist school of thought is sometimes called behavior-driven development. It is evidenced by the copious use of Mock Objects or Test Spies (page 538) throughout the tests. Behavior verification does a better job of testing each unit of software in isolation, albeit at a possible cost of more difficult refactoring. Martin Fowler provides a detailed discussion of the statist and behaviorist approaches in [MAS].
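To contrast the two views, this sketch (Python's unittest, hypothetical `Account` SUT) checks the same operation once by State Verification and once by Behavior Verification, using a hand-written Test Spy that captures the SUT's indirect output. A `unittest.mock.Mock` with `assert_called_once_with` could play the spy's role instead.

```python
import unittest


class AuditLogSpy:
    """Test Spy: records the calls the SUT makes so the test can
    inspect these indirect outputs afterwards."""

    def __init__(self):
        self.messages = []

    def record(self, message):
        self.messages.append(message)


class Account:
    """Hypothetical SUT: changes its own state and also calls a dependency."""

    def __init__(self, balance, audit_log):
        self.balance = balance
        self._audit_log = audit_log

    def withdraw(self, amount):
        self.balance -= amount
        self._audit_log.record(f"withdraw {amount}")


class AccountTest(unittest.TestCase):
    def test_withdraw_reduces_balance(self):
        # State Verification: only the SUT's end state is checked.
        account = Account(100, AuditLogSpy())
        account.withdraw(30)
        self.assertEqual(account.balance, 70)

    def test_withdraw_is_audited(self):
        # Behavior Verification: the outgoing call (indirect output) is checked.
        spy = AuditLogSpy()
        Account(100, spy).withdraw(30)
        self.assertEqual(spy.messages, ["withdraw 30"])
```

The second test would break if `withdraw` changed how it talks to the audit log, even with the balance still correct; that coupling is the refactoring cost mentioned above.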

Fixture Design Upfront or Test-by-Test?

In the traditional test community, a popular approach is to define a “test bed” consisting of the application and a database already populated with a variety of test data. The content of the database is carefully designed to allow many different test scenarios to be exercised.

When the fixture for xUnit tests is approached in a similar manner, the test automater may define a Standard Fixture (page 305) that is then used for all the Test Methods of one or more Testcase Classes (page 373). This fixture may be set up as a Fresh Fixture (page 311) in each Test Method using Delegated Setup (page 411) or in the setUp method using Implicit Setup (page 424). Alternatively, it can be set up as a Shared Fixture (page 317) that is reused by many tests. Either way, the test reader may find it difficult to determine which parts of the fixture are truly pre-conditions for a particular Test Method.

The more agile approach is to custom-design a Minimal Fixture (page 302) for each Test Method. With this perspective, there is no “big fixture design upfront” activity. This approach is most consistent with using a Fresh Fixture.
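The contrast between a Standard Fixture and a Minimal Fixture can be sketched as follows, assuming Python's unittest and a hypothetical `Library` class. The first Testcase Class builds a general fixture in `setUp` (Implicit Setup); the second builds a Fresh Fixture per test through a Creation Method (Delegated Setup).

```python
import unittest


class Library:
    """Hypothetical SUT."""

    def __init__(self):
        self._borrowers = {}  # title -> borrower, or None if on the shelf

    def add_book(self, title):
        self._borrowers[title] = None

    def lend(self, title, member):
        if title not in self._borrowers or self._borrowers[title] is not None:
            raise ValueError(f"{title!r} is not available")
        self._borrowers[title] = member

    def borrower_of(self, title):
        return self._borrowers[title]


class StandardFixtureTest(unittest.TestCase):
    # Implicit Setup of a general fixture shared by every Test Method; the
    # reader cannot tell which of these books this particular test needs.
    def setUp(self):
        self.library = Library()
        for title in ["Dune", "Emma", "Ulysses", "Walden"]:
            self.library.add_book(title)
        self.library.lend("Emma", "alice")

    def test_lend_available_book(self):
        self.library.lend("Dune", "bob")
        self.assertEqual(self.library.borrower_of("Dune"), "bob")


class MinimalFixtureTest(unittest.TestCase):
    # Fresh, Minimal Fixture per test, built through a Creation Method
    # (Delegated Setup): each test shows exactly the state it depends on.
    def create_library_with(self, *titles):
        library = Library()
        for title in titles:
            library.add_book(title)
        return library

    def test_lend_available_book(self):
        library = self.create_library_with("Dune")
        library.lend("Dune", "bob")
        self.assertEqual(library.borrower_of("Dune"), "bob")

    def test_cannot_lend_book_already_on_loan(self):
        library = self.create_library_with("Dune")
        library.lend("Dune", "alice")
        with self.assertRaises(ValueError):
            library.lend("Dune", "bob")
```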


When Philosophies Differ

We cannot always persuade the people we work with to adopt our philosophy, of course. Even so, understanding that others subscribe to a different philosophy helps us appreciate why they do things differently. It’s not that these individuals don’t share our goals;¹ it’s just that they make the decisions about how to achieve those goals using a different philosophy. Understanding that different philosophies exist and recognizing which ones we subscribe to are good first steps toward finding some common ground between us.

My Philosophy

In case you were wondering what my personal philosophy is, here it is:

• Write the tests first!

• Tests are examples!

• I usually write tests one at a time, but sometimes I list all the tests I can think of as skeletons upfront.

• Outside-in development helps clarify which tests are needed for the next layer inward.

• I use primarily State Verification (page 462) but will resort to Behavior Verification (page 468) when needed to get good code coverage.

• I perform fixture design on a test-by-test basis.

There! Now you know where I’m coming from.

What’s Next?

This chapter introduced the philosophies that anchor software design, construction, testing, and test automation. Chapter 5, Principles of Test Automation, describes key principles that will help us achieve the goals described in Chapter 3, Goals of Test Automation. We will then be ready to start looking at the overall test automation strategy and the individual patterns.

1 For example, high-quality software, fit for purpose, on time, under budget.
