Meszaros G. xUnit Test Patterns: Refactoring Test Code


Chapter 7 xUnit Basics

somewhere, so we define them as methods of a Testcase Class.[3] We then pass the name of this Testcase Class (or the module or assembly in which it resides) to the Test Runner (page 377) to run our tests. This may be done explicitly, such as when invoking the Test Runner on a command line, or implicitly by the integrated development environment (IDE) that we are using.

What’s a Fixture?

The test fixture is everything we need to have in place to exercise the SUT. Typically, it includes at least an instance of the class whose method we are testing. It may also include other objects on which the SUT depends. Note that some members of the xUnit family call the Testcase Class the test fixture, a preference that likely reflects an assumption that all Test Methods on the Testcase Class should use the same fixture. This unfortunate name collision makes discussing test fixtures particularly problematic. In this book, I have used different names for the Testcase Class and the test fixture it creates. I trust that the reader will translate this terminology to the terminology of his or her particular member of the xUnit family.
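Here is a minimal Ruby sketch of the definition. Ruby is used because Figure 7.2 shows a Ruby runner. The Invoice SUT, the Customer object it depends on, and the hand-rolled assert_equal are all hypothetical. The point is that setup builds both the instance under test and the object it depends on, and those two objects together make up the fixture.

```ruby
# Hypothetical depended-on object and SUT, used only for illustration.
Customer = Struct.new(:name, :discount_percent)

class Invoice
  def initialize(customer)
    @customer = customer
    @line_amounts = []
  end

  def add_line(amount_in_cents)
    @line_amounts << amount_in_cents
  end

  # Total in cents after applying the customer's percentage discount.
  def total
    @line_amounts.sum * (100 - @customer.discount_percent) / 100
  end
end

# Testcase Class: setup builds the test fixture (the SUT plus what it depends on).
class InvoiceTest
  def setup
    @customer = Customer.new("Acme", 10)  # object the SUT depends on
    @invoice  = Invoice.new(@customer)    # instance of the class under test
  end

  def test_total_applies_discount
    @invoice.add_line(1_000)              # exercise the SUT
    assert_equal(900, @invoice.total)     # verify the outcome
  end

  private

  # Hand-rolled stand-in for an xUnit Assertion Method.
  def assert_equal(expected, actual)
    raise "expected #{expected.inspect}, got #{actual.inspect}" unless expected == actual
  end
end

invoice_test = InvoiceTest.new
invoice_test.setup
invoice_test.test_total_applies_discount  # raises if the assertion fails
```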

Defining Suites of Tests

Most Test Runners “auto-magically” construct a test suite containing all of the Test Methods in the Testcase Class. Often, this is all we need. Sometimes we want to run all the tests for an entire application; at other times we want to run just those tests that focus on a specific subset of the functionality. Some members of the xUnit family and some third-party tools implement Testcase Class Discovery (see Test Discovery), in which the Test Runner finds the test suites by searching either the file system or an executable for test suites.
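The following Ruby sketch shows the idea behind Testcase Class Discovery under one assumption: instead of scanning files or an executable, it searches the classes already loaded into the running Ruby process with ObjectSpace.each_object. The TestCase base class and the two Testcase Classes are hypothetical.

```ruby
# Hypothetical stand-in for an xUnit member's test base class.
class TestCase; end

# Testcase Classes that are never listed anywhere by hand.
class CartTest < TestCase
  def test_new_cart_is_empty; end
end

class LoginTest < TestCase
  def test_rejects_wrong_password; end
end

# Testcase Class Discovery: search the running program (rather than a file
# system or an executable) for every subclass of TestCase.
# `klass < TestCase` is nil for unrelated classes, which select treats as false.
def discover_testcase_classes
  ObjectSpace.each_object(Class).select { |klass| klass < TestCase }.sort_by(&:name)
end

discover_testcase_classes.each { |klass| puts "found #{klass.name}" }
```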

If we do not have this capability, we need to use Test Suite Enumeration (see Test Enumeration), in which we define the overall test suite for the entire system or application as an aggregate of several smaller test suites. To do so, we must define a special Test Suite Factory class whose suite method returns a Test Suite Object containing the collection of Test Methods and other Test Suite Objects to run.

This aggregation of test suites into increasingly larger Suites of Suites is commonly used as a way to include the unit test suite for a class in the test suite for the package or module, which is in turn included in the test suite for the entire system. Such a hierarchical organization supports running test suites with varying degrees of completeness and provides a practical way for developers to run the subset of the tests that is most relevant to the software of interest. It also allows them to run all the tests that exist with a single command before they commit their changes into the source code repository [SCM].

[3] This scheme is called a test fixture in some variants of xUnit, probably because the creators assumed we would have a single Testcase Class per Fixture (page 631).
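A minimal Ruby sketch of Test Suite Enumeration and Suites of Suites follows. TestSuite, the factory modules, and the test names are hypothetical. Symbols stand in for Testcase Objects so that only the aggregation structure is visible.

```ruby
# Minimal Test Suite Object: a named collection of tests and/or other suites.
class TestSuite
  attr_reader :name, :tests

  def initialize(name, tests)
    @name = name
    @tests = tests
  end

  # Count leaf tests, descending into nested suites.
  def count_test_cases
    @tests.sum { |test| test.is_a?(TestSuite) ? test.count_test_cases : 1 }
  end
end

# Test Suite Factories that enumerate their members explicitly.
module InvoiceTests
  def self.suite
    TestSuite.new("InvoiceTests", [:test_total, :test_discount])
  end
end

module CustomerTests
  def self.suite
    TestSuite.new("CustomerTests", [:test_name_required])
  end
end

module ShippingTests
  def self.suite
    TestSuite.new("ShippingTests", [:test_flat_rate])
  end
end

# Package-level Suite of Suites...
module BillingPackageTests
  def self.suite
    TestSuite.new("BillingPackageTests", [InvoiceTests.suite, CustomerTests.suite])
  end
end

# ...rolled up again into the suite for the whole system.
module AllTests
  def self.suite
    TestSuite.new("AllTests", [BillingPackageTests.suite, ShippingTests.suite])
  end
end

puts BillingPackageTests.suite.count_test_cases  # → 3
puts AllTests.suite.count_test_cases             # → 4
```

Running only BillingPackageTests.suite corresponds to a developer's focused subset. AllTests.suite is the single command to run before a commit.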

Running Tests

Tests are run by using a Test Runner. Several different kinds of Test Runners are available for most members of the xUnit family.

A Graphical Test Runner (see Test Runner) provides a visual way for the user to specify, invoke, and observe the results of running a test suite. Some Graphical Test Runners allow the user to specify a test by typing in the name of a Test Suite Factory; others provide a graphical Test Tree Explorer (see Test Runner) that can be used to select a specific Test Method to execute from within a tree of test suites, where the Test Methods serve as the tree’s leaves. Many Graphical Test Runners are integrated into an IDE to make running tests as easy as selecting the Run As Test command from a context menu.

A Command-Line Test Runner (see Test Runner) can be used to execute tests when running the test suite from the command line, as in Figure 7.2. The name of the Test Suite Factory that should be used to create the test suite is included as a command-line parameter. Command-Line Test Runners are most commonly used when invoking the Test Runner from Integration Build [SCM] scripts or sometimes from within an IDE.

>ruby testrunner.rb c:/examples/tests/SmellHandlerTest.rb

Loaded suite SmellHandlerTest

Started

.....

Finished in 0.016 seconds.

5 tests, 6 assertions, 0 failures, 0 errors

>Exit code: 0

Figure 7.2 Using a Command-Line Test Runner to run tests from the command line.
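As a rough sketch of what sits behind such a command line, the Ruby code below looks up the Test Suite Factory named by the first argument and runs its tests. It then produces progress marks and a summary in the style of Figure 7.2. The TestCase class, CalculatorTest, and command_line_run are hypothetical. Unlike the real output in the figure, the sketch does not count assertions or time the run.

```ruby
class AssertionFailedError < StandardError; end

# Hypothetical minimal TestCase; each instance wraps one Test Method.
class TestCase
  # Test Suite Factory: one object per public test_* method, found by reflection.
  def self.suite
    public_instance_methods(true).grep(/\Atest_/).sort.map { |name| new(name) }
  end

  def initialize(method_name)
    @method_name = method_name
  end

  def assert(condition, message = "assertion failed")
    raise AssertionFailedError, message unless condition
  end

  # Runs the wrapped Test Method and classifies the outcome.
  def run
    send(@method_name)
    :pass
  rescue AssertionFailedError
    :failure
  rescue StandardError
    :error
  end
end

# Hypothetical Testcase Class with one passing, one failing, one erroring test.
class CalculatorTest < TestCase
  def test_adds
    assert(1 + 1 == 2)
  end

  def test_fails_on_purpose
    assert(2 * 2 == 5, "deliberate failure")
  end

  def test_errors_on_purpose
    Integer("not a number")  # raises ArgumentError, an unexpected error
  end
end

PROGRESS_MARKS = { pass: ".", failure: "F", error: "E" }.freeze

# Command-Line Test Runner core: argv[0] names the Test Suite Factory.
# Returns [exit_code, report_lines]; the exit code is 0 only if every test passes.
def command_line_run(argv)
  factory = Object.const_get(argv.fetch(0))
  outcomes = factory.suite.map(&:run)
  summary = "#{outcomes.size} tests, #{outcomes.count(:failure)} failures, #{outcomes.count(:error)} errors"
  report = ["Loaded suite #{factory.name}", "Started",
            outcomes.map { |outcome| PROGRESS_MARKS.fetch(outcome) }.join, summary]
  [outcomes.all?(:pass) ? 0 : 1, report]
end

# As a script entry point (e.g. `ruby runner.rb CalculatorTest`):
#   code, report = command_line_run(ARGV)
#   puts report
#   exit code
```

A nonzero exit code is what lets an Integration Build script detect a broken build without parsing the text.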

Test Results

Naturally, the main reason for running automated tests is to determine the results. For the results to be meaningful, we need a standard way to describe them. In general, members of the xUnit family follow the Hollywood principle (“Don’t call us; we’ll call you”). In other words, “No news is good news”; the tests will “call you” when a problem occurs. Thus we can focus on the test failures rather than inspecting a bunch of passing tests as they roll by.

Test results are classified into one of three categories, each of which is treated slightly differently. When a test runs without any errors or failures, it is considered to be successful. In general, xUnit does not do anything special for successful tests; there should be no need to examine any output when a Self-Checking Test (page 26) passes.

A test is considered to have failed when an assertion fails. That is, the test asserts that something should be true by calling an Assertion Method, but that assertion turns out not to be the case. When it fails, an Assertion Method throws an assertion failure exception (or whatever facsimile the programming language supports). The Test Automation Framework increments a counter for each failure and adds the failure details to a list of failures; this list can be examined more closely later, after the test run is complete. The failure of a single test, while significant, does not prevent the remaining tests from being run; this is in keeping with the principle Keep Tests Independent (see page 42).

A test is considered to have an error when either the SUT or the test itself fails in an unexpected way. Depending on the language being used, this problem could consist of an uncaught exception, a raised error, or something else. As with assertion failures, the Test Automation Framework increments a counter for each error and adds the error details to a list of errors, which can then be examined after the test run is complete.

For each test error or test failure, xUnit records information that can be examined to help understand exactly what went wrong. At a minimum, the names of the Test Method and Testcase Class are recorded, along with the nature of the problem (whether it was a failed assertion or a software error). In most Graphical Test Runners that are integrated with an IDE, one merely has to (double-)click on the appropriate line in the traceback to see the source code that emitted the failure or caused the error.
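The three outcomes and the recorded details can be sketched in Ruby as follows. TestResult, its Problem record, AccountTest, and AssertionFailedError are hypothetical names, not any xUnit member's API. A failure or error in one test is recorded, and the run then simply moves on to the next test.

```ruby
class AssertionFailedError < StandardError; end

# Collects outcomes; a failure or an error never stops the run.
class TestResult
  Problem = Struct.new(:test_class, :test_method, :kind, :message, :location)

  attr_reader :run_count, :failures, :errors

  def initialize
    @run_count = 0
    @failures = []
    @errors = []
  end

  # Runs one Test Method on a fresh instance and classifies what happens.
  def run(test_class, test_method)
    @run_count += 1
    test_class.new.send(test_method)
  rescue AssertionFailedError => e
    @failures << record(test_class, test_method, :failure, e)
  rescue StandardError => e
    @errors << record(test_class, test_method, :error, e)
  end

  private

  # Keep the class and method names, the nature of the problem, and where it arose.
  def record(test_class, test_method, kind, exception)
    Problem.new(test_class.name, test_method, kind, exception.message,
                exception.backtrace&.first)
  end
end

class AccountTest
  def assert_equal(expected, actual)
    return if expected == actual
    raise AssertionFailedError, "expected #{expected.inspect} but was #{actual.inspect}"
  end

  def test_passes; assert_equal(4, 2 + 2); end
  def test_fails;  assert_equal(5, 2 + 2); end
  def test_errors; Integer("not a number"); end  # unexpected ArgumentError
end

result = TestResult.new
%i[test_passes test_fails test_errors].each { |m| result.run(AccountTest, m) }
puts "#{result.run_count} run, #{result.failures.size} failure(s), #{result.errors.size} error(s)"
(result.failures + result.errors).each do |problem|
  puts "#{problem.kind}: #{problem.test_class}##{problem.test_method}: #{problem.message}"
end
```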

Because the name test error sounds more drastic than a test failure, some test automaters try to catch all errors raised by the SUT and turn them into test failures. This is simply unnecessary. Ironically, in most cases it is easier to determine the cause of a test error than the cause of a test failure: The stack trace for a test error will typically pinpoint the problem code within the SUT, whereas the stack trace for a test failure merely shows the location in the test where the failed assertion was made. It is, however, worth using Guard Assertions (page 490) to avoid executing code within the Test Method that would result in a test error being raised from within the Test Method itself.[4] This is just a normal part of verifying the expected outcome of exercising the SUT and does not remove useful diagnostic tracebacks.

[4] For example, before executing an assertion on the contents of a field of an object returned by the SUT, it is worthwhile to assertNotNull on the object reference so as to avoid a “null reference” error.
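A minimal Ruby sketch of the Guard Assertion described in the footnote follows. It assumes a hypothetical CustomerDirectory SUT whose find method returns nil for an unknown id. assert_not_nil and assert_equal are hand-rolled stand-ins for xUnit Assertion Methods.

```ruby
class AssertionFailedError < StandardError; end

# Hypothetical SUT: find returns nil when no customer has the given id.
Customer = Struct.new(:id, :name)

class CustomerDirectory
  def initialize
    @customers = { 1 => Customer.new(1, "Ada") }
  end

  def find(id)
    @customers[id]
  end
end

class CustomerDirectoryTest
  def test_finds_known_customer
    check_name_of(1, "Ada")
  end

  # Deliberately fails, to show the Guard Assertion at work.
  def test_unknown_customer_fails_cleanly
    check_name_of(2, "Ada")
  end

  private

  def check_name_of(id, expected_name)
    customer = CustomerDirectory.new.find(id)
    # Guard Assertion: report a test failure instead of letting
    # customer.name raise NoMethodError on nil (a test error).
    assert_not_nil(customer, "no customer with id #{id}")
    assert_equal(expected_name, customer.name)
  end

  def assert_not_nil(object, message)
    raise AssertionFailedError, message if object.nil?
  end

  def assert_equal(expected, actual)
    return if expected == actual
    raise AssertionFailedError, "expected #{expected.inspect} but was #{actual.inspect}"
  end
end
```

Without the guard, the second test would report a NoMethodError on nil (a test error) instead of the more descriptive failure message.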

81

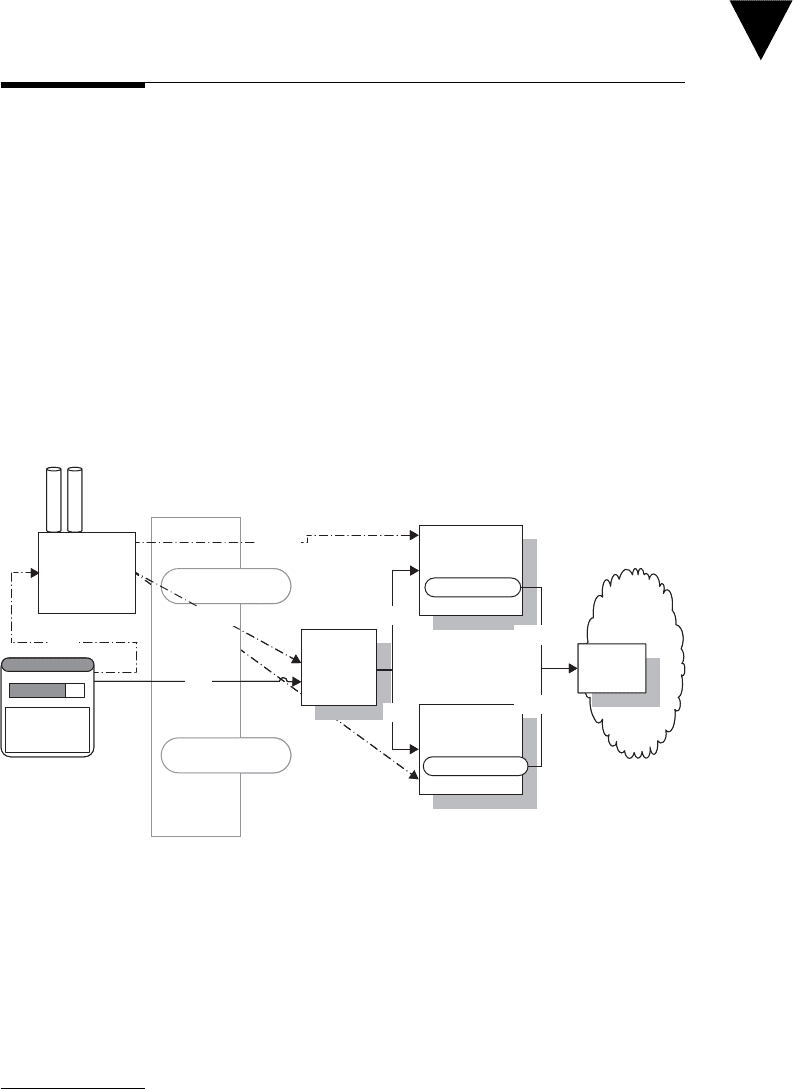

Under the xUnit Covers

The description thus far has focused on Test Methods and Testcase Classes with the odd mention of test suites. This simplified “compile time” view is enough for most people to get started writing automated unit tests in xUnit. It is possible to use xUnit without any further understanding of how the Test Automation Framework operates, but the lack of more extensive knowledge is likely to lead to confusion when building and reusing test fixtures. Thus it is better to understand how xUnit actually runs the Test Methods. In most[5] members of the xUnit family, each Test Method is represented at runtime by a Testcase Object (page 382) because it is a lot easier to manipulate tests if they are “first-class” objects (Figure 7.3). The Testcase Objects are aggregated into Test Suite Objects, which can then be used to run many tests with a single user action.

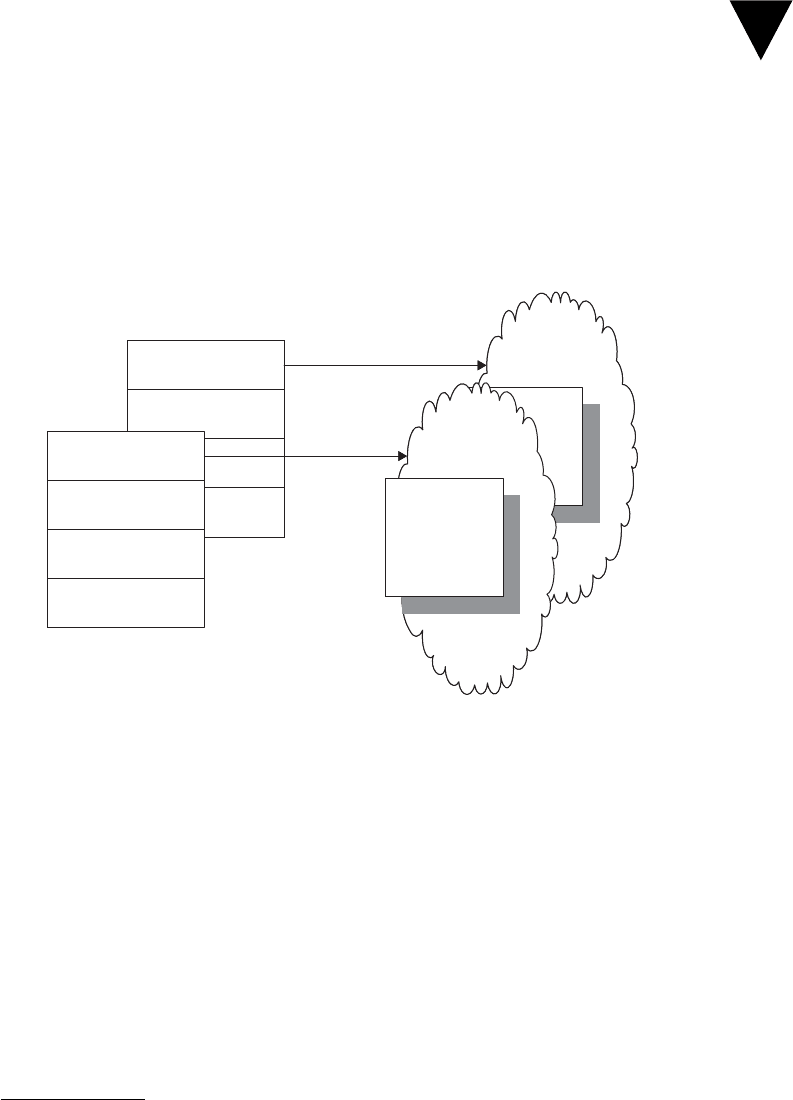

Figure 7.3 The runtime test structure as seen by the Test Automation Framework. At runtime, the Test Runner asks the Testcase Class or a Test Suite Factory to instantiate one Testcase Object for each Test Method, with the objects being wrapped up in a single Test Suite Object. The Test Runner tells this Composite [GOF] object to run its tests and collect the results. Each Testcase Object runs one Test Method.

[5] NUnit is a known exception, and others may exist. See the sidebar “There’s Always an Exception” (page 384) for more information.


Test Commands

The Test Runner cannot possibly know how to call each Test Method individually. To avoid the need for this, most members of the xUnit family convert each Test Method into a Command [GOF] object with a run method. To create these Testcase Objects, the Test Runner calls the suite method of the Testcase Class to get a Test Suite Object. It then calls the run method via the standard test interface. The run method of a Testcase Object executes the specific Test Method for which it was instantiated and reports whether it passed or failed. The run method of a Test Suite Object iterates over all the members of the collection of tests, keeping track of how many tests were run and which ones failed.
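The following Ruby sketch shows a Testcase Object as a Command. Each instance wraps one Test Method name, and the runner calls only the generic run(result) method. TestCase, TestResult, and StackTest are hypothetical. A plain Array stands in for the Test Suite Object, and unexpected errors are deliberately left unhandled to keep the sketch short.

```ruby
class AssertionFailedError < StandardError; end

# Collects what happened during a run (errors are not handled in this sketch).
class TestResult
  attr_reader :run_count, :failed_tests

  def initialize
    @run_count = 0
    @failed_tests = []
  end

  def test_started
    @run_count += 1
  end

  def add_failure(test)
    @failed_tests << test.name
  end
end

# Testcase Object: a Command that wraps exactly one Test Method.
class TestCase
  attr_reader :name

  def initialize(method_name)
    @method_name = method_name
    @name = "#{self.class.name}##{method_name}"
  end

  # The standard test interface: the runner only ever calls run(result).
  def run(result)
    result.test_started
    send(@method_name)
  rescue AssertionFailedError
    result.add_failure(self)
  end

  def assert(condition)
    raise AssertionFailedError, "assertion failed" unless condition
  end
end

class StackTest < TestCase
  # Test Suite Factory (explicit here): one Testcase Object per Test Method.
  def self.suite
    [new(:test_push), new(:test_pop_deliberately_fails)]
  end

  def test_push
    stack = []
    stack.push(1)
    assert(stack.size == 1)
  end

  def test_pop_deliberately_fails
    assert([1, 2].pop == 1)  # wrong expectation: pop returns 2
  end
end

result = TestResult.new
StackTest.suite.each { |test| test.run(result) }
puts "#{result.run_count} run; failed: #{result.failed_tests.inspect}"
```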

Test Suite Objects

A Test Suite Object is a Composite object that implements the same standard test interface that all Testcase Objects implement. That interface (implicit in languages lacking a type or interface construct) requires a run method. The expectation is that when run is invoked, all of the tests contained in the receiver will be run. A Testcase Object is itself a “test” and will run the corresponding Test Method. For a Test Suite Object, running means invoking run on all of the Testcase Objects it contains. The value of using a Composite Command is that it turns the processes of running one test and running many tests into exactly the same process.
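Here is a minimal Ruby sketch of the Composite. The leaf (TestCaseObject) and the suite (TestSuiteObject) both answer run(log), so a suite can hold tests and other suites alike. Both class names are hypothetical. As a simplification, a block stands in for the Test Method.

```ruby
# Leaf: a Testcase Object. A block stands in for the Test Method.
class TestCaseObject
  def initialize(name, &test_body)
    @name = name
    @test_body = test_body
  end

  def run(log)
    @test_body.call
    log << "#{@name}: pass"
  rescue StandardError => e
    log << "#{@name}: #{e.class}"
  end

  def count_test_cases
    1
  end
end

# Composite: a Test Suite Object with the same interface as its members.
class TestSuiteObject
  def initialize(*tests)
    @tests = tests
  end

  # Running a suite is the same call as running one test; it just delegates.
  def run(log)
    @tests.each { |test| test.run(log) }
  end

  def count_test_cases
    @tests.sum(&:count_test_cases)
  end
end

arithmetic = TestSuiteObject.new(
  TestCaseObject.new("adds") { raise "bad sum" unless 1 + 1 == 2 },
  TestCaseObject.new("divides") { 1 / 0 }  # raises ZeroDivisionError
)
everything = TestSuiteObject.new(arithmetic, TestCaseObject.new("joins") { "a" + "b" })

log = []
everything.run(log)  # one call runs the whole tree
puts log
```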

To this point, we have assumed that we already have the Test Suite Object instantiated. But where did it come from? By convention, each Testcase Class acts as a Test Suite Factory. The Test Suite Factory provides a class method called suite that returns a Test Suite Object containing one Testcase Object for each Test Method in the class. In languages that support some form of reflection, xUnit may use Test Method Discovery to discover the test methods and automatically construct the Test Suite Object containing them. Other members of the xUnit family require test automaters to implement the suite method themselves; this kind of Test Enumeration takes more effort and is more likely to lead to Lost Tests (see Production Bugs on page 268).
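The Ruby sketch below contrasts Test Method Discovery with a hand-written Test Enumeration that was never updated. Discovery uses reflection (public_instance_methods) inside the suite method. All class and method names are hypothetical. The difference between the two lists is exactly the set of Lost Tests.

```ruby
class TestCase
  attr_reader :method_name  # deliberately not named test_*, or discovery would pick it up

  def initialize(method_name)
    @method_name = method_name
  end

  # Test Method Discovery: reflect on the class for every public test_* method.
  def self.suite
    public_instance_methods(true).grep(/\Atest_/).sort.map { |name| new(name) }
  end
end

class OrderTest < TestCase
  def test_add_item; end
  def test_remove_item; end
  def test_total; end  # imagine this one was added later
end

# Hand-written Test Enumeration that nobody updated when test_total was added.
class EnumeratedOrderTest < OrderTest
  def self.suite
    [new(:test_add_item), new(:test_remove_item)]
  end
end

discovered = OrderTest.suite.map(&:method_name)
enumerated = EnumeratedOrderTest.suite.map(&:method_name)
lost_tests = discovered - enumerated  # exist in the class, but never run
puts "Lost Tests: #{lost_tests.inspect}"
```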

xUnit in the Procedural World

Test Automation Frameworks and test-driven development became popular only after object-oriented programming became commonplace. Most members of the xUnit family are implemented in object-oriented programming languages that support the concept of a Testcase Object. Although the lack of objects should not keep us from testing procedural code, it does make writing Self-Checking Tests more labor-intensive and building generic, reusable Test Runners more difficult.

In the absence of objects or classes, we must treat Test Methods as global (public static) procedures. These methods are typically stored in files or modules (or whatever modularity mechanism the language supports). If the language supports the concept of procedure variables (also known as function pointers), we can define a generic Test Suite Procedure (see Test Suite Object) that takes an array of Test Methods (commonly called “test procedures”) as an argument. Typically, the Test Methods must be aggregated into the arrays using Test Enumeration because very few non-object-oriented programming languages support reflection.

If the language does not support any way of treating Test Methods as data, we must define the test suites by writing Test Suite Procedures that make explicit calls to Test Methods and/or other Test Suite Procedures. Test runs may be initiated by defining a main method on the module.
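Ruby is object-oriented, so the following only imitates the procedural style. Module functions play the role of global test procedures, and Method objects play the role of procedure variables. StringUtilTests and its procedures are hypothetical. The sketch shows a generic Test Suite Procedure that takes an array of procedures, a Test Suite Procedure that calls the Test Methods explicitly, and a main-style entry point.

```ruby
# Module functions act as global "test procedures"; no Testcase Class is involved.
module StringUtilTests
  module_function

  def check(condition, label)
    raise "FAILED: #{label}" unless condition
  end

  def test_upcase
    check("abc".upcase == "ABC", "upcase")
  end

  def test_reverse
    check("abc".reverse == "cba", "reverse")
  end

  def test_strip
    check(" x ".strip == "x", "strip")
  end

  # Generic Test Suite Procedure: takes an array of procedure variables
  # (Method objects here) and calls each one; returns how many ran.
  def run_test_procedures(procedures)
    procedures.each(&:call)
    procedures.size
  end

  # Without procedure variables: a Test Suite Procedure with explicit calls.
  def all_tests
    test_upcase
    test_reverse
    test_strip
  end
end

# Test Enumeration by hand: the array of "function pointers".
STRING_SUITE = [StringUtilTests.method(:test_upcase),
                StringUtilTests.method(:test_reverse)].freeze

# "main": start the run when this file is executed directly.
if __FILE__ == $PROGRAM_NAME
  StringUtilTests.run_test_procedures(STRING_SUITE)
  StringUtilTests.all_tests
  puts "all test procedures passed"
end
```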

A final option is to encode the tests as data in a file and use a single Data-Driven Test (page 288) interpreter to execute them. The main disadvantage of this approach is that it restricts the kinds of tests that can be run to those implemented by the Data-Driven Test interpreter, which must itself be written anew for each SUT. This strategy does have the advantage of moving the coding of the actual tests out of the developer arena and into the end-user or tester arena, which makes it particularly appropriate for customer tests.
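Here is a minimal Ruby sketch of the Data-Driven Test approach. It assumes a hypothetical ShippingCalculator SUT, with the tests stored as comma-separated rows that are inlined here rather than read from a file. The interpreter only knows how to check cost, which illustrates the restriction described above. The 2,8 row deliberately expects the wrong cost so that a failure is reported.

```ruby
# Hypothetical SUT: 5 for the first kilogram, 2 for each extra kilogram.
class ShippingCalculator
  def cost(weight_kg)
    weight_kg <= 1 ? 5 : 5 + (weight_kg - 1) * 2
  end
end

# The tests themselves are data (e.g. the contents of a file a tester maintains).
# The 2,8 row deliberately expects the wrong cost, to show a reported failure.
TEST_DATA = <<~CSV
  weight_kg,expected_cost
  1,5
  2,8
  3,9
  10,23
CSV

# Data-Driven Test interpreter: turns each row into one check against the SUT.
def interpret(data, sut)
  rows = data.lines.drop(1).map { |line| line.strip.split(",").map(&:to_i) }
  rows.map do |weight, expected|
    actual = sut.cost(weight)
    { weight: weight, expected: expected, actual: actual, passed: actual == expected }
  end
end

results = interpret(TEST_DATA, ShippingCalculator.new)
results.reject { |r| r[:passed] }.each do |r|
  puts "weight #{r[:weight]}: expected #{r[:expected]}, got #{r[:actual]}"
end
```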

What’s Next?

In this chapter we established the basic terminology for talking about how xUnit tests are put together. Now we turn our attention to a new task, constructing our first test fixture, in Chapter 8, Transient Fixture Management.


Chapter 8 Transient Fixture Management

About This Chapter

Chapter 6, Test Automation Strategy, looked at the strategic decisions that we need to make. That included the definition of the term “fixture” and the selection of a test fixture strategy. Chapter 7, xUnit Basics, established our basic xUnit terminology and diagramming notation. This chapter builds on both of these earlier chapters by focusing on the mechanics of implementing the chosen fixture strategy.

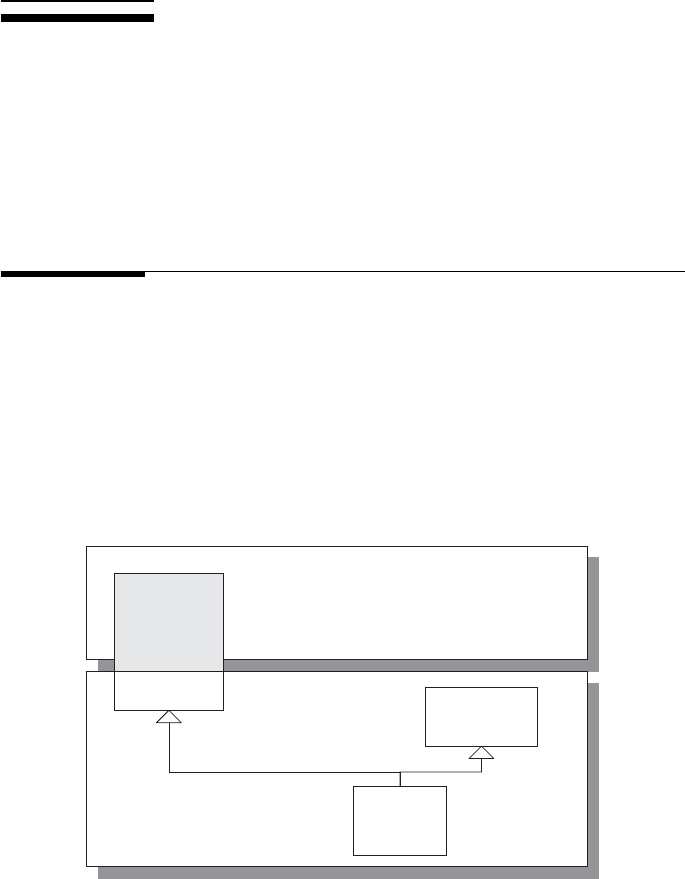

There are several different ways to set up a Fresh Fixture (page 311), and our decision will affect how much effort it takes to write the tests, how much effort it takes to maintain our tests, and whether we achieve Tests as Documentation (see page 23). Persistent Fresh Fixtures (see Fresh Fixture) are set up the same way as Transient Fresh Fixtures (see Fresh Fixture), albeit with some additional factors to consider related to fixture teardown (Figure 8.1). Shared Fixtures (page 317) introduce another set of considerations. Persistent Fresh Fixtures and Shared Fixtures are discussed in detail in Chapter 9.

Figure 8.1 Transient Fresh Fixture. Fresh Fixtures come in two flavors: Transient and Persistent. Both require fixture setup; the latter also requires fixture teardown.

Test Fixture Terminology

Before we can talk about setting up a fixture, we need to agree on what a fixture is.

What Is a Fixture?

Every test consists of four parts, as described in Four-Phase Test (page 358). The first part is where we create the SUT and everything it depends on and where we put those elements into the state required to exercise the SUT. In xUnit, we call everything we need in place to exercise the SUT the test fixture, and we call the part of the test logic that we execute to set it up the fixture setup.

The most common way to set up the fixture is using front door fixture setup, that is, calling the appropriate methods on the SUT to put it into the starting state. This may require constructing other objects and passing them to the SUT as arguments of method calls. When the state of the SUT is stored in other objects or components, we can do Back Door Setup (see Back Door Manipulation on page 327); that is, we can insert the necessary records directly into the other component on which the behavior of the SUT depends. We use Back Door Setup most often with databases or when we need to use a Mock Object (page 544) or Test Double (page 522). These possibilities are covered in Chapter 13, Testing with Databases, and Chapter 11, Using Test Doubles, respectively.
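The two setup styles can be contrasted in a small Ruby sketch. AccountService (the SUT) and AccountStore (the component that holds its state, standing in for a database) are hypothetical.

```ruby
# Hypothetical depended-on component holding the SUT's state (think: a table).
class AccountStore
  attr_reader :balances

  def initialize
    @balances = {}
  end
end

# Hypothetical SUT whose state lives in the AccountStore.
class AccountService
  def initialize(store)
    @store = store
  end

  def open_account(id, opening_balance)
    @store.balances[id] = opening_balance
  end

  def balance(id)
    @store.balances.fetch(id)
  end
end

# Front door fixture setup: reach the starting state through the SUT's own API.
def front_door_fixture
  service = AccountService.new(AccountStore.new)
  service.open_account(:acct1, 100)
  service
end

# Back Door Setup: write the needed record straight into the depended-on
# component, without calling the SUT.
def back_door_fixture
  store = AccountStore.new
  store.balances[:acct1] = 100
  AccountService.new(store)
end

puts front_door_fixture.balance(:acct1)  # → 100
puts back_door_fixture.balance(:acct1)   # → 100
```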

It is worth noting that the term “fixture” is used to mean different things in different kinds of test automation. The xUnit variants for the Microsoft languages call the Testcase Class (page 373) the test fixture. Most other variants of xUnit distinguish between the Testcase Class and the test fixture (or test context) it sets up. In Fit [FitB], the term “fixture” is used to mean the custom-built parts of the Data-Driven Test (page 288) interpreter that we use to define our Higher-Level Language (see page 41). Whenever this book says “test fixture” without further qualifying this term, it refers to the stuff we set up before exercising the SUT. To refer to the class that hosts the Test Methods (page 348), whether it be in Java or C#, Ruby or VB, this book uses Testcase Class.


What Is a Fresh Fixture?

In a Fresh Fixture strategy, we set up a brand-new fixture for every test we run (Figure 8.2). That is, each Testcase Object (page 382) builds its own fixture before exercising the SUT and does so every time it is rerun. That is what makes the fixture “fresh.” As a result, we completely avoid the problems associated with Interacting Tests (see Erratic Test on page 228).

Figure 8.2 A pair of Fresh Fixtures, each with its creator. A Fresh Fixture is built specifically for a single test, used once, and then retired.
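Here is a minimal Ruby sketch of a Fresh Fixture, using a hypothetical StackTest. Each Testcase Object calls setup at the start of every run. Neither test can therefore see state left behind by the other, even across reruns.

```ruby
# Hypothetical Testcase Class; each instance is one Testcase Object.
class StackTest
  attr_reader :fixture

  def initialize(method_name)
    @method_name = method_name
  end

  # Fresh Fixture: built anew by each Testcase Object, every time it runs.
  def setup
    @fixture = []  # the SUT: a brand-new, empty stack
  end

  def run
    setup
    send(@method_name)
  end

  def test_push_adds_one_item
    @fixture.push(:a)
    raise "expected one item" unless @fixture.size == 1
  end

  def test_new_stack_is_empty
    raise "expected an empty stack" unless @fixture.empty?
  end
end

tests = [StackTest.new(:test_push_adds_one_item), StackTest.new(:test_new_stack_is_empty)]
2.times { tests.each(&:run) }  # reruns still pass: nothing leaks between tests
```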

What Is a Transient Fresh Fixture?

When our fixture is an in-memory fixture referenced only by local variables or instance variables,[1] the fixture just “disappears” after every test courtesy of Garbage-Collected Teardown (page 500). When fixtures are persistent, this is not the case. Thus we have some decisions to make about how we implement the Fresh Fixture strategy. In particular, we have two different ways to keep them “fresh.” The obvious option is to tear down the fixture after each test. The less obvious option is to leave the old fixture around and then build a new fixture in such a way that it does not collide with the old fixture.

[1] See the sidebar “There’s Always an Exception” (page 384).
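The three cases can be sketched in Ruby as follows. As an assumption for illustration, a Hash held in a constant stands in for a persistent resource such as a database. The helper method names are hypothetical. SecureRandom, from Ruby's standard library, generates the non-colliding keys.

```ruby
require "securerandom"

# Transient Fresh Fixture: referenced only by a local variable, so it simply
# becomes garbage when the test returns (Garbage-Collected Teardown).
def transient_fixture_test
  customer = { name: "Ada" }
  customer[:name] == "Ada"
end

# Stand-in for a persistent resource (e.g. a database) that outlives each test.
PERSISTENT_STORE = {}

# Option 1: tear the persistent fixture down after each test.
def with_torn_down_fixture
  PERSISTENT_STORE[:customer] = { name: "Ada" }  # fixture setup
  yield PERSISTENT_STORE[:customer]
ensure
  PERSISTENT_STORE.delete(:customer)             # fixture teardown
end

# Option 2: leave old fixtures in place, but give each new one a unique key
# so that it cannot collide with leftovers from earlier tests.
def with_distinct_fixture
  key = "customer-#{SecureRandom.uuid}"
  PERSISTENT_STORE[key] = { name: "Ada" }
  yield key, PERSISTENT_STORE[key]
end
```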
