Middleton W.M. (ed.) Reference Data for Engineers: Radio, Electronics, Computer and Communications


47-30

REFERENCE

DATA

FOR ENGINEERS

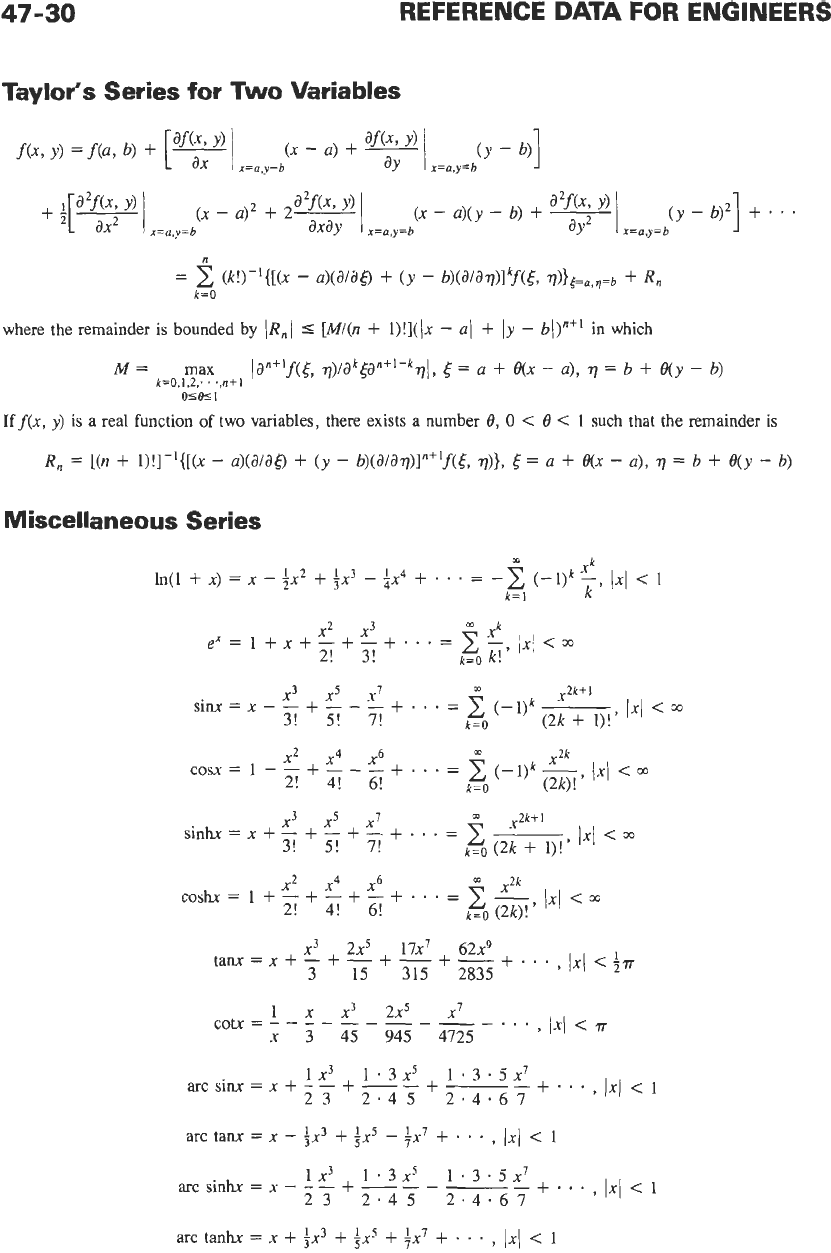

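Taylor's Series for Two Variables

$$f(x,y) = \sum_{k=0}^{n} (k!)^{-1}\left\{\left[(x-a)\frac{\partial}{\partial\xi} + (y-b)\frac{\partial}{\partial\eta}\right]^{k} f(\xi,\eta)\right\}_{\xi=a,\ \eta=b} + R_n$$

where the remainder is bounded by

$$|R_n| \le \frac{M}{(n+1)!}\left(|x-a| + |y-b|\right)^{n+1}$$

in which

$$M = \max\left|\frac{\partial^{n+1} f(\xi,\eta)}{\partial\xi^{k}\,\partial\eta^{n+1-k}}\right|,\qquad \xi = a + \theta(x-a),\quad \eta = b + \theta(y-b),\qquad k = 0, 1, 2, \ldots, n+1,\quad 0 \le \theta \le 1$$

If $f(x,y)$ is a real function of two variables, there exists a number $\theta$, $0 < \theta < 1$, such that the remainder is

$$R_n = [(n+1)!]^{-1}\left\{\left[(x-a)\frac{\partial}{\partial\xi} + (y-b)\frac{\partial}{\partial\eta}\right]^{n+1} f(\xi,\eta)\right\},\qquad \xi = a + \theta(x-a),\quad \eta = b + \theta(y-b)$$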
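As a numerical illustration, the sketch below sums the two-variable series and checks the error against the bound $|R_n|$. It assumes the illustrative function $f(x,y) = e^x \sin y$ (not from the text), chosen because its mixed partials have the closed form $e^{\xi}\sin(\eta + j\pi/2)$; the helper names are hypothetical.

```python
import math

# Illustrative function (an assumption, not from the text): f(x, y) = exp(x) * sin(y).
def f(x, y):
    return math.exp(x) * math.sin(y)

def mixed_partial(i, j, xi, eta):
    """d^i/dxi^i d^j/deta^j f(xi, eta) in closed form for this f."""
    return math.exp(xi) * math.sin(eta + j * math.pi / 2)

def taylor2(x, y, a, b, n):
    """Sum of the k = 0..n terms of the two-variable Taylor series about (a, b)."""
    h, g = x - a, y - b
    total = 0.0
    for k in range(n + 1):
        # [h d/dxi + g d/deta]^k expands binomially because the partial derivatives commute
        term = sum(math.comb(k, i) * h**i * g**(k - i) * mixed_partial(i, k - i, a, b)
                   for i in range(k + 1))
        total += term / math.factorial(k)
    return total

def remainder_bound(x, y, a, b, n):
    """M / (n+1)! * (|x-a| + |y-b|)^(n+1), using M <= exp(max(a, x)) for this f."""
    M = math.exp(max(a, x))
    return M / math.factorial(n + 1) * (abs(x - a) + abs(y - b)) ** (n + 1)

approx = taylor2(0.3, 0.2, 0.0, 0.0, 6)
error = abs(approx - f(0.3, 0.2))
```

The bound on $M$ works here because every mixed partial of this $f$ is at most $e^{\xi}$ in magnitude, and $\xi$ lies between $a$ and $x$.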

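Miscellaneous Series

$$\sin x = x - \frac{x^3}{3!} + \frac{x^5}{5!} - \frac{x^7}{7!} + \cdots = \sum_{k=0}^{\infty}\frac{(-1)^k x^{2k+1}}{(2k+1)!},\qquad |x| < \infty$$

$$\cos x = 1 - \frac{x^2}{2!} + \frac{x^4}{4!} - \frac{x^6}{6!} + \cdots = \sum_{k=0}^{\infty}\frac{(-1)^k x^{2k}}{(2k)!},\qquad |x| < \infty$$

$$\sinh x = x + \frac{x^3}{3!} + \frac{x^5}{5!} + \frac{x^7}{7!} + \cdots = \sum_{k=0}^{\infty}\frac{x^{2k+1}}{(2k+1)!},\qquad |x| < \infty$$

$$\cosh x = 1 + \frac{x^2}{2!} + \frac{x^4}{4!} + \frac{x^6}{6!} + \cdots = \sum_{k=0}^{\infty}\frac{x^{2k}}{(2k)!},\qquad |x| < \infty$$

$$\tan x = x + \frac{x^3}{3} + \frac{2x^5}{15} + \frac{17x^7}{315} + \frac{62x^9}{2835} + \cdots,\qquad |x| < \tfrac{\pi}{2}$$

$$\cot x = \frac{1}{x} - \frac{x}{3} - \frac{x^3}{45} - \frac{2x^5}{945} - \frac{x^7}{4725} - \cdots,\qquad 0 < |x| < \pi$$

$$\arcsin x = x + \frac{x^3}{2\cdot 3} + \frac{1\cdot 3\,x^5}{2\cdot 4\cdot 5} + \frac{1\cdot 3\cdot 5\,x^7}{2\cdot 4\cdot 6\cdot 7} + \cdots,\qquad |x| < 1$$

$$\arctan x = x - \frac{x^3}{3} + \frac{x^5}{5} - \frac{x^7}{7} + \cdots,\qquad |x| < 1$$

$$\operatorname{arcsinh} x = x - \frac{x^3}{2\cdot 3} + \frac{1\cdot 3\,x^5}{2\cdot 4\cdot 5} - \frac{1\cdot 3\cdot 5\,x^7}{2\cdot 4\cdot 6\cdot 7} + \cdots,\qquad |x| < 1$$

$$\operatorname{arctanh} x = x + \frac{x^3}{3} + \frac{x^5}{5} + \frac{x^7}{7} + \cdots,\qquad |x| < 1$$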
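The partial sums of several of these series can be compared directly with Python's `math` library. This is a minimal sketch; the function names and term counts are illustrative assumptions, and the arcsin general term $(2k)!\,x^{2k+1}/[4^k (k!)^2 (2k+1)]$ is the closed form of the coefficients $1,\ 1/(2\cdot3),\ 1\cdot3/(2\cdot4\cdot5), \ldots$ listed above.

```python
import math

def sin_series(x, terms=12):
    # sum_{k>=0} (-1)^k x^(2k+1) / (2k+1)!
    return sum((-1) ** k * x ** (2 * k + 1) / math.factorial(2 * k + 1) for k in range(terms))

def cosh_series(x, terms=12):
    # sum_{k>=0} x^(2k) / (2k)!
    return sum(x ** (2 * k) / math.factorial(2 * k) for k in range(terms))

def tan_series(x):
    # the five terms listed above; useful only for small |x| (convergence needs |x| < pi/2)
    return x + x**3 / 3 + 2 * x**5 / 15 + 17 * x**7 / 315 + 62 * x**9 / 2835

def arcsin_series(x, terms=60):
    # general term (2k)! / (4^k (k!)^2 (2k+1)) * x^(2k+1)
    return sum(math.factorial(2 * k) / (4 ** k * math.factorial(k) ** 2 * (2 * k + 1))
               * x ** (2 * k + 1) for k in range(terms))

def arctanh_series(x, terms=60):
    # sum_{k>=0} x^(2k+1) / (2k+1)
    return sum(x ** (2 * k + 1) / (2 * k + 1) for k in range(terms))
```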

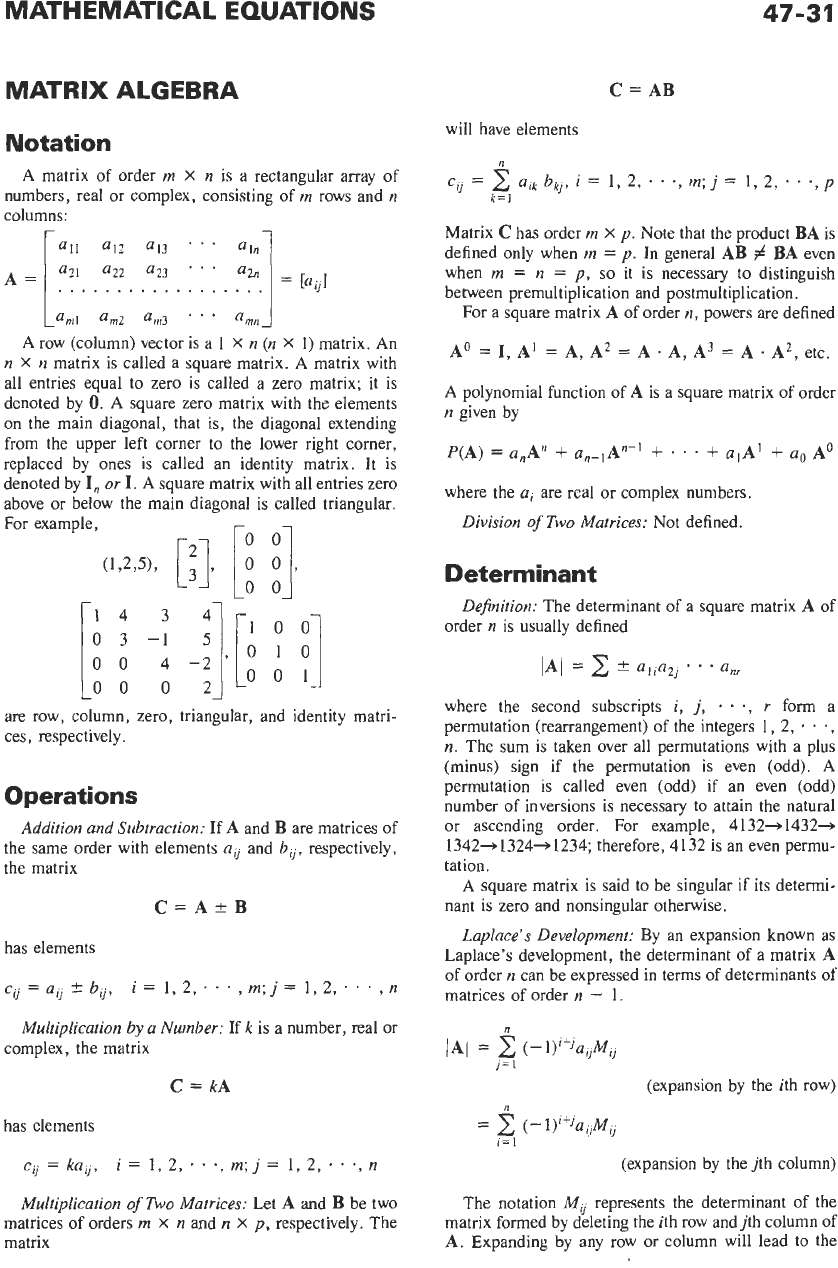

MATHEMATICAL EQUATIONS

MATRIX ALGEBRA

47-31

Notation

A matrix of order $m \times n$ is a rectangular array of numbers, real or complex, consisting of $m$ rows and $n$ columns:

$$A = [a_{ij}] = \begin{bmatrix} a_{11} & a_{12} & a_{13} & \cdots & a_{1n}\\ a_{21} & a_{22} & a_{23} & \cdots & a_{2n}\\ \vdots & \vdots & \vdots & & \vdots\\ a_{m1} & a_{m2} & a_{m3} & \cdots & a_{mn}\end{bmatrix}$$

A row (column) vector is a $1 \times n$ ($n \times 1$) matrix. An $n \times n$ matrix is called a square matrix. A matrix with all entries equal to zero is called a zero matrix; it is denoted by $0$. A square zero matrix with the elements on the main diagonal, that is, the diagonal extending from the upper left corner to the lower right corner, replaced by ones is called an identity matrix. It is denoted by $I_n$ or $I$. A square matrix with all entries zero above or below the main diagonal is called triangular. For example,

$$\begin{bmatrix}1 & 2 & 3\end{bmatrix},\quad \begin{bmatrix}1\\ 2\\ 3\end{bmatrix},\quad \begin{bmatrix}0 & 0\\ 0 & 0\end{bmatrix},\quad \begin{bmatrix}1 & 2 & 3\\ 0 & 4 & 5\\ 0 & 0 & 6\end{bmatrix},\quad \begin{bmatrix}1 & 0 & 0\\ 0 & 1 & 0\\ 0 & 0 & 1\end{bmatrix}$$

are row, column, zero, triangular, and identity matrices, respectively.

Operations

Addition and Subtraction: If $A$ and $B$ are matrices of the same order with elements $a_{ij}$ and $b_{ij}$, respectively, the matrix $C = A \pm B$ has elements

$$c_{ij} = a_{ij} \pm b_{ij},\qquad i = 1, 2, \ldots, m;\quad j = 1, 2, \ldots, n$$

Multiplication by a Number: If $k$ is a number, real or complex, the matrix $C = kA$ has elements

$$c_{ij} = k\,a_{ij},\qquad i = 1, 2, \ldots, m;\quad j = 1, 2, \ldots, n$$

Multiplication of Two Matrices: Let $A$ and $B$ be two matrices of orders $m \times n$ and $n \times p$, respectively. The matrix $C = AB$ will have elements

$$c_{ij} = \sum_{k=1}^{n} a_{ik}\,b_{kj},\qquad i = 1, 2, \ldots, m;\quad j = 1, 2, \ldots, p$$

Matrix $C$ has order $m \times p$. Note that the product $BA$ is defined only when $m = p$. In general $AB \ne BA$ even when $m = n = p$, so it is necessary to distinguish between premultiplication and postmultiplication.
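The element formula $c_{ij} = \sum_k a_{ik} b_{kj}$ translates directly into nested loops. This minimal sketch uses plain Python lists of rows (an assumption; no matrix library) and shows, for one pair of $2 \times 2$ matrices, that $AB \ne BA$.

```python
def matmul(A, B):
    """C = AB for an m x n list-of-rows A and an n x p list-of-rows B."""
    m, n = len(A), len(A[0])
    if len(B) != n:
        raise ValueError("number of columns of A must equal number of rows of B")
    p = len(B[0])
    # c_ij = sum_{k=1}^{n} a_ik * b_kj; the result has order m x p
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(p)] for i in range(m)]

A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]
AB = matmul(A, B)   # postmultiplication of A by B: [[2, 1], [4, 3]]
BA = matmul(B, A)   # premultiplication of A by B:  [[3, 4], [1, 2]]
```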

For a square matrix $A$ of order $n$, powers are defined

$$A^0 = I,\quad A^1 = A,\quad A^2 = A\cdot A,\quad A^3 = A\cdot A^2,\ \text{etc.}$$

A polynomial function of $A$ is a square matrix of order $n$ given by

$$P(A) = a_n A^n + a_{n-1} A^{n-1} + \cdots + a_1 A^1 + a_0 A^0$$

where the $a_i$ are real or complex numbers.

Division of Two Matrices: Not defined.

Determinant

Definition: The determinant of a square matrix $A$ of order $n$ is usually defined

$$|A| = \sum \pm\, a_{1i}\,a_{2j}\cdots a_{nr}$$

where the second subscripts $i, j, \ldots, r$ form a permutation (rearrangement) of the integers $1, 2, \ldots, n$. The sum is taken over all permutations with a plus (minus) sign if the permutation is even (odd). A permutation is called even (odd) if an even (odd) number of inversions is necessary to attain the natural or ascending order. For example, $4132 \to 1432 \to 1342 \to 1324 \to 1234$; therefore, 4132 is an even permutation.

A square matrix is said to be singular if its determinant is zero and nonsingular otherwise.
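The permutation definition can be coded literally: sum one product per permutation, with the sign fixed by the parity of the number of inversions. This is a sketch for small matrices only (it does $n!$ work); the function names are illustrative.

```python
from itertools import permutations
from math import prod

def inversions(perm):
    """Number of pairs (s, t), s < t, that are out of natural order."""
    return sum(1 for s in range(len(perm)) for t in range(s + 1, len(perm)) if perm[s] > perm[t])

def det_by_permutations(A):
    n = len(A)
    total = 0
    for perm in permutations(range(n)):
        sign = -1 if inversions(perm) % 2 else 1   # even permutation: +, odd: -
        # one factor from each row: a_{1i} a_{2j} ... a_{nr}
        total += sign * prod(A[row][perm[row]] for row in range(n))
    return total
```

For the text's example, `inversions((4, 1, 3, 2))` counts the four out-of-order pairs, confirming that 4132 is even.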

Laplace's Development: By an expansion known as Laplace's development, the determinant of a matrix $A$ of order $n$ can be expressed in terms of determinants of matrices of order $n-1$:

$$|A| = \sum_{j=1}^{n} (-1)^{i+j}\, a_{ij}\, M_{ij}\qquad \text{(expansion by the } i\text{th row)}$$

$$|A| = \sum_{i=1}^{n} (-1)^{i+j}\, a_{ij}\, M_{ij}\qquad \text{(expansion by the } j\text{th column)}$$

The notation $M_{ij}$ represents the determinant of the matrix formed by deleting the $i$th row and $j$th column of $A$. Expanding by any row or column will lead to the

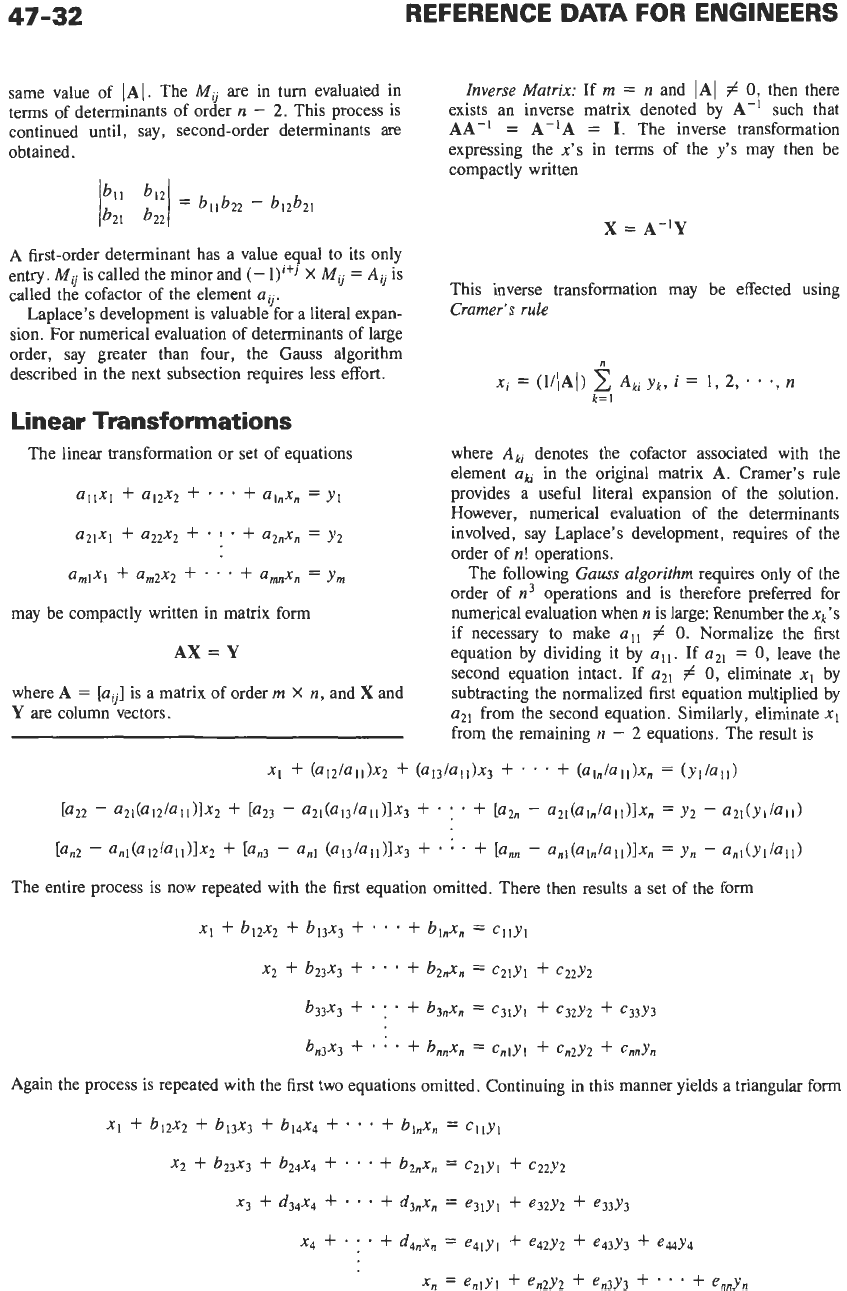


same value of $|A|$. The $M_{ij}$ are in turn evaluated in terms of determinants of order $n-2$. This process is continued until, say, second-order determinants are obtained. A first-order determinant has a value equal to its only entry. $M_{ij}$ is called the minor and $(-1)^{i+j} \times M_{ij} = A_{ij}$ is called the cofactor of the element $a_{ij}$.

Laplace's development is valuable for a literal expansion. For numerical evaluation of determinants of large order, say greater than four, the Gauss algorithm described in the next subsection requires less effort.
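A recursive sketch of Laplace's development, continuing down to first-order determinants. The helper names `minor` and `cofactor` are illustrative; `minor` returns the reduced matrix, whose determinant is $M_{ij}$. The example checks that expansion by a different row, or by a column, gives the same $|A|$.

```python
def minor(A, i, j):
    """Matrix formed by deleting row i and column j of A (0-based indices)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(A) if k != i]

def det_laplace(A, row=0):
    n = len(A)
    if n == 1:
        return A[0][0]   # a first-order determinant equals its only entry
    # expansion by the given row: sum_j (-1)^(i+j) a_ij M_ij
    return sum((-1) ** (row + j) * A[row][j] * det_laplace(minor(A, row, j)) for j in range(n))

def cofactor(A, i, j):
    """A_ij = (-1)^(i+j) M_ij."""
    return (-1) ** (i + j) * det_laplace(minor(A, i, j))

M = [[2, 0, 1], [1, 3, 2], [1, 1, 2]]
by_row_0 = det_laplace(M)
by_row_2 = det_laplace(M, row=2)
by_col_1 = sum(M[i][1] * cofactor(M, i, 1) for i in range(3))
```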

Linear Transformations

The linear transformation or set of equations

$$\begin{aligned} a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= y_1\\ a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= y_2\\ &\ \ \vdots\\ a_{m1}x_1 + a_{m2}x_2 + \cdots + a_{mn}x_n &= y_m \end{aligned}$$

may be compactly written in matrix form

$$AX = Y$$

where $A = [a_{ij}]$ is a matrix of order $m \times n$, and $X$ and $Y$ are column vectors.

Inverse Matrix: If $m = n$ and $|A| \ne 0$, then there exists an inverse matrix denoted by $A^{-1}$ such that $AA^{-1} = A^{-1}A = I$. The inverse transformation expressing the $x$'s in terms of the $y$'s may then be compactly written

$$X = A^{-1}Y$$

This inverse transformation may be effected using Cramer's rule

$$x_i = |A|^{-1}\sum_{k=1}^{n} A_{ki}\,y_k,\qquad i = 1, 2, \ldots, n$$

where $A_{ki}$ denotes the cofactor associated with the element $a_{ki}$ in the original matrix $A$. Cramer's rule provides a useful literal expansion of the solution. However, numerical evaluation of the determinants involved, say by Laplace's development, requires of the order of $n!$ operations.
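Cramer's rule in the cofactor form above can be sketched with exact rational arithmetic (Python's `fractions.Fraction`, an implementation choice, not part of the text), so the result can be checked exactly against $AX = Y$. The determinant here uses a small recursive Laplace expansion; all names are illustrative.

```python
from fractions import Fraction

def det(A):
    """Determinant by Laplace expansion along the first row."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det([r[:j] + r[j + 1:] for r in A[1:]]) for j in range(len(A)))

def cofactor(A, k, i):
    """A_ki: signed determinant of A with row k and column i deleted."""
    sub = [r[:i] + r[i + 1:] for idx, r in enumerate(A) if idx != k]
    return (-1) ** (k + i) * det(sub)

def cramer_solve(A, y):
    """x_i = (1/|A|) * sum_k A_ki * y_k, for integer A and y."""
    d = det(A)
    if d == 0:
        raise ValueError("|A| = 0: A has no inverse")
    n = len(A)
    return [Fraction(sum(cofactor(A, k, i) * y[k] for k in range(n)), d) for i in range(n)]

x = cramer_solve([[2, 1], [1, 3]], [3, 5])   # 2x1 + x2 = 3, x1 + 3x2 = 5
```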

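The following Gauss algorithm requires only of the order of $n^3$ operations and is therefore preferred for numerical evaluation when $n$ is large: Renumber the $x_k$'s if necessary to make $a_{11} \ne 0$. Normalize the first equation by dividing it by $a_{11}$. If $a_{21} = 0$, leave the second equation intact. If $a_{21} \ne 0$, eliminate $x_1$ by subtracting the normalized first equation multiplied by $a_{21}$ from the second equation. Similarly, eliminate $x_1$ from the remaining $n-2$ equations. The result is

$$\begin{aligned} x_1 + a'_{12}x_2 + \cdots + a'_{1n}x_n &= y'_1\\ a'_{22}x_2 + \cdots + a'_{2n}x_n &= y'_2\\ &\ \ \vdots\\ a'_{n2}x_2 + \cdots + a'_{nn}x_n &= y'_n \end{aligned}$$

The same steps are then applied to the last $n-1$ equations (normalizing by $a'_{22}$), and so on, until a triangular set of equations with unit coefficients on the main diagonal is obtained.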

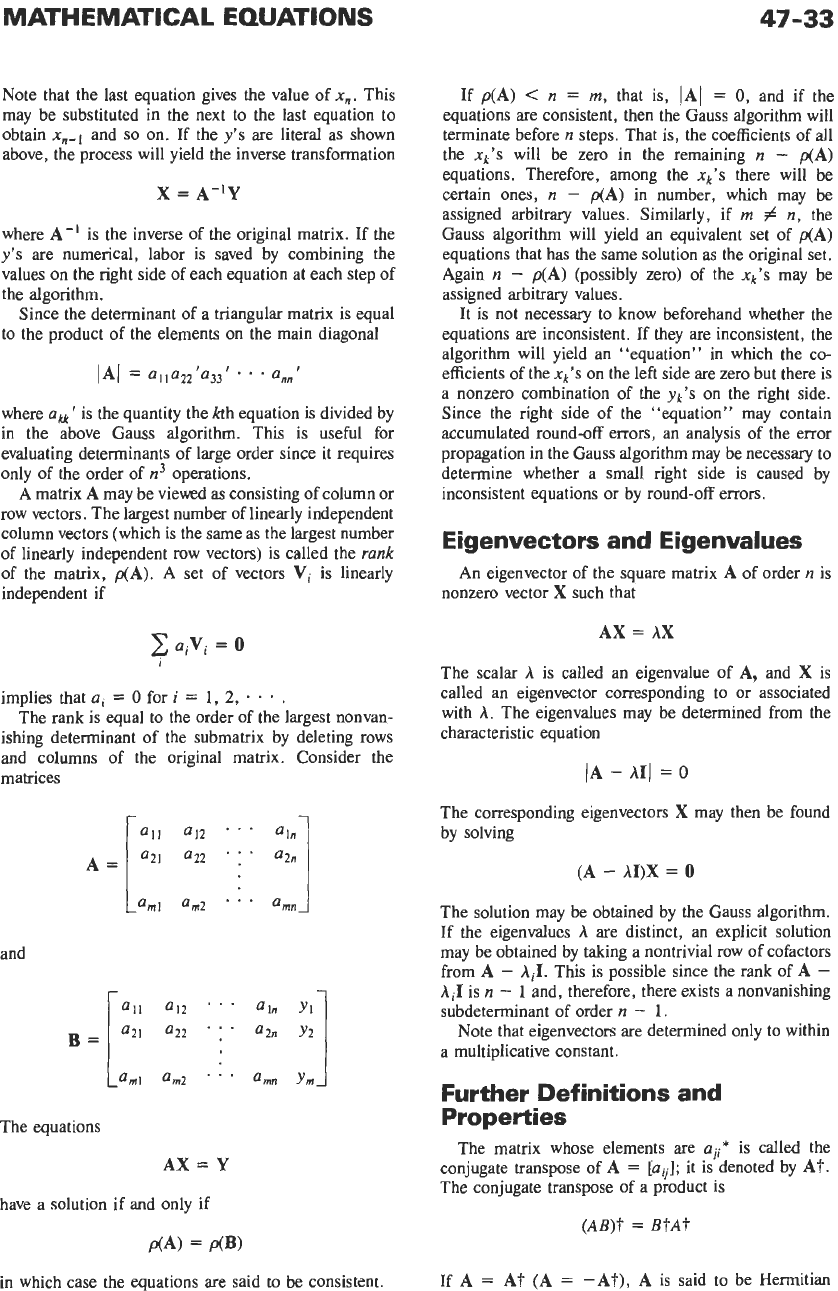


Note that the last equation gives the value of $x_n$. This may be substituted in the next to the last equation to obtain $x_{n-1}$, and so on. If the $y$'s are literal as shown above, the process will yield the inverse transformation $X = A^{-1}Y$, where $A^{-1}$ is the inverse of the original matrix. If the $y$'s are numerical, labor is saved by combining the values on the right side of each equation at each step of the algorithm.

Since the determinant of a triangular matrix is equal to the product of the elements on the main diagonal,

$$|A| = a_{11}\,a'_{22}\,a''_{33}\cdots a^{(n-1)}_{nn}$$

where $a^{(k-1)}_{kk}$ is the quantity the $k$th equation is divided by in the above Gauss algorithm (each interchange of two of the $x_k$'s during renumbering reverses the sign of $|A|$). This is useful for evaluating determinants of large order since it requires only of the order of $n^3$ operations.
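A minimal floating-point sketch of the Gauss algorithm: normalize, eliminate downward, back-substitute, and accumulate $|A|$ as the product of the divisors. One assumption differs from the text: instead of renumbering the $x_k$'s, it interchanges equations (rows) to bring the largest available coefficient into the pivot position; each interchange likewise reverses the sign of $|A|$.

```python
def gauss_solve(A, y):
    """Solve AX = Y (square A) and return (X, |A|)."""
    n = len(A)
    M = [[float(v) for v in A[i]] + [float(y[i])] for i in range(n)]   # augmented rows
    det = 1.0
    for k in range(n):
        # row interchange (assumption; the text renumbers unknowns instead)
        piv = max(range(k, n), key=lambda r: abs(M[r][k]))
        if M[piv][k] == 0.0:
            raise ValueError("matrix is singular")
        if piv != k:
            M[k], M[piv] = M[piv], M[k]
            det = -det
        d = M[k][k]
        det *= d                                   # product of the divisors
        M[k] = [v / d for v in M[k]]               # normalize the kth equation
        for r in range(k + 1, n):                  # eliminate x_k from the equations below
            f = M[r][k]
            if f != 0.0:
                M[r] = [a - f * b for a, b in zip(M[r], M[k])]
    x = [0.0] * n
    for k in range(n - 1, -1, -1):                 # back-substitution, last equation first
        x[k] = M[k][n] - sum(M[k][j] * x[j] for j in range(k + 1, n))
    return x, det

x, d = gauss_solve([[2, 0, 1], [1, 3, 2], [1, 1, 2]], [1, 2, 3])
```

Choosing the largest pivot is a common way to limit the round-off growth mentioned later in this section; it is not required for correctness in exact arithmetic.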

A matrix $A$ may be viewed as consisting of column or row vectors. The largest number of linearly independent column vectors (which is the same as the largest number of linearly independent row vectors) is called the rank of the matrix, $\rho(A)$. A set of vectors $V_i$ is linearly independent if

$$\sum_i \alpha_i V_i = 0$$

implies that $\alpha_i = 0$ for $i = 1, 2, \ldots$. The rank is equal to the order of the largest nonvanishing determinant of the submatrices formed by deleting rows and columns of the original matrix. Consider the matrices

$$A = \begin{bmatrix} a_{11} & \cdots & a_{1n}\\ \vdots & & \vdots\\ a_{m1} & \cdots & a_{mn}\end{bmatrix}\quad\text{and}\quad B = \begin{bmatrix} a_{11} & \cdots & a_{1n} & y_1\\ \vdots & & \vdots & \vdots\\ a_{m1} & \cdots & a_{mn} & y_m\end{bmatrix}$$

The equations $AX = Y$ have a solution if and only if $\rho(A) = \rho(B)$, in which case the equations are said to be consistent.

If $\rho(A) < n = m$, that is, $|A| = 0$, and if the equations are consistent, then the Gauss algorithm will terminate before $n$ steps. That is, the coefficients of all the $x_k$'s will be zero in the remaining $n - \rho(A)$ equations. Therefore, among the $x_k$'s there will be certain ones, $n - \rho(A)$ in number, which may be assigned arbitrary values. Similarly, if $m \ne n$, the Gauss algorithm will yield an equivalent set of $\rho(A)$ equations that has the same solution as the original set. Again $n - \rho(A)$ (possibly zero) of the $x_k$'s may be assigned arbitrary values.

It is not necessary to know beforehand whether the equations are inconsistent. If they are inconsistent, the algorithm will yield an “equation” in which the coefficients of the $x_k$'s on the left side are zero but there is a nonzero combination of the $y_k$'s on the right side. Since the right side of the “equation” may contain accumulated round-off errors, an analysis of the error propagation in the Gauss algorithm may be necessary to determine whether a small right side is caused by inconsistent equations or by round-off errors.
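The consistency test $\rho(A) = \rho(B)$ can be sketched by counting the nonzero pivots that Gauss elimination finds in $A$ and in the augmented matrix $B$. The tolerance `tol` is an assumption standing in for the round-off analysis described above: pivots smaller than it are treated as zero.

```python
def rank(rows, tol=1e-10):
    """Rank by Gauss elimination; pivots with |value| < tol are treated as zero."""
    M = [[float(v) for v in r] for r in rows]
    m, n = len(M), len(M[0])
    r = 0
    for c in range(n):
        piv = max(range(r, m), key=lambda i: abs(M[i][c]), default=None)
        if piv is None or abs(M[piv][c]) < tol:
            continue                       # no usable pivot in this column
        M[r], M[piv] = M[piv], M[r]
        for i in range(r + 1, m):
            f = M[i][c] / M[r][c]
            M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
        if r == m:
            break
    return r

def is_consistent(A, y):
    B = [list(row) + [yi] for row, yi in zip(A, y)]   # augmented matrix [A | Y]
    return rank(A) == rank(B)
```

For example, $x_1 + 2x_2 = 3$, $2x_1 + 4x_2 = 7$ has $\rho(A) = 1$ but $\rho(B) = 2$, so it is inconsistent.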

Eigenvectors and Eigenvalues

An eigenvector of the square matrix $A$ of order $n$ is a nonzero vector $X$ such that

$$AX = \lambda X$$

The scalar $\lambda$ is called an eigenvalue of $A$, and $X$ is called an eigenvector corresponding to or associated with $\lambda$. The eigenvalues may be determined from the characteristic equation

$$|A - \lambda I| = 0$$

The corresponding eigenvectors $X$ may then be found by solving

$$(A - \lambda I)X = 0$$

The solution may be obtained by the Gauss algorithm. If the eigenvalues $\lambda$ are distinct, an explicit solution may be obtained by taking a nontrivial row of cofactors from $A - \lambda_i I$. This is possible since the rank of $A - \lambda_i I$ is $n-1$ and, therefore, there exists a nonvanishing subdeterminant of order $n-1$. Note that eigenvectors are determined only to within a multiplicative constant.
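For a $2 \times 2$ matrix the characteristic equation is the quadratic $\lambda^2 - (\operatorname{tr} A)\lambda + |A| = 0$, and a row of cofactors of $A - \lambda I$ gives an eigenvector directly. This sketch assumes distinct eigenvalues, as the text requires, and uses `cmath` so that complex eigenvalues are handled; the function name is illustrative.

```python
import cmath

def eig2(A):
    """Eigenpairs of a 2 x 2 matrix [[p, q], [r, s]] with distinct eigenvalues."""
    (p, q), (r, s) = A
    tr, det = p + s, p * s - q * r
    disc = cmath.sqrt(tr * tr - 4 * det)
    pairs = []
    for lam in ((tr + disc) / 2, (tr - disc) / 2):    # roots of |A - lambda I| = 0
        # cofactors of the first row of A - lambda I: (s - lambda, -r)
        v = (s - lam, -r)
        if abs(v[0]) < 1e-12 and abs(v[1]) < 1e-12:
            v = (-q, p - lam)                         # second row of cofactors instead
        pairs.append((lam, v))
    return pairs
```

For $A = \begin{bmatrix}2 & 1\\ 1 & 2\end{bmatrix}$ this gives $\lambda = 3$ with an eigenvector proportional to $(1, 1)$ and $\lambda = 1$ with one proportional to $(1, -1)$; the scaling of each eigenvector is arbitrary.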

Further Definitions and Properties

The matrix whose elements are $a_{ji}^*$ is called the conjugate transpose of $A = [a_{ij}]$; it is denoted by $A^\dagger$. The conjugate transpose of a product is

$$(AB)^\dagger = B^\dagger A^\dagger$$

If $A = A^\dagger$ ($A = -A^\dagger$), $A$ is said to be Hermitian (skew-Hermitian). If $A^\dagger A = I$, $A$ is called unitary. If $A^\dagger A = AA^\dagger$, $A$ is called normal. Diagonal, unitary, Hermitian, and skew-Hermitian matrices are special cases of normal matrices. If the matrix $A$ is real (that is, all entries are real), the terms Hermitian, skew-Hermitian, and unitary are usually replaced by symmetric, skew-symmetric, and orthogonal, respectively. The eigenvalues of Hermitian, skew-Hermitian, and unitary matrices are real, pure imaginary, and of unit absolute value, respectively.

The inner product of vectors $X$ and $Y$ is

$$X^\dagger Y = \sum_{i=1}^{n} x_i^* y_i$$

where $x_i^*$ is the complex conjugate of $x_i$. (A square matrix of order one is considered here as a scalar.) The length of a vector is given by

$$\|X\| = (X^\dagger X)^{1/2} = \left(\sum_{i=1}^{n} |x_i|^2\right)^{1/2}$$

Two vectors are said to be orthogonal if

$$X^\dagger Y = 0$$

Orthogonality corresponds to perpendicularity in two and three dimensions. Inner products obey the following inequalities

$$|X^\dagger Y| \le \|X\|\,\|Y\|\qquad\text{(Schwarz)}$$

$$\|X + Y\| \le \|X\| + \|Y\|\qquad\text{(Triangle)}$$
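A small sketch of the complex inner product, with the conjugate taken on the first vector as in $X^\dagger Y$, the induced length, and a spot check of both inequalities on one pair of vectors (an illustration, not a proof).

```python
import math

def inner(x, y):
    """X^dagger Y = sum_i conj(x_i) * y_i."""
    return sum(complex(xi).conjugate() * yi for xi, yi in zip(x, y))

def norm(x):
    """||X|| = (X^dagger X)^(1/2); X^dagger X is real and nonnegative."""
    return math.sqrt(inner(x, x).real)

x = [1 + 1j, 2]
y = [3, -1j]
xy = inner(x, y)          # (1 - j)*3 + 2*(-j) = 3 - 5j
```

Note that the order matters for complex vectors: $Y^\dagger X$ is the complex conjugate of $X^\dagger Y$.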

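Two matrices $A$ and $B$ are called similar if there exists a nonsingular matrix $C$ such that

$$B = CAC^{-1}$$

Of particular interest is the case in which matrix $B$ is diagonal

$$B = \begin{bmatrix}\lambda_1 & 0 & \cdots & 0\\ 0 & \lambda_2 & \cdots & 0\\ \vdots & \vdots & \ddots & \vdots\\ 0 & 0 & \cdots & \lambda_n\end{bmatrix}$$

The diagonal elements $\lambda_i$ are the eigenvalues of the matrix $A$. Not all matrices are similar to diagonal ones. However, if a matrix of order $n$ has $n$ linearly independent eigenvectors, as is the case with normal matrices or when all its eigenvalues are distinct, it can be diagonalized. This may be done as follows: Let

$$C^{-1} = [X_1\ X_2\ \cdots\ X_n]$$

be the matrix whose columns are eigenvectors $X_i$ associated with the eigenvalues $\lambda_i$. Then

$$AC^{-1} = [\lambda_1 X_1\ \lambda_2 X_2\ \cdots\ \lambda_n X_n] = C^{-1}B$$

The matrix formed from the eigenvectors is nonsingular since the eigenvectors are linearly independent. Hence

$$CAC^{-1} = B$$

If a matrix $A$ is reducible to diagonal form, polynomial functions of $A$ are readily calculated

$$f(A) = f(C^{-1}BC) = C^{-1}f(B)\,C = C^{-1}\begin{bmatrix} f(\lambda_1) & & 0\\ & \ddots & \\ 0 & & f(\lambda_n)\end{bmatrix} C$$

Matrices can always be reduced to the Jordan canonical form, in which the eigenvalues are on the main diagonal and there are ones in certain places just above the main diagonal, and zeros elsewhere. In this form, operations such as polynomial functions are simplified.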
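A worked instance of $f(A) = C^{-1} f(B)\, C$, assuming the example matrix $A = \begin{bmatrix}2 & 1\\ 1 & 2\end{bmatrix}$ (not from the text), whose eigenvalues are 3 and 1 with unit eigenvectors $(1, 1)/\sqrt{2}$ and $(1, -1)/\sqrt{2}$. It computes $A^5$ both by repeated multiplication and through the diagonal form.

```python
import math

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

A = [[2.0, 1.0], [1.0, 2.0]]
s = 1 / math.sqrt(2)
C_inv = [[s, s], [s, -s]]   # columns are the eigenvectors X_1 (lambda = 3) and X_2 (lambda = 1)
C = C_inv                   # this particular C^-1 is symmetric and orthogonal, so it is its own inverse

def f_of_A(f):
    """f(A) = C^-1 f(B) C with B = diag(3, 1)."""
    fB = [[f(3.0), 0.0], [0.0, f(1.0)]]
    return matmul(matmul(C_inv, fB), C)

A5 = A
for _ in range(4):
    A5 = matmul(A5, A)      # A^5 by repeated multiplication: [[122, 121], [121, 122]]
F = f_of_A(lambda t: t ** 5)
B = matmul(matmul(C, A), C_inv)   # should be diag(3, 1)
```

For a general diagonalizable matrix, $C^{-1}$ would have to be inverted explicitly; the self-inverse property here is a convenience of this symmetric example.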

Hermitian Forms

A Hermitian form is a polynomial

$$X^\dagger A X = \sum_{i=1}^{n}\sum_{j=1}^{n} a_{ij}\,x_i^* x_j$$

where $A$ is a Hermitian matrix. Hermitian forms are real-valued and satisfy the inequality

$$\lambda_n \|X\|^2 \le X^\dagger A X \le \lambda_1 \|X\|^2$$

in which $\lambda_1$ and $\lambda_n$ are the largest and smallest eigenvalues of $A$. By a suitable change of variable $Y = TX$, any Hermitian form $X^\dagger AX$ may be reduced to

$$X^\dagger AX = Y^\dagger TAT^\dagger Y = \sum_{i=1}^{n}\lambda_i |y_i|^2$$

by choosing $T$ to be that unitary matrix which diagonalizes $A$.

The maximum value of $X^\dagger AX$ for all unit vectors $X$, that is, $\|X\| = 1$, is the largest eigenvalue of $A$, say $\lambda_1$. A vector yielding this largest value will be a corresponding eigenvector, say $X_1$. The maximum of $X^\dagger AX$ over all unit vectors orthogonal to $X_1$ will be another eigenvalue, say $\lambda_2$. A vector yielding $\lambda_2$ will be a corresponding eigenvector, say $X_2$. The process is repeated considering unit vectors orthogonal to both $X_1$ and $X_2$. In this way, the eigenvalues of any Hermitian matrix may be found.
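One common way to find the vector that maximizes $X^\dagger AX$ on the unit sphere is power iteration; this is a swapped-in technique, not the procedure the text describes. The sketch assumes a real symmetric matrix whose largest eigenvalue is also largest in magnitude and whose eigenvector is not orthogonal to the starting vector $(1, 1, \ldots, 1)$.

```python
import math

def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def rayleigh(A, x):
    """X^T A X / X^T X for a real symmetric A (a Hermitian form with real entries)."""
    Ax = matvec(A, x)
    return sum(xi * yi for xi, yi in zip(x, Ax)) / sum(xi * xi for xi in x)

def largest_eigenvalue(A, iters=200):
    """Power iteration: repeatedly apply A and renormalize to unit length."""
    x = [1.0] * len(A)
    for _ in range(iters):
        y = matvec(A, x)
        nrm = math.sqrt(sum(v * v for v in y))
        x = [v / nrm for v in y]
    return rayleigh(A, x), x

A = [[4.0, 1.0], [1.0, 3.0]]          # eigenvalues (7 +/- sqrt(5)) / 2
lam1, X1 = largest_eigenvalue(A)
```

Any other unit vector gives a smaller value of the form, for example $X = (1, 0)$ gives 4, consistent with $X^\dagger AX \le \lambda_1\|X\|^2$.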

VECTOR-ANALYSIS EQUATIONS

Rectangular Coordinates

(In the following, vectors are indicated in bold-faced type.)

Notation: $\mathbf{a} = a\,\hat{\mathbf{a}}$, where

$a$ = magnitude of $\mathbf{a}$

$\hat{\mathbf{a}}$ = unit vector in direction of $\mathbf{a}$

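Associative Law: For addition

$$\mathbf{a} + (\mathbf{b} + \mathbf{c}) = (\mathbf{a} + \mathbf{b}) + \mathbf{c} = \mathbf{a} + \mathbf{b} + \mathbf{c}$$

Commutative Law: For addition

$$\mathbf{a} + \mathbf{b} = \mathbf{b} + \mathbf{a}$$

Scalar or “Dot” Product (Fig. 21):

$$\mathbf{a}\cdot\mathbf{b} = \mathbf{b}\cdot\mathbf{a} = ab\cos\theta$$

where $\theta$ = angle included by $\mathbf{a}$ and $\mathbf{b}$.

Fig. 21. “Dot” product.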

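Vector or “Cross” Product:

$$\mathbf{a}\times\mathbf{b} = -\mathbf{b}\times\mathbf{a} = ab\sin\theta\,\hat{\mathbf{n}}$$

where $\theta$ = smallest angle swept in rotating $\mathbf{a}$ into $\mathbf{b}$, and $\hat{\mathbf{n}}$ = unit vector perpendicular to the plane of $\mathbf{a}$ and $\mathbf{b}$, directed in the sense of travel of a right-hand screw rotating from $\mathbf{a}$ to $\mathbf{b}$ through the angle $\theta$.

Distributive Law for Scalar Multiplication:

$$\mathbf{a}\cdot(\mathbf{b} + \mathbf{c}) = \mathbf{a}\cdot\mathbf{b} + \mathbf{a}\cdot\mathbf{c}$$

Distributive Law for Vector Multiplication:

$$\mathbf{a}\times(\mathbf{b} + \mathbf{c}) = \mathbf{a}\times\mathbf{b} + \mathbf{a}\times\mathbf{c}$$

Scalar Triple Product:

$$\mathbf{a}\cdot(\mathbf{b}\times\mathbf{c}) = (\mathbf{a}\times\mathbf{b})\cdot\mathbf{c} = \mathbf{c}\cdot(\mathbf{a}\times\mathbf{b}) = \mathbf{b}\cdot(\mathbf{c}\times\mathbf{a})$$

Vector Triple Product:

$$\mathbf{a}\times(\mathbf{b}\times\mathbf{c}) = (\mathbf{a}\cdot\mathbf{c})\,\mathbf{b} - (\mathbf{a}\cdot\mathbf{b})\,\mathbf{c}$$

$$(\mathbf{a}\times\mathbf{b})\cdot(\mathbf{c}\times\mathbf{d}) = (\mathbf{a}\cdot\mathbf{c})(\mathbf{b}\cdot\mathbf{d}) - (\mathbf{a}\cdot\mathbf{d})(\mathbf{b}\cdot\mathbf{c})$$

$$(\mathbf{a}\times\mathbf{b})\times(\mathbf{c}\times\mathbf{d}) = (\mathbf{a}\times\mathbf{b}\cdot\mathbf{d})\,\mathbf{c} - (\mathbf{a}\times\mathbf{b}\cdot\mathbf{c})\,\mathbf{d}$$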
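The product identities above can be spot-checked with integer component vectors, which makes every comparison exact. The helper names and sample vectors are illustrative; a check on one set of vectors is a sanity test, not a proof.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    # right-handed cross product in Cartesian components
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def scale(k, a):
    return tuple(k * x for x in a)

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

a, b, c, d = (1, 2, 3), (-2, 0, 1), (4, -1, 2), (0, 3, -5)
triple = dot(a, cross(b, c))   # scalar triple product a . (b x c)
```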

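Del Operator:

$$\nabla = \mathbf{i}\,\frac{\partial}{\partial x} + \mathbf{j}\,\frac{\partial}{\partial y} + \mathbf{k}\,\frac{\partial}{\partial z}$$

where $\mathbf{i}$, $\mathbf{j}$, $\mathbf{k}$ are unit vectors in the directions of the $x$, $y$, $z$ coordinate axes, respectively.

Gradient:

$$\operatorname{grad}\phi = \nabla\phi = \mathbf{i}\,\frac{\partial\phi}{\partial x} + \mathbf{j}\,\frac{\partial\phi}{\partial y} + \mathbf{k}\,\frac{\partial\phi}{\partial z},\qquad\text{in Cartesian coordinates}$$

Divergence:

$$\operatorname{div}\mathbf{a} = \nabla\cdot\mathbf{a} = \frac{\partial a_x}{\partial x} + \frac{\partial a_y}{\partial y} + \frac{\partial a_z}{\partial z},\qquad\text{in Cartesian coordinates}$$

where $a_x$, $a_y$, $a_z$ are components of $\mathbf{a}$ in the directions of the $x$, $y$, $z$ coordinate axes, respectively.

$$\operatorname{div}(\mathbf{a} + \mathbf{b}) = \operatorname{div}\mathbf{a} + \operatorname{div}\mathbf{b}$$

$$\operatorname{div}(\phi\mathbf{a}) = \phi\operatorname{div}\mathbf{a} + \mathbf{a}\cdot\operatorname{grad}\phi$$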

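Curl:

$$\operatorname{curl}\mathbf{a} = \nabla\times\mathbf{a} = \mathbf{i}\left(\frac{\partial a_z}{\partial y} - \frac{\partial a_y}{\partial z}\right) + \mathbf{j}\left(\frac{\partial a_x}{\partial z} - \frac{\partial a_z}{\partial x}\right) + \mathbf{k}\left(\frac{\partial a_y}{\partial x} - \frac{\partial a_x}{\partial y}\right),\qquad\text{in Cartesian coordinates}$$

$$\operatorname{curl}(\mathbf{a} + \mathbf{b}) = \operatorname{curl}\mathbf{a} + \operatorname{curl}\mathbf{b}$$

$$\operatorname{curl}(\phi\mathbf{a}) = \operatorname{grad}\phi\times\mathbf{a} + \phi\operatorname{curl}\mathbf{a}$$

$$\operatorname{curl}\operatorname{grad}\phi = 0$$

$$\operatorname{div}\operatorname{curl}\mathbf{a} = 0$$

$$\operatorname{div}(\mathbf{a}\times\mathbf{b}) = \mathbf{b}\cdot\operatorname{curl}\mathbf{a} - \mathbf{a}\cdot\operatorname{curl}\mathbf{b}$$

Laplacian:

$$\nabla^2 = \nabla\cdot\nabla$$

$$\nabla^2\phi = \frac{\partial^2\phi}{\partial x^2} + \frac{\partial^2\phi}{\partial y^2} + \frac{\partial^2\phi}{\partial z^2},\qquad\text{in Cartesian coordinates}$$

$$\operatorname{curl}\operatorname{curl}\mathbf{a} = \operatorname{grad}\operatorname{div}\mathbf{a} - \nabla^2\mathbf{a} = \nabla(\nabla\cdot\mathbf{a}) - (\mathbf{i}\,\nabla^2 a_x + \mathbf{j}\,\nabla^2 a_y + \mathbf{k}\,\nabla^2 a_z)$$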

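Directional Derivative: Derivative of $\phi$ in the direction of $\mathbf{s}$:

$$\frac{\partial\phi}{\partial s} = \hat{\mathbf{s}}\cdot\operatorname{grad}\phi$$

where $\hat{\mathbf{s}}$ is the unit vector in the direction of $\mathbf{s}$.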

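Integral Relations: In the following equations, $\tau$ is a volume bounded by a closed surface, $S$. The unit vector $\mathbf{n}$ is normal to the surface and is directed outward. The symbol $dS$ represents an element of surface area. If the surface is represented by $z = f(x, y)$ then

$$dS = \left[1 + \left(\frac{\partial f}{\partial x}\right)^2 + \left(\frac{\partial f}{\partial y}\right)^2\right]^{1/2} dx\,dy$$

$$\int_\tau \left(\phi\,\nabla^2\psi - \psi\,\nabla^2\phi\right)d\tau = \oint_S \left(\phi\,\frac{\partial\psi}{\partial n} - \psi\,\frac{\partial\phi}{\partial n}\right)dS\qquad\text{(Green's theorem)}$$

where $\partial/\partial n$ is the derivative in the direction of $\mathbf{n}$.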

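In the two following equations, $S$ is an open surface bounded by a contour $C$, with distance along $C$ represented by $s$.

$$\int_S (\nabla\times\mathbf{a})\cdot\mathbf{n}\,dS = \oint_C \mathbf{a}\cdot d\mathbf{s}\qquad\text{(Stokes' theorem)}$$

$$\int_S \mathbf{n}\times\nabla\phi\,dS = \oint_C \phi\,d\mathbf{s}$$

where $d\mathbf{s} = \hat{\mathbf{s}}\,ds$, and $\hat{\mathbf{s}}$ is the unit tangent vector along $C$.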

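Gradient, Divergence, Curl, and Laplacian in Coordinate Systems Other Than Rectangular

Cylindrical Coordinates: $(\rho, \phi, z)$, unit vectors $\hat{\boldsymbol{\rho}}$, $\hat{\boldsymbol{\phi}}$, $\mathbf{k}$, respectively

$$\operatorname{grad}\psi = \nabla\psi = \frac{\partial\psi}{\partial\rho}\,\hat{\boldsymbol{\rho}} + \rho^{-1}\frac{\partial\psi}{\partial\phi}\,\hat{\boldsymbol{\phi}} + \frac{\partial\psi}{\partial z}\,\mathbf{k}$$

Let $\mathbf{a} = a_\rho\hat{\boldsymbol{\rho}} + a_\phi\hat{\boldsymbol{\phi}} + a_z\mathbf{k}$. Then

$$\operatorname{div}\mathbf{a} = \nabla\cdot\mathbf{a} = \rho^{-1}\frac{\partial}{\partial\rho}(\rho a_\rho) + \rho^{-1}\frac{\partial a_\phi}{\partial\phi} + \frac{\partial a_z}{\partial z}$$

$$\operatorname{curl}\mathbf{a} = \nabla\times\mathbf{a} = \left[\rho^{-1}\frac{\partial a_z}{\partial\phi} - \frac{\partial a_\phi}{\partial z}\right]\hat{\boldsymbol{\rho}} + \left[\frac{\partial a_\rho}{\partial z} - \frac{\partial a_z}{\partial\rho}\right]\hat{\boldsymbol{\phi}} + \left[\rho^{-1}\frac{\partial}{\partial\rho}(\rho a_\phi) - \rho^{-1}\frac{\partial a_\rho}{\partial\phi}\right]\mathbf{k}$$

$$\nabla^2\psi = \rho^{-1}\frac{\partial}{\partial\rho}\left(\rho\,\frac{\partial\psi}{\partial\rho}\right) + \rho^{-2}\frac{\partial^2\psi}{\partial\phi^2} + \frac{\partial^2\psi}{\partial z^2}$$

Spherical Coordinates: $(r, \theta, \phi)$, unit vectors $\hat{\mathbf{r}}$, $\hat{\boldsymbol{\theta}}$, $\hat{\boldsymbol{\phi}}$

$r$ = distance to origin

$\theta$ = polar angle

$\phi$ = azimuthal angle

$$\operatorname{grad}\psi = \nabla\psi = \frac{\partial\psi}{\partial r}\,\hat{\mathbf{r}} + r^{-1}\frac{\partial\psi}{\partial\theta}\,\hat{\boldsymbol{\theta}} + (r\sin\theta)^{-1}\frac{\partial\psi}{\partial\phi}\,\hat{\boldsymbol{\phi}}$$

Let $\mathbf{a} = a_r\hat{\mathbf{r}} + a_\theta\hat{\boldsymbol{\theta}} + a_\phi\hat{\boldsymbol{\phi}}$. Then

$$\operatorname{div}\mathbf{a} = \nabla\cdot\mathbf{a} = r^{-2}\frac{\partial}{\partial r}(r^2 a_r) + (r\sin\theta)^{-1}\frac{\partial}{\partial\theta}(a_\theta\sin\theta) + (r\sin\theta)^{-1}\frac{\partial a_\phi}{\partial\phi}$$

$$\operatorname{curl}\mathbf{a} = \nabla\times\mathbf{a} = (r\sin\theta)^{-1}\left[\frac{\partial}{\partial\theta}(a_\phi\sin\theta) - \frac{\partial a_\theta}{\partial\phi}\right]\hat{\mathbf{r}} + r^{-1}\left[(\sin\theta)^{-1}\frac{\partial a_r}{\partial\phi} - \frac{\partial}{\partial r}(r a_\phi)\right]\hat{\boldsymbol{\theta}} + r^{-1}\left[\frac{\partial}{\partial r}(r a_\theta) - \frac{\partial a_r}{\partial\theta}\right]\hat{\boldsymbol{\phi}}$$

$$\nabla^2\psi = r^{-2}\frac{\partial}{\partial r}\left(r^2\frac{\partial\psi}{\partial r}\right) + (r^2\sin\theta)^{-1}\frac{\partial}{\partial\theta}\left(\sin\theta\,\frac{\partial\psi}{\partial\theta}\right) + (r^2\sin^2\theta)^{-1}\frac{\partial^2\psi}{\partial\phi^2}$$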

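Space Curves

A curve may be represented vectorially as $\mathbf{r} = \mathbf{r}(s)$, where $s$ is the arc length along the curve. A unit tangent $\mathbf{t}$ is then given by

$$\mathbf{t} = d\mathbf{r}/ds$$

See Fig. 22. The principal normal $\mathbf{n}$ is given by

$$\mathbf{n} = (1/k)(d\mathbf{t}/ds)$$

where $k$ is the curvature. The radius of curvature $R = 1/k$. For a plane curve $y = f(x)$, the curvature may be computed from

$$k = |y''|/[1 + (y')^2]^{3/2}$$

The binormal is defined by

$$\mathbf{b} = \mathbf{t}\times\mathbf{n}$$

These vectors satisfy Frenet's equations

$$\frac{d\mathbf{t}}{ds} = k\,\mathbf{n},\qquad \frac{d\mathbf{n}}{ds} = -k\,\mathbf{t} + \tau\,\mathbf{b},\qquad \frac{d\mathbf{b}}{ds} = -\tau\,\mathbf{n}$$

where $\tau$ is the torsion. The torsion is zero everywhere if and only if the curve lies in a plane.

Fig. 22. Space curve.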
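The plane-curve formula $k = |y''|/[1 + (y')^2]^{3/2}$ can be evaluated numerically. This sketch approximates $y'$ and $y''$ by central differences with step `h` (an assumption; any differentiation method would do) and checks two cases with known answers: a circle of radius 2, where $k = 1/R = 0.5$, and the parabola $y = x^2$ at its vertex, where $k = 2$.

```python
import math

def curvature_plane(f, x, h=1e-4):
    """k = |y''| / [1 + (y')^2]^(3/2), derivatives by central differences."""
    d1 = (f(x + h) - f(x - h)) / (2 * h)
    d2 = (f(x + h) - 2 * f(x) + f(x - h)) / (h * h)
    return abs(d2) / (1 + d1 * d1) ** 1.5

k_circle = curvature_plane(lambda x: math.sqrt(4 - x * x), 1.0)   # upper half of x^2 + y^2 = 4
k_parabola = curvature_plane(lambda x: x * x, 0.0)
```

The step size trades truncation error (which shrinks like $h^2$) against round-off in the second difference (which grows like $1/h^2$); $10^{-4}$ is a reasonable compromise in double precision.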


complex, then it will converge for all p such that Rep

>

RePo.

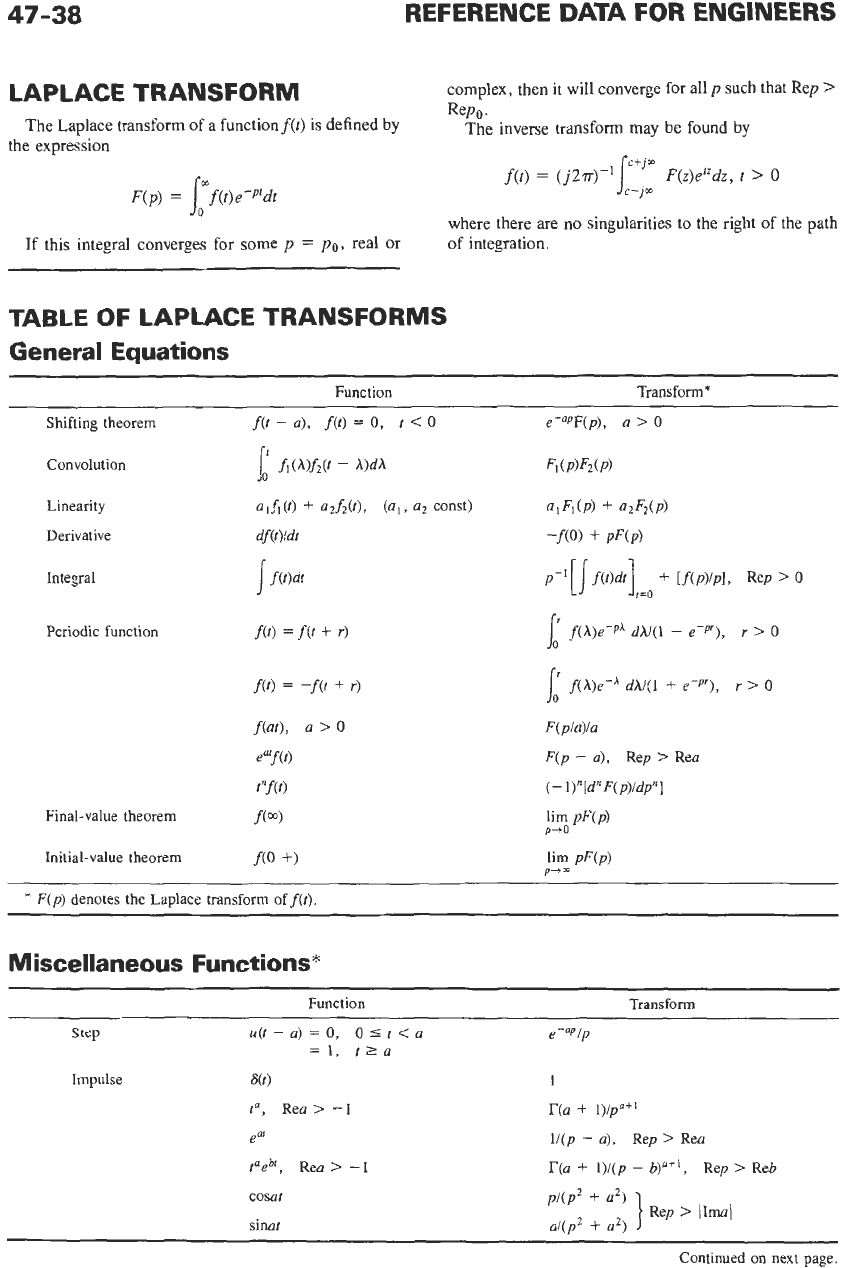

LAPLACE TRANSFORM

The Laplace transform of a functionf(t) is defined by

The inverse transform may be found by

the expression

ctjw

c-jm

f(t)

=

(jZr)-l/

F(z)erzciz,

t

>

o

F(p)

=

f(t)e-Prdt

where there are no singularities to the right of the path

of integration.

I,"

If

this integral converges for some

p

=

po,

real

or

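TABLE OF LAPLACE TRANSFORMS

General Equations

| | Function | Transform* |
|---|---|---|
| Shifting theorem | $f(t-a)$, $f(t) = 0$ for $t < 0$ | $e^{-ap}F(p)$, $a > 0$ |
| Convolution | $\int_0^t f_1(\lambda)\,f_2(t-\lambda)\,d\lambda$ | $F_1(p)\,F_2(p)$ |
| Linearity | $c_1 f_1(t) + c_2 f_2(t)$ | $c_1 F_1(p) + c_2 F_2(p)$ |
| Derivative | $f'(t)$ | $pF(p) - f(0+)$ |
| Integral | $\int_0^t f(\lambda)\,d\lambda$ | $F(p)/p$ |
| Initial-value theorem | $f(0+)$ | $\lim_{p\to\infty} pF(p)$ |
| | $f(at)$, $a > 0$ | $F(p/a)/a$ |
| | $e^{at} f(t)$ | $F(p-a)$, $\operatorname{Re} p > \operatorname{Re} a$ |
| | $t^n f(t)$ | $(-1)^n\,d^nF(p)/dp^n$ |

* $F(p)$ denotes the Laplace transform of $f(t)$.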

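Miscellaneous Functions*

| Function | Transform |
|---|---|
| Step: $u(t-a) = 0$, $0 \le t < a$; $u(t-a) = 1$, $t > a$ | $e^{-ap}/p$ |
| Impulse: $\delta(t)$ | $1$ |
| $t^a$, $\operatorname{Re} a > -1$ | $\Gamma(a+1)/p^{a+1}$ |
| $e^{at}$ | $1/(p-a)$, $\operatorname{Re} p > \operatorname{Re} a$ |
| $t^a e^{bt}$, $\operatorname{Re} a > -1$ | $\Gamma(a+1)/(p-b)^{a+1}$, $\operatorname{Re} p > \operatorname{Re} b$ |
| $\cos at$ | $p/(p^2 + a^2)$, $\operatorname{Re} p > \lvert\operatorname{Im} a\rvert$ |
| $\sin at$ | $a/(p^2 + a^2)$, $\operatorname{Re} p > \lvert\operatorname{Im} a\rvert$ |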

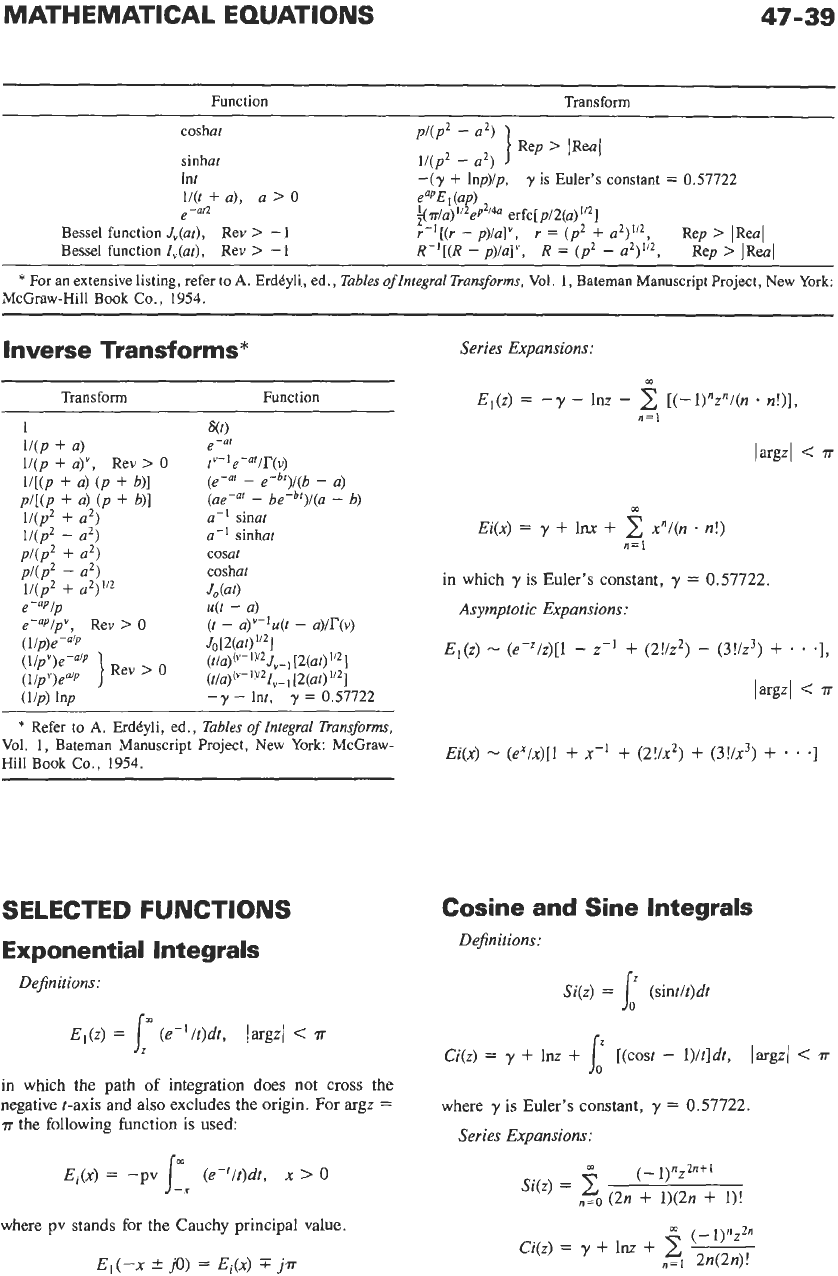


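Miscellaneous Functions (continued)

| Function | Transform |
|---|---|
| $\cosh at$ | $p/(p^2 - a^2)$, $\operatorname{Re} p > \lvert\operatorname{Re} a\rvert$ |
| $\sinh at$ | $a/(p^2 - a^2)$, $\operatorname{Re} p > \lvert\operatorname{Re} a\rvert$ |
| $\ln t$ | $-(\gamma + \ln p)/p$, $\gamma$ is Euler's constant $= 0.57722$ |
| $1/(t+a)$, $a > 0$ | $e^{ap}E_1(ap)$ |
| $e^{-at^2}$ | $\tfrac{1}{2}(\pi/a)^{1/2}\,e^{p^2/4a}\,\operatorname{erfc}[p/(2a^{1/2})]$ |
| Bessel function $J_\nu(at)$, $\operatorname{Re}\nu > -1$ | $r^{-1}[(r-p)/a]^\nu$, $r = (p^2 + a^2)^{1/2}$, $\operatorname{Re} p > \lvert\operatorname{Im} a\rvert$ |
| Bessel function $I_\nu(at)$, $\operatorname{Re}\nu > -1$ | $R^{-1}[(p-R)/a]^\nu$, $R = (p^2 - a^2)^{1/2}$, $\operatorname{Re} p > \lvert\operatorname{Re} a\rvert$ |

* For an extensive listing, refer to A. Erdélyi, ed., Tables of Integral Transforms, Vol. 1, Bateman Manuscript Project, New York: McGraw-Hill Book Co., 1954.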
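A few table entries can be checked against the defining integral $F(p) = \int_0^\infty f(t)e^{-pt}\,dt$ numerically. This sketch applies Simpson's rule on $[0, T]$, assuming the tail beyond $T = 60$ is negligible for the chosen $p = 2$; `laplace_numeric` is an illustrative name, not a library function.

```python
import math

def laplace_numeric(f, p, T=60.0, n=60000):
    """Simpson's rule for int_0^T f(t) e^{-pt} dt (n even); the tail beyond T is neglected."""
    h = T / n
    s = f(0.0) + f(T) * math.exp(-p * T)
    for i in range(1, n):
        t = i * h
        s += (4 if i % 2 else 2) * f(t) * math.exp(-p * t)
    return s * h / 3

p, a = 2.0, 3.0
F_cos = laplace_numeric(lambda t: math.cos(a * t), p)   # table: p / (p^2 + a^2)
F_sin = laplace_numeric(lambda t: math.sin(a * t), p)   # table: a / (p^2 + a^2)
F_exp = laplace_numeric(lambda t: math.exp(-t), p)      # table: 1 / (p - a) with a = -1
F_t2 = laplace_numeric(lambda t: t * t, p)              # table: Gamma(a + 1) / p^(a + 1) with a = 2
```

This only samples real $p$ inside the region of convergence; it is a spot check of the entries, not a general inversion method.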

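Inverse Transforms*

| Transform | Function |
|---|---|
| $1$ | $\delta(t)$ |
| $1/(p+a)$ | $e^{-at}$ |
| $1/(p+a)^\nu$, $\operatorname{Re}\nu > 0$ | $t^{\nu-1}e^{-at}/\Gamma(\nu)$ |
| $1/[(p+a)(p+b)]$ | $(e^{-at} - e^{-bt})/(b-a)$ |
| $p/[(p+a)(p+b)]$ | $(a e^{-at} - b e^{-bt})/(a-b)$ |
| $1/(p^2 + a^2)$ | $a^{-1}\sin at$ |
| $1/(p^2 - a^2)$ | $a^{-1}\sinh at$ |
| $p/(p^2 + a^2)$ | $\cos at$ |
| $p/(p^2 - a^2)$ | $\cosh at$ |
| $(p^2 + a^2)^{-1/2}$ | $J_0(at)$ |
| $e^{-ap}/p$ | $u(t-a)$ |

* Refer to A. Erdélyi, ed., Tables of Integral Transforms, Vol. 1, Bateman Manuscript Project, New York: McGraw-Hill Book Co., 1954.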

SELECTED FUNCTIONS

Exponential Integrals

Definitions:

$$E_1(z) = \int_z^\infty (e^{-t}/t)\,dt,\qquad |\arg z| < \pi$$

in which the path of integration does not cross the negative $t$-axis and also excludes the origin. For $\arg z = \pi$ the following function is used:

$$\operatorname{Ei}(x) = \mathrm{pv}\int_{-\infty}^{x} (e^{t}/t)\,dt,\qquad x > 0$$

where pv stands for the Cauchy principal value.

$$E_1(-x \pm j0) = -\operatorname{Ei}(x) \mp j\pi$$

Series Expansions:

$$E_1(z) = -\gamma - \ln z - \sum_{n=1}^{\infty}\frac{(-1)^n z^n}{n\cdot n!}$$

in which $\gamma$ is Euler's constant, $\gamma = 0.57722$.

Asymptotic Expansions:

$$E_1(z) \sim (e^{-z}/z)\left[1 - z^{-1} + (2!/z^2) - (3!/z^3) + \cdots\right],\qquad |\arg z| < \pi$$

$$\operatorname{Ei}(x) \sim (e^{x}/x)\left[1 + x^{-1} + (2!/x^2) + (3!/x^3) + \cdots\right]$$
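For real $x > 0$, the convergent series and the asymptotic expansion of $E_1$ can be compared directly. This sketch sums the series with a running term $(-x)^n/n!$ and truncates the asymptotic series after 10 terms (an arbitrary choice); at $x = 10$ the truncated asymptotic form should agree to within roughly the first omitted term, $10!/10^{10} \approx 3.6\times10^{-4}$ relative.

```python
import math

EULER_GAMMA = 0.5772156649015329

def e1_series(x, terms=80):
    """E_1(x) = -gamma - ln x - sum_{n>=1} (-1)^n x^n / (n * n!), real x > 0."""
    s = 0.0
    term = 1.0
    for n in range(1, terms + 1):
        term *= -x / n           # term is now (-x)^n / n!
        s += term / n
    return -EULER_GAMMA - math.log(x) - s

def e1_asymptotic(x, terms=10):
    """(e^{-x}/x) [1 - 1!/x + 2!/x^2 - 3!/x^3 + ...], truncated after `terms` terms."""
    return math.exp(-x) / x * sum((-1) ** k * math.factorial(k) / x ** k for k in range(terms))

ratio = e1_series(10.0) / e1_asymptotic(10.0)
```

The series converges for every $x$ but suffers cancellation for large $x$, while the asymptotic form diverges if summed indefinitely; in practice the series is used for small arguments and the asymptotic form for large ones.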

Cosine and Sine Integrals

Definitions:

$$\operatorname{Si}(z) = \int_0^z (\sin t/t)\,dt$$

$$\operatorname{Ci}(z) = \gamma + \ln z + \int_0^z [(\cos t - 1)/t]\,dt,\qquad |\arg z| < \pi$$

where $\gamma$ is Euler's constant, $\gamma = 0.57722$.
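Both definitions have integrands that stay finite at $t = 0$ (their limits are 1 and 0), so they can be evaluated directly by quadrature for real positive arguments. This sketch uses composite Simpson's rule with 2000 panels (an arbitrary choice) and compares against the standard values $\operatorname{Si}(\pi) \approx 1.8519370520$ and $\operatorname{Ci}(1) \approx 0.3374039229$.

```python
import math

EULER_GAMMA = 0.5772156649015329

def simpson(g, a, b, n=2000):
    """Composite Simpson's rule on [a, b] with an even number n of panels."""
    h = (b - a) / n
    s = g(a) + g(b)
    for i in range(1, n):
        s += (4 if i % 2 else 2) * g(a + i * h)
    return s * h / 3

def Si(z):
    # integrand sin(t)/t, with its limiting value 1 at t = 0
    return simpson(lambda t: math.sin(t) / t if t else 1.0, 0.0, z)

def Ci(z):
    # gamma + ln z + integral of (cos t - 1)/t; the integrand tends to 0 at t = 0
    return EULER_GAMMA + math.log(z) + simpson(lambda t: (math.cos(t) - 1) / t if t else 0.0, 0.0, z)
```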

Series Expansions: