Banner A. The Calculus Lifesaver: All the Tools You Need to Excel at Calculus


236 • The Derivative and Graphs

Now, since we have assumed that $f'$ is always equal to 0, the quantity $f'(c)$ must be 0. So the above equation says that

$$\frac{f(x) - f(S)}{x - S} = 0,$$

which means that $f(x) = f(S)$. If we now let $C = f(S)$, we have shown that $f(x) = C$ for all $x$ in the interval $(a, b)$, so $f$ is constant! In summary,

if $f'(x) = 0$ for all $x$ in $(a, b)$, then $f$ is constant on $(a, b)$.

Actually, we’ve already used this fact in Section 10.2.2 of the previous chapter. There we saw that if $f(x) = \sin^{-1}(x) + \cos^{-1}(x)$, then $f'(x) = 0$ for all $x$ in the interval $(-1, 1)$. We concluded that $f$ is constant on that interval, and in fact since $f(0) = \pi/2$, we have $\sin^{-1}(x) + \cos^{-1}(x) = \pi/2$ for all $x$ in $(-1, 1)$.

2. Suppose that two differentiable functions have exactly the same derivative. Are they the same function? Not necessarily. They could differ by a constant; for example, $f(x) = x^2$ and $g(x) = x^2 + 1$ have the same derivative, $2x$, but $f$ and $g$ are clearly not the same function. Is there any other way that two functions could have the same derivative everywhere? The answer is no. Differing by a constant is the only way:

if $f'(x) = g'(x)$ for all $x$, then $f(x) = g(x) + C$ for some constant $C$.

It turns out to be quite easy to show this using #1 above. Suppose that $f'(x) = g'(x)$ for all $x$. Now set $h(x) = f(x) - g(x)$. Then we can differentiate to get $h'(x) = f'(x) - g'(x) = 0$ for all $x$, so $h$ is constant. That is, $h(x) = C$ for some constant $C$. This means that $f(x) - g(x) = C$, or $f(x) = g(x) + C$. The functions $f$ and $g$ do indeed differ by a constant. This fact will be very useful when we look at integration in a few chapters’ time.

3. If a function $f$ has a derivative that’s always positive, then it must be increasing. This means that if $a < b$, then $f(a) < f(b)$. In other words, take two points on the curve; the one on the left is lower than the one on the right. The curve is getting higher as you look from left to right. Why is it so? Well, suppose $f'(x) > 0$ for all $x$, and also suppose that $a < b$. By the Mean Value Theorem, there’s a $c$ in the interval $(a, b)$ such that

$$f'(c) = \frac{f(b) - f(a)}{b - a}.$$

This means that $f(b) - f(a) = f'(c)(b - a)$. Now $f'(c) > 0$, and $b - a > 0$ since $b > a$, so the right-hand side of this equation is positive. So we have $f(b) - f(a) > 0$, hence $f(b) > f(a)$, and the function is indeed increasing. On the other hand, if $f'(x) < 0$ for all $x$, the function is always decreasing; this means that if $a < b$ then $f(b) < f(a)$. The proof is basically the same.
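The three consequences above can be spot-checked numerically. The following is only a sketch, not a proof: the helper `derivative` is a hypothetical central-difference estimate, the sample points are arbitrary, and the choice of $e^x$ on $[0, 1]$ for the third fact is an illustrative assumption that does not come from the text.

```python
import math

def derivative(f, x, h=1e-6):
    """Central-difference estimate of f'(x) (an approximation, not exact)."""
    return (f(x + h) - f(x - h)) / (2 * h)

xs = [-0.9, -0.5, 0.0, 0.3, 0.8]  # arbitrary sample points inside (-1, 1)

# Fact 1: sin^{-1}(x) + cos^{-1}(x) has zero derivative on (-1, 1),
# so it should take the same value (pi/2) at every sample point.
def arc_sum(x):
    return math.asin(x) + math.acos(x)

arc_values = [arc_sum(x) for x in xs]

# Fact 2: f(x) = x^2 and g(x) = x^2 + 1 have the same derivative, 2x,
# and differ by the constant C = 1.
def f(x):
    return x ** 2

def g(x):
    return x ** 2 + 1

gaps = [g(x) - f(x) for x in xs]                             # each is about 1
slope_gaps = [derivative(g, x) - derivative(f, x) for x in xs]  # each is about 0

# Fact 3 (assumed example): exp has a positive derivative everywhere.
# On [a, b] the Mean Value Theorem promises a c with
# exp(c) = (exp(b) - exp(a)) / (b - a); here we can solve for c directly.
a, b = 0.0, 1.0
c = math.log((math.exp(b) - math.exp(a)) / (b - a))

print(arc_values)
print(gaps, slope_gaps)
print(a < c < b, math.exp(b) > math.exp(a))
```

Because $e^c > 0$ and $b - a > 0$, the product $e^c(b - a)$ is positive, which is exactly the step in the argument that forces $f(b) > f(a)$.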


11.4 The Second Derivative and Graphs

So far, we haven’t paid much attention to the second derivative. We’ve only used it to define acceleration, and that’s about all. Actually, the second derivative can tell you a lot about what the graph of your function looks like. For example, suppose that you know that $f''(x) > 0$ for all $x$ in some interval $(a, b)$. If you think of the second derivative $f''$ as the derivative of the derivative, then you can write $(f')'(x) > 0$. This means that the derivative $f'(x)$ is always increasing.

So what? Well, if you know that the derivative is increasing, this means

that it’s getting more and more difficult to “climb up” the function. The

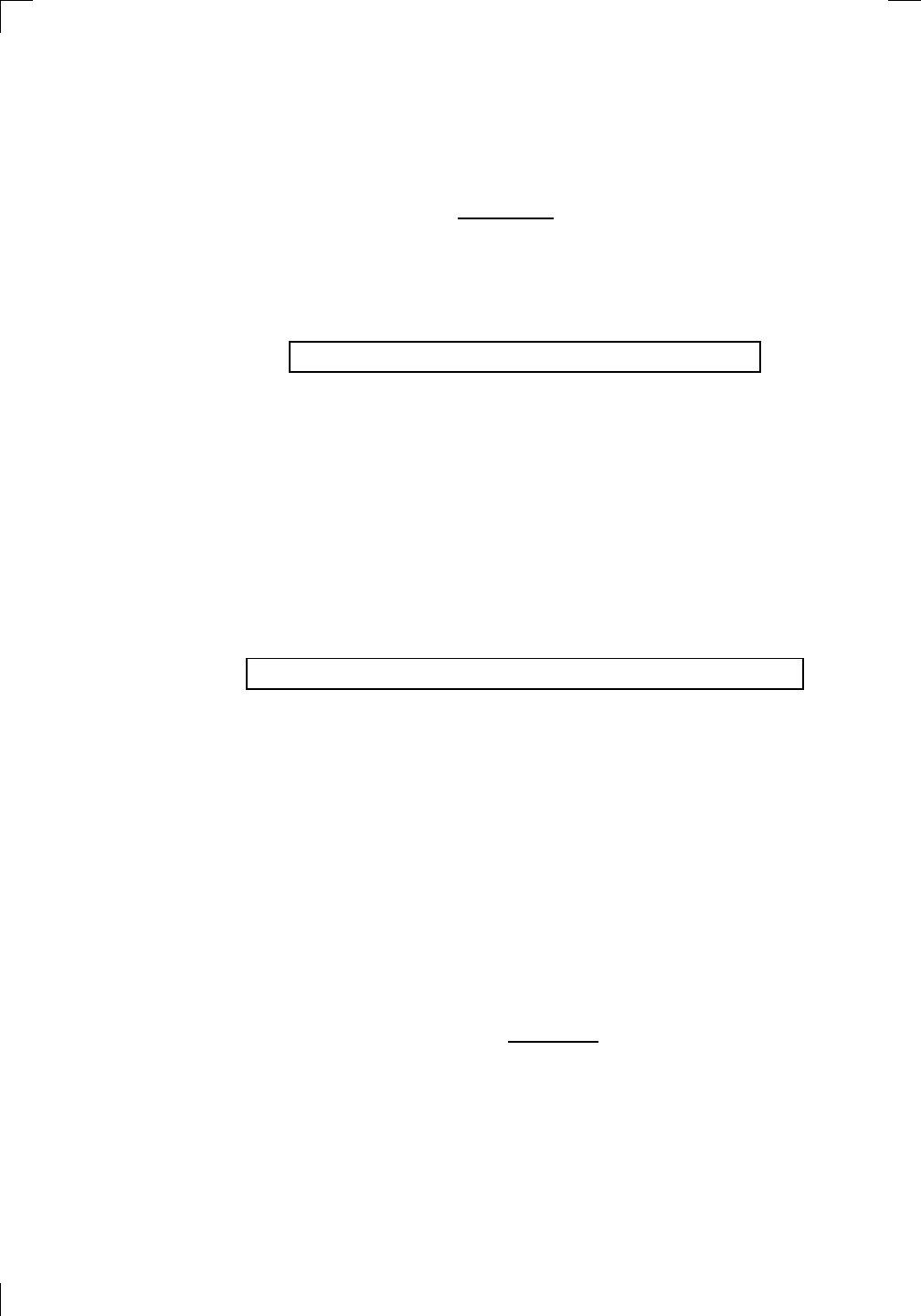

situation could look like this:


Just to the right of $x = a$, the mountain-climber has it nice and easy: the slope is negative. It’s getting harder all the time, though; first it gets flatter, until the climber reaches the flat part at $x = c$; then the going keeps on getting tougher as the slope increases up to $x = b$. The important thing is that the slope is increasing all the way from $x = a$ up to $x = b$. This is exactly what is implied by the inequality $f''(x) > 0$.
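To see the climber’s experience in numbers, here is a small sketch. The function `climb` is a hypothetical stand-in for the pictured curve, chosen as $(x - 1)^2$ so that $f''(x) = 2 > 0$, with $a = -1$, $c = 1$ and $b = 3$; none of these values come from the original figure, and `slope` is just a central-difference estimate.

```python
def slope(f, x, h=1e-5):
    """Central-difference estimate of the slope f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)

def climb(x):
    # Assumed example curve: f(x) = (x - 1)^2, whose second derivative is 2 > 0.
    return (x - 1) ** 2

# Walk from a = -1 to b = 3, passing the flat spot at c = 1.
points = [-1.0, 0.0, 1.0, 2.0, 3.0]
slopes = [slope(climb, x) for x in points]

for x, s in zip(points, slopes):
    print(f"x = {x:+.1f}   slope is about {s:+.3f}")
```

The slopes start negative (easy going), pass through roughly zero at the flat part, and end positive (hard going), rising at every step along the way.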

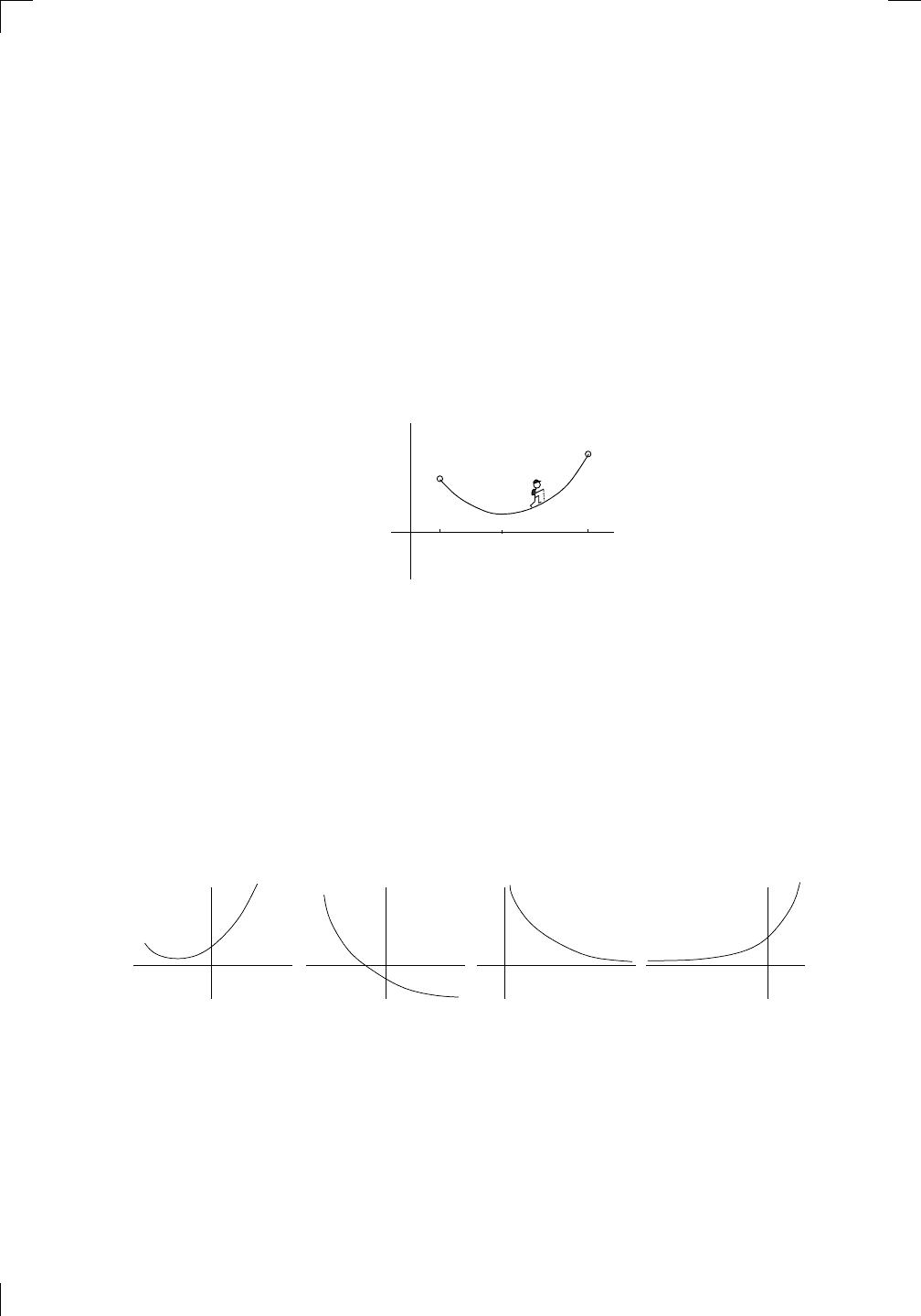

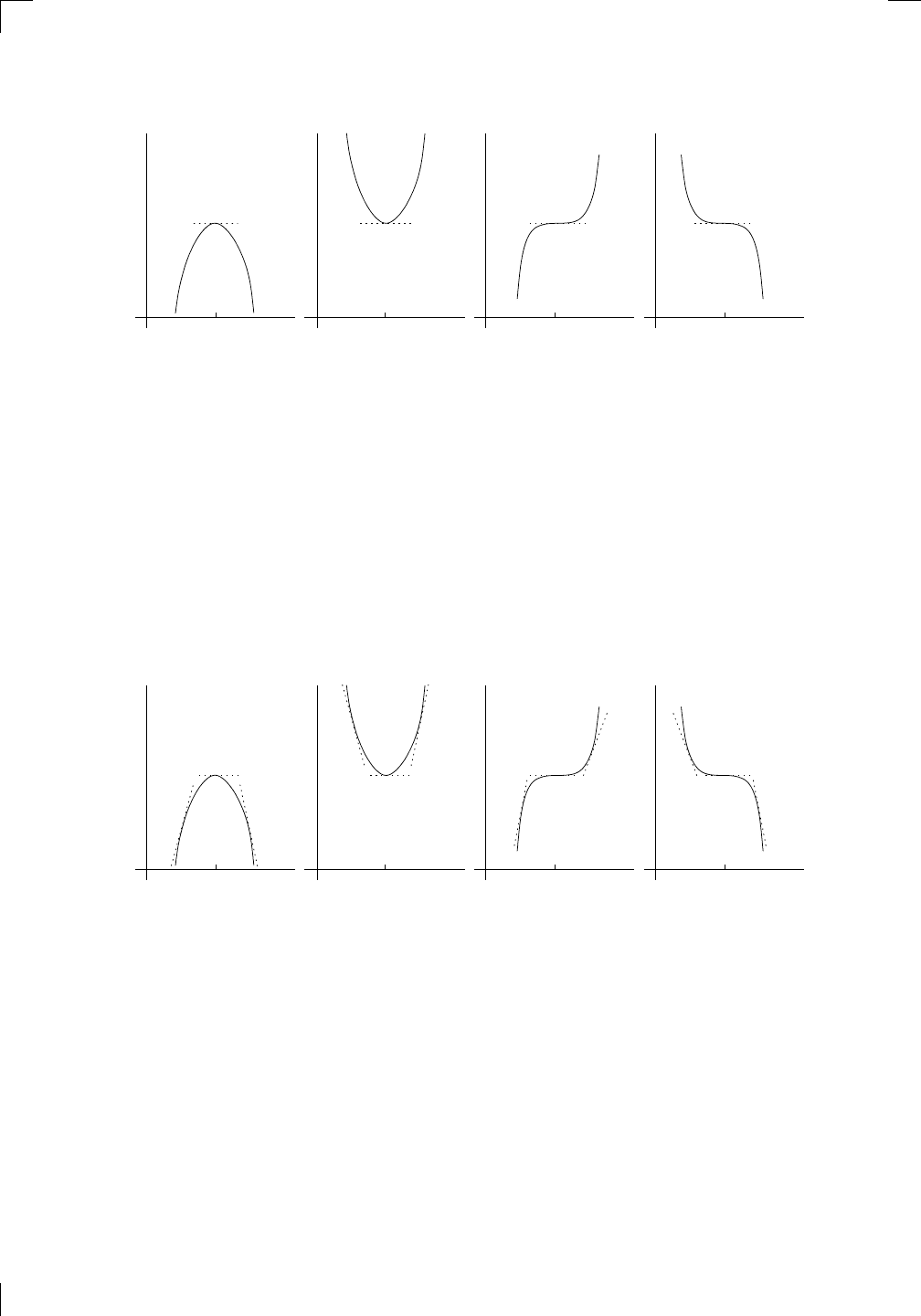

We need a way to describe this sort of behavior. We’ll say a function is concave up on an interval $(a, b)$ if its slope is always increasing on that interval, or equivalently if its second derivative is always positive on the interval (assuming that the second derivative exists). Here are some other examples of graphs of functions which are concave up on their whole domains:


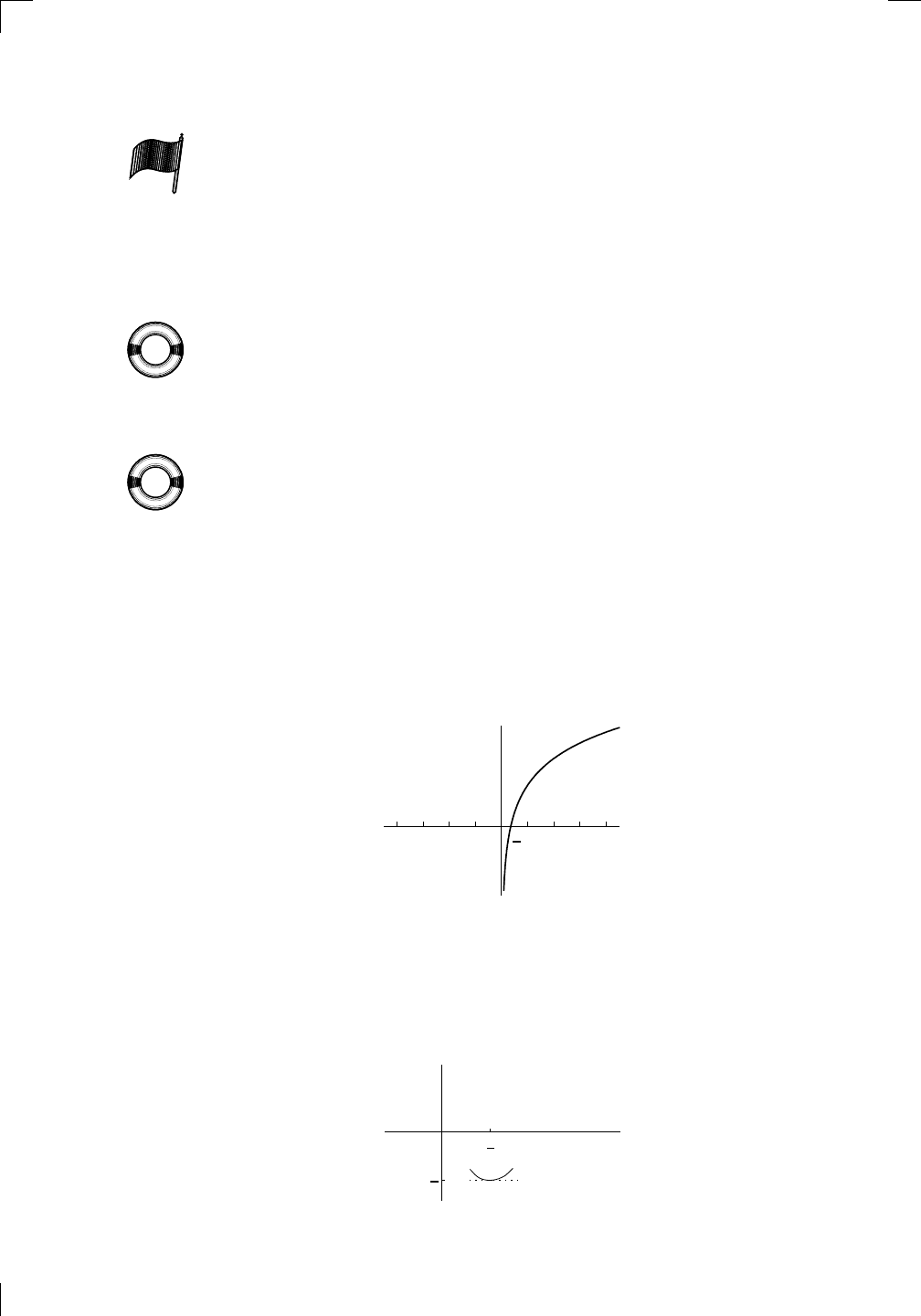

They all look like part of a bowl. Notice that you can’t tell anything about the sign of the first derivative $f'(x)$ just by knowing that $f''(x) > 0$. Indeed, the middle two graphs have negative first derivative; the rightmost graph has positive first derivative; while the leftmost graph has a first derivative that is negative and then positive.

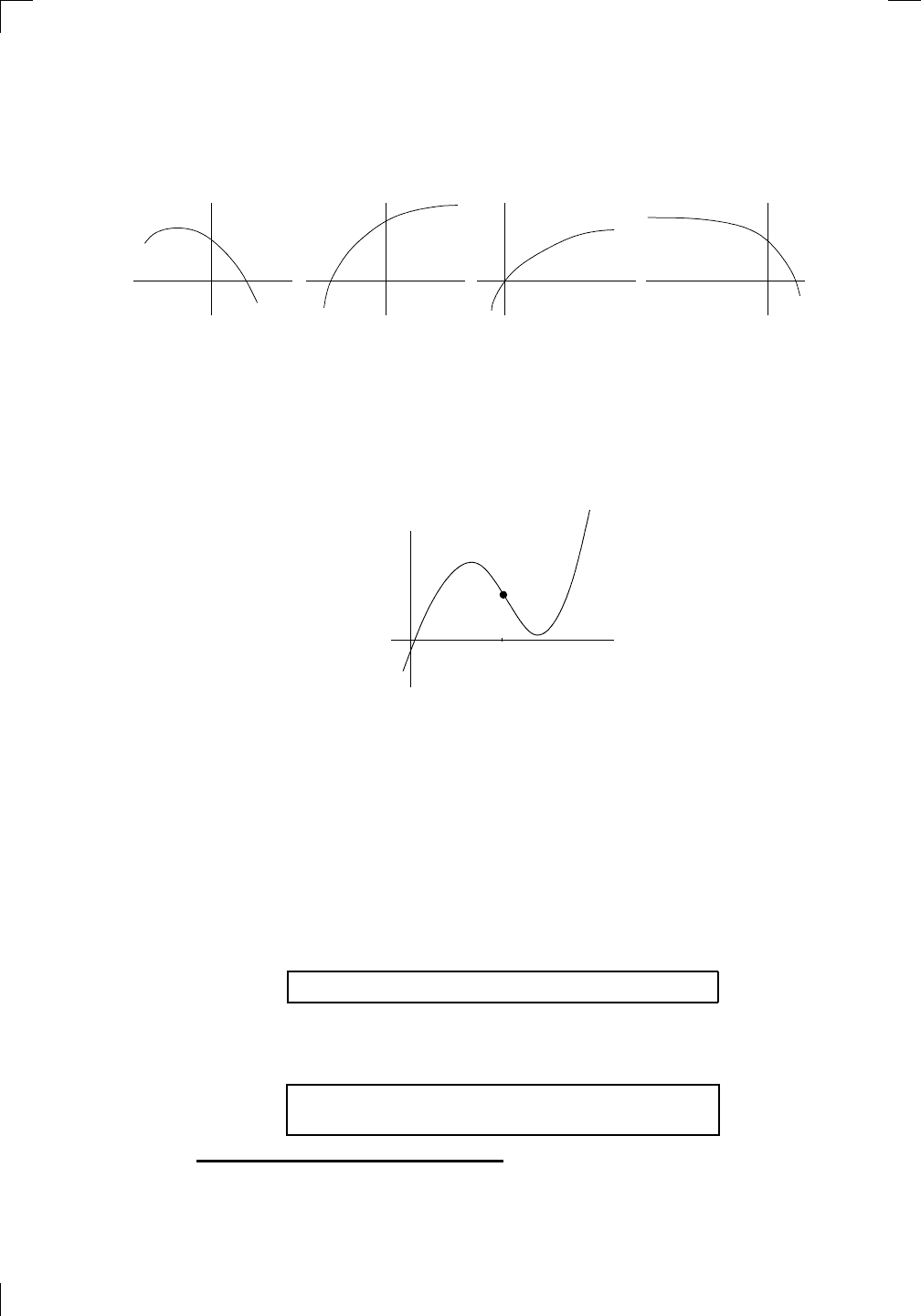

If instead the second derivative $f''(x)$ is negative, then everything is reversed. You end up with something more like an upside-down bowl, and we say that $f$ is concave down on any interval where its second derivative is always negative.∗ Here are some examples of functions which are concave down on their entire domain:


In this case, the derivative is always decreasing: it’s getting easier and easier to climb as you go along in each case. If you’re going uphill, this means it’s getting less and less steep, but if you’re going downhill, it’s getting steeper and steeper downhill (as you go from left to right).
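If you want to guess the concavity of a function without drawing it, one rough approach is to estimate the second derivative at a handful of points and look at the signs. The sketch below assumes that idea; `second_difference` and `concavity` are hypothetical helper names, the test functions are illustrative choices, and sampling can miss behavior between the sample points, so treat the answer as a hint and not a guarantee.

```python
import math

def second_difference(f, x, h=1e-3):
    """Estimate f''(x) by (f(x+h) - 2 f(x) + f(x-h)) / h^2."""
    return (f(x + h) - 2 * f(x) + f(x - h)) / h ** 2

def concavity(f, a, b, samples=50):
    """Label f on (a, b) using the sign of the estimated f'' at interior points."""
    xs = [a + (b - a) * (i + 1) / (samples + 1) for i in range(samples)]
    estimates = [second_difference(f, x) for x in xs]
    if all(e > 0 for e in estimates):
        return "concave up"
    if all(e < 0 for e in estimates):
        return "concave down"
    return "mixed or undetermined"

print(concavity(math.exp, -2, 2))          # slope of e^x keeps increasing
print(concavity(math.log, 0.5, 5))         # slope of ln(x) keeps decreasing
print(concavity(lambda x: x ** 3, -1, 1))  # x^3 bends down, then up
```

The third call is a reminder that a single label does not always fit: a function can be concave down on one part of an interval and concave up on another.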

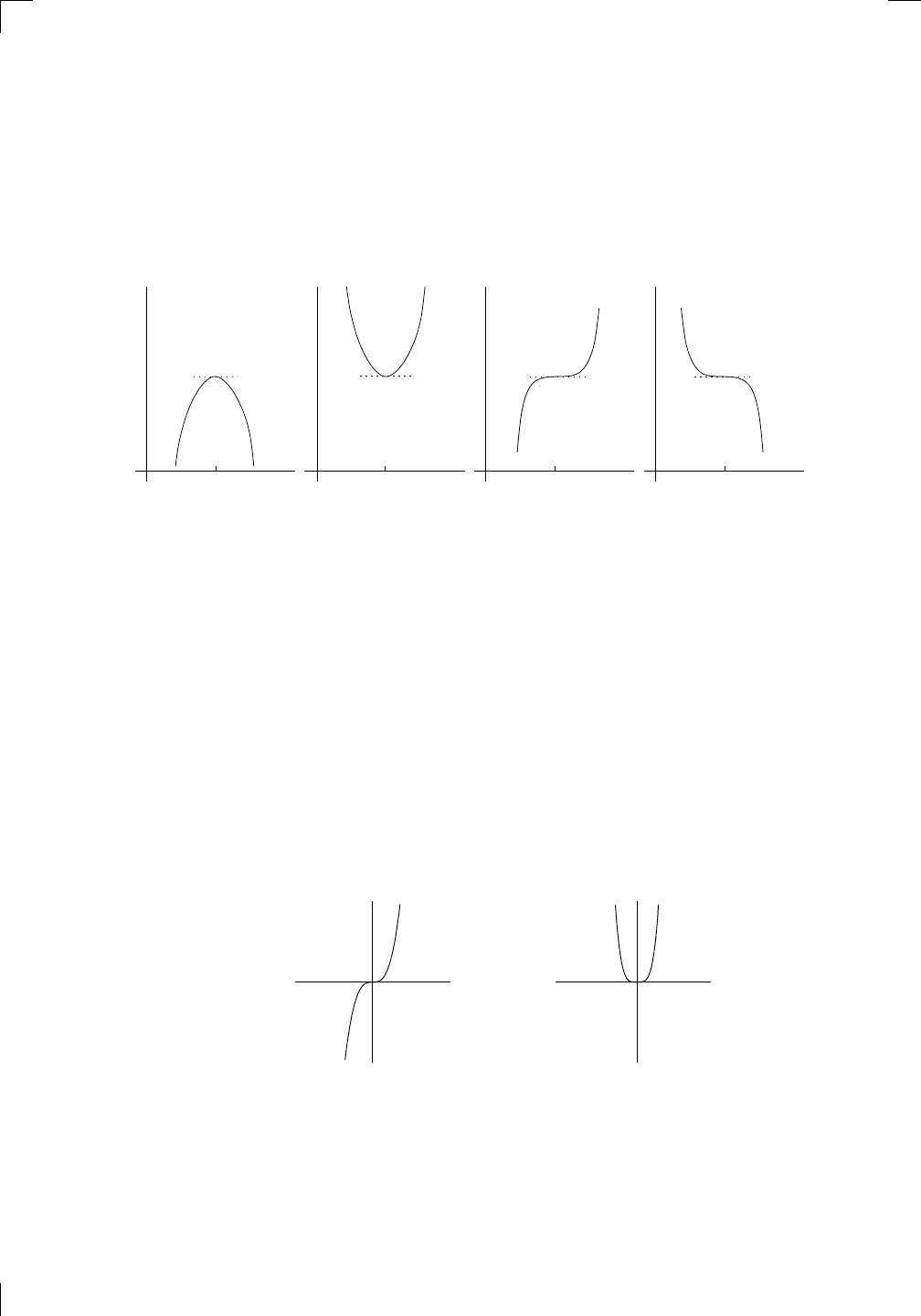

Of course, the concavity doesn’t have to be the same everywhere; it can change:


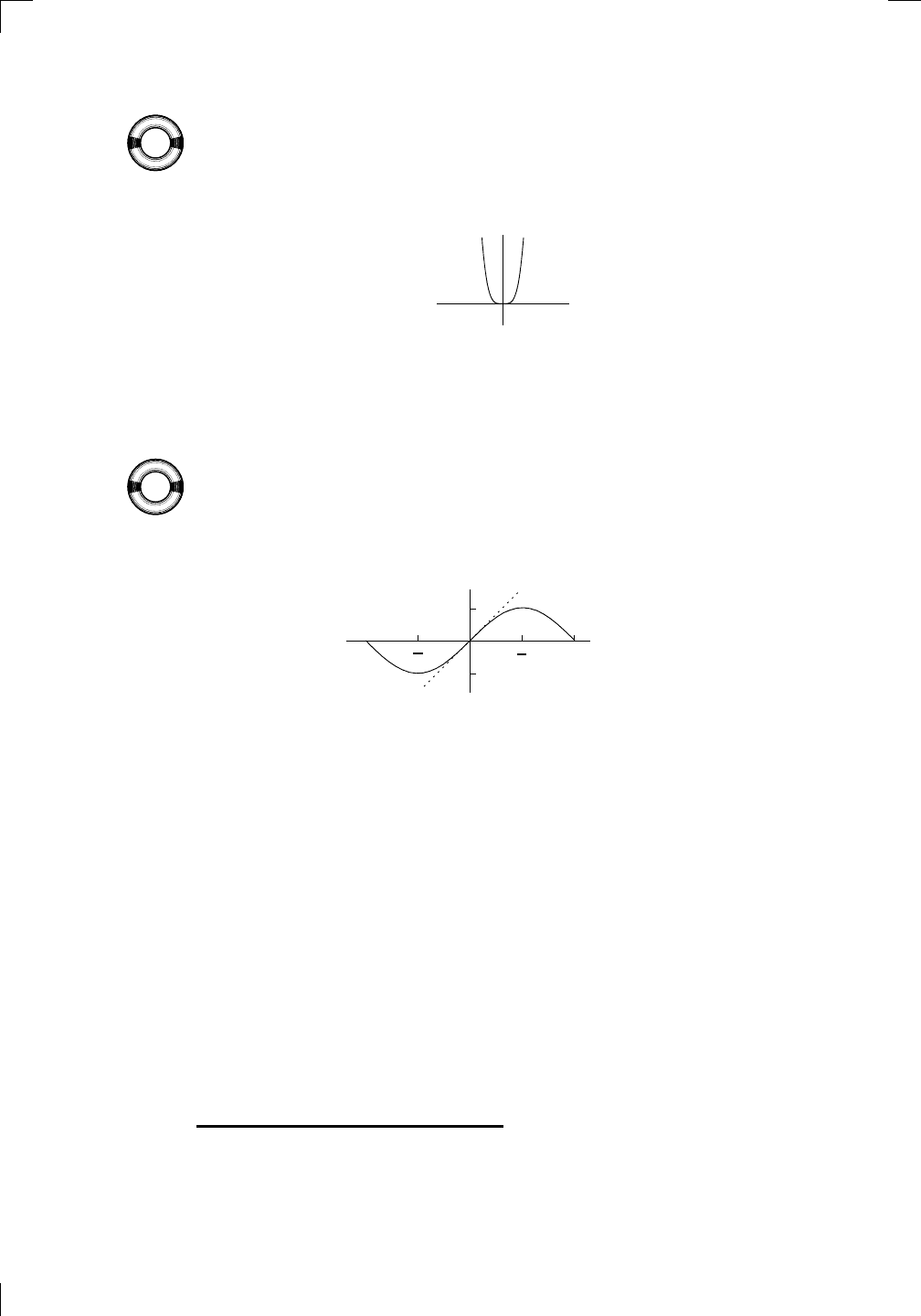

To the left of $x = c$, the curve is concave down, while to the right of $x = c$, the curve is concave up. We’ll say that the point $x = c$ is a point of inflection for $f$ because the concavity changes as you go from left to right through $c$.

11.4.1 More about points of inflection

In the above picture, we see that $f''(x) < 0$ to the left of $c$ and $f''(x) > 0$ to the right of $c$. What about $f''(c)$ itself? It must be 0, since everything is nice and smooth. In general, if $c$ is a point of inflection, then the sign of $f''(x)$ must be different on either side of $x = c$, assuming of course that $f''(x)$ actually exists when $x$ is near $c$. In that case, it must be true that

if $x = c$ is a point of inflection for $f$, then $f''(c) = 0$.

On the other hand, if $f''(c) = 0$, then $c$ may or may not be an inflection point! That is,

if $f''(c) = 0$, then it’s not always true that $x = c$ is a point of inflection for $f$.
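The difference between the two boxed statements is easy to see numerically. In the sketch below, `second_derivative_estimate` and `changes_concavity` are hypothetical helpers, and the test only compares one point on each side of $c$ (at distance `delta`), which is an assumption that works for simple polynomials but is not a general-purpose inflection test. The functions $x^3$ and $x^4$ are used as illustrative cases where $f''(0) = 0$.

```python
def second_derivative_estimate(f, x, h=1e-3):
    """Estimate f''(x) by (f(x+h) - 2 f(x) + f(x-h)) / h^2."""
    return (f(x + h) - 2 * f(x) + f(x - h)) / h ** 2

def changes_concavity(f, c, delta=0.1):
    """True if the estimated f'' has opposite signs at c - delta and c + delta."""
    left = second_derivative_estimate(f, c - delta)
    right = second_derivative_estimate(f, c + delta)
    return left * right < 0

def cube(x):
    return x ** 3      # f''(x) = 6x: negative left of 0, positive right of 0

def quartic(x):
    return x ** 4      # f''(x) = 12x^2: positive on both sides of 0

for name, f in [("x^3", cube), ("x^4", quartic)]:
    at_zero = second_derivative_estimate(f, 0.0)
    print(name, "f''(0) is about", at_zero,
          "| sign change at 0:", changes_concavity(f, 0.0))
```

Both functions have a second derivative that is (approximately) zero at $x = 0$, but only $x^3$ shows the sign change that a point of inflection requires.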

∗ If you have trouble remembering which one is concave up and which is concave down, the following rhyme might help: “like a cup, concave up; like a frown, concave down.”


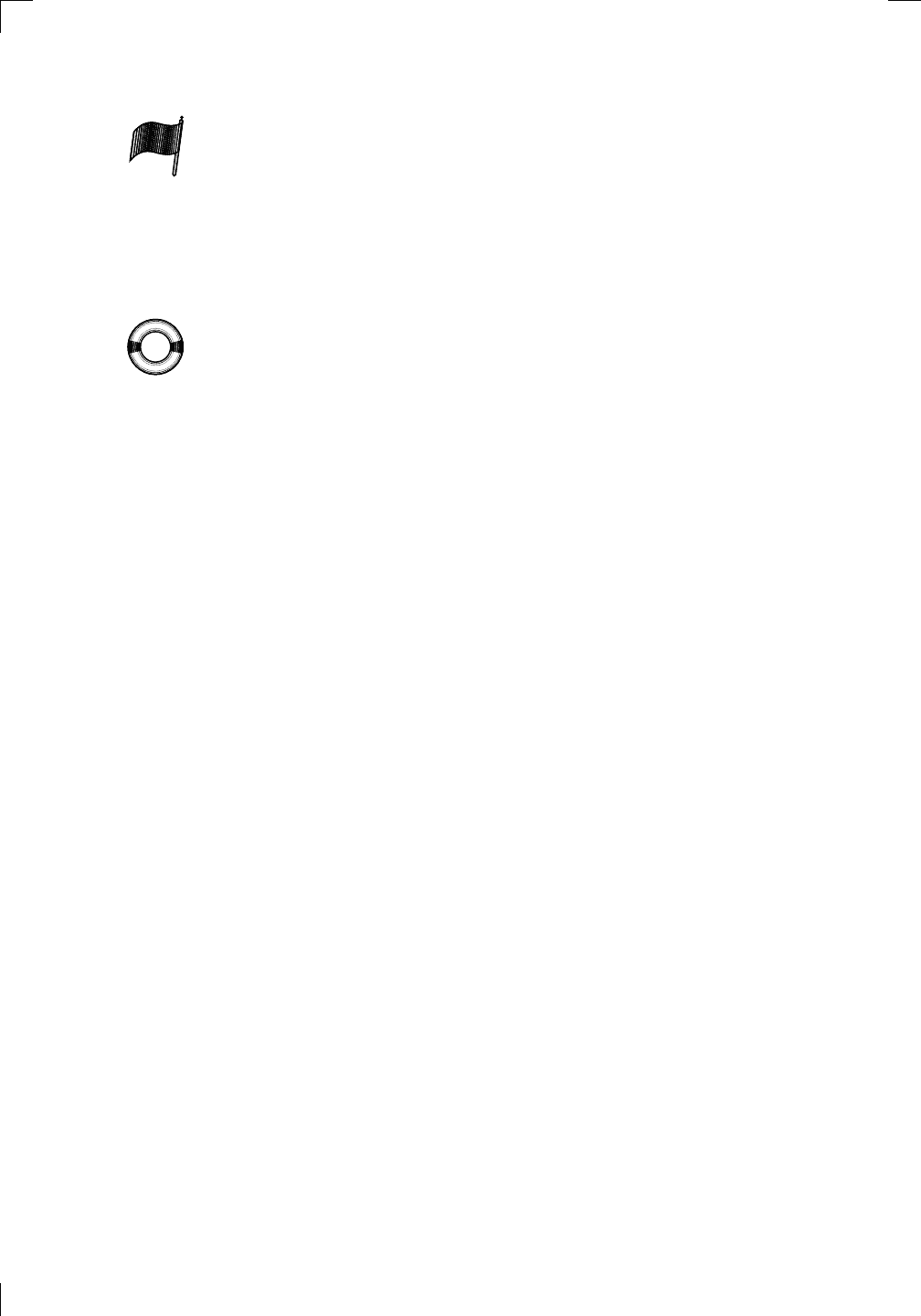

For example, suppose that $f(x) = x^4$. Then $f'(x) = 4x^3$ and $f''(x) = 12x^2$.


At $x = 0$, the second derivative vanishes, because $f''(0) = 12(0)^2 = 0$. So is $x = 0$ a point of inflection? The answer is no. Here’s a miniature graph of $y = x^4$:

[Figure: miniature graph of y = x^4]

You can see that f is always concave up; so the concavity doesn’t change around x = 0. That is, x = 0 is not a point of inflection, despite the fact that $f''(0) = 0$.
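To see why the concavity never changes, it may help to write out the derivatives. This is a short worked check, assuming (as the value 12(0)^2 above indicates) that the function in question is f(x) = x^4:

```latex
\[
f(x) = x^4, \qquad f'(x) = 4x^3, \qquad f''(x) = 12x^2 .
\]
\[
f''(x) = 12x^2 > 0 \ \text{for all } x \neq 0,
\quad\text{so } f'' \text{ is positive on both sides of } x = 0 .
\]
```

Since the second derivative has the same sign to the left and to the right of 0, the graph is concave up on both sides, and there is no change of concavity at x = 0.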

On the other hand, if you want to find points of inflection, you do need to find where the second derivative vanishes. That at least narrows down the list of potential candidates, which you can check one by one. For example, suppose that f(x) = sin(x). We have $f'(x) = \cos(x)$ and $f''(x) = -\sin(x)$.
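The idea of checking candidates one by one can be sketched numerically. The snippet below is only an illustration, not a proof: the helper name `sign_changes_at` and the step size `h` are assumptions made for this example, and sampling the second derivative at two nearby points is a rough heuristic that could miss unusual behavior very close to the candidate.

```python
import math

def sign_changes_at(f2, c, h=1e-3):
    """Return True if f2 has opposite signs just left and just right of c.

    f2 is the second derivative (supplied by hand), c is a candidate point
    where f2 vanishes, and h is a small step (an assumed, hypothetical choice).
    """
    left = f2(c - h)
    right = f2(c + h)
    return left * right < 0

def quartic_f2(x):
    # Second derivative of f(x) = x^4
    return 12 * x**2

def sine_f2(x):
    # Second derivative of f(x) = sin(x)
    return -math.sin(x)

# x = 0 is a candidate for x^4, but the sign of f'' does not change there.
print(sign_changes_at(quartic_f2, 0.0))

# For sin(x), f'' vanishes at 0 and at pi; the sign changes at both.
print(sign_changes_at(sine_f2, 0.0))
print(sign_changes_at(sine_f2, math.pi))
```

With these inputs the check reports no sign change for x^4 at 0, in line with the discussion above, and a sign change for sin(x) at both 0 and π, so those candidates pass this test as points of inflection.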
