Birge J.R., Louveaux F. Introduction to Stochastic Programming


274 6 Multistage Stochastic Programs

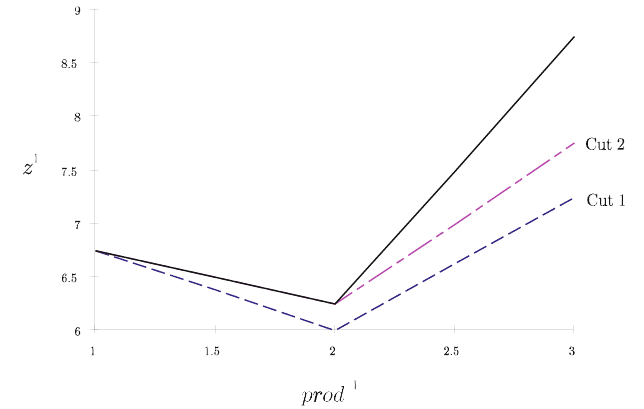

In Figure 1, the solid line gives the objective value in (1.7) as a function of total production $\mathrm{prod}^1 = x^1 + w^1$ in the first period. The dashed lines correspond to the cuts made by the algorithm (Cuts 1 and 2). The first cut was $2.25y^1 + \theta \ge 5.75$ from (1.15)–(1.16) on Iteration 2. Because $y^1 = x^1 + w^1 - 1$, we can substitute for $y^1$ to obtain $2.25x^1 + 2.25w^1 + \theta \ge 8$. The objective in (1.17) is $z^1 = x^1 + 3w^1 + 0.5y^1 + \theta$, so, combined with $1 \le x^1 \le 2$, we can substitute $\theta \ge 8 - 2.25(\mathrm{prod}^1)$ to obtain $z^1(\mathrm{prod}^1) = 7.5 + (1.5)\min\{2, \mathrm{prod}^1\} + 3.5(\mathrm{prod}^1 - 2)^+ - 2.25\,\mathrm{prod}^1$, where $\mathrm{prod}^1 \ge 1$. This can also be written as:

$$
z^1(\mathrm{prod}^1) =
\begin{cases}
7.5 - 0.75\,\mathrm{prod}^1 & \text{if } \mathrm{prod}^1 \le 2, \\
3.5 + 1.25\,\mathrm{prod}^1 & \text{if } \mathrm{prod}^1 > 2,
\end{cases}
\tag{1.18}
$$

which corresponds to the wide dashed line (Cut 1) in Figure 1.

Fig. 1 The first period objective function (solid line) for the example and cuts (dashed lines) generated by the nested L-shaped method.

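The second cut occurs on Iteration 4 (verify this in Exercise 2) as $2x^1 + 2w^1 + \theta \ge 7.75$, which yields $z^1(\mathrm{prod}^1) = x^1 + 3w^1 + 0.5y^1 + \theta \ge 7.25 + (1.5)\min\{2, \mathrm{prod}^1\} + 3.5(\mathrm{prod}^1 - 2)^+ - 2\,\mathrm{prod}^1$ or

$$
z^1(\mathrm{prod}^1) \ge
\begin{cases}
7.25 - 0.5\,\mathrm{prod}^1 & \text{if } \mathrm{prod}^1 \le 2, \\
3.25 + 1.5\,\mathrm{prod}^1 & \text{if } \mathrm{prod}^1 > 2.
\end{cases}
\tag{1.19}
$$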

This cut corresponds to the narrow width dashed line (Cut 2) in Figure 1.
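The substitutions behind (1.18) and (1.19) can be checked numerically. The following is a minimal sketch, assuming (as the $\min\{2, \mathrm{prod}^1\}$ and $(\mathrm{prod}^1 - 2)^+$ terms suggest) that regular production is used up to 2 and overtime covers the remainder. The function names are illustrative and do not come from the text.

```python
def z1_with_cut(prod1, rhs, slope):
    """x1 + 3*w1 + 0.5*y1 + theta, with theta set to the cut bound rhs - slope*prod1."""
    x1 = min(2.0, prod1)          # regular production, capped at 2 (assumed split)
    w1 = max(prod1 - 2.0, 0.0)    # overtime covers what regular time cannot
    y1 = prod1 - 1.0              # from y1 = x1 + w1 - 1
    theta = rhs - slope * prod1
    return x1 + 3.0 * w1 + 0.5 * y1 + theta


def cut1_piecewise(prod1):
    """Right-hand side of (1.18)."""
    return 7.5 - 0.75 * prod1 if prod1 <= 2 else 3.5 + 1.25 * prod1


def cut2_piecewise(prod1):
    """Right-hand side of (1.19)."""
    return 7.25 - 0.5 * prod1 if prod1 <= 2 else 3.25 + 1.5 * prod1


# Grid on prod1 >= 1 in steps of 0.01.
grid = [1 + i / 100 for i in range(301)]

# Largest gap between the substituted objective and the piecewise forms.
max_gap_cut1 = max(abs(z1_with_cut(p, 8.0, 2.25) - cut1_piecewise(p)) for p in grid)
max_gap_cut2 = max(abs(z1_with_cut(p, 7.75, 2.0) - cut2_piecewise(p)) for p in grid)

# Minimizer of the lower envelope with Cut 1 only, then with both cuts.
argmin_cut1 = min(grid, key=cut1_piecewise)
argmin_both = min(grid, key=lambda p: max(cut1_piecewise(p), cut2_piecewise(p)))
```

On this grid both minimizers fall at a total production of 2, in line with what is read from Figure 1.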

6.1 Nested Decomposition Procedures 275

The optimal value and solution in terms of $\mathrm{prod}^1$ can be read from Figure 1 as each cut is added. With only Cut 1, the lowest value of $z^1$ occurs when $\mathrm{prod}^1 = 2$. With Cuts 1 and 2, the minimum is also achieved at $\mathrm{prod}^1 = 2$. Note that the first cut is not, however, a facet of the objective function's graph. The cuts meet the objective at $\mathrm{prod}^1 = 1$ and $\mathrm{prod}^1 = 2$, respectively, but they need not even do this, as we mentioned earlier (see Exercise 3). The other parts of the Period 1 cuts are generated from bounds on $Q^2_2$.

This example illustrates some of the features of the nested L-shaped method. Besides our not being guaranteed of obtaining a support of the function at each step, another possible source of delay in the algorithm's convergence is degeneracy. As the example illustrates, the solutions at each step occur at the links of the piecewise linear pieces generated by the method (Exercises 5 and 6). At these places, many bases may be optimal so that several bases may be repeated. Some remedies are possible, as in Birge [1980] and, for deterministic problems, Abrahamson [1983].

As with the standard two-stage L-shaped method, the nested L-shaped method acquires its greatest gains by combining the solutions of many subproblems through bunching (or sifting). In addition, multicuts are valuable in multistage as well as two-stage problems. Infanger [1991, 1994] has also suggested generating many cuts simultaneously when future scenarios all have similar structure. This procedure may make bunching efficient for periods other than $H$ by making every constraint matrix identical for all scenarios in a given period. In this way, only objective and right-hand side coefficients vary among the different scenarios.

In terms of primal decomposition, we mentioned the work of Noël and Smeers

at the outset of this chapter. They apply nested Dantzig-Wolfe decomposition to the

dual of the original problem. As we saw in Chapter 5, this is equivalent to applying

outer linearization to the primal problem. The only difference is that they allow for

some nonlinear terms in their constraints, which would correspond to a nonlinear

objective in the primal model. Because the problems are still convex, nonlinearity

does not really alter the algorithm. The only problem may be in the finiteness of

convergence.

The advantage of a primal or dual implementation generally rests in the problem

structure, although primal or dual simplex may be used in either method, making

them indistinguishable. Gassmann [1990] presents some indication that dual iterations may be preferred in bunching. In general, many primal columns and few rows would tend to favor a primal approach (outer linearization as in the L-shaped

method) while few columns and many rows would tend to favor a dual approach.

In any case, the form of the algorithm and all proofs of convergence apply to either

form.

While nested decomposition (and other linearization methods) is particularly well-suited for linear problems, the general methods apply equally well to convex nonlinear problems (i.e., problems with convex, time-separable objectives and convex constraints; see Exercise 9). Birge and Rosa [1996] describe a nested decomposition of this form applied to global energy-economy-environment interaction models. They use an active set approach for the subproblems, but interior point methods might also be used.

Exercises

1. Verify that the infeasibility condition is as given in Step 1 of the nested L-shaped method. (Hint: note that if $x^t_k$ satisfies (1.2) and (1.3), then there exists $\theta^t_k$ such that $(x^t_k, \theta^t_k)$ satisfy (1.4).)

2. Continue Example 1 with the nested L-shaped method until you obtain an optimal solution.

3. Construct a multistage example in which a cut generated by the second period in following the nested L-shaped method does not meet $Q^1(x^1)$ for any value of $x^1$, i.e., $-E^1_1 x^1 + e^1_1 < Q(x^1)$.

4. Show that the situation in (1.1) is not possible if the fast-forward protocol is always followed.

5. Suppose a feasibility cut (1.3) is active for $x^t_k$ for any $t$ and $k$. Show that every basic feasible solution of NLDS$(t+1, j)$ with input $x^t_k$ for some scenario $j \in D^{t+1}(k)$ must be degenerate.

6. Suppose two optimality cuts (1.4) are active for $(x^t_k, \theta^t_k)$ for any $t$ and $k$. Show that either the subproblems generate a new cut with $\bar{\theta}^t_k > \theta^t_k$ or an optimal solution of NLDS$(t+1, j)$ with input $x^t_k$ for some scenario $j \in D^{t+1}(k)$ must be degenerate.

7. Using four processors, what efficiency can be gained by solving the preceding example in parallel? Find the utilization of each processor and the speed-up of elapsed time, assuming each subproblem requires the same solution time.

8. Suppose $\theta^1$ is broken into separate components for $Q^2_1$ and $Q^2_2$ as in the two-stage multicut approach. How does that alter the solution of the example?

9. Suppose that the objective in each period $t$ for each scenario $k$ is a general convex function $f^t_k(x^{t-1}_k, x^t_k)$ and, in addition to the linear constraints, there is an additional convex constraint, $g^t_k(x^{t-1}_k, x^t_k) \le 0$. Assuming relatively complete recourse for simplicity and that your solver can return the primal solution and dual multipliers for the K-K-T system of equations, describe how you would modify the nested decomposition steps to accommodate these nonlinear functions.

6.2 Quadratic Nested Decomposition

Decomposition techniques for multistage nonlinear programs are available for the case in which the objective function is quadratic convex, the constraint set polyhedral, and the random variables discrete. For the sake of clarity, we repeat the recursive definition of the deterministic equivalent program, already given in Section 3.4.

$$
\text{(MQSP)} \quad
\begin{aligned}
\min\ & z^1(x^1) = (c^1)^T x^1 + (x^1)^T D^1 x^1 + \mathcal{Q}^2(x^1) \\
\text{s. t. } & W^1 x^1 = h^1, \\
& x^1 \ge 0,
\end{aligned}
\tag{2.1}
$$

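where

$$
\begin{aligned}
Q^t(x^{t-1}, \xi^t(\omega)) = \min\ & (c^t(\omega))^T x^t(\omega) + (x^t(\omega))^T D^t(\omega)\, x^t(\omega) + \mathcal{Q}^{t+1}(x^t(\omega)) \\
\text{s. t. } & W^t x^t(\omega) = h^t(\omega) - T^{t-1}(\omega)\, x^{t-1}, \\
& x^t(\omega) \ge 0,
\end{aligned}
\tag{2.2}
$$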

$$
\mathcal{Q}^{t+1}(x^t) = E_{\xi^{t+1}}\, Q^{t+1}(x^t, \xi^{t+1}(\omega)), \quad t = 1, \ldots, H-1,
\tag{2.3}
$$

and

$$
\mathcal{Q}^{H+1}(x^H) = 0 .
\tag{2.4}
$$

In MQSP, $D^t$ is an $n_t \times n_t$ matrix. All other matrices have the dimensions defined in the linear case. The random vector, $\xi^t(\omega)$, is formed by the elements of $c^t(\omega)$, $h^t(\omega)$, $T^{t-1}(\omega)$, and $D^t(\omega)$. We keep the notation that $\xi^t$ is an $N_t$-vector on $(\Omega, W^t, P)$, with support $\Xi^t$. Finally, we again define $K^t = \{x^t \mid \mathcal{Q}^{t+1}(x^t) < \infty\}$.

We also define $z^t(x^t) = (c^t)^T x^t + (x^t)^T D^t x^t + \mathcal{Q}^{t+1}(x^t)$.

Theorem 2. If the matrices $D^t(\omega)$ are positive semi-definite for all $\omega \in \Omega$ and $t = 1, \ldots, H$, then the sets $K^t$ and the functions $\mathcal{Q}^{t+1}(x^t)$ are convex for $t = 1, \ldots, H-1$. If $\Xi^t$ is also finite for $t = 2, \ldots, H$, then $K^t$ is polyhedral. Moreover, $z^t(x^t)$ is either identically $-\infty$ or there exists a decomposition of $K^t$ into a polyhedral complex such that the $t$th-stage deterministic equivalent program (2.2) is a piecewise quadratic program.

Proof: The piecewise quadratic property of (2.2) is obtained by inductively applying to each cell of the polyhedral complex of $K^t$ the result that if $z^t(\cdot)$ is a finite positive semi-definite quadratic form, there exists a piecewise affine continuous optimal decision rule for (2.2). All other results were given in Section 3.4.

We now describe a nested decomposition algorithm for MQSP first presented in Louveaux [1980]. For simplicity in the presentation of the algorithms, we assume relatively complete recourse. This means that we skip the step that consists of generating feasibility cuts. If needed, those cuts are generated exactly as in the multistage linear case. We keep the notation of $a(k)$ for the ancestor scenario of $k$ at stage $t-1$. As in Section 6.1, $c^t_k$, $D^t_k$, and $\mathcal{Q}^{t+1}_k$ represent realizations of $c^t$, $D^t$, and $\mathcal{Q}^{t+1}$ for scenario $k$ and $x^t_k$ is the corresponding decision vector. In Stage 1, we use the notations $z^1$ and $z^1_1$, and $x^1$ and $x^1_1$, as equivalent.

Nested PQP Algorithm for MQSP

Step 0. Set $t = 1$, $k = 1$, $C^1 = S^1 = K^1$. Choose $x^1_1 \in K^1$.

Step 1. If $t = H$, go to Step 2. For $i = t+1, \ldots, H$, let $k = 1$, $z^i_1(x^i_1) = (c^i_1)^T x^i_1 + (x^i_1)^T D^i_1 x^i_1$ and $C^i_1(x^{i-1}_{a(1)}) = S^i_1(x^{i-1}_{a(1)}) = K^i(x^{i-1}_{a(1)})$. Choose $x^i_1 \in K^i(x^{i-1}_{a(1)})$. Set $t = H$.

Step 2. Find $v \in \arg\min\{z^t_k(x^t_k) \mid x^t_k \in S^t_k(x^{t-1}_{a(k)})\}$. Find $w \in \arg\min\{z^t_k(x^t_k) \mid x^t_k \in C^t_k(x^{t-1}_{a(k)})\}$. If $w$ is the limiting point on a ray on which $z^t_k(\cdot)$ is decreasing to $-\infty$, then $(\mathrm{DEP})^t_k$ is unbounded and the algorithm terminates.

Step 3. If $\nabla^T z^t_k(w)(v - w) = 0$, go to Step 4. Otherwise, redefine

$$
S^t_k(x^{t-1}_{a(k)}) \leftarrow S^t_k(x^{t-1}_{a(k)}) \cap \{x^t_k \mid \nabla^T z^t_k(w)(x^t_k - w) \le 0\} .
$$

Let $x^t_k = v$, $z^t_k = (c^t_k)^T x^t_k + (x^t_k)^T D^t_k x^t_k$ and $C^t_k = K^t(x^{t-1}_{a(k)})$. Go to Step 1.

Step 4. If $t = 1$, stop; $w$ is an optimal first-period decision. Otherwise, find the cell $G^t_k(x^{t-1}_{a(k)})$ containing $w$ and the corresponding quadratic form $Q^t_k(x^{t-1}_{a(k)})$. Redefine

$$
\begin{aligned}
z^{t-1}_{a(k)}(x^{t-1}_{a(k)}) &\leftarrow z^{t-1}_{a(k)}(x^{t-1}_{a(k)}) + p^t_k\, Q^t_k(x^{t-1}_{a(k)}), \\
C^{t-1}_{a(k)}(x^{t-1}_{a(k)}) &\leftarrow C^{t-1}_{a(k)}(x^{t-1}_{a(k)}) \cap G^t_k(x^{t-1}_{a(k)}) .
\end{aligned}
$$

If $k = K^t$, let $t \leftarrow t - 1$, go to Step 2. Otherwise, let $k \leftarrow k + 1$, $z^t_k(x^t_k) = (c^t_k)^T x^t_k + (x^t_k)^T D^t_k x^t_k$, $C^t_k = S^t_k(x^{t-1}_{a(k)}) = K^t(x^{t-1}_{a(k)})$. Choose $x^t_k \in S^t_k(x^{t-1}_{a(k)})$. Go to Step 1.
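The optimality test and cut of Step 3 can be sketched in a few lines. This is only an illustration under stated assumptions: the current objective is taken to be a single quadratic form $c^T x + x^T D x$ (any constant term does not affect the gradient), the points `v` and `w` are supplied by the caller rather than computed by a solver, and the data and function names are hypothetical.

```python
def gradient(c, D, x):
    """Gradient of z(x) = c^T x + x^T D x, namely c + (D + D^T) x."""
    n = len(x)
    return [c[i] + sum((D[i][j] + D[j][i]) * x[j] for j in range(n))
            for i in range(n)]


def step3(c, D, v, w, tol=1e-9):
    """Return None if grad z(w)^T (v - w) = 0, else the cut (a, b) meaning a.x <= b."""
    g = gradient(c, D, w)
    test = sum(gi * (vi - wi) for gi, vi, wi in zip(g, v, w))
    if abs(test) <= tol:
        return None                      # Step 3 test holds: proceed to Step 4
    rhs = sum(gi * wi for gi, wi in zip(g, w))
    return g, rhs                        # cut: grad z(w)^T x <= grad z(w)^T w


# Hypothetical three-variable data, for illustration only.
c = [1.0, 2.0, 0.25]
D = [[0.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 0.0]]
w = [1.0, 0.0, 1.0]   # assumed minimizer over the current cell
v = [0.0, 0.0, 0.0]   # assumed minimizer over the larger set S

cut = step3(c, D, v, w)          # v differs from w in a descent direction: a cut
no_cut = step3(c, D, w, w)       # v == w: the test holds trivially
```

The cut is written as $\nabla^T z(w)\, x \le \nabla^T z(w)\, w$, which is the inequality intersected with $S^t_k$ in Step 3.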

Theorem 3. The nested PQP algorithm terminates in a finite number of steps by either detecting an unbounded solution or finding an optimal solution of the multistage quadratic stochastic program with relatively complete recourse.

Proof: The proof of the finite convergence of the PQP algorithm in Section 5.3

amounts to showing that Step 2 of the algorithm can be performed at most a finite

number of times. The same result holds for a given piecewise quadratic program

(2.2) in the nested sequence. The theorem follows from the observations that there

is only a finite number of different problems (2.2) and that all other steps of the

algorithm are finite.

Numerical experiments are reported in Louveaux [1980]. It should be noted that

the MQSP easily extends to the multistage piecewise convex case. The limit there

is that the objective function and the description of the cell are usually much more

difficult to obtain. One simple example is proposed in Exercise 3.


It is interesting to observe that the MQSP method has a tendency to require few

iterations when the quadratic terms play a significant role and a good starting point is

chosen. (This probably relates to the good behavior of regularized decomposition.)

Example 1 (continued)

Assume that the cost of overtime is now quadratic (for example, larger increases of salary are needed to convince more people to work overtime). We replace everywhere $3.0w^t_k$ by $2.0w^t_k + (w^t_k)^2$. Assume all other data are unchanged. Take as the starting point a situation where $0 \le y^1 \le 1$, $0 \le y^2_k \le 1$, $k = 1, 2$. (It is relatively easy to see what the corresponding values for the other first- and second-stage variables should be.) We now proceed backward. Let $t = 3$.

i) $t = 3$, $k = 1$. We solve

$$
\begin{aligned}
\min\ & x^3_1 + 2w^3_1 + (w^3_1)^2 \\
\text{s. t. } & y^2_1 + x^3_1 + w^3_1 = 1, \quad x^3_1 \le 2, \\
& x^3_1, w^3_1 \ge 0,
\end{aligned}
$$

where inventory at the end of Period 3 has been omitted for simplicity. The solution is easily seen to be $x^3_1 = 1 - y^2_1$, $w^3_1 = 0$ and is valid for $0 \le y^2_1 \le 1$. It follows that $Q^3_1(y^2_1) = 1 - y^2_1$.

ii) $t = 3$, $k = 2$. We solve

$$
\begin{aligned}
\min\ & x^3_2 + 2w^3_2 + (w^3_2)^2 \\
\text{s. t. } & y^2_1 + x^3_2 + w^3_2 = 3, \quad x^3_2 \le 2, \\
& x^3_2, w^3_2 \ge 0 .
\end{aligned}
$$

The solution is now $x^3_2 = 2$, $w^3_2 = 1 - y^2_1$, valid for $0 \le y^2_1 \le 1$. It yields $Q^3_2(y^2_1) = 4 - 2y^2_1 + (1 - y^2_1)^2$.

Combining (i) and (ii), we obtain

$$
\mathcal{Q}^2_1(y^2_1) = \tfrac{1}{2} Q^3_1(y^2_1) + \tfrac{1}{2} Q^3_2(y^2_1) = \frac{5}{2} - \frac{3}{2}\, y^2_1 + \frac{(1 - y^2_1)^2}{2}
$$

and

$$
C^2_1(y^2_1) = \{y^2_1 \mid 0 \le y^2_1 \le 1\} .
$$
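The two Stage 3 solutions and their average can be verified with a short script. This is a sketch, assuming the greedy rule that regular production is used before overtime (its marginal cost of 1 is below the overtime marginal cost of $2 + 2w$); the function names are illustrative.

```python
def stage3_cost(y2, demand):
    """min x + 2w + w^2  s.t.  y2 + x + w = demand, 0 <= x <= 2, w >= 0."""
    need = demand - y2           # amount still to be produced (assumed >= 0)
    x = min(2.0, need)           # regular time first, up to its capacity of 2
    w = need - x                 # overtime covers the remainder
    return x + 2.0 * w + w * w


def expected_stage3(y2):
    """Average of the two equally likely demand scenarios (1 and 3)."""
    return 0.5 * stage3_cost(y2, 1.0) + 0.5 * stage3_cost(y2, 3.0)


def closed_form(y2):
    """5/2 - (3/2) y + (1 - y)^2 / 2, the expression stated for 0 <= y <= 1."""
    return 2.5 - 1.5 * y2 + (1.0 - y2) ** 2 / 2.0


# Largest gap on a grid over the cell 0 <= y2 <= 1.
max_gap = max(abs(expected_stage3(i / 100) - closed_form(i / 100))
              for i in range(101))
```

The comparison is restricted to $0 \le y^2_1 \le 1$, the cell on which the text states the formula to be valid.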

iii) and iv) Because the randomness is only in the right-hand side, we conclude that cases (iii) and (iv) are identical to (i) and (ii), respectively. Hence,

$$
\mathcal{Q}^2_2(y^2_2) = \frac{5}{2} - \frac{3}{2}\, y^2_2 + \frac{(1 - y^2_2)^2}{2}
\quad \text{and} \quad
C^2_2(y^2_2) = \{y^2_2 \mid 0 \le y^2_2 \le 1\} .
$$


Next, we have t = 2.

i) $t = 2$, $k = 1$. The objective $z^2_1$ is computed as

$$
z^2_1 = x^2_1 + 2w^2_1 + (w^2_1)^2 + 0.5\,y^2_1 + \frac{5}{2} - \frac{3}{2}\, y^2_1 + \frac{(1 - y^2_1)^2}{2},
$$

i.e.,

$$
z^2_1 = \frac{5}{2} + x^2_1 + 2w^2_1 + (w^2_1)^2 - y^2_1 + \frac{(1 - y^2_1)^2}{2} .
$$

The constraint sets are

$$
S^2_1 = \{(x^2_1, w^2_1, y^2_1) \mid y^1 + x^2_1 + w^2_1 - y^2_1 = 1,\ 0 \le x^2_1 \le 2,\ x^2_1, w^2_1, y^2_1 \ge 0\}
$$

and

$$
C^2_1 = S^2_1 \cap \{0 \le y^2_1 \le 1\} .
$$

The solution $v$ of minimizing $z^2_1(\cdot)$ over $S^2_1$ is

$$
y^2_1 = 1, \quad x^2_1 = 2 - y^1 .
$$

Because the solution belongs to $C^2_1$, we can take $w = v$. (Beware that $w$ without superscript and subscript corresponds to the optimal solution on a cell defined in Step 2, while $w$ with superscript and subscript corresponds to overtime.) Thus, this point satisfies the optimality criterion in Step 3. It yields

$$
Q^2_1(y^1) = \frac{5}{2} + 2 - y^1 - 1 = \frac{7}{2} - y^1
$$

and

$$
C^2_1(y^1) = \{y^1 \mid 0 \le y^1 \le 2\} .
$$

ii) $t = 2$, $k = 2$. The objective $z^2_2$ is similarly computed as

$$
z^2_2 = \frac{5}{2} + x^2_2 + 2w^2_2 + (w^2_2)^2 - y^2_2 + \frac{(1 - y^2_2)^2}{2} .
$$

The constraint set

$$
S^2_2 = \{(x^2_2, w^2_2, y^2_2) \mid y^1 + x^2_2 + w^2_2 - y^2_2 = 3,\ 0 \le x^2_2 \le 2,\ x^2_2, w^2_2, y^2_2 \ge 0\}
$$

only differs in the right-hand side of the inventory constraint with

$$
C^2_2 = S^2_2 \cap \{0 \le y^2_2 \le 1\} .
$$

The solution $v$ is now $x^2_2 = 2$, $w^2_2 = 1 - y^1$, $y^2_2 = 0$. Again $v \in C^2_2$, so that we have $w = v$, which satisfies the optimality criterion in Step 3. It yields

$$
Q^2_2(y^1) = \frac{5}{2} + 2 + 2(1 - y^1) + (1 - y^1)^2 + \frac{1}{2} = 7 - 2y^1 + (1 - y^1)^2
$$

and

$$
C^2_2(y^1) = \{y^1 \mid 0 \le y^1 \le 1\} .
$$

Next is the case for t = 1.

The current objective function is computed as

$$
z^1 = \frac{21}{4} - y^1 + \frac{(1 - y^1)^2}{2} + x^1 + 2w^1 + (w^1)^2 .
$$

The constraint sets are

$$
\begin{aligned}
S^1_1 &= \{(x^1, w^1, y^1) \mid x^1 + w^1 - y^1 = 1,\ x^1 \le 2,\ x^1, w^1, y^1 \ge 0\}, \\
C^1_1 &= S^1_1 \cap \{0 \le y^1 \le 1\} .
\end{aligned}
$$

The solution $v$ of minimizing $z^1$ over $S^1_1$ is

$$
x^1 = 2, \quad y^1 = 1, \quad w^1 = 0,
$$

with objective value $z^1 = \frac{25}{4}$. Because this solution belongs to $C^1$, it is the optimal solution of the problem. Thus, no cut was needed to optimize the problem.
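The first-period minimization can be confirmed by a brute-force search. The sketch below evaluates the current quadratic form of the first-period objective on a 0.01 grid over the larger constraint set, eliminating the inventory variable through the balance equation; the grid resolution and the upper bound of 2 on overtime are assumptions made only to keep the search finite.

```python
def z1(x1, w1):
    """Current first-period objective, with y1 eliminated via x1 + w1 - y1 = 1."""
    y1 = x1 + w1 - 1.0
    return 21 / 4 - y1 + (1 - y1) ** 2 / 2 + x1 + 2 * w1 + w1 ** 2


# Enumerate (value, x1, w1) over 0 <= x1 <= 2, 0 <= w1 <= 2 with y1 >= 0.
candidates = (
    (z1(i / 100, j / 100), i / 100, j / 100)
    for i in range(201)
    for j in range(201)
    if i + j >= 100              # y1 = x1 + w1 - 1 >= 0
)
best_value, best_x1, best_w1 = min(candidates, key=lambda t: t[0])
best_y1 = best_x1 + best_w1 - 1.0
in_cell = 0.0 <= best_y1 <= 1.0   # membership in the cell 0 <= y1 <= 1
```

The search returns the point with regular production 2, no overtime, and inventory 1, with value 25/4, and the point lies in the current cell, so no cut is generated at this step.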

Exercises

1. Consider Example 1 with quadratic terms as in this section and take $1 \le y^1 \le 2$, $1 \le y^2_1 \le 2$, $0 \le y^2_2 \le 1$ as a starting point. Show that the following steps are generated. Obtain $0.5\,Q^3_1(y^2_1) + 0.5\,Q^3_2(y^2_1) = \frac{5}{4} - \frac{1}{4}\, y^2_1$. In $t = 2$, $k = 1$, solution $v$ is $x^2_1 = 0$, $y^2_1 = y^1 - 1$ while $w$ is $y^2_1 = 1$, $x^2_1 = 2 - y^1$, both with $w^2_1 = 0$. A cut $x^2_1 + 2w^2_1 + \frac{1}{4}\, y^2_1 \le \frac{9}{4} - y^1$ is added. The new starting point is $v$, which corresponds to $0 \le y^2_1 \le 1$. Then the case $t = 2$, $k = 1$ is as in the text, yielding $Q^2_1(y^1) = \frac{7}{2} - y^1$ and $C^2_1(y^1) = \{0 \le y^1 \le 2\}$. In $t = 2$, $k = 2$ (see the calculations in the text), we obtain $Q^2_2(y^1) = 6 - y^1$ and $C^2(y^1) = \{1 \le y^1 \le 3\}$. Thus, in $t = 1$, $z^1 = x^1 + 2w^1 + (w^1)^2 + \frac{19}{4} - y^1/2$ and $C = \{1 \le y^1 \le 2\}$. Again, the solution $v$: $x^1 = 1$, $y^1 = 0$, $w^1 = 0$ does not coincide with $w$: $x^1 = 2$, $y^1 = 1$, $w^1 = 0$. A cut $x^1 - \frac{y^1}{2} + w^1 \le 3/2$ is generated. The new starting point now coincides with the one in the text and the solution is obtained in one more iteration.


6.3 Block Separability and Special Structure

The definition of block separability was given in Section 3.4. It permits separate

calculation of the recourse functions for the aggregate level decisions and the detailed level decisions. This is an advantage in terms of the number of variables and

constraints, but often it makes the computation of the recourse functions and of

the cells of the decomposition much easier in the case of a quadratic multistage

program. This has been exploited in Louveaux [1986] and Louveaux and Smeers

[2011].

We will illustrate a further benefit. It also consists of separating the random vectors. Consider the production of a single product. Now, assume the product cannot

be stored (as in the case of a perishable good) or that the policy of the firm is to use

a just-in-time system of production so that only a fixed safety stock is kept at the

end of each period.

Assume that units are such that one worker produces exactly one product per stage. Two elements are uncertain: labor cost and demand. Labor cost is currently 2 per period. Next period, labor cost may be 2 or 3, with equal probability. Current revenue is 5 per product in normal time and 4 in overtime. Overtime is possible for up to 50% of normal time. Demand is a continuous random variable, uniformly distributed on (0, 200) and (0, 100), respectively, for the next two periods. The original workforce is 50. Hiring and firing is possible once a period, at the cost of one unit each. Clearly, the labor decision is the aggregate level decision.

To keep notation in line with Section 3.4, we consider a three-stage model. In Stage 1, the decision about labor is made, say for Year 1. Stage 2 consists of production of Year 1 and decision about labor for Year 2. Stage 3 only consists of production of Year 2. Let $\xi^t_1$ be labor cost in stage $t$, while $\xi^t_2$ is the demand in stage $t$. Let $w^t$ be the workforce in stage $t$. Then,

$$
Q^t_w(w^{t-1}, \xi^t_1) = \min\ |w^t - w^{t-1}| + \xi^t_1 w^t + \mathcal{Q}^{t+1}(w^t),
\tag{3.1}
$$

$$
\mathcal{Q}^{t+1}(w^t) = E_{\xi^{t+1}}\left[ Q^{t+1}_w(w^t, \xi^{t+1}_1) + Q^{t+1}_y(w^t, \xi^{t+1}_2) \right],
\tag{3.2}
$$

and $Q^{t+1}_y(w^t, \xi^{t+1}_2)$ is minus the revenue of production in stage $t + 1$ given a workforce $w^t$ and a demand scenario $\xi^{t+1}_2$. Its expectation is obtained as follows.

Let $D^t$ represent the maximal demand in stage $t$ (200 for $t = 2$, 100 for $t = 3$). Observe that the expectation of $\xi^t_2$ is $D^t/2$ because $\xi^t_2$ is uniformly distributed over $[0, D^t]$. If $w^t \ge D^t$, all demand can be satisfied with normal time. If $w^t \le D^t \le 1.5w^t$, demand up to $w^t$ is satisfied with normal time, the rest in overtime. Finally, if $D^t \ge 1.5w^t$, normal time is possible up to a demand of $w^t$, overtime from $w^t$ to $1.5w^t$, and extra demand is lost. Taking expectations over these cases, we obtain

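$$
\mathcal{Q}^{t+1}_y(w^t) = E_{\xi^{t+1}}\left[ Q^{t+1}_y(w^t, \xi^{t+1}_2) \right] =
\begin{cases}
-2.5\,D^t & \text{if } w^t \ge D^t, \\[4pt]
\dfrac{(w^t)^2}{2D^t} - w^t - 2D^t & \text{if } w^t \le D^t \le 1.5\,w^t, \\[8pt]
\dfrac{5(w^t)^2}{D^t} - 7w^t & \text{if } 1.5\,w^t \le D^t .
\end{cases}
$$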
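The three branches of the expected revenue can be checked against a direct numerical expectation. The sketch below uses a midpoint rule over the uniform demand with the revenues stated above (5 per unit in normal time, 4 in overtime, overtime limited to half of the workforce); the function names and the number of integration points are illustrative choices.

```python
def neg_expected_revenue(w, D, n=20000):
    """Midpoint-rule estimate of -E[5*normal + 4*overtime] for demand ~ U(0, D)."""
    total = 0.0
    for i in range(n):
        xi = (i + 0.5) * D / n                      # midpoint of the i-th demand cell
        normal = min(xi, w)                         # served in normal time
        overtime = min(max(xi - w, 0.0), 0.5 * w)   # served in overtime, capped at 50%
        total += 5.0 * normal + 4.0 * overtime
    return -total / n


def closed_form(w, D):
    """Piecewise expression for the expected (negative) revenue."""
    if w >= D:
        return -2.5 * D
    if D <= 1.5 * w:
        return w * w / (2 * D) - w - 2 * D
    return 5 * w * w / D - 7 * w


# One (workforce, maximal demand) pair per branch, plus the Stage 2 demand range.
cases = [(120.0, 100.0), (80.0, 100.0), (50.0, 100.0), (50.0, 200.0)]
gaps = [abs(neg_expected_revenue(w, D) - closed_form(w, D)) for w, D in cases]
```

Because the integrand is piecewise linear in demand, the midpoint rule is exact away from the two kinks, so the gaps are expected to be very small.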


This problem can now be solved with the MQSP algorithm. Assume $w^0 = 50$, $w^1 \ge 50$.

Let Stage (2, 1) represent the first labor scenario in Stage 2, i.e., $\xi^2_1 = 2$. The problem consists of finding

$$
\begin{aligned}
\min\ & |w^2 - w^1| + 2w^2 + \mathcal{Q}^3(w^2) \\
\text{s. t. } & w^2 \ge 0 .
\end{aligned}
$$

We compute $\mathcal{Q}^3(w^2) = \mathcal{Q}^3_y(w^2) = \frac{5(w^2)^2}{100} - 7w^2$, for $w^2 \le \frac{200}{3}$, because $D^3 = 100$.

We also replace $|w^2 - w^1|$ by an explicit expression in terms of hiring ($h^2$) and firing ($f^2$). The problem in Stage (2, 1) now reads:

$$
\begin{aligned}
Q^2_w(w^1, 1) = \min\ & h^2 + f^2 - 5w^2 + \frac{5(w^2)^2}{100} \\
\text{s. t. } & w^2 - h^2 + f^2 = w^1, \\
& w^2 \ge 0, \quad h^2 \ge 0, \quad f^2 \ge 0 .
\end{aligned}
$$

Under this form, the problem is clearly quadratic convex (remember $w^2$ is $w$ in Stage 2, not the square of $w$). Classical Karush-Kuhn-Tucker conditions give the optimal solution $w^2 = w^1$, as long as $40 \le w^1 \le 60$. Then

$$
Q^2_w(w^1, 1) = -5w^1 + \frac{5(w^1)^2}{100} .
$$
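The range of workforce values for which no hiring or firing takes place can be recovered from a one-line optimality condition. The sketch below states it through the subdifferential of the absolute-value term, which is an equivalent way of writing the Karush-Kuhn-Tucker conditions of the hiring and firing form; the symbol $\phi$ is introduced here only for this derivation.

```latex
\begin{aligned}
\phi(w^2) &= |w^2 - w^1| - 5w^2 + \frac{5(w^2)^2}{100}, \\
0 \in \partial\phi(w^1) &= [-1, 1] + \Bigl(-5 + \frac{w^1}{10}\Bigr)
\iff -1 \le 5 - \frac{w^1}{10} \le 1
\iff 40 \le w^1 \le 60 .
\end{aligned}
```

Inside this range the marginal gain from changing the workforce is at most the unit hiring or firing cost, so keeping the workforce unchanged is optimal.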

Similarly, in Scenario (2, 2) where $\xi^2_1 = 3$, the solution of

$$
\begin{aligned}
\min\ & |w^2 - w^1| + 3w^2 + \mathcal{Q}^3(w^2) \\
\text{s. t. } & w^2 \ge 0
\end{aligned}
$$

is $w^2 = 50$, $f^2 = w^1 - 50$, as long as $w^1 \ge 50$. Then

$$
Q^2_w(w^1, 2) = w^1 - 125,
$$

and

$$
\mathcal{Q}^2_w(w^1) = -\frac{125}{2} - 2w^1 + \frac{2.5(w^1)^2}{100},
$$

which is valid within $C^2 = \{50 \le w^1 \le 60\}$.
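Both Stage 2 labor problems, and their average, can be checked by a one-dimensional grid search. This is a minimal sketch, assuming the Stage 3 expected value $5(w^2)^2/100 - 7w^2$ on the range $w^2 \le 200/3$ and a grid step of 0.01; the function names are illustrative.

```python
def q3(w2):
    """Expected Stage 3 value 5*w2^2/100 - 7*w2 (stated for w2 <= 200/3)."""
    return 5.0 * w2 * w2 / 100.0 - 7.0 * w2


def stage2(w1, labor_cost):
    """Grid search for min |w2 - w1| + labor_cost*w2 + q3(w2) over 0 <= w2 <= 66.66."""
    best = min(
        (abs(k / 100 - w1) + labor_cost * (k / 100) + q3(k / 100), k / 100)
        for k in range(6667)
    )
    return best                     # (optimal value, optimal w2)


def expected_stage2(w1):
    """Average over the two equally likely labor costs 2 and 3."""
    return 0.5 * stage2(w1, 2.0)[0] + 0.5 * stage2(w1, 3.0)[0]


def closed_form(w1):
    """-125/2 - 2*w1 + 2.5*w1^2/100, stated for 50 <= w1 <= 60."""
    return -62.5 - 2.0 * w1 + 2.5 * w1 * w1 / 100.0


value_low, w2_low = stage2(55.0, 2.0)     # labor cost 2: workforce kept at w1
value_high, w2_high = stage2(55.0, 3.0)   # labor cost 3: workforce reduced to 50
gap = max(abs(expected_stage2(w1) - closed_form(w1)) for w1 in (50.0, 55.0, 60.0))
```

With an initial workforce of 55, the search keeps the workforce at 55 under labor cost 2 and reduces it to 50 under labor cost 3, matching the two cases in the text.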

The Stage 1 objective is:

$$
\min\ h^1 + f^1 + 2w^1 + \mathcal{Q}^2_y(w^1) + \mathcal{Q}^2_w(w^1),
$$

so that the Stage 1 problem reads:

$$
\min\ h^1 + f^1 - 7w^1 + \frac{(w^1)^2}{20} - \frac{125}{2}
$$