Meszaros G. xUnit Test Patterns: Refactoring Test Code


Recorded Test

How do we prepare automated tests for our software?

We automate tests by recording interactions with the application and

playing them back using a test tool.

Automated tests serve several purposes. They can be used for regression testing

software after it has been changed. They can help document the behavior of the

software. They can specify the behavior of the software before it has been writ-

ten. How we prepare the automated test scripts affects which purposes they can

be used for, how robust they are to changes in the SUT, and how much skill and

effort it takes to prepare them.

Recorded Tests allow us to rapidly create regression tests after the SUT has

been built and before it is changed.

How It Works

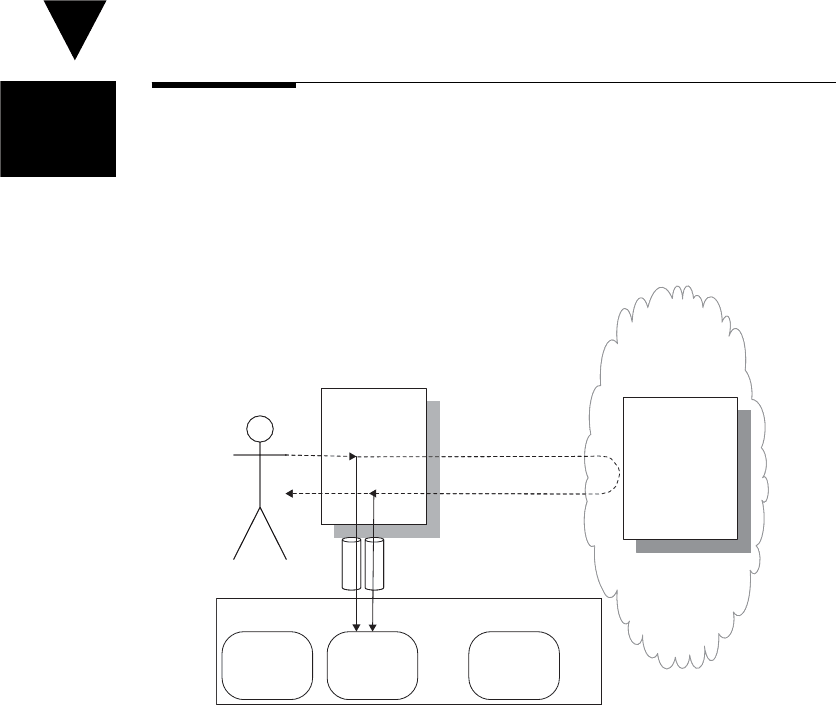

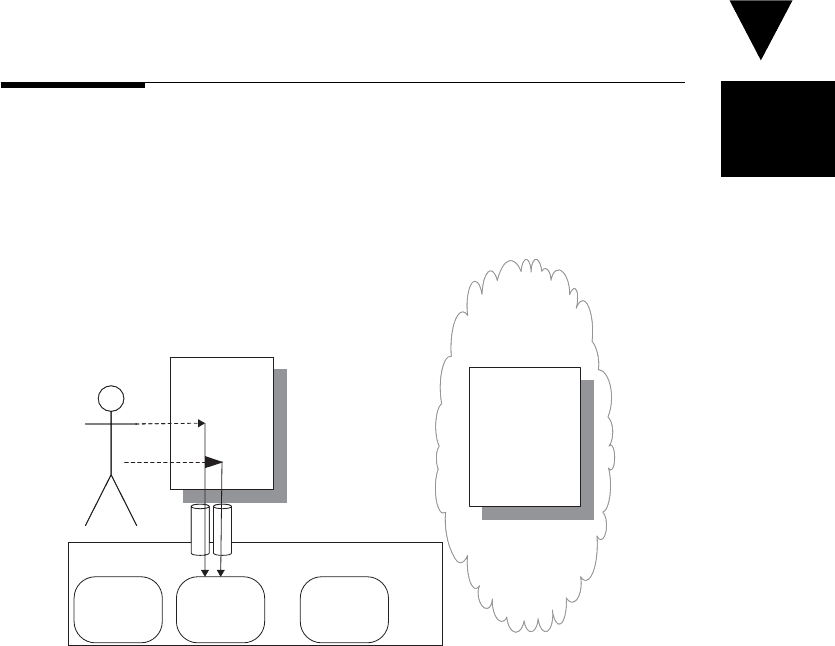

We use a tool that monitors our interactions with the SUT as we work with

it. This tool keeps track of most of what the SUT communicates to us and our

responses to the SUT. When the recording session is done, we can save the ses-

sion to a fi le for later playback. When we are ready to run the test, we start up

Fixture

Test

Script 1

Test

Script 2

Test

Script n

Test Script Repository

Test

Recorder

SUT

OutputsInputs

Inputs

Outputs

Inputs

Outputs

Fixture

Test

Script 1

Test

Script 2

Test

Script n

Test Script Repository

Test

Recorder

SUT

OutputsInputs

Inputs

Outputs

Inputs

Outputs

Also known as:

Record and

Playback Test,

Robot User

Test, Capture/

Playback Test

Recorded

Test

Chapter 18 Test Strategy Patterns

278

279

the “playback” part of the tool and point it at the recorded session. It starts up

the SUT and feeds it our recorded inputs in response to the SUT’s outputs. It may

also compare the SUT’s outputs with the SUT’s responses during the recording

session. A mismatch may be cause for failing the test.

Some Recorded Test tools allow us to adjust the sensitivity of the comparisons that the tool makes between what the SUT said during the recording session and what it said during the playback. Most Recorded Test tools interact with the SUT through the user interface.
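To make the record/playback cycle concrete, here is a minimal Java sketch. It assumes the SUT can be modelled as a function that turns one input into one output; the `Sut` interface and the `TestRecorder` and `TestPlayer` classes are hypothetical and are not any real tool's API. Real tools drive the user interface and interleave many inputs and outputs; the sketch keeps only the core idea: record every exchange, replay the inputs, and compare the outputs using a pluggable comparison "sensitivity".

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BiPredicate;
import java.util.function.UnaryOperator;

// One recorded exchange: what we sent the SUT and what it answered.
final class Interaction {
    final String input;
    final String output;

    Interaction(String input, String output) {
        this.input = input;
        this.output = output;
    }
}

// Hypothetical stand-in for the SUT: it maps one input to one output.
interface Sut extends UnaryOperator<String> {}

// Wraps the SUT and records every exchange while a person drives it.
final class TestRecorder implements Sut {
    private final Sut sut;
    private final List<Interaction> session = new ArrayList<>();

    TestRecorder(Sut sut) { this.sut = sut; }

    @Override
    public String apply(String input) {
        String output = sut.apply(input);
        session.add(new Interaction(input, output));
        return output;
    }

    // The saved session, ready for later playback.
    List<Interaction> session() { return List.copyOf(session); }
}

// Feeds the recorded inputs to the SUT again and compares its outputs.
final class TestPlayer {
    // Two comparison sensitivities: exact match, or ignore case and surrounding blanks.
    static final BiPredicate<String, String> EXACT = String::equals;
    static final BiPredicate<String, String> LENIENT =
        (recorded, actual) -> recorded.strip().equalsIgnoreCase(actual.strip());

    // Returns one message per mismatch; an empty list means the test passed.
    static List<String> play(List<Interaction> session, Sut sut,
                             BiPredicate<String, String> matches) {
        List<String> mismatches = new ArrayList<>();
        for (Interaction step : session) {
            String actual = sut.apply(step.input);
            if (!matches.test(step.output, actual)) {
                mismatches.add(step.input + ": expected <" + step.output
                    + "> but was <" + actual + ">");
            }
        }
        return mismatches;
    }
}
```

Swapping `EXACT` for `LENIENT` is the sketch's version of lowering a tool's comparison sensitivity: cosmetic differences stop failing the test, at the risk of also hiding real ones.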

When to Use It

Once an application is up and running and we don’t expect a lot of changes to it, we can use Recorded Tests to do regression testing. We could also use Recorded Tests when an existing application needs to be refactored (in anticipation of modifying the functionality) and we do not have Scripted Tests (page 285) available to use as regression tests. It is typically much quicker to produce a set of Recorded Tests than to prepare Scripted Tests for the same functionality. In theory, the test recording can be done by anyone who knows how to operate the application; very little technical expertise should be required. In practice, many of the commercial tools have a steep learning curve. Also, some technical expertise may be required to add “checkpoints,” to adjust the sensitivity of the playback tool, or to adjust the test script if the recording tool became confused and recorded the wrong information.

Most Recorded Test tools interact with the SUT through the user interface. This approach makes them particularly prone to fragility if the user interface of the SUT is evolving (Interface Sensitivity; see Fragile Test on page 239). Even small changes such as changing the internal name of a button or field may be enough to cause the playback tool to stumble. The tools also tend to record information at a very low and detailed level, making the tests hard to understand (Obscure Test; page 186); as a result, they are also difficult to repair by hand if they are broken by changes to the SUT. For these reasons, we should plan on rerecording the tests fairly regularly if the SUT will continue to evolve.

If we want to use the Tests as Documentation (see page 23) or if we want to use the tests to drive new development, we should consider using Scripted Tests. These goals are difficult to address with commercial Recorded Test tools because most do not let us define a Higher-Level Language (see page 41) for the test recording. This issue can be addressed by building the Recorded Test capability into the application itself or by using Refactored Recorded Tests.


Variation: Refactored Recorded Test

A hybrid of the Recorded Test and Scripted Test strategies is to use the “record, refactor, playback”¹ sequence to extract a set of “action components” or “verbs” from the newly Recorded Tests and then rewire the test cases to call these “action components” instead of having detailed in-line code. Most commercial capture/replay tools provide the means to turn Literal Values (page 714) into parameters that can be passed into the “action component” by the main test case. When a screen changes, we simply rerecord the “action component”; all the test cases continue to function by automatically using the new “action component” definition. This strategy is effectively the same as using Test Utility Methods (page 599) to interact with the SUT in unit tests. It opens the door to using the Refactored Recorded Test components as a Higher-Level Language in Scripted Tests. Tools such as Mercury Interactive’s BPT² use this paradigm for scripting tests in a top-down manner; once the high-level scripts are developed and the components required for the test steps are specified, more technical people can either record or hand-code the individual components.
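The following Java sketch illustrates the "action component" idea under simplifying assumptions: `FakeUi`, `AddTermAction`, `Components`, `TaxonomyTestCases`, and the field names are all hypothetical, and a lambda stands in for a recorded step sequence whose Literal Values have become parameters. The test case depends only on the component, so replacing the recorded steps leaves the test case untouched.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for the UI driver of a capture/replay tool;
// it only logs the low-level steps so we can see what was "played".
final class FakeUi {
    final List<String> log = new ArrayList<>();
    void click(String control) { log.add("click " + control); }
    void type(String field, String value) { log.add("type " + field + "=" + value); }
}

// An "action component" (verb): recorded steps whose literals are now parameters.
@FunctionalInterface
interface AddTermAction {
    void addTerm(FakeUi ui, String code, String name);
}

final class Components {
    // The component as first recorded.
    static final AddTermAction ADD_TERM = (ui, code, name) -> {
        ui.click("Add");
        ui.type("childCodeSuffix", code);
        ui.type("description", name);
        ui.click("Save");
    };

    // The same component rerecorded after a (hypothetical) screen change
    // that renamed the description field.
    static final AddTermAction ADD_TERM_RERECORDED = (ui, code, name) -> {
        ui.click("Add");
        ui.type("childCodeSuffix", code);
        ui.type("termName", name);
        ui.click("Save");
    };
}

// Test cases call components instead of containing in-line UI steps.
final class TaxonomyTestCases {
    static void addTwoTopLevelTerms(FakeUi ui, AddTermAction addTerm) {
        addTerm.addTerm(ui, "A", "Top Level");
        addTerm.addTerm(ui, "B", "Second Top Level");
    }
}
```

The text above describes tools picking up a rerecorded component automatically; here the swap is made explicit by passing the component into the test case, which keeps the dependency visible.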

Implementation Notes

We have two basic choices when using a Recorded Test strategy: We can either acquire third-party tools that record the communication that occurs while we interact with the application, or we can build a “record and playback” mechanism right into our application.

Variation: External Test Recording

Many test recording tools are available commercially, each of which has its own strengths and weaknesses. The best choice will depend on the nature of the user interface of the application, our budget, the complexity of the functionality to be verified, and possibly other factors.

If we want to use the tests to drive development, we need to pick a tool that uses a test-recording file format that is editable by hand and easily understood. We’ll need to handcraft the contents; this situation is really an example of a Scripted Test even if we are using a “record and playback” tool to execute the tests.

¹ The name “record, refactor, playback” was coined by Adam Geras.

² BPT is short for “Business Process Testing.”


Variation: Built-In Test Recording

It is also possible to build a Recorded Test capability into the SUT. In such a case, the test scripting “language” can be defined at a fairly high level, high enough to make it possible to hand-script the tests even before the system is built. In fact, it has been reported that the VBA macro capability of Microsoft’s Excel spreadsheet started out as a mechanism for automated testing of Excel.

Example: Built-In Test Recording

On the surface, it doesn’t seem to make sense to provide a code sample for a Recorded Test because this pattern deals with how the test is produced, not how it is represented. When the test is played back, it is in effect a Data-Driven Test (page 288). Likewise, we don’t often refactor to a Recorded Test because it is often the first test automation strategy attempted on a project. Nevertheless, we might introduce a Recorded Test after attempting Scripted Tests if we discover that we have too many Missing Tests (page 268) because the cost of manual automation is too high. In that case, we would not be trying to turn existing Scripted Tests into Recorded Tests; we would just record new tests.

Here’s an example of a test recorded by the application itself. This test was used to regression-test a safety-critical application after it was ported from C on OS/2 to C++ on Windows. Note how the recorded information forms a domain-specific Higher-Level Language that is quite readable by a user.

```xml
<interaction-log>
  <commands>
    <!-- more commands omitted -->
    <command seqno="2" id="Supply Create">
      <field name="engineno" type="input">
        <used-value>5566</used-value>
        <expected></expected>
        <actual status="ok"/>
      </field>
      <field name="direction" type="selection">
        <used-value>SOUTH</used-value>
        <expected>
          <value>SOUTH</value>
          <value>NORTH</value>
        </expected>
        <actual>
          <value status="ok">SOUTH</value>
          <value status="ok">NORTH</value>
        </actual>
      </field>
    </command>
    <!-- more commands omitted -->
  </commands>
</interaction-log>
```


This sample shows the output produced by playing back the test. The `actual` elements were inserted by the built-in playback mechanism. The `status` attributes indicate whether these elements match the expected values. We applied a style sheet to these files to format them much like a Fit test with color-coded results. The business users on the project then handled the recording, replaying, and result analysis.

This recording was made by inserting hooks in the presentation layer of the software to record the lists of choices offered to the user and the user’s responses. An example of one of these hooks follows:

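```c
if (playback_is_on()) {
    /* Playback: take the answer from the recorded used-value element. */
    choice = get_choice_for_playback(dialog_id, choices_list);
} else {
    /* Normal use: show the dialog and let the user pick. */
    choice = display_dialog(choices_list, row, col, title, key);
}
if (recording_is_on()) {
    /* Also true during playback, so the test results get recorded. */
    record_choice(dialog_id, choices_list, choice, key);
}
```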

The function `get_choice_for_playback` retrieves the contents of the `used-value` element instead of asking the user to pick from the list of choices. The function `record_choice` generates the `actual` element and makes the “assertions” against the `expected` elements, recording the result in the `status` attribute of each element. Note that `recording_is_on()` returns true whenever we are in playback mode so that the test results can be recorded.
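For comparison with xUnit-style code, here is a hedged Java sketch of the same hook pattern. `DialogHook`, `RecordedChoice`, and the `askUser` callback are hypothetical names, not part of the application described above. The sketch also simplifies the checking: it records a single status per dialog (did the SUT offer the same choices as when recorded?), whereas the interaction log holds a status per value.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.Function;

// One recorded dialog: the choices the SUT offered and the one the user picked.
final class RecordedChoice {
    final String dialogId;
    final List<String> offered;
    final String used;

    RecordedChoice(String dialogId, List<String> offered, String used) {
        this.dialogId = dialogId;
        this.offered = List.copyOf(offered);
        this.used = used;
    }
}

// Hypothetical presentation-layer hook modelled on the C fragment.
final class DialogHook {
    private final Iterator<RecordedChoice> script;  // non-null only in playback mode
    final List<RecordedChoice> recorded = new ArrayList<>();
    final List<String> statuses = new ArrayList<>(); // "ok"/"failed", playback only

    private DialogHook(Iterator<RecordedChoice> script) { this.script = script; }

    static DialogHook recording() { return new DialogHook(null); }

    static DialogHook playback(List<RecordedChoice> session) {
        return new DialogHook(session.iterator());
    }

    String choose(String dialogId, List<String> choices,
                  Function<List<String>, String> askUser) {
        RecordedChoice expected = null;
        String choice;
        if (script != null) {
            expected = script.next();        // playback: reuse the recorded answer
            choice = expected.used;
        } else {
            choice = askUser.apply(choices); // normal use: ask the user
        }
        // Recording stays on during playback so the results are captured too.
        recorded.add(new RecordedChoice(dialogId, choices, choice));
        if (expected != null) {
            boolean ok = expected.dialogId.equals(dialogId)
                && expected.offered.equals(choices);
            statuses.add(ok ? "ok" : "failed");
        }
        return choice;
    }
}
```

In playback mode the user callback is never invoked, which is what lets a recorded session run unattended.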

Example: Commercial Record and Playback Test Tool

Almost every commercial testing tool uses a “record and playback” metaphor. Each tool also defines its own Recorded Test file format, most of which are very verbose. The following is a “short” excerpt from a test recorded using Mercury Interactive’s QuickTest Professional [QTP] tool. It is shown in “Expert View,” which exposes what is really recorded: a VBScript program! The example includes comments (preceded by “@@”) that were inserted manually to clarify what this test is doing; these comments would be lost if the test had to be rerecorded because a change to the application stopped it from running.

```vbscript
@@
@@ GoToPageMaintainTaxonomy()
@@
Browser("Inf").Page("Inf").WebButton("Login").Click
Browser("Inf").Page("Inf_2").Check CheckPoint("Inf_2")
Browser("Inf").Page("Inf_2").Link("TAXONOMY LINKING").Click
Browser("Inf").Page("Inf_3").Check CheckPoint("Inf_3")
Browser("Inf").Page("Inf_3").Link("MAINTAIN TAXONOMY").Click
Browser("Inf").Page("Inf_4").Check CheckPoint("Inf_4")
@@
@@ AddTerm("A","Top Level", "Top Level Definition")
@@
Browser("Inf").Page("Inf_4").Link("Add").Click
wait 4
Browser("Inf_2").Page("Inf").Check CheckPoint("Inf_5")
Browser("Inf_2").Page("Inf").WebEdit("childCodeSuffix").Set "A"
Browser("Inf_2").Page("Inf").WebEdit("taxonomyDto.descript").Set "Top Level"
Browser("Inf_2").Page("Inf").WebEdit("taxonomyDto.definiti").Set "Top Level Definition"
Browser("Inf_2").Page("Inf").WebButton("Save").Click
wait 4
Browser("Inf").Page("Inf_5").Check CheckPoint("Inf_5_2")
@@
@@ SelectTerm("[A]-Top Level")
@@
Browser("Inf").Page("Inf_5").WebList("selectedTaxonomyCode").Select "[A]-Top Level"
@@
@@ AddTerm("B","Second Top Level", "Second Top Level Definition")
@@
Browser("Inf").Page("Inf_5").Link("Add").Click
wait 4
Browser("Inf_2").Page("Inf_2").Check CheckPoint("Inf_2_2")
infofile_;_Inform_Alberta_21.inf_;_hightlight id_;_Browser("Inf_2").Page("Inf_2")_;_
@@
@@ and it goes on, and on, and on ....
```

Note how the test describes all inputs and outputs in terms of the user interface of the application. It suffers from two main issues: Obscure Tests (caused by the detailed nature of the recorded information) and Interface Sensitivity (resulting in Fragile Tests).

Refactoring Notes

We can make this test more useful as documentation, reduce or avoid High Test Maintenance Cost (page 265), and support composition of other tests from a Higher-Level Language by using a series of Extract Method [Fowler] refactorings.

Example: Refactored Commercial Recorded Test

The following example shows the same test refactored to Communicate Intent (see page 41):

```vbscript
GoToPage_MaintainTaxonomy()
AddTerm("A","Top Level", "Top Level Definition")
SelectTerm("[A]-Top Level")
AddTerm("B","Second Top Level", "Second Top Level Definition")
```


Note how much more intent-revealing this test has become. The Test Utility Methods we extracted look like this:

```vbscript
Method GoToPage_MaintainTaxonomy()
    Browser("Inf").Page("Inf").WebButton("Login").Click
    Browser("Inf").Page("Inf_2").Check CheckPoint("Inf_2")
    Browser("Inf").Page("Inf_2").Link("TAXONOMY LINKING").Click
    Browser("Inf").Page("Inf_3").Check CheckPoint("Inf_3")
    Browser("Inf").Page("Inf_3").Link("MAINTAIN TAXONOMY").Click
    Browser("Inf").Page("Inf_4").Check CheckPoint("Inf_4")
End

Method AddTerm( code, name, description)
    Browser("Inf").Page("Inf_4").Link("Add").Click
    wait 4
    Browser("Inf_2").Page("Inf").Check CheckPoint("Inf_5")
    Browser("Inf_2").Page("Inf").WebEdit("childCodeSuffix").Set code
    Browser("Inf_2").Page("Inf").WebEdit("taxonomyDto.descript").Set name
    Browser("Inf_2").Page("Inf").WebEdit("taxonomyDto.definiti").Set description
    Browser("Inf_2").Page("Inf").WebButton("Save").Click
    wait 4
    Browser("Inf").Page("Inf_5").Check CheckPoint("Inf_5_2")
End

Method SelectTerm( path )
    Browser("Inf").Page("Inf_5").WebList("selectedTaxonomyCode").Select path
    Browser("Inf").Page("Inf_5").Link("Add").Click
    wait 4
End
```

This example is one I hacked together to illustrate the similarities to what we do in xUnit. Don’t try running this example at home: it is probably not syntactically correct.

Further Reading

The paper “Agile Regression Testing Using Record and Playback” [ARTRP] describes our experiences building a Recorded Test mechanism into an application to facilitate porting it to another platform.


Scripted Test

How do we prepare automated tests for our software?

We automate the tests by writing test programs by hand.

Automated tests serve several purposes. They can be used for regression testing

software after it has been changed. They can help document the behavior of the

software. They can specify the behavior of the software before it has been written.

How we prepare the automated test scripts affects which purpose they can be

used for, how robust they are to changes in the SUT, and how much skill and

effort it takes to prepare them.

Scripted Tests allow us to prepare our tests before the software is developed

so they can help drive the design.

How It Works

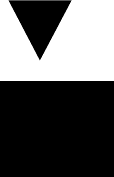

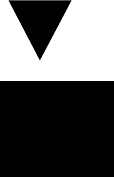

We automate our tests by writing test programs that interact with the SUT for the

purpose of exercising its functionality. Unlike Recorded Tests (page 278), these

tests can be either customer tests or unit tests. These test programs are often

called “test scripts” to distinguish them from the production code they test.

Fixture

Test Script Repository

Test

Development

SUT

Test

Script 1

Test

Script 2

Test

Script n

Expected

Outputs

Inputs

Inputs

Outputs

Fixture

Test Script Repository

Test

Development

SUT

Test

Script 1

Test

Script 2

Test

Script n

Expected

Outputs

Inputs

Inputs

Outputs

Also known as:

Hand-Written

Test, Hand-

Scripted Test,

Programmatic

Test, Automated

Unit Test

Scripted Test

Scripted

Test

285

When to Use It

We almost always use Scripted Tests when preparing unit tests for our software. This is because it is easier to access the individual units directly from software written in the same programming language. It also allows us to exercise all the code paths, including the “pathological” cases.

The picture for customer tests is slightly more complicated: we should use a Scripted Test whenever we use automated storytests to drive the development of software. Recorded Tests don’t serve this need very well because it is difficult to record tests without having an application from which to record them. Preparing Scripted Tests takes programming experience as well as experience in testing techniques. It is unlikely that most business users on a project would be interested in learning how to prepare Scripted Tests. An alternative to scripting tests in a programming language is to define a Higher-Level Language (see page 41) for testing the SUT and then to implement the language as a Data-Driven Test (page 288) Interpreter [GOF]. Fit and its wiki-based cousin, FitNesse, are open-source frameworks for defining Data-Driven Tests. Canoo WebTest is another tool that supports this style of testing.
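A Data-Driven Test Interpreter can be quite small. The following Java sketch assumes a hypothetical SUT (`Cart`, with prices in cents) and a made-up two-verb test language; each row of a test table is a verb followed by its arguments, and the interpreter reports one result per row. It is not Fit's API, only an illustration of the Interpreter idea applied to tests.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical SUT: a cart priced from a fixed catalogue (prices in cents).
final class Cart {
    private static final Map<String, Integer> PRICES = Map.of("widget", 250, "gadget", 1000);
    private final Map<String, Integer> quantities = new HashMap<>();

    void add(String product, int quantity) {
        quantities.merge(product, quantity, Integer::sum);
    }

    int totalCents() {
        int total = 0;
        for (Map.Entry<String, Integer> e : quantities.entrySet()) {
            total += PRICES.get(e.getKey()) * e.getValue();
        }
        return total;
    }
}

// Interprets rows of the hypothetical language: "add, product, qty" and "check total, cents".
final class CartTestInterpreter {
    static List<String> run(List<List<String>> rows) {
        Cart cart = new Cart(); // fresh fixture for every test table
        List<String> results = new ArrayList<>();
        for (List<String> row : rows) {
            String verb = row.get(0);
            switch (verb) {
                case "add" -> {
                    cart.add(row.get(1), Integer.parseInt(row.get(2)));
                    results.add("ok");
                }
                case "check total" -> {
                    int expected = Integer.parseInt(row.get(1));
                    int actual = cart.totalCents();
                    results.add(expected == actual
                        ? "ok"
                        : "expected " + expected + " but was " + actual);
                }
                default -> results.add("unknown verb: " + verb);
            }
        }
        return results;
    }
}
```

Because the rows are plain data, a business user could in principle author them in a table rather than in Java, which is the role that table-based tools such as Fit and FitNesse fill.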

In the case of an existing legacy application,³ we can consider using Recorded Tests as a way of quickly creating a suite of regression tests that will protect us while we refactor the code to introduce testability. We can then prepare Scripted Tests for our now testable application.

Implementation Notes

Traditionally, Scripted Tests were written as “test programs,” often using a special test scripting language. Nowadays, we prefer to write Scripted Tests using a Test Automation Framework (page 298) such as xUnit in the same language as the SUT. In this case, each test program is typically captured in the form of a Test Method (page 348) on a Testcase Class (page 373). To minimize Manual Intervention (page 250), each test method should implement a Self-Checking Test (see page 26) that is also a Repeatable Test (see page 26).

³ Among test drivers, a legacy application is any system that lacks a safety net of automated tests.


Example: Scripted Test

The following is an example of a Scripted Test written in JUnit:

```java
// Test Methods from a JUnit 3-style Testcase Class. UNIT_PRICE, PRODUCT, QUAN_ONE,
// NO_CUST_DISCOUNT, CUST_DISCOUNT_PC and the create*/assert* helpers are defined
// elsewhere in the class (not shown).
public void testAddLineItem_quantityOne() {
    final BigDecimal BASE_PRICE = UNIT_PRICE;
    final BigDecimal EXTENDED_PRICE = BASE_PRICE;
    // Set Up Fixture
    Customer customer = createACustomer(NO_CUST_DISCOUNT);
    Invoice invoice = createInvoice(customer);
    // Exercise SUT
    invoice.addItemQuantity(PRODUCT, QUAN_ONE);
    // Verify Outcome
    LineItem expected =
        createLineItem(QUAN_ONE, NO_CUST_DISCOUNT,
                       EXTENDED_PRICE, PRODUCT, invoice);
    assertContainsExactlyOneLineItem(invoice, expected);
}

public void testChangeQuantity_severalQuantity() {
    final int ORIGINAL_QUANTITY = 3;
    final int NEW_QUANTITY = 5;
    final BigDecimal BASE_PRICE =
        UNIT_PRICE.multiply(new BigDecimal(NEW_QUANTITY));
    final BigDecimal EXTENDED_PRICE =
        BASE_PRICE.subtract(BASE_PRICE.multiply(
            CUST_DISCOUNT_PC.movePointLeft(2)));
    // Set Up Fixture
    Customer customer = createACustomer(CUST_DISCOUNT_PC);
    Invoice invoice = createInvoice(customer);
    Product product = createAProduct(UNIT_PRICE);
    invoice.addItemQuantity(product, ORIGINAL_QUANTITY);
    // Exercise SUT
    invoice.changeQuantityForProduct(product, NEW_QUANTITY);
    // Verify Outcome: the expected line item refers to the product added above
    LineItem expected = createLineItem(NEW_QUANTITY,
        CUST_DISCOUNT_PC, EXTENDED_PRICE, product, invoice);
    assertContainsExactlyOneLineItem(invoice, expected);
}
```

About the Name

Automated test programs are traditionally called “test scripts,” probably due to the heritage of such test programs: originally they were implemented in interpreted test scripting languages such as Tcl. The downside of calling them Scripted Tests is that this nomenclature opens the door to confusion with the kind of script a person would follow during manual testing, as opposed to unscripted testing such as exploratory testing.

Further Reading

Many books have been written about the process of writing Scripted Tests and using them to drive the design of the SUT. A good place to start would be [TDD-BE] or [TDD-APG].
