Li S.Z., Jain A.K. (eds.) Encyclopedia of Biometrics


calibration and monitoring requires standardized approaches to data collection, data management, processing, and results generation.

Types of Biometric Performance Testing Standards

Biometric performance tests are typically categorized as technology tests, scenario tests, or operational tests. These test types share commonalities, which are addressed in framework performance testing standards, but they also differ in important ways.

▶ Technology tests are those in which biometric algorithms enroll and compare archived (i.e., previously collected) data. An essential characteristic of technology testing is that the test subject is not “in the loop”: the test subject provides data in advance, and biometric algorithms are implemented to process large quantities of test data. Technology tests often involve cross-comparison of hundreds of thousands of biometric samples over the course of days or weeks. Methods of executing and handling the outputs of such cross-comparisons are a major component of technology-based performance testing standards.
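The number of comparisons in a full cross-comparison grows roughly with the square of the number of subjects, which is why such runs can take days. The sketch below illustrates this under stated assumptions: a hypothetical record layout of `(subject_id, sample_id)` tuples and one enrollment plus one probe sample per subject. It splits every enrollment-probe pair into genuine (same subject) and impostor (different subject) comparisons; it is an illustration, not a procedure taken from any standard.

```python
from itertools import product


def cross_comparison_pairs(enrolled, probes):
    """Split a full cross-comparison into genuine and impostor pairs.

    `enrolled` and `probes` are lists of (subject_id, sample_id) tuples,
    a hypothetical record layout used only for illustration.
    """
    genuine, impostor = [], []
    for (e_subj, e_id), (p_subj, p_id) in product(enrolled, probes):
        # Same subject -> genuine comparison; different subjects -> impostor.
        target = genuine if e_subj == p_subj else impostor
        target.append((e_id, p_id))
    return genuine, impostor


def comparison_counts(n_subjects):
    """(genuine, impostor) counts for one enrollment and one probe per subject."""
    return n_subjects, n_subjects * (n_subjects - 1)


enrolled = [(s, f"enrol-{s}") for s in range(4)]
probes = [(s, f"probe-{s}") for s in range(4)]
genuine, impostor = cross_comparison_pairs(enrolled, probes)
print(len(genuine), len(impostor))   # 4 12
print(comparison_counts(1000))       # (1000, 999000)
```

With N subjects the sketch yields N genuine and N(N − 1) impostor comparisons, so 1,000 subjects already produce nearly a million impostor comparisons.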

Technology tests are suitable for evaluation of both verification- and identification-based systems, although most technology tests are verification-based. Technology testing standards accommodate evaluations based on biometric data collected in an operational system as well as evaluations based on biometric data collected for the specific purpose of testing. Technology tests based on operational data are often designed to validate or project the performance of a fielded system, whereas technology tests based on specially collected data are typically more exploratory or experimental.

▶ Scenario tests are those in which biometric systems collect and process data from test subjects in a specified application. An essential characteristic of scenario testing is that the test subject is “in the loop,” interacting with capture devices in a fashion representative of a target application. Scenario tests evaluate end-to-end systems, inclusive of capture device, quality validation software, enrollment software, and matching software. Scenario tests are based on smaller sample sizes than technology tests due to the costs of recruiting and managing interactions with test subjects (even large scenario tests rarely exceed several hundred test subjects). Scenario tests are also limited in that there is no practical way to standardize the time between enrollment- and recognition-phase data collection. This duration may be days or weeks, depending on the accessibility of test subjects.

Scenario-based performance testing standards have defined a taxonomy for interaction between the test subject and the sensor; this taxonomy addresses presentations, attempts, and transactions, each of which describes a type of interaction between a test subject and a biometric system. This is particularly important because scenario testing is uniquely able to quantify “level of effort” in biometric system usage, and level of effort directly affects both accuracy and capture rates.
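To make the taxonomy concrete, the sketch below models a verification transaction as a sequence of attempts and applies a hypothetical decision policy: accept on the first acquired attempt whose score meets the threshold, allowing at most three attempts. The class names, the three-attempt limit, and the use of attempts-used as a level-of-effort measure are assumptions for illustration; actual decision policies are set by each test plan. Presentations (individual sample submissions within an attempt) are not modeled separately.

```python
from dataclasses import dataclass, field


@dataclass
class Attempt:
    """One attempt: `acquired` is False when no usable sample was captured
    (a failure to acquire); `score` is the comparison score otherwise."""
    acquired: bool
    score: float = 0.0


@dataclass
class Transaction:
    """A sequence of attempts made by one subject for one verification."""
    attempts: list = field(default_factory=list)

    def decide(self, threshold, max_attempts=3):
        """Hypothetical 'best of up to three attempts' decision policy.

        Accepts on the first acquired attempt whose score meets the
        threshold. Returns (accepted, attempts_used); attempts_used is a
        crude level-of-effort measure.
        """
        allowed = self.attempts[:max_attempts]
        for used, attempt in enumerate(allowed, start=1):
            if attempt.acquired and attempt.score >= threshold:
                return True, used
        return False, len(allowed)


t = Transaction([Attempt(False), Attempt(True, 0.4), Attempt(True, 0.9)])
print(t.decide(threshold=0.8))    # (True, 3)
print(t.decide(threshold=0.95))   # (False, 3)
```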

▶ Operational tests are those in which a biometric system collects and processes data from actual system users in a field application. Operational tests differ fundamentally from technology and scenario tests in that the experimenter has limited control over data collection and processing. Because operational tests should not interfere with or alter the operational usage being evaluated, it may be difficult to establish ground truth at the subject or sample level. As a result, operational tests may or may not be able to evaluate false accept rates (FAR), false reject rates (FRR), or failure-to-enroll rates (FTE); instead, they may only be able to evaluate acceptance rates (without distinction between genuine and impostor users) and operational throughput.
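When ground truth is unavailable, what remains computable from a field log is roughly what the paragraph above describes. The sketch below assumes a hypothetical log of `(timestamp_s, accepted)` records and an observation window in seconds; it reports an overall acceptance rate and transactions per minute, with no attempt to separate genuine from impostor users.

```python
def operational_summary(log, window_s):
    """Summarize a field log of (timestamp_s, accepted) records.

    The log format is hypothetical. Without genuine/impostor ground truth,
    only an overall acceptance rate and a throughput figure are derived.
    """
    if not log or window_s <= 0:
        raise ValueError("need a non-empty log and a positive window")
    accepted = sum(1 for _, ok in log if ok)
    return {
        "acceptance_rate": accepted / len(log),
        "transactions_per_min": len(log) / (window_s / 60.0),
    }


log = [(0, True), (30, True), (60, False), (90, True)]
print(operational_summary(log, window_s=120))
# {'acceptance_rate': 0.75, 'transactions_per_min': 2.0}
```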

One of the many challenges facing developers of operational testing standards is that each operational system differs in some way from all others, so that defining commonalities across all such tests is difficult. It is therefore essential that operational performance test reports specify which elements were measurable and which were not. Operational tests may also evaluate performance over time, such as with a system in operation for a number of months or years.

In a general sense, as a given biometric technology matures, it passes through the cycle of technology, scenario, and then operational testing. Biometric tests may also combine aspects of technology, scenario, and operational testing. For example, a test might combine controlled, “online” data collection from test subjects (an element of scenario testing) with full, “offline” comparison of this data (an element of technology testing). This methodology was implemented in iris recognition testing sponsored by the US Department of Homeland Security in 2005 [1].


Performance Testing Methodology Standardization

Elements Required in Biometric Performance Testing Standards

Biometric performance testing standards address the following areas:

1. Test planning, including requirements pertaining to test objectives, timeframes, controlling test variables, data collection methods, and data processing methods.
2. Hardware and software configuration and calibration, including requirements pertaining to algorithm implementation and device settings.
3. Data collection and management, including requirements pertaining to identification of random and systematic errors, collection of personally identifiable data, and establishing ground truth.
4. Enrollment and comparison processes, including requirements pertaining to implementation of genuine and impostor attempts and transactions for identification and verification.
5. Calculation of performance results, including formulae for calculating match rates, capture rates (FTA and FTE), and throughput rates.
6. Determination of statistical significance, including requirements pertaining to confidence interval calculation and reporting.
7. Methodology and results reporting, including requirements pertaining to test report contents and format.
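As an illustration of item 5, the sketch below computes capture-failure rates from success flags and combines them with comparison-level error rates. The relationships FAR = FMR × (1 − FTA) and FRR = FTA + FNMR × (1 − FTA) are the simplified forms commonly used for a single-attempt, single-template verification policy; they are an assumption here, and multi-attempt policies need different expressions. Function names and inputs are hypothetical.

```python
def failure_rate(outcomes):
    """Share of False entries in a list of success flags (usable for FTE or FTA)."""
    return sum(1 for ok in outcomes if not ok) / len(outcomes)


def far_frr(fmr, fnmr, fta):
    """Transaction-level rates from comparison-level rates, assuming a
    single-attempt, single-template verification policy."""
    far = fmr * (1 - fta)          # an impostor must be acquired, then falsely matched
    frr = fta + fnmr * (1 - fta)   # a genuine user fails to acquire, or is falsely non-matched
    return far, frr


fte = failure_rate([True, True, False, True])   # 1 of 4 subjects failed to enroll
far, frr = far_frr(fmr=0.01, fnmr=0.02, fta=0.05)
print(fte, round(far, 4), round(frr, 4))        # 0.25 0.0095 0.069
```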

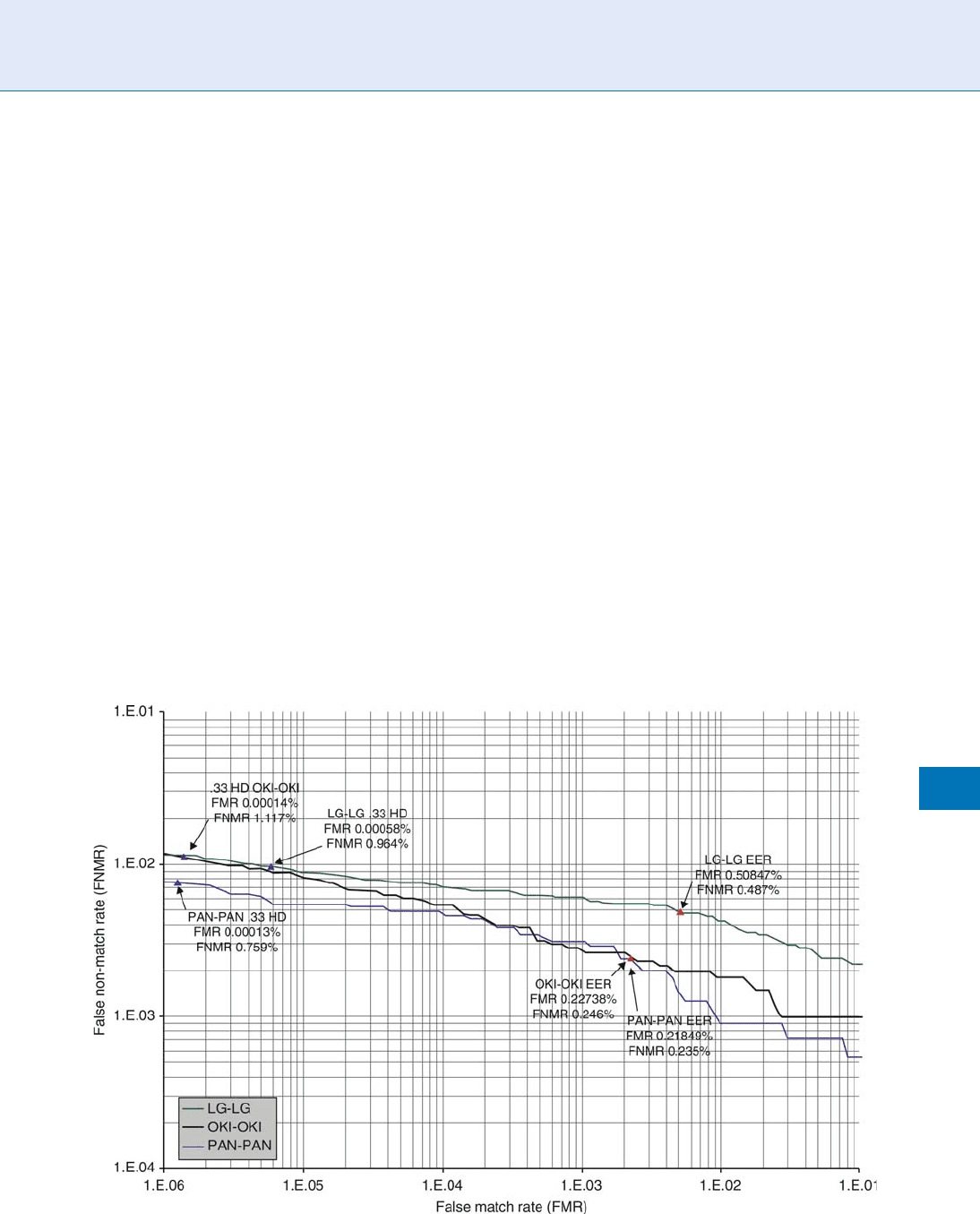

One of the major accomplishments of biometric testing standards has been to specify the manner in which the tradeoff between FMR and FNMR is rendered in chart form.

Verification system performance can be rendered through detection error trade-off (DET) curves or receiver operating characteristic (ROC) curves. A DET curve plots false positive and false negative error rates on both axes (false positives on the x-axis and false negatives on the y-axis), as shown in Fig. 1. An ROC curve plots the rate of false positives (i.e., impostor attempts accepted) on the x-axis against the corresponding rate of true positives (i.e., genuine attempts accepted) on the y-axis, plotted parametrically as a function of the decision threshold.
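The points on either curve can be computed by sweeping the decision threshold over the observed scores. The sketch below assumes similarity scores (higher means more alike) and a rule that a comparison is declared a match when its score is at least the threshold; both conventions, and the toy score lists, are assumptions. Plotting FNMR against FMR gives a DET curve (typically drawn on normal-deviate axes), and plotting 1 − FNMR against FMR gives an ROC curve.

```python
def error_rates(genuine, impostor, threshold):
    """FMR and FNMR at one threshold for similarity scores
    (a comparison is declared a match if score >= threshold)."""
    fmr = sum(1 for s in impostor if s >= threshold) / len(impostor)
    fnmr = sum(1 for s in genuine if s < threshold) / len(genuine)
    return fmr, fnmr


def det_points(genuine, impostor):
    """(threshold, FMR, FNMR) at every observed score value."""
    thresholds = sorted(set(genuine) | set(impostor))
    return [(t, *error_rates(genuine, impostor, t)) for t in thresholds]


genuine = [0.9, 0.8, 0.6]
impostor = [0.1, 0.3, 0.7]
for t, fmr, fnmr in det_points(genuine, impostor):
    print(f"t={t:.1f}  FMR={fmr:.2f}  FNMR={fnmr:.2f}")
```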

Identification system performance rendering is slightly more complex and depends on whether the test is open-set or closed-set.
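In a closed-set test every probe has a mate in the enrollment gallery, so performance is commonly summarized as a rank-k identification rate; in an open-set test some probes have no mate, so false positive and false negative identification rates (FPIR, FNIR) are reported at a threshold. The sketch below uses a simplified, hypothetical representation in which each search returns a dictionary mapping gallery IDs to scores; it ignores candidate-list length limits and tie handling, which a real test plan would have to specify.

```python
def rank_k_rate(searches, k=1):
    """Closed-set rank-k identification rate.

    searches: list of (scores, mate_id), where scores maps every gallery ID
    to its comparison score with the probe and mate_id is the probe's true ID.
    """
    hits = 0
    for scores, mate in searches:
        ranked = sorted(scores, key=scores.get, reverse=True)
        if mate in ranked[:k]:
            hits += 1
    return hits / len(searches)


def fpir_fnir(mated, non_mated, threshold):
    """Open-set FPIR and FNIR at one threshold.

    mated: list of (scores, mate_id) for probes whose mate is enrolled;
    non_mated: list of scores dicts for probes with no enrolled mate.
    """
    fnir = sum(1 for scores, mate in mated
               if scores.get(mate, float("-inf")) < threshold) / len(mated)
    fpir = sum(1 for scores in non_mated
               if max(scores.values()) >= threshold) / len(non_mated)
    return fpir, fnir


s1 = {"id-1": 0.9, "id-2": 0.2}
s2 = {"id-1": 0.6, "id-2": 0.7}
searches = [(s1, "id-1"), (s2, "id-1")]
print(rank_k_rate(searches, k=1))   # 0.5
print(rank_k_rate(searches, k=2))   # 1.0
print(fpir_fnir(searches, [{"id-1": 0.3, "id-2": 0.8}, {"id-1": 0.1, "id-2": 0.2}],
                threshold=0.65))    # (0.5, 0.5)
```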

Performance Testing Methodology Standardization. Figure 1 Detection error tradeoff (DET) curve.


Depending on the type of test (technology, scenario, or operational), certain elements will be emphasized more than others, and the presentation of results will differ depending on whether a test implements verification or identification.

Published Standards and Ongoing Efforts – International and National Activities

Several biometric performance testing standards have been published, and many additional biometric performance testing standards are in development. This section discusses ISO/IEC 19795-1, -2, and -3; ISO/IEC 19795-4 is discussed below under Performance Testing and Interoperability. These four standards are listed in the Registry of USG Recommended Biometric Standards Version 1.0, DRAFT for Public Comment, NSTC Subcommittee on Biometrics and Identity Management.

ISO/IEC 19795-1:2006 Information technology – Biometric performance testing and reporting – Part 1: Principles and framework [2] can be considered the starting point for biometric performance testing standardization. This document specifies how to calculate metrics such as false match rates (FMR), false non-match rates (FNMR), false accept rates (FAR), false reject rates (FRR), failure to enroll rates (FTE), failure to acquire rates (FTA), false positive identification rates (FPIR), and false negative identification rates (FNIR). 19795-1 treats both verification and identification testing, and is agnostic as to modality (e.g., fingerprint, face recognition) and test type (technology, scenario, operational).

ISO/IEC 19795-2:2007 Information technology – Biometric performance testing and reporting – Part 2: Testing methodologies for technology and scenario evaluation [3] specifies requirements for the technology and scenario evaluations described above. The large majority of biometric tests are of one of these two generic evaluation types. 19795-2 builds on 19795-1 and is concerned with “development and full description of protocols for technology and scenario evaluations” as well as “execution and reporting of biometric evaluations reflective of the parameters associated with biometric evaluation types” [4]. 19795-2 specifies which performance metrics and associated data must be reported for each type of test. The standard also specifies requirements for reporting on decision policies whereby enrollment and matching errors are declared.

ISO/IEC TR 19795-3:2007 Information technology – Biometric performance testing and reporting – Part 3: Modality-specific testing [5] is a technical report on modality-specific considerations. 19795-1 and -2 are modality-agnostic (although they are heavily informed by experts’ experience with fingerprint, face, and iris recognition systems). 19795-3, by contrast, reports on considerations specific to performance testing of fingerprint, face, iris, hand geometry, voice, vein recognition, signature verification, and other modalities. These considerations are important to deployers and system developers, as test processes vary from modality to modality. For example, in iris recognition testing, documenting biometric-oriented interaction between the subject and the sensor is central to both usability and accuracy; in face recognition testing, capture variables are much less likely to affect performance.

Within the US, three biometric performance testing standards were developed prior to publication of the ISO/IEC standards discussed above:

1. ANSI INCITS 409.1-2005 Information Technology – Biometric Performance Testing and Reporting – Part 1: Principles and Framework
2. ANSI INCITS 409.2-2005 Information Technology – Biometric Performance Testing and Reporting – Part 2: Technology Testing and Reporting
3. ANSI INCITS 409.3-2005 Information Technology – Biometric Performance Testing and Reporting – Part 3: Scenario Testing and Reporting

Performance Testing and Interoperability

ISO/IEC 19795-4 Biometric performance testing and reporting – Part 4: Interoperability performance testing specifies requirements for evaluating the accuracy and interoperability of biometric data captured or processed through different vendors’ systems. The standard, whose publication is anticipated in 2008, can be used to evaluate systems that collect data in accordance with 19794-N data exchange standards. 19795-4 helps quantify the accuracy of standards’ generic data representations relative to those of proprietary solutions. For example, is System A less accurate when processing standardized data than when processing proprietary data? Can System A reliably process standardized data from System B, and vice versa? 19795-4 contemplates online (scenario), offline (technology), and hybrid (scenario and technology) tests.
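Questions like these are often organized as a matrix: rows are the systems that generated the standardized templates or samples, columns are the systems that compare them, and each cell holds an error rate such as FNMR. The sketch below computes such a matrix from genuine scores, assuming each comparator has its own operating threshold (for example, one chosen to give a fixed FMR). The vendor labels, data layout, and threshold values are hypothetical, and this is not the 19795-4 procedure itself.

```python
def interoperability_fnmr(genuine_scores, thresholds):
    """FNMR for every (template generator, comparator) pair.

    genuine_scores maps (generator, comparator) -> list of genuine scores;
    thresholds maps comparator -> its operating threshold.
    """
    return {
        (gen, comp): sum(1 for s in scores if s < thresholds[comp]) / len(scores)
        for (gen, comp), scores in genuine_scores.items()
    }


scores = {
    ("A", "A"): [0.90, 0.80, 0.70, 0.95],   # native: A's templates on A's comparator
    ("B", "A"): [0.90, 0.50, 0.40, 0.80],   # B's standardized templates on A's comparator
}
for pair, rate in interoperability_fnmr(scores, {"A": 0.6}).items():
    print(pair, rate)
```

A cross-vendor cell that is much worse than the native cell in the same column would suggest that the standardized representation, or one vendor's use of it, is losing information.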

19795-4 is perhaps the highest-visibility performance testing standard due to its close relationship with the 19794-N standards. Data interchange standards (and conformance to these standards) have been the focus of much of the international community’s efforts in biometric standardization. 19795-4 specifies methods through which the adequacy of these standards can be implicitly or explicitly evaluated, leading to revisions or improvements in the standards where necessary.

Related End-User Testing Activities

Test efforts that have asserted compliance with published performance testing standards include but are not limited to the following:

1. NIST Minutiae Interoperability Exchange Test (MINEX) [6], which asserts compliance with ISO/IEC 19795-4, Interoperability performance testing.
2. U.S. Transportation Security Administration Qualified Product List (QPL) Testing [7], which asserts compliance with ANSI INCITS 409.3, Scenario Testing and Reporting.
3. NIST Iris Interoperability Exchange Test (IREX 08) [8], which asserts compliance with ISO/IEC 19795-4, Interoperability performance testing.

Current and Anticipated Customer Needs in Biometric Performance Testing

One challenge facing biometric performance testing standardization is that of successfully communicating performance results to non-specialist customers (e.g., managers responsible for making decisions on system implementation). To use even standards-compliant test reports successfully, the reader must learn a range of acronyms, interpret specialized charts, and understand the test conditions and constraints. The “so what?” is not always evident in biometric performance test reports. This is particularly the case when trying to graphically render error bounds and similar uncertainty indicators associated with performance test results.
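One common way to attach an uncertainty indicator to an observed error rate is a binomial confidence interval. The sketch below uses the Wilson score interval, which is one standard choice rather than a method mandated by the standards discussed above. It assumes independent trials; in practice, comparisons involving the same subjects are correlated, so the interval it reports is likely to be too narrow.

```python
import math


def wilson_interval(errors, trials, z=1.96):
    """Wilson score interval for an observed error rate errors / trials.

    z = 1.96 gives roughly 95% coverage under the (optimistic) assumption
    that every trial is independent.
    """
    if trials <= 0 or not 0 <= errors <= trials:
        raise ValueError("need 0 <= errors <= trials and trials > 0")
    p = errors / trials
    z2 = z * z
    denom = 1 + z2 / trials
    center = (p + z2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z2 / (4 * trials * trials)) / denom
    return max(0.0, center - half), min(1.0, center + half)


lo, hi = wilson_interval(errors=20, trials=1000)
print(f"observed 2.0%, 95% interval about {lo:.1%} to {hi:.1%}")
```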

A difficult-to-avoid limitation of biometric performance testing standards is that test results will differ based on test population, collection processes, data quality, and target application. In other words, a system’s error rate is not necessarily a reflection of its robustness, even if a test conforms to a standard.

Gaps in Standards Development

Performance has been defined somewhat narrowly in the biometric standards arena, most likely because the first-order consideration for biometric technologies has been the ability to reduce matching error rates. The traditional focus on matching error rates (particularly FMR) in biometric performance testing may be considered disproportionate in the overall economy of biometric system performance. As accuracy, enrollment, and throughput rates improve with the maturation of biometric technologies, performance testing standards may be required in areas such as usability, reliability, availability, and resistance to deliberate attacks. For example, the number of “touches” a fingerprint sensor can sustain before the match rates associated with its captured images are negatively affected could be the subject of a performance testing standard.

An additional gap in biometric performance testing standards is testing under non-mainstream conditions, such as with devices exposed to cold or to direct sunlight, or with untrained populations and/or operators. Many tests are predicated on controlled-condition data collection, though biometric applications for population control or military operations are often highly uncontrolled.

Conformance testing is a third gap in performance testing standards. The international community is working on methods for validating that test reports and methodologies conform to published standards. Certain elements can be validated in an automated fashion, such as the presence of required performance data; other elements, such as those that describe how testing was conducted, may have to rely on test lab assertions.
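The automated part of such validation can be as simple as checking that a report contains every required item. In the sketch below the list of required fields is hypothetical and is not drawn from any published standard; a real checker would derive it from the reporting clauses of the applicable standard. Elements that depend on how testing was conducted cannot be verified this way.

```python
# Hypothetical required items; a real checker would take these from the
# reporting clauses of the applicable standard.
REQUIRED_FIELDS = ("test_type", "num_subjects", "fmr", "fnmr", "fta", "fte")


def missing_fields(report):
    """Names of required items that are absent (or None) in a report dict."""
    return [name for name in REQUIRED_FIELDS if report.get(name) is None]


report = {"test_type": "scenario", "num_subjects": 200,
          "fmr": 0.001, "fnmr": 0.02, "fta": 0.0}
print(missing_fields(report))   # ['fte']
```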

Role of Industry/Academia in the Development of the Required Testing Methods

Biometric performance tests predated the development of standardized methodologies by several years. Government and academic researchers and scientists gradually refined performance testing methods in the early 1990s, with many seminal works performed in voice recognition and fingerprint recognition. The National Biometric Test Center at San Jose State University [9] was an early focal point of test methodology development. Today, leading developers of performance testing standards include the National Institute of Standards and Technology (NIST) [10], an element of the US Department of Commerce, and the UK National Physical Laboratory (NPL) [11].

Biometric vendors bring considerable expertise to performance testing, having unparalleled experience in testing their own sensors and algorithms. However, vendors are not highly motivated to publish comprehensive, standards-compliant performance tests. Speaking generally, vendors are most interested in practical answers to questions such as: how many test subjects and trials are necessary to assert an FMR of 0.1%? Biometric services companies (e.g., consultancies and systems integrators) also support government agencies in standardized performance test design and execution.
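A rough answer to the FMR question above comes from the “rule of three” commonly cited in biometric testing: if no errors are observed in n independent trials, an approximate 95% upper confidence bound on the error rate is 3/n. The sketch below computes both the exact binomial count and the rule-of-three approximation. It assumes statistically independent trials, which cross-comparisons among a limited pool of subjects do not strictly provide, so the number of distinct subjects matters as well as the number of comparisons.

```python
import math


def trials_for_zero_errors(target_rate, confidence=0.95):
    """Smallest n such that observing 0 errors in n independent trials
    bounds the true rate below target_rate at the given confidence:
    solves (1 - target_rate) ** n <= 1 - confidence for n."""
    return math.ceil(math.log(1 - confidence) / math.log(1 - target_rate))


def rule_of_three_bound(n_trials):
    """Approximate 95% upper bound on an error rate after 0 errors in n trials."""
    return 3 / n_trials


print(trials_for_zero_errors(0.001))   # 2995, close to the rule-of-three 3000
print(rule_of_three_bound(3000))       # 0.001
```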

Summary

Biometric performance testing standards enable repeatable evaluations of biometric algorithms and systems in controlled lab and real-world operational environments. Performance testing standards are central to the successful implementation of biometric systems, as government and commercial entities must be capable of precisely measuring the accuracy and usability of implemented systems. Deployers must also be able to predict future performance as identification systems grow larger and as transaction volume increases.

References

1. http://www.biometricgroup.com/reports/public/ITIRT.html
2. http://www.iso.org/iso/iso_catalogue/catalogue_tc/catalogue_detail.htm?csnumber=41447
3. http://www.iso.org/iso/iso_catalogue/catalogue_tc/catalogue_detail.htm?csnumber=41448
4. http://www.iso.org/iso/iso_catalogue/catalogue_tc/catalogue_detail.htm?csnumber=41448
5. http://www.iso.org/iso/iso_catalogue/catalogue_tc/catalogue_detail.htm?csnumber=41449
6. http://fingerprint.nist.gov/minex/
7. http://www.tsa.gov/join/business/biometric_qualification.shtm
8. http://iris.nist.gov/irex/IREX08_conops_API_v2.pdf
9. http://www.engr.sjsu.edu/biometrics/
10. http://www.itl.nist.gov/div893/biometrics/standards.html
11. http://www.npl.co.uk

Perpetrator Identification

▶ Gait, Forensic Evidence of

Personal Data

Personal data is any information that may be used, either individually or when combined with other data, to identify a person. For example, a name and address are usually sufficient to identify a person, so this data pair would be considered personal data. A more subtle example is a combination of hobbies and postal code obtained from a retail liquor store’s customer database. By themselves, these data items would not be uniquely identifying, but when they are linked with another database, say, that of an iguana owners’ club, and combined with the common-sense knowledge that iguanas make rare pets, it becomes almost certain who patronized the liquor store. In other words, any data that could potentially reveal the identity of a person must be treated as personal data.
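The linkage described above can be sketched as a join on quasi-identifiers. The example uses made-up records and hypothetical field names (`hobby`, `postal_code`); it counts how often each quasi-identifier combination occurs (a combination seen only once is uniquely identifying within that dataset) and then links the anonymous store records to a second, named dataset.

```python
from collections import Counter


def unique_combinations(records, keys):
    """Quasi-identifier combinations that occur exactly once in `records`."""
    counts = Counter(tuple(r[k] for k in keys) for r in records)
    return {combo for combo, n in counts.items() if n == 1}


def link(records_a, records_b, keys):
    """Pairs of records from two datasets that agree on every key."""
    return [(a, b) for a in records_a for b in records_b
            if all(a[k] == b[k] for k in keys)]


KEYS = ("hobby", "postal_code")
store = [  # anonymous customer records (made-up data)
    {"customer": 1, "hobby": "iguanas", "postal_code": "K1A"},
    {"customer": 2, "hobby": "golf", "postal_code": "K1A"},
    {"customer": 3, "hobby": "golf", "postal_code": "K1A"},
]
club = [{"member": "member-17", "hobby": "iguanas", "postal_code": "K1A"}]

print(unique_combinations(store, KEYS))   # {('iguanas', 'K1A')}
for s, c in link(store, club, KEYS):
    print(s["customer"], "->", c["member"])   # 1 -> member-17
```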

▶ Privacy Issues


Personal Information Search

▶ Background Checks

Person-Independent Model

▶ Universal Background Models

Phase

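Every complex number $a + bi$ can be expressed in polar coordinate form as $Ae^{i\varphi}$, where $A = \sqrt{a^2 + b^2}$ is the amplitude and $\varphi$ is the phase of the complex number. The phase $\varphi$ can be computed as $\arctan(b/a)$, with the quadrant determined by the signs of $a$ and $b$. By convention, the phase is taken to lie in the range $[0, 2\pi)$.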
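In Python the quadrant-aware computation is available through `cmath.polar` (equivalently `math.atan2(b, a)`), which returns a phase in (−π, π]. The sketch below shifts that result into the [0, 2π) convention stated above and shows why the plain arctan(b/a) is not sufficient on its own.

```python
import cmath
import math


def amplitude_phase(z):
    """Return (A, phi) with z = A * exp(i * phi) and phi in [0, 2*pi)."""
    A, phi = cmath.polar(z)           # phi = atan2(imag, real), in (-pi, pi]
    return A, phi % (2 * math.pi)     # map into [0, 2*pi)


z = complex(-1, -1)
A, phi = amplitude_phase(z)
print(A, phi)                         # sqrt(2) and 5*pi/4
print(math.atan(z.imag / z.real))     # pi/4: arctan(b/a) alone loses the quadrant
```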

▶ Iris Recognition, Overview

Phoneme

The phoneme is to spoken language what the letter is to written language: the representation of an individual speech sound. The English word “sick” comprises the three phonemes /s-ɪ-k/, whereas “thick” consists of /θ-ɪ-k/, “sack” consists of /s-æ-k/, and “sit” consists of /s-ɪ-t/. The phonetic symbols, which can be used for the sounds of any language, are defined in the International Phonetic Alphabet. Strictly speaking, the phoneme is an abstract concept used in linguistics, and phonemes often correspond to complex compound sounds. For example, the phoneme /b/ (as in “big”) is produced by the speaker first closing the lips and then releasing the built-up air pressure, creating a plosive sound. The period of lip closure, the plosion, and the transitions to and from the previous and subsequent phonemes are all identifiable as distinct events in the audio stream.

▶ Liveness Assurance in Voice Authentication

▶ Voice Sample Synthesis

Photogrammetry

Photogrammetry is the examination of two images taken from different positions to identify three-dimensional points on an object or face represented in two dimensions. It is akin to methods of stereoscopy.
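The simplest case of this idea is a rectified stereo pair: two identical pinhole cameras with parallel optical axes separated by a baseline B. A point imaged at horizontal positions x_left and x_right has disparity d = x_left − x_right, and its depth is Z = fB/d, where f is the focal length in pixels. The sketch below assumes that idealized geometry, with image coordinates measured from the principal point; general photogrammetry handles arbitrary camera poses and calibration, which this example does not.

```python
def depth_from_disparity(focal_px, baseline_m, x_left_px, x_right_px):
    """Depth of a point seen by two parallel, rectified pinhole cameras."""
    disparity = x_left_px - x_right_px
    if disparity <= 0:
        raise ValueError("point must have positive disparity")
    return focal_px * baseline_m / disparity


def triangulate(focal_px, baseline_m, left_xy, right_x):
    """(X, Y, Z) in metres in the left camera frame; pixel coordinates are
    measured from the principal point, which both cameras share."""
    Z = depth_from_disparity(focal_px, baseline_m, left_xy[0], right_x)
    X = left_xy[0] * Z / focal_px
    Y = left_xy[1] * Z / focal_px
    return X, Y, Z


print(depth_from_disparity(1000, 0.1, 550, 500))   # 2.0
print(triangulate(1000, 0.1, (550, 100), 500))
```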

▶ Face, Forensic Evidence of

Photography for Face Image Data

TED TOMONAGA

Konica Minolta Technology Center, Inc., Tokyo, Japan

Synonyms

Face photograph; Facial photograph; ID photograph; Photography guidelines; Photometric guidelines

Definition

Face photography is used in passports, visas, driver licenses, and other identification documents. Face photographs can be used by human viewers or by automated face recognition systems, either for confirmation of a claimed identity (usually termed verification) or, by searching a database of face images, for determining the possible identity of an individual (usually termed identification). ISO/IEC 19794-5 defines a standard data format for digital face images to allow interoperability among face recognition systems, government agencies, and other creators and users of face images.

In addition to image quality factors such as ▶ resolution, ▶ contrast, and ▶ brightness, many other factors affect face recognition accuracy, including subject positioning, ▶ pose and ▶ expression, ▶ illumination uniformity, and the use of eyeglasses or makeup, as well as the time difference between two photographs being compared.

Introduction

Following the selection of a digital face image by the International Civil Aviation Organization (ICAO) in 2003 as the primary biometric technology for use in ▶ ePassports, machine-assisted face recognition has become widely used for various identification and verification purposes. Compared with other ▶ biometric technologies, face recognition is usually considered non-invasive and more socially acceptable [1]. The accuracy and speed of face recognition technology have improved considerably in recent years, due in part to a series of government-sponsored, objective competitions among companies and academic institutions producing face recognition systems [2, 3].

The face image interchange standard known as ISO/IEC (International Organization for Standardization/International Electrotechnical Commission) 19794-5 Biometric Data Interchange Formats – Face Image Data was approved as an international standard by ISO/IEC JTC1 SC37 in 2005 [4]. That standard defines a data format for digital face images to allow interoperability among face-image-processing systems.

However, many factors affect face recognition system performance, including an individual’s appearance (such as his or her facial characteristics, hair style, and accessories) and the image acquisition conditions (such as the camera’s field-of-view, focus and shutter speed, depth-of-field, background, and lighting). As a consequence, many of the countries producing ePassports have their own guidelines for the production and submission of face photographs [5–8].

This chapter describes how to arrange lighting and reflective surfaces relative to the camera and subject, and provides specific advice on the acceptable amount of variation in ▶ illumination across the face, on how to avoid shadows on the face and background, and on the design of a user interface that can help ensure proper head positioning. Further information may be found in ISO/IEC 19794-5 Amendment 1:2007, Conditions for taking photographs for face image data [9]. The amendment provides explicit guidance for the design of photographic studios, photo booths, and other sites producing conventional printed photographs or digital images of faces that may be used in passports, visas, driver licenses, or other identification documents.

Enrollment Guidelines

The use of automated face recognition requires that an input face image first be enrolled by the system; that is, the specific set of face features to be used by the recognition system must be measured and stored. Correct enrollment requires that the input image be of high quality and meet the following criteria. Note that the numbers in parentheses in the following sections are the relevant subclause numbers of the ISO/IEC 19794-5 face image interchange standard.

Subject Guidelines

For purposes of enrollment in an automated face recognition system, the following general subject guidelines should be observed:

Pose angle – The subject must be in a frontal pose. Subjects should have their shoulders square towards the camera and be looking directly at the camera. There should be no rotation of the head left or right or up or down, nor should it be tilted towards either shoulder. For rotation of the head left/right and up/down (yaw and pitch), the compliance requirement is < 5° (7.2.2). For head tilt (roll), the compliance requirement is < 8° (7.2.2). The requirement for roll is less restrictive because an in-plane rotation of the head can be corrected more easily by automated face recognition systems.
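A compliance check against these limits is straightforward once pose angles are available. The sketch below assumes yaw, pitch, and roll in degrees come from some head-pose estimator (not shown); it also estimates roll from two eye centers, which is the kind of in-plane rotation a recognition system can undo. Function names are hypothetical.

```python
import math


def pose_compliant(yaw_deg, pitch_deg, roll_deg, max_yaw_pitch=5.0, max_roll=8.0):
    """True if |yaw| and |pitch| are below 5 degrees and |roll| below 8 degrees."""
    return (abs(yaw_deg) < max_yaw_pitch
            and abs(pitch_deg) < max_yaw_pitch
            and abs(roll_deg) < max_roll)


def roll_from_eyes(eye_left_in_image, eye_right_in_image):
    """In-plane tilt, in degrees, of the line joining the two eye centers.

    Points are (x, y) pixels with y growing downwards; rotating the image
    until this angle is zero levels the eyes.
    """
    dx = eye_right_in_image[0] - eye_left_in_image[0]
    dy = eye_right_in_image[1] - eye_left_in_image[1]
    return math.degrees(math.atan2(dy, dx))


print(pose_compliant(3.0, -4.0, 7.0))                    # True
print(pose_compliant(6.0, 0.0, 0.0))                     # False
print(round(roll_from_eyes((100, 100), (200, 110)), 2))  # 5.71
```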

Face size/position adjustment – The face size can be adjusted, if needed, by changing the distance between the subject and the camera or by optical zoom magnification.

Neutral expression – The subject’s face should be relaxed and without expression; in particular, the subject should not be smiling. That is, his or her expression should be neutral, with eyes open and mouth closed.

Eyes closed/obstructed – There should be no obstruction of the eyes due to eyeglass rims, tint, or glare, bangs, eye patches, head clothing, or closed eyes (7.2.3, 7.2.11). Hats, scarves, or any other apparel that may obstruct the face should be removed.


Background – The background should be unpatterned and plain, such as a solid-color wall or cloth. The background color may be light gray, light blue, white, or off-white. The background should be separately illuminated so that no shadows are visible on the background behind the subject’s face (A.2.4.3).

Camera Guidelines

To ensure correct camera focus, color, and exposure, and minimal motion blur and geometric distortion, the following general guidelines should be observed:

Shutter speed – The shutter speed should be high enough to prevent motion blur (1/60–1/250 s), unless electronic flash is the predominant source of illumination.

Color balance – The image color balance should reflect natural colors with respect to expected skin tones. Color balance can be affected by inappropriate white balancing or red-eye (7.3.4).

Brightness exposure/contrast – Exposure should be checked with an exposure meter. Gradations in skin texture should be visible, with no saturation on the face (7.3.2).

Camera-to-subject distance – To ensure minimal geometric distortion, the camera-to-subject distance should be within the range of 1.2–2.5 m in a typical photo studio.

Camera–subject height – The camera should be tripod-mounted for stability. The optimum height of the camera is at the subject’s eye level. Height can be adjusted either by using a height-adjustable stool for the subject or by adjusting the tripod’s height.

Centering – Keeping the subject’s face at the center of the frame is recommended. The horizontal center of the face shall be between 45% and 55% of the image width (8.3.2). The vertical center of the face shall be between 30% and 50% of the image height, as measured from the top of the image (8.3.3).

Head size relative to the image size – The ratio of head width to image width should be between 5:7 and 1:2 (8.3.4, 8.3.5, A.3.2.2).
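The centering and head-size rules can be checked numerically once the face center and head width have been measured in pixels, whether by a face detector or by manual markup (neither is shown). The sketch below applies the percentages and the 1:2 to 5:7 ratio range exactly as quoted above; the function and argument names are hypothetical.

```python
def geometry_checks(img_w, img_h, face_cx, face_cy, head_w):
    """Centering and head-size checks with pixel inputs.

    face_cx, face_cy: face center measured from the top-left image corner;
    head_w: head width. All values in pixels.
    """
    ratio = head_w / img_w
    return {
        "horizontal_center": 0.45 <= face_cx / img_w <= 0.55,   # 8.3.2
        "vertical_center": 0.30 <= face_cy / img_h <= 0.50,     # 8.3.3, from the top
        "head_size": 1 / 2 <= ratio <= 5 / 7,                   # ratio range as quoted
    }


print(geometry_checks(img_w=420, img_h=525, face_cx=210, face_cy=210, head_w=240))
```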

Focal length of camera lens (35 mm format equivalent) – Use a normal to medium telephoto lens (50–130 mm) at a shooting distance of 1.2–2.5 m.

Resolution – The spatial resolution should be greater than about 2 pixels per mm. Resolution can easily be checked by test shooting a ruler (7.3.3). For optimum performance of a face recognition system, the number of pixels between the eyes shall be at least 90 (8.4.1) and preferably 120 (A.3.1.1).

Dynamic Range in Face – There should be at least 7 bits of intensity variation (i.e., at least 128 unique values) in the facial region after conversion to grayscale (7.4.2).
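The resolution and dynamic-range rules above reduce to simple arithmetic. The sketch below assumes eye centers in pixel coordinates, a facial region already converted to 8-bit grayscale, and a ruler test shot summarized as the pixel distance between two marks a known number of millimetres apart. Names are hypothetical; thresholds mirror the text.

```python
import math


def pixels_per_mm(pixel_span, mm_span):
    """Spatial resolution from a ruler test shot."""
    return pixel_span / mm_span


def resolution_checks(eye_a, eye_b, face_gray, ruler_px, ruler_mm):
    """eye_a, eye_b: (x, y) eye centers in pixels; face_gray: iterable of
    8-bit grayscale values from the facial region."""
    eye_distance = math.dist(eye_a, eye_b)
    levels = len(set(face_gray))
    return {
        "spatial_over_2px_per_mm": pixels_per_mm(ruler_px, ruler_mm) > 2,
        "eyes_at_least_90px": eye_distance >= 90,        # 8.4.1
        "eyes_preferably_120px": eye_distance >= 120,    # A.3.1.1
        "at_least_128_gray_levels": levels >= 128,       # 7.4.2
    }


checks = resolution_checks((100, 200), (200, 200), range(256), ruler_px=300, ruler_mm=100)
print(checks)
```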

Lighting Guidelines

Artistic portraits are often taken with intentionally uneven illumination, whereas face photographs taken for identification by humans or machines should display even illumination of the face. Various technical publications have reported that lighting arrangements that evenly illuminate the face without producing shadows around the nose or eyes improve the accuracy of automated face recognition systems [10, 11].

Example Configurations for a Photo Studio or Store

Typically, a photo studio or a photo store is a professionally operated facility, equipped with a film or digital camera, multiple adjustable light sources, a suitable background or backdrop cloth, and subject positioning apparatus designed to obtain high-quality portraits. Some of the design considerations for a photo studio or store are described in the following paragraphs:

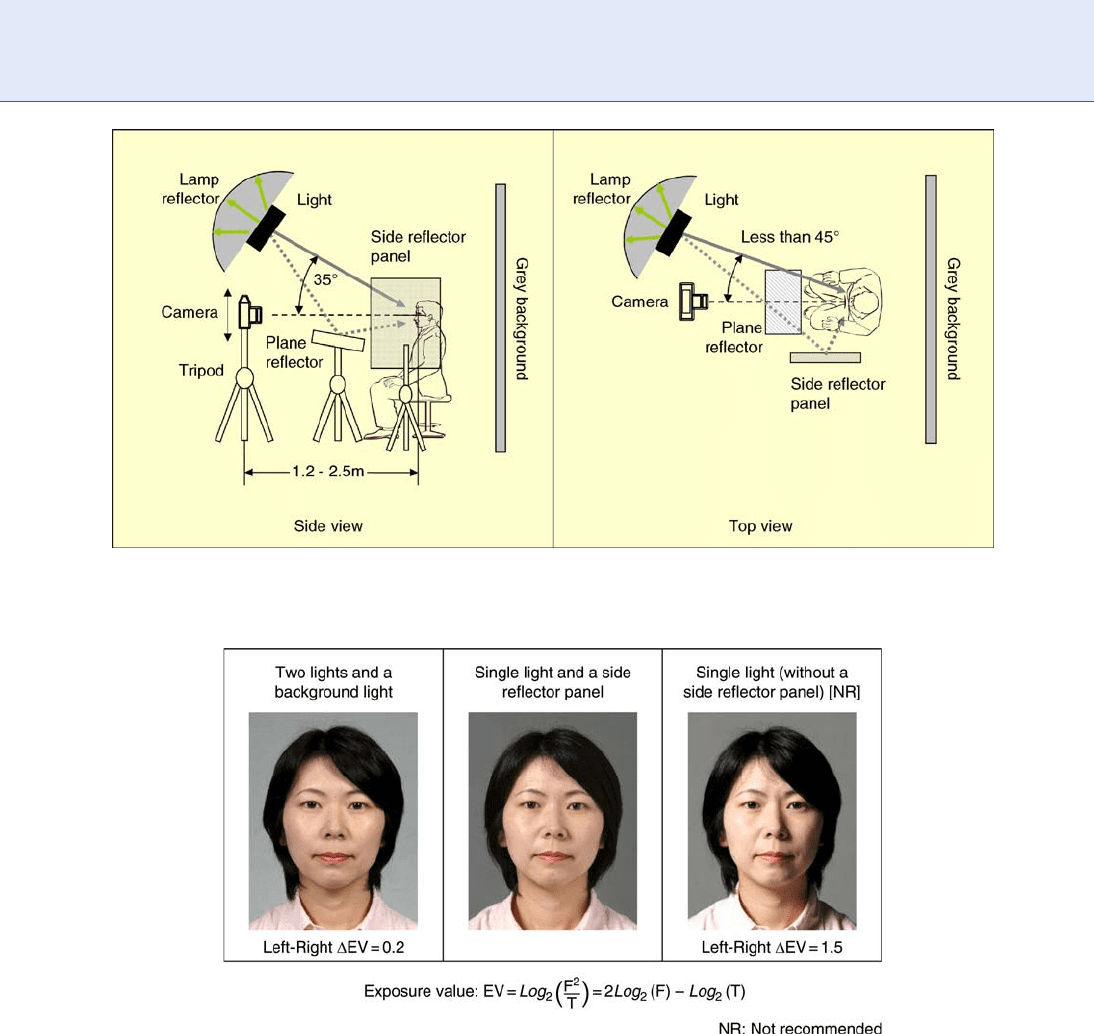

Lighting uniformity (no shadows or glare) on the face – A simple arrangement is a single light source and multiple reflector panels to illuminate the subject’s face uniformly. The light, shown with a lamp reflector, should be placed approximately 35° above the line between the camera and the subject, and be directed towards the subject’s face at a horizontal angle of less than 45° from that line (Fig. 1). Ideally, there would be two diffused light sources in front of the face at 45° on either side of the camera. The maximum difference among the four exposure values measured on the left and right sides of the face, the chin, and the forehead should be less than 1 EV (Fig. 2). The measurements may be made by placing an incident light meter at those four positions on the subject’s face and pointing the meter towards the camera. If the values are not within 1 EV, the lights should be repositioned more symmetrically about the subject-to-camera line, or additional reflective surfaces may be used to redistribute the light.
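Because one EV corresponds to a factor of two in light level, the 1 EV criterion means the brightest of the four readings should be less than twice the dimmest. The sketch below assumes the four readings are available as illuminance values (for example in lux) keyed by position; if a meter reports EV directly, the spread is simply the maximum minus the minimum reading. Position names and values are hypothetical.

```python
import math


def ev_spread(readings_lux):
    """Spread, in EV, between the brightest and dimmest incident readings.
    One EV is a factor of two, so the spread is log2(max / min)."""
    values = list(readings_lux.values())
    return math.log2(max(values) / min(values))


def lighting_is_even(readings_lux, max_ev=1.0):
    """Apply the 'less than 1 EV' evenness criterion from the text."""
    return ev_spread(readings_lux) < max_ev


readings = {"left": 800, "right": 600, "chin": 500, "forehead": 900}
print(round(ev_spread(readings), 2), lighting_is_even(readings))   # 0.85 True
```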


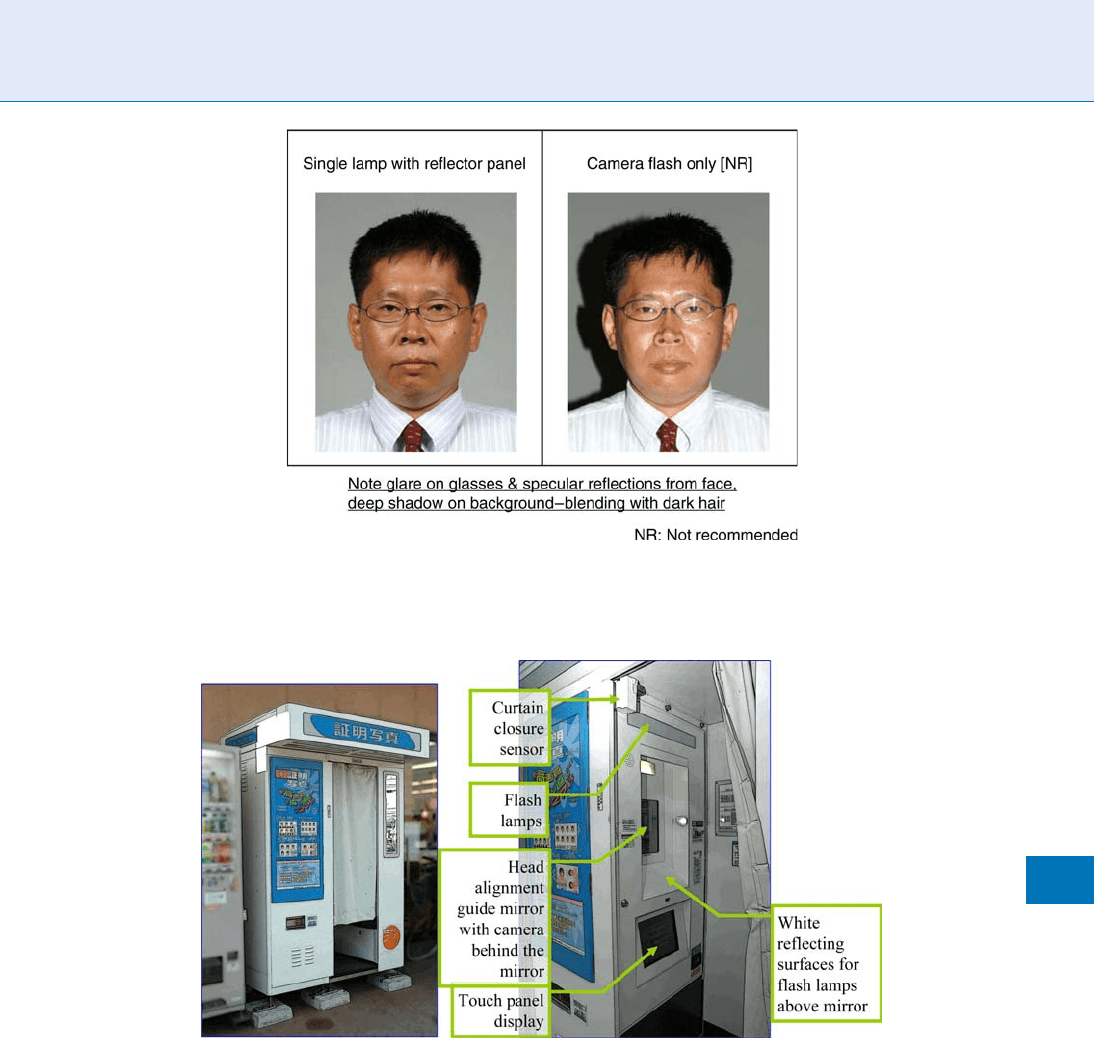

No on-camera flash – An on-camera flash should not be used. A single bare “point” light source such as a camera-mounted flash often produces “hot spots” (very bright areas on the face) and is not acceptable for imaging (Fig. 3). The use of on-camera flash can also produce “red-eye,” particularly for dark-adapted subjects.

Example Configuration for a Photo Booth

A photo booth is typically a coin-operated, self-portrait photography unit, mostly used for taking ID pictures. Similar environments are used in registration offices for drivers’ licenses, etc. (Figs. 4 and 5). Figure 6 shows an example of an arrangement of lighting and a camera for a photo booth. Some of the system considerations for a photo booth are described in the following paragraphs:

Adjustment of head size, expression, etc. by monitor GUI – There are many kinds of user interface displays for adjustment of head size and position. Figure 7 shows one example of a user interface: a head-positioning frame. Even with the use of a head-positioning display, an image preview should be provided to allow the subject to recapture the image before it is printed or written to a storage medium, in case the subject deems his or her pose or expression unacceptable. Illustrations of acceptable poses and expressions should be provided inside the booth.

Photography for Face Image Data. Figure 1 Single lamp arrangement for a photo studio.

Photography for Face Image Data. Figure 2 Evenness of illumination.

Face image quality assessment software – As an alternative to a head-positioning display, ▶ face detection software or ▶ quality assessment software that automatically sizes and centers the head within the field-of-view can be used to ensure proper head positioning. However, because such software sometimes does not find the face position correctly, a preview image should be provided, with provision for manual override of the automatically determined position.

Photography for Face Image Data. Figure 3 Effect of using on-camera flash: note glare on the glasses and specular reflections from the face, and the deep shadow on the background blending with dark hair.

Photography for Face Image Data. Figure 4 Example of a photo booth (1).

Summary

The use of proper illumination is important to obtain face photographs suitable for identification purposes. A key factor is the evenness of the illumination. Proper lighting arrangements to reduce excessive shading and
