Li S.Z., Jain A.K. (eds.) Encyclopedia of Biometrics


a multipart standard is under development, covering several biometric modalities. This multipart standard is known as ISO/IEC 19794. Four parts of this standard cover finger-based biometrics, better understood as fingerprint biometrics.

1. Part 2 deals with the way a minutiae-based feature vector or template has to be coded
2. Part 3 standardizes the way to code the spectral information of the fingerprint
3. Part 4 determines the coding of a fingerprint raw image
4. Part 8 establishes a way to code a fingerprint by its skeleton

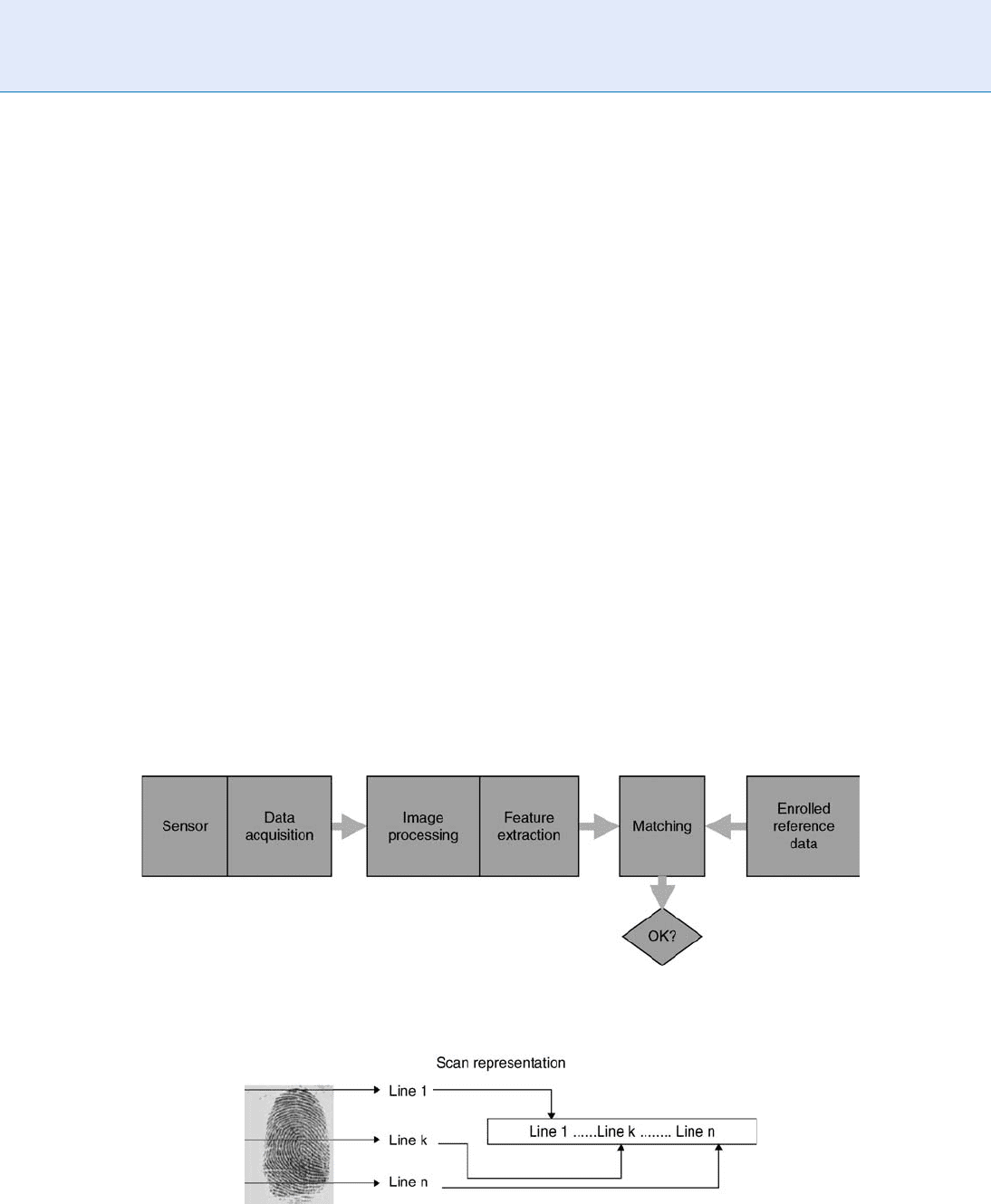

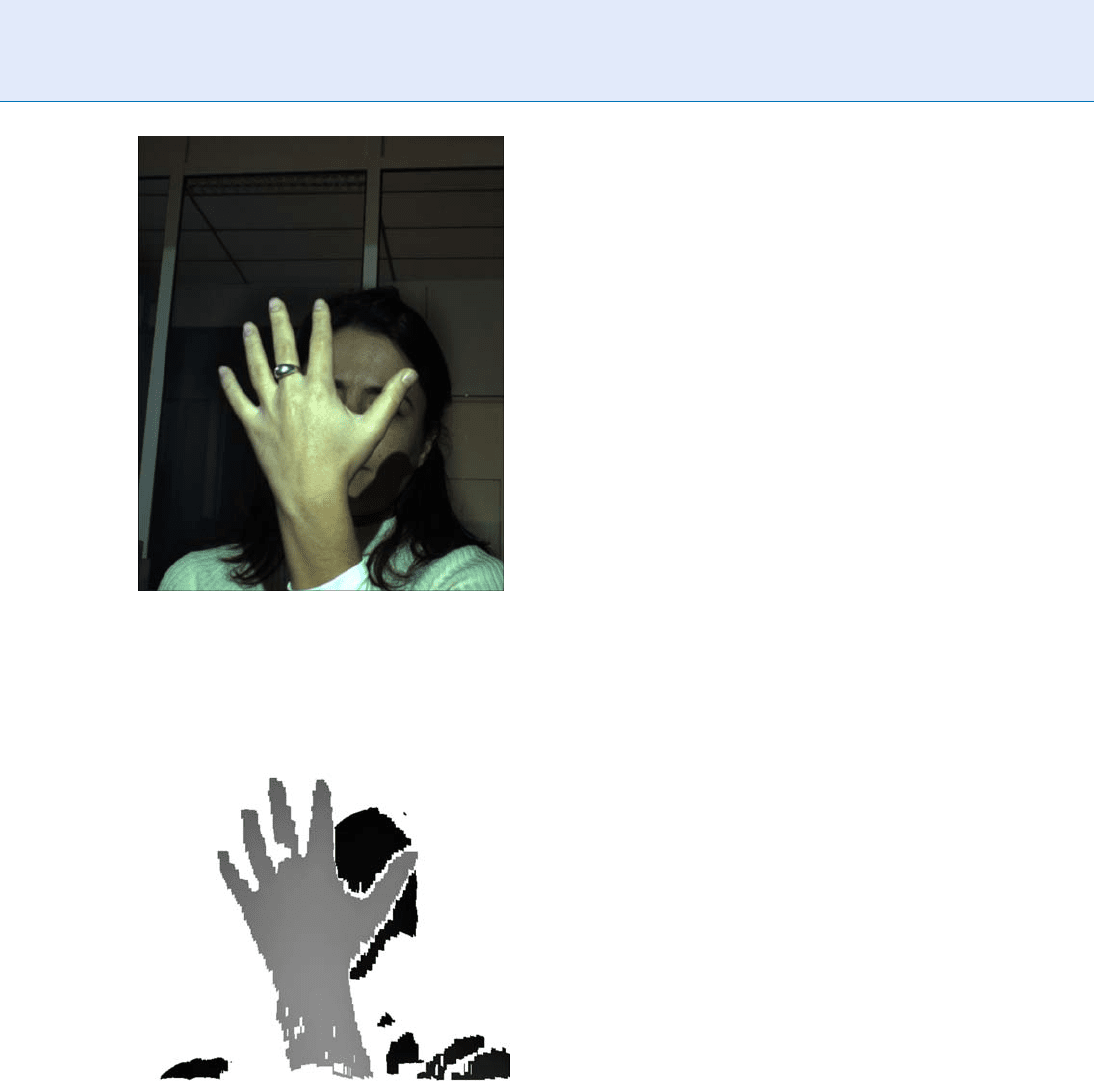

Figure 1 shows the basic architecture of a typical fingerprint verification system. A finger is presented to a sensor and a raw image is acquired. Image processing techniques enhance the image quality before a feature vector of characteristic features can be extracted. The features are compared with a previously recorded reference data set to determine the similarity between the two sets before the user presenting the finger is authenticated. The reference data is stored in a database or on a portable data carrier.

The following subsections explain the basic characteristics of each type of finger-based standard. The image standard (Part 4) is presented first, as it is the first step in the fingerprint comparison process shown in the architecture above. This is followed by the other finger-based standards, each of which deals with samples already processed.

Finger Images

As already mentioned, the way a fingerprint image is to be coded is defined in the ISO/IEC 19794-4 International Standard [1], titled "Information technology - Biometric data interchange formats - Part 4: Finger image data." How the finger is scanned is outside the scope of the standard, but after acquisition the image shall represent a finger in upright position, i.e., vertical and with the tip of the finger in the upper part of the image. The way to code such an image is shown in Fig. 2, where the top line is the first to be stored and/or transmitted. This contradicts mathematical graphing practice but agrees with typical digital image processing. For images that require two or more bytes per pixel intensity, the most significant byte is stored/transmitted first, and bytes follow most-significant-bit coding.

Finger Data Interchange Format, Standardization. Figure 1 Typical Biometric Verification System.

Finger Data Interchange Format, Standardization. Figure 2 Coding structure of a fingerprint image. Image taken

from [1].


Finger Data Interchange Format, Standardization

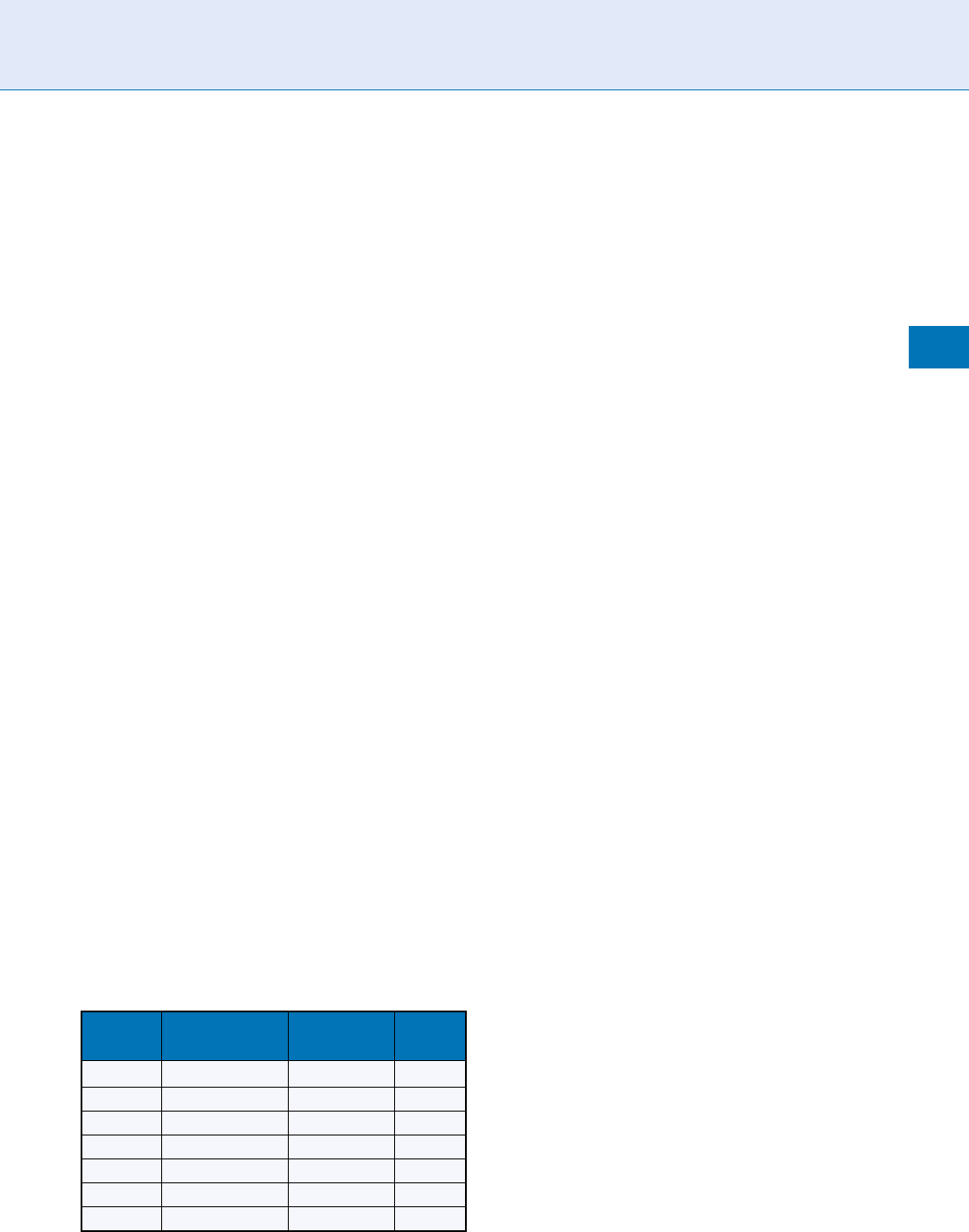

This International Standard also includes a set of

constraints for image acquisition. It determines the

pixel aspect ratio, which shall be between 0.99 and

1.01 (horizontal/vertical sizes), as well as several

image acquisition levels, as stated in Table 1.

After specifying the requirements for the image to be stored or transmitted, this International Standard details the structure of the data record referring to a finger image. Following CBEFF specifications [2] (see entry "Common Biometric Exchange Framework Formats"), a record referring to a finger image has the following structure (for details refer to the latest version of this International Standard [1]):

A single fixed-length (32-byte) general record header containing information about the overall record, with the following fields:
– Format identifier (4 bytes with the hexadecimal value 0x46495200) and version number (coded in another 4 bytes)
– Record length (in bytes) including all finger images within that record (coded in 6 bytes)
– Capture device ID (2 bytes) and image acquisition level (2 bytes)
– Number of fingers (1 byte), scale units used (1 byte), and scan resolution used (2 bytes for horizontal and another 2 for vertical resolution)
– Image resolution, coded the same way as the scan resolution, whose value shall be less than or equal to the scan resolution
– Pixel depth (1 byte) and image compression algorithm used (coded in 1 byte)
– 2 bytes reserved for future use

A single finger record for each finger, view, multi-finger image, or palm, consisting of:
– A fixed-length (14-byte) finger header containing information pertaining to the data for a single or multi-finger image, which gives information about:
  – Length of the finger data block (4 bytes)
  – Finger/palm position (1 byte)
  – Count of views (1 byte) and view number (1 byte)
  – Finger/palm image quality (1 byte) and impression type (1 byte)
  – Number of pixels per horizontal line (2 bytes) and number of horizontal lines (2 bytes)
  – 1 byte reserved for future use
– Compressed or uncompressed image data view for a single, multi-finger, or palm image, which has to be smaller than 43 × 10^8 bytes.
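The 32-byte general record header listed above can be read with a few lines of code. The following Python sketch is illustrative only: it assumes the fields appear in the order listed and are big-endian (most significant byte first), and the names `FingerImageHeader` and `parse_general_header` are hypothetical, not taken from the standard.

```python
import struct
from dataclasses import dataclass

HEADER_LEN = 32
FORMAT_ID = bytes.fromhex("46495200")  # format identifier quoted in the text


@dataclass
class FingerImageHeader:
    version: bytes
    record_length: int
    capture_device_id: int
    acquisition_level: int
    num_fingers: int
    scale_units: int
    scan_res_x: int
    scan_res_y: int
    image_res_x: int
    image_res_y: int
    pixel_depth: int
    compression: int


def parse_general_header(data: bytes) -> FingerImageHeader:
    """Parse the general record header (field order and endianness are assumptions)."""
    if len(data) < HEADER_LEN:
        raise ValueError("general record header needs 32 bytes")
    if data[0:4] != FORMAT_ID:
        raise ValueError("unexpected format identifier")
    version = data[4:8]
    # The record length is a 6-byte field, which struct cannot unpack directly.
    record_length = int.from_bytes(data[8:14], "big")
    # Remaining 18 bytes: 2+2+1+1+2+2+2+2+1+1+2 (last 2 bytes are reserved).
    (device_id, level, fingers, units, scan_x, scan_y,
     img_x, img_y, depth, compression, _reserved) = struct.unpack(
        ">HHBBHHHHBBH", data[14:32])
    # The text requires image resolution not to exceed scan resolution.
    if img_x > scan_x or img_y > scan_y:
        raise ValueError("image resolution exceeds scan resolution")
    return FingerImageHeader(version, record_length, device_id, level,
                             fingers, units, scan_x, scan_y,
                             img_x, img_y, depth, compression)
```

The finger records that follow the header would be read in the same way, using the 4-byte data block length in each 14-byte finger header to find the next record.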

The raw finger format is used, for example, in databases containing standard fingerprints. Law enforcement agencies are typical users of the standard. The largest fingerprint image databases are maintained by the FBI in the United States and are encoded with a national counterpart of this standard.

Fingerprint Minutiae

While Part 4 of the 19794 series of standards is dedicated to raw biometric sample data, Part 2 refers to the format in which a minutiae-based feature vector or template has to be coded. ISO/IEC 19794-2, "Information technology - Biometric data interchange formats - Part 2: Finger minutiae data" [3], therefore deals with processed biometric data, ready to be sent to a comparison block to obtain a matching score.

Finger minutiae are local point patterns present in a fingerprint image. The comparison of these characteristic features is sufficient to positively identify a person. Sir Francis Galton first defined the features of a fingerprint [4].

In order to reach interoperability, this International

Standard defines not only the record format, but also

the rules for fingerprint minutiae extraction. Regarding

record formats, due to the application of fingerprint

biometrics to systems based on smart cards, compact

record formats are also defined to cope with memory

and transmission speed limitations of such devices.

Finger Data Interchange Format, Standardization. Table 1 Image acquisition levels for finger biometrics. Extract from Table 1 in [1]

| Setting level | Scan resolution (dpi) | Pixel depth (bits) | Gray levels |
|---|---|---|---|
| 10 | 125 | 1 | 2 |
| 20 | 250 | 3 | 5 |
| 30 | 500 | 8 | 80 |
| 31 | 500 | 8 | 200 |
| 35 | 750 | 8 | 100 |
| 40 | 1,000 | 8 | 120 |
| 41 | 1,000 | 8 | 200 |


Fingerprint scientists have defined more than 150

different types of minutiae [5]. Within this Standard,

minutiae types are simplified to the following: (1) ridge

ending, (2) ridge bifurcation, and (3) other. The location

of each minutiae is determined by its horizontal and

vertical position within the image. To determine such

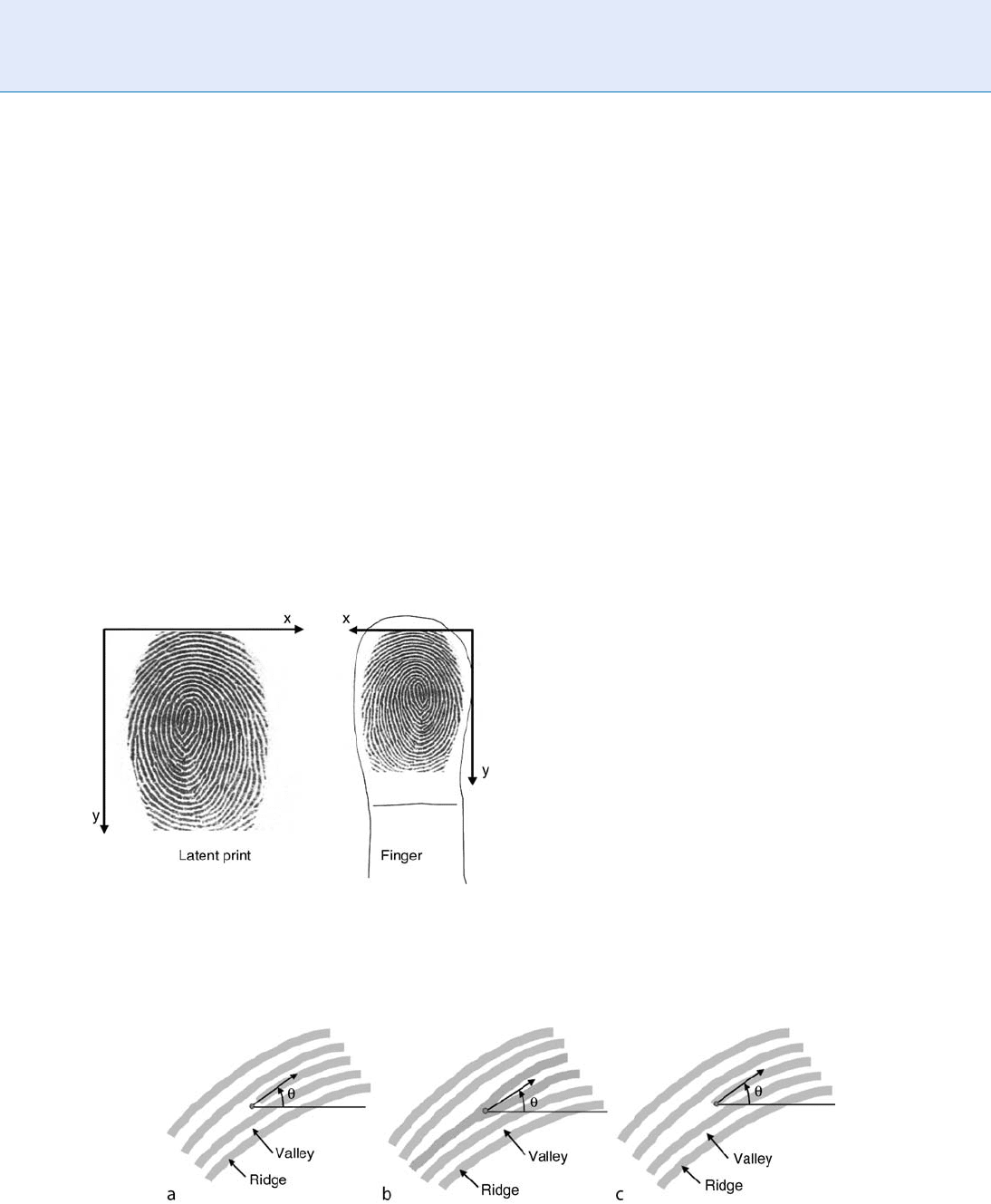

location a coor dinate system is to be defined. Figure 3

shows how such coordinate system is chosen. Granular-

ity to be taken to determine location is of one hun-

dredth of a millimetre for the normal format, while just

one tenth of a millimetre for card compact formats.

Figure 4 shows the different ways to consider the location of a minutia: (1) represents a ridge ending encoded as a valley skeleton bifurcation point; (2) shows how to locate a ridge bifurcation, encoded as a ridge skeleton bifurcation point; finally, (3) illustrates how to locate a ridge ending encoded as a ridge skeleton endpoint. How to determine the encoding of ridge endings actually used in a specific dataset is currently under revision in the standard. The other types of minutiae have to be coded consistently with the Standard (see details in [3]).

To define the minutia direction, its angle has to be determined. The Standard specifies that the angle is measured counter-clockwise, starting from the horizontal axis to the right of the minutia location. The angle is encoded in an unsigned single byte, so the granularity is 1.40625° per least significant bit (360/256). Figure 4 also illustrates how the angle is determined.
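As a small worked example of the single-byte angle coding, the Python sketch below quantizes an angle in degrees to 256 steps of 360/256 degrees each. Rounding to the nearest step is an assumption made for illustration, and the function names are hypothetical.

```python
def encode_angle(degrees: float) -> int:
    """Quantize an angle to one unsigned byte (360/256 degrees per step).

    Rounding to the nearest step is an assumption, not a rule from the text.
    """
    return round((degrees % 360.0) * 256 / 360.0) % 256


def decode_angle(code: int) -> float:
    """Return the angle in degrees represented by a one-byte code."""
    if not 0 <= code <= 255:
        raise ValueError("angle code must fit in one unsigned byte")
    return code * 360.0 / 256
```

With this coding, a code of 1 corresponds to 1.40625 degrees and a code of 64 to 90 degrees; angles just below 360 degrees wrap around to code 0.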

Additional information that may be included in

a minutiae-based record are cores, deltas, and ridge

crossings to neighboring minutiae.

With all these definitions, the two major format

types defined by this International Standard are:

(1) record format, and (2) card format. The structure

of the record format is summarized in the following

paragraphs and for additional details refer to the

standard [3].

A fixed-length (24-byte) record header containing information about the overall record, including the number of fingers represented and the overall record length in bytes:
– Format identifier (4 bytes with the hexadecimal value 0x464D5200) and version number (coded in another 4 bytes)
– Record length (in bytes) including all finger images within that record (coded in 4 bytes)
– Capture device ID (2 bytes)
– Size of the image in pixels (2 bytes for X dimension, and 2 bytes for Y dimension)
– Image resolution in pixels per centimetre (2 bytes for X and 2 bytes for Y)
– Number of finger views included in the record
– 1 byte reserved for future use

Finger Data Interchange Format, Standardization. Figure 4 Illustration of location of minutia. Image taken from [3].

Finger Data Interchange Format, Standardization.

Figure 3 Coordinate System for Minutiae Location. Image

taken from [3].


A single finger record for each finger/view, consisting of:
– A fixed-length (4-byte) header containing information about the data for a single finger, including the number of minutiae:
  – Finger position (1 byte)
  – View number (4 bits) and impression type (4 bits, to make 1 byte in total)
  – Finger quality (1 byte)
  – Number of minutiae (1 byte)
– A series of fixed-length (6-byte) minutia descriptions:
  – Minutia type (2 bits) and X location in pixels (14 bits)
  – 2 bits reserved and Y location in pixels (14 bits)
  – Minutia angle (1 byte)
  – Quality of minutia (1 byte)
– One or more "extended" data areas for each finger/view, containing optional or vendor-specific information. It always starts with 2 bytes that determine the length of the Extended Data Block. If this is 0x0000, no Extended Data is included. If it has a non-null value, it is followed by vendor-specific data, which could include information about ridge count, cores and deltas, or cell information.
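The 6-byte minutia description above packs a 2-bit type and a 14-bit coordinate into each of the first two 16-bit words. The following Python sketch shows one way to pack and unpack such a description; it assumes big-endian words with the type (or reserved) bits in the two most significant positions, and the helper names are hypothetical.

```python
import struct


def pack_minutia(mtype: int, x: int, y: int, angle: int, quality: int) -> bytes:
    """Pack one minutia into 6 bytes (bit placement is an assumption)."""
    if not (0 <= mtype < 4):
        raise ValueError("minutia type must fit in 2 bits")
    if not (0 <= x < (1 << 14) and 0 <= y < (1 << 14)):
        raise ValueError("coordinates must fit in 14 bits")
    if not (0 <= angle < 256 and 0 <= quality < 256):
        raise ValueError("angle and quality must each fit in 1 byte")
    # Word 0: type in the top 2 bits, X in the low 14 bits.
    # Word 1: top 2 bits reserved (zero), Y in the low 14 bits.
    return struct.pack(">HHBB", (mtype << 14) | x, y, angle, quality)


def unpack_minutia(buf: bytes) -> tuple:
    """Return (type, x, y, angle, quality) from a 6-byte description."""
    word0, word1, angle, quality = struct.unpack(">HHBB", buf)
    return word0 >> 14, word0 & 0x3FFF, word1 & 0x3FFF, angle, quality
```

Dropping the final quality byte from this layout gives the 5-byte form used by the card normal format.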

Regarding the card formats, the current version of the standard allows two sub-formats: (1) normal format (also referred to as 5-byte minutiae), and (2) compact format (also known as 3-byte minutiae). The way minutiae are coded in each format is described below.

Card normal format (like the record format, but removing quality information):
– Minutia type (2 bits) and X location in pixels (14 bits)
– 2 bits reserved and Y location in pixels (14 bits)
– Minutia angle (1 byte)

Card compact format:
– X coordinate (8 bits) considering a unit of 10^-1 mm
– Y coordinate (8 bits) considering a unit of 10^-1 mm
– Minutia type (2 bits) using the same coding as with the card normal format
– Angle (6 bits) having a granularity of 360/64
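To make the compact format concrete, the Python sketch below codes one minutia in 3 bytes: X and Y in tenths of a millimetre, then one byte holding the 2-bit type and the 6-bit angle. Placing the type in the two most significant bits of the third byte and rounding to the nearest step are assumptions for illustration; the function names are hypothetical.

```python
def pack_compact(x_mm: float, y_mm: float, mtype: int, angle_deg: float) -> bytes:
    """Pack a minutia into the 3-byte compact form (bit order is an assumption)."""
    x = round(x_mm * 10)  # unit of 0.1 mm
    y = round(y_mm * 10)
    if not (0 <= x <= 255 and 0 <= y <= 255):
        raise ValueError("coordinate does not fit in 8 bits (max 25.5 mm)")
    if not 0 <= mtype <= 3:
        raise ValueError("minutia type must fit in 2 bits")
    angle = round((angle_deg % 360.0) * 64 / 360.0) % 64  # 360/64 degrees per step
    return bytes([x, y, (mtype << 6) | angle])


def unpack_compact(buf: bytes) -> tuple:
    """Return (x_mm, y_mm, type, angle_deg) from a 3-byte compact minutia."""
    x, y, last = buf
    return x / 10, y / 10, last >> 6, (last & 0x3F) * 360.0 / 64
```

The 8-bit coordinates limit the addressable area to 25.5 mm in each direction, and the angle step grows to 5.625 degrees, which is the price paid for the smaller record.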

Another important aspect of card formats is that, because they are intended for devices with limited memory and processing power, the number of minutiae may be restricted, in which case truncation is needed. Additionally, in Match-on-Card systems, minutiae may need to be sorted in a certain way to reduce algorithm complexity. Finally, the way data is exchanged differs from the traditional CBEFF format. This International Standard covers all such cases. Readers should refer to the latest version of the Standard [3] for further details.

The minutia standard is used, e.g., by the ILO (International Labour Organization) in its seafarers' identity card and in several national ID card implementations, including those of Thailand and Spain [6].

Spectral Data of a Fingerprint

Part 2 of the 19794 series of standards deals with a format suitable for processing fingerprints when using morphological approaches. But as seen in other Fingerprint entries in this Encyclopedia, there are other approaches to biometric identification using fingerprints. Some of those approaches relate to the spectral information of the fingerprint. Algorithms using spectral data look at the global structure of a finger image rather than certain local point patterns. In such cases, 19794-2 is of no use and the only possibility would be to use the whole image as stated in 19794-4, which has the drawback of requiring the storage and/or transmission of a large amount of data. This could be inconvenient, if not prohibitive, for some applications.

To provide a new data format that could increase interoperability among spectral-based solutions while reducing the amount of data to be used, 19794-3 has been developed under the title "Information technology - Biometric data interchange formats - Part 3: Finger pattern spectral data" [7]. This International Standard deals with three major approaches in spectral-based biometrics (wavelet-based approaches are not supported by this standard):

1. Quantized co-sinusoidal triplets

2. Discrete Fourier transform

3. Gabor filters

After stating the basic requirements the original image must meet to be considered for these algorithms (same coordinate system as in 19794-2; 256 levels of grey, with 0 representing black and 255 white; and dark pixels corresponding to ridges while light pixels correspond to valleys), and describing all the above-mentioned technologies, the Standard focuses on the record structure (for details refer to [7]), which is:

A variable-length record header containing information about the overall record, including:
– Format identifier (4 bytes with the hexadecimal value 0x46535000) and version number (coded in another 4 bytes)
– Record length (in bytes) including all fingers within that record (coded in 4 bytes)
– Number of finger records included (1 byte)
– Image resolution in pixels per centimetre (2 bytes for X direction and 2 bytes for Y direction)
– Number of cells (2 bytes for X direction and 2 bytes for Y direction)
– Number of pixels in cells (2 bytes for X direction and 2 bytes for Y direction)
– Number of pixels between cell centres (2 bytes for X direction and 2 bytes for Y direction)
– SCSM (spectral component selection method, 1 byte), which can be 0, 1, or 2. Depending on the value of this field, the following fields could refer to type of window, standard deviation, number of frequencies, frequencies, number of orientations and spectral components per cell, and bit-depths (propagation angle, wavelength, phase, and/or magnitude)
– Bit-depth of quality score (1 byte)
– Cell quality group granularity (1 byte)
– 2 bytes reserved for future use

A single finger record for each finger, consisting of:
– A fixed-length (6-byte) header containing information about the data for a single finger:
  – Finger location (1 byte)
  – Impression type (1 byte)
  – Number of views in single finger record (1 byte)
  – Finger pattern quality (1 byte)
  – Length of finger pattern spectral data block (2 bytes)
– A finger pattern spectral data block:
  – View number (1 byte)
  – Finger pattern spectral data
  – Cell quality data
– An extended data block containing vendor-specific data, composed of block length (2 bytes), area type code (2 bytes), area length, and area.

As in 19794-2, this International Standard also defines the Data Objects to be included for a card format, with the recommended reduction in granularity (for further details see [7]).

Some of the leading fingerprint verification algorithms rely on spectral data or a combination of spectral data and minutiae. This standard could enhance the interoperability and performance of large-scale identification systems such as criminal or civil Automatic Fingerprint Identification Systems (AFIS).

Skeletal Data of a Fingerprint

Finally, 19794-8, titled "Information technology - Biometric data interchange formats - Part 8: Finger pattern skeletal data" [8], deals with the format for representing fingerprint images by a skeleton, with ridges represented by a sequence of lines. Skeletonization is a standard procedure in image processing and generates a single-pixel-wide skeleton of a binary image. Moreover, the start and endpoints of the skeleton ridge lines are included as real or virtual minutiae, and the line from start to endpoint is encoded by successive direction changes.

For minutiae location and coding, much of the 19794-2 card format is used, but here the position of a ridge bifurcation minutia shall be defined as the point of forking of the skeleton of the ridge. In other words, the point where three or more ridges intersect is the location of the minutia. No valley representation is accepted under this International Standard. Another difference from the 19794-2 card formats is that this Standard considers no other-type minutiae (a minutia with more than three arms, like a trifurcation, is considered a bifurcation), and that codes for "virtual minutiae" are used throughout this standard.

Skeleton lines are coded as polygons. Every line starts with a minutia, followed by a chain of direction changes (coded with the granularity stated in the record header), until it reaches the final minutia. Several rules are defined in the standard (see [8] for further reference).
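The idea of a line coded as a start point plus a chain of direction changes can be illustrated with the short Python sketch below. It is not the bit-level coding of the standard: it simply assumes that each code changes the heading by a whole number of angular steps (180 degrees divided by the number of directions) and then advances by a fixed step size. All names and parameters are hypothetical.

```python
import math


def decode_skeleton_line(start, start_dir, changes, step, dirs_per_180):
    """Rebuild polygon vertices from a chain of direction changes.

    start        -- (x, y) of the starting minutia
    start_dir    -- initial heading, in angular steps
    changes      -- signed direction changes, in angular steps
    step         -- distance advanced after each change
    dirs_per_180 -- number of angular steps in 180 degrees
    All parameter semantics are illustrative assumptions.
    """
    unit = math.pi / dirs_per_180  # radians per angular step
    x, y = start
    heading = start_dir * unit
    points = [(x, y)]
    for change in changes:
        heading += change * unit
        x += step * math.cos(heading)
        y += step * math.sin(heading)
        points.append((x, y))
    return points
```

For example, with 8 directions per 180 degrees, a change of 0 continues straight ahead and a change of 4 turns the line by 90 degrees before the next step.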

All that information is coded in a record with the following structure (limiting values as well as recommended values can be found in [8]):

A fixed-length (24-byte) record header containing:
– Format identifier (4 bytes with the hexadecimal value 0x46534B00) and version number (coded in another 4 bytes)
– Record length (in bytes) including all finger images within that record (coded in 4 bytes)
– Capture device ID (2 bytes)
– Number of finger views in record (1 byte)
– Resolution of finger pattern in pixels per centimetre (1 byte)
– Bit depth of direction code start and stop point coordinates (1 byte)
– Bit depth of direction code start and stop direction (1 byte)
– Bit depth of direction in direction code (1 byte)
– Step size of direction code (1 byte)
– Relative perpendicular step size (1 byte)
– Number of directions on 180° (1 byte)
– 2 bytes reserved for future use

A single finger record for each finger/view, consisting of:
– A fixed-length (10-byte) header:
  – View number (1 byte)
  – Finger position (1 byte)
  – Impression type (1 byte)
  – Finger quality (1 byte)
  – Skeleton image size in pixels (2 bytes for X-direction, 2 bytes for Y-direction)
  – Length of finger pattern skeletal data block (2 bytes)
– The variable-length fingerprint pattern skeletal description:
  – Length of finger pattern skeletal data (2 bytes)
  – Finger pattern skeletal data
  – Length of skeleton line neighbourhood index data (2 bytes)
  – Skeleton line neighbourhood index data
– An extended data block containing the extended data block length and zero or more extended data areas for each finger/view, defining length (2 bytes), area type code (2 bytes), area length (2 bytes), and data.
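Each of the four record types described in this entry starts with its own 4-byte format identifier, so the first four bytes of a record are enough to tell the parts apart. The Python sketch below collects the four values quoted in the text; reading the identifier as a big-endian integer is an assumption, and the function names are hypothetical.

```python
FORMAT_IDS = {
    0x46495200: "Part 4: finger image data",
    0x464D5200: "Part 2: finger minutiae data",
    0x46535000: "Part 3: finger pattern spectral data",
    0x46534B00: "Part 8: finger pattern skeletal data",
}


def identify_record(record: bytes) -> str:
    """Name the 19794 part a record belongs to from its first 4 bytes."""
    if len(record) < 4:
        raise ValueError("record too short to hold a format identifier")
    magic = int.from_bytes(record[:4], "big")  # byte order is an assumption
    try:
        return FORMAT_IDS[magic]
    except KeyError:
        raise ValueError(f"unknown format identifier 0x{magic:08X}") from None


def identifier_as_text(magic: int) -> str:
    """Show the identifier bytes as ASCII, dropping the trailing null byte."""
    return magic.to_bytes(4, "big").rstrip(b"\x00").decode("ascii")
```

Read as ASCII, the four identifiers are the three-letter tags FIR, FMR, FSP, and FSK, each followed by a null byte.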

This International Standard also defines two card formats, a normal one and a compact one. As with other parts, this means tighter constraints so that data is coded more compactly, together with the definition of the Data Objects needed (for details refer to [8]).

The skeleton format is used in scientific research [9] and by vendors implementing Match-on-Card.

Further Steps

The fingerprint parts of ISO 19794 were published as International Standards in 2005 and 2006. All the parts are currently under revision. A major task in the revision process is to address some defects and include a common header format for all the parts. Some references and vocabulary need to be updated to harmonize the relation of these standards within the ISO standardization landscape. The finger minutia standard ISO 19794-2 is probably the most prominent format in this series and is most frequently used by industry, government, and science. Interoperability tests have shown that the current standard allows some room for interpretation. This will be addressed by an amendment describing the location, orientation, and type in more detail. Another aim of the current revision is to reduce the number of format types from the current ten to a maximum of two. Experts from all continents and various backgrounds meet on a regular basis to lay down the future of the standards. The delegates take care of current requirements in terms of technology and applications.

Summary

To provide interoperability in storing and transmitting finger-related biometric information, four standards have already been developed to define the formats needed for raw images, minutia-based feature vectors, spectral information, and skeletal representation of a fingerprint. Beyond that, other standards deal with conformance and quality control, as well as interfaces or performance evaluation and reporting (see related entries below for further information).

Related Entries

▶ Biometric Data Interchange Format
▶ Common Biometric Exchange Framework Formats
▶ Conformance Testing for Biometric Data Interchange Formats, Standardization of
▶ Fingerprint Recognition
▶ International Standardization of Biometrics


References

1. ISO/IEC: 19794-4:2005 - information technology - biometric data interchange formats - part 4: Finger image data (2005)
2. ISO/IEC: 19785-1:2005 - information technology - common biometric exchange formats framework - part 1: Data element specification (2005)
3. ISO/IEC: 19794-2:2005 - information technology - biometric data interchange formats - part 2: Finger minutiae data (2005)
4. Galton, F.: Finger Prints. Macmillan, London (1892) (Reprint: Da Capo, New York, 1965)
5. Moenssens, A.: Fingerprint Techniques. Chilton Book Company, London (1971)
6. Spanish-Homeland-Ministry: Spanish national electronic identity card information portal (in Spanish). http://www.dnielectronico.es/ (2007)
7. ISO/IEC: 19794-3:2006 - information technology - biometric data interchange formats - part 3: Finger pattern spectral data (2006)
8. ISO/IEC: 19794-8:2006 - information technology - biometric data interchange formats - part 8: Finger pattern skeletal data (2006)
9. Robert Mueller, U.M.: Decision level fusion in standardized fingerprint match-on-card. In: 1-4244-0342-1/06, ICARCV 2006, Hanoi, Vietnam (2006)

Finger Geometry, 3D

SOTIRIS MALASSIOTIS
Informatics and Telematics Institute, Center for Research and Technology Hellas, Thessaloniki, Greece

Synonym

3D hand biometrics

Definition

Biometrics based on 3D finger geometry exploit discriminatory information provided by the 3D structure of the hand, and more specifically the fingers, as captured by a 3D sensor. The advantages of current 3D finger biometrics over traditional 2D hand geometry authentication techniques are improved accuracy, the ability to work in contact-free mode, and the ability to be combined with 3D face recognition using the same sensor.

Introduction

The motivation behind 3D finger geometry biometrics is the same as for 3D face recognition. Compared with an image captured with a plain 2D camera, the 3D geometry of the hand as captured by a 3D sensor offers additional discriminatory information while being invariant to variations such as illumination or skin pigment. The current accuracy and resolution of 3D sensors are not adequate for capturing fine details on the surface of the fingers, such as skin wrinkles over the knuckles, but are sufficient to measure local curvature, finger circumference, or finger length.

Another motivation comes from a limitation of current hand geometry recognition systems, namely obtrusiveness. The user is required to put his/her hand on a special platter with knobs or pegs that constrain the placement of the hand on the platter. This step greatly facilitates feature extraction by guaranteeing a uniform background and hand posture, and thus very good performance. However, some users would find touching the platter unhygienic, while others would have difficulty placing their hands correctly (for example, children or older people with arthritis). Since 3D data can facilitate the detection of the hand and fingers, even in a cluttered scene, the above constraint may be lifted and the biometric system becomes more user friendly.

Since the placement of the hand is not constrained, one may then combine 3D finger geometry with 3D face recognition using the same 3D sensor. The user places his/her hand either at the side of the face or in front of the face. In the first case, face and hand biometric features are extracted in parallel, while in the second case they are extracted sequentially and the scores obtained are finally combined. This combination has demonstrated very high accuracy even under difficult conditions.

State-of-the-Art

3D hand geometry biometrics is a very recent research

topic and therefore, only a few results are currently

available.

The first to investigate 3D geometry of the fingers

as a biometric modality were Woodard and Flynn [1].


They used a 3D laser scanner to capture range images

and associated color images of the back of the hand. The

users were instructed to place their palm flat against a

wall with uniform color and remove any rings. For each

subject out of 132, four images were captured in two

recording sessions one week apart. An additional session

was also performed a few months later with 86 of the

original subjects and 89 new subjects.

The authors used the color images to segment the hand from the background, using a combination of skin-color detection and edge detection. The resulting hand segmentation is used to extract the hand silhouette, from which the boundaries of the index, middle, and ring fingers are detected. Then, for each detected finger, a mask is constructed and an associated normalized (with respect to pose) range image is created.

For each valid pixel of the finger mask in the output image, a ▶ surface curvature estimate is computed with the corresponding range data. The principal curvatures are estimated first by locally fitting a bicubic Monge patch on the range data to deal with the noise in the data. However, the number of pixels in the neighborhood of each point that are used to fit the patch has to be carefully selected, otherwise fine detail on the surface may be lost. The principal curvatures are subsequently used to compute a shape index, which is a single measure of curvature.
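The text does not give the formula for the shape index. One widely used definition maps the two principal curvatures to a value in [0, 1], and the Python sketch below uses that definition as an assumption; the sign convention and the treatment of planar points may differ from what the cited authors used.

```python
import math


def shape_index(k1: float, k2: float):
    """Shape index in [0, 1] from two principal curvatures.

    Uses S = 1/2 - (1/pi) * atan((k_max + k_min) / (k_max - k_min)),
    an assumed definition that is common in the range-image literature.
    Returns None for planar points, where the index is undefined.
    """
    k_max, k_min = max(k1, k2), min(k1, k2)
    if k_max == 0.0 and k_min == 0.0:
        return None
    # atan2 handles the umbilic case k_max == k_min without dividing by zero.
    return 0.5 - math.atan2(k_max + k_min, k_max - k_min) / math.pi
```

Under this definition, a symmetric saddle (equal and opposite curvatures) has index 0.5, and the two umbilic extremes have indices 0 and 1.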

The similarity between two finger surfaces may be

computed by estimating the normalized correlation

coefficient among the associated shape index images.

The average of the similarity scores obtained by the

three fingers demonstrated the best results when used

for classification.
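The matching step described above can be sketched in a few lines of Python. The sketch assumes each finger's shape index image has already been flattened to a list of values over the same valid mask pixels in probe and gallery; the function names are hypothetical, and the score for a hand is the plain average over the fingers.

```python
import math


def normalized_correlation(a, b):
    """Normalized correlation coefficient between two equal-length sequences."""
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(sum((x - mean_a) ** 2 for x in a)
                    * sum((y - mean_b) ** 2 for y in b))
    # A constant image has zero variance; treat it as uncorrelated.
    return num / den if den else 0.0


def hand_similarity(probe_fingers, gallery_fingers):
    """Average the per-finger correlation scores (e.g. index, middle, ring)."""
    if len(probe_fingers) != len(gallery_fingers) or not probe_fingers:
        raise ValueError("need the same non-zero number of fingers")
    scores = [normalized_correlation(p, g)
              for p, g in zip(probe_fingers, gallery_fingers)]
    return sum(scores) / len(scores)
```

A score close to 1 indicates that the surfaces have very similar curvature patterns, while scores near 0 indicate unrelated surfaces.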

Recognition experiments demonstrated 95% accuracy, falling to 85% when probe and gallery images are recorded more than one week apart. This performance was similar to that reported by a 2D face recognition experiment. The authors managed to cope with this decline in performance due to time lapse by matching multiple probe images with multiple gallery images of the same subject. Similarly, the equal error rate obtained in verification experiments is about 9% when a single probe image is matched against a single gallery image and falls to 5.5% when multiple probe and gallery images are matched.

The above results validated the assumption that 3D finger geometry offers discriminatory information and may provide an alternative to 2D hand geometry recognition. However, it remains unclear how such an approach will fare against a 2D hand geometry based system, given the high cost of 3D sensors.

The main advantage of a biometric system based on 3D finger geometry is its ability to work in an unobtrusive (contact-free) manner. Malassiotis et al. [2] propose a biometric authentication scenario where the user freely places his hand in front of his face with the back of the hand visible from the 3D sensor. Although the palm should be open with the fingers extended, small finger bending and moderate rotation of the hand plane with respect to the camera are allowed, as is the wearing of rings.

The acquisition of range images and quasi-

synchronous color images are achieved using a real-

time 3D sensor, which is based on the structured light

approach. Thus, data are more noisy and contain more

artifacts compared with those obtained with high-end

laser scanners. Using this setup, the authors acquired

several images of 73 subjects in two recording sessions.

For each subject, images depicting several variations in

the geometry of the hand were captured. These includ-

ed, bending of the fingers, rotation of the hand, and

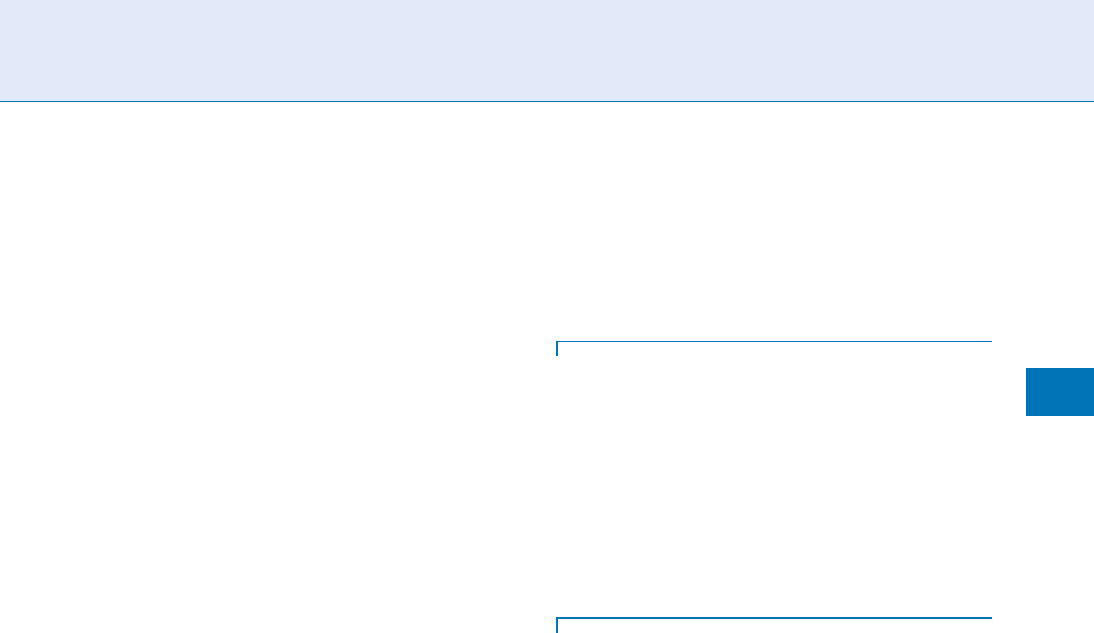

presence or absence of rings (see fig. 1).

The proposed algorithm starts by segmenting the hand from the face and torso using thresholding, and subsequently from the arm using an iterative clustering technique. Then, the approximate center of the palm and the orientation of the hand are detected from the hand segmentation mask. These are used to locate the fingers. Homocentric circular arcs are drawn around the center of the palm with increasing radius, excluding the lower part of the circle that corresponds to the wrist. Intersection of these arcs with the hand mask gives rise to candidate finger segments, which are then clustered to form finger bounding polygons. This approach avoids using the hand silhouette, which is usually noisy and may contain discontinuities, e.g., in the presence of rings. The initial polygon delineating each finger is refined by exploiting the associated color image edges.

Then, for each finger, two signature functions are defined, parameterized by the 3D distance from the fingertip computed along the ridge of each finger and measuring cross-sectional features. Computing features along cross-sections offers quasi-invariance to bending. The first function corresponds to the width of the finger in 3D, while the second corresponds to the mean curvature of the curve defined by the 3D points of the cross-section at the specific point. Twelve samples are uniformly computed from each signature function and each finger, giving rise to 96 measurements (12 samples × 2 functions × 4 fingers, the thumb being excluded) that are used for classification. Matching between the hand geometry of probe and gallery images is estimated as the L1 distance between the associated measurement vectors.
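The size of the measurement vector and the matching rule can be checked with a short Python sketch. The constants follow the numbers in the text; the function name and the plain, unweighted sum are assumptions made for illustration.

```python
SAMPLES_PER_SIGNATURE = 12   # samples taken from each signature function
SIGNATURES_PER_FINGER = 2    # width and mean curvature
FINGERS = 4                  # thumb excluded
VECTOR_LENGTH = SAMPLES_PER_SIGNATURE * SIGNATURES_PER_FINGER * FINGERS


def l1_distance(probe, gallery):
    """Sum of absolute differences between two measurement vectors."""
    if len(probe) != len(gallery):
        raise ValueError("measurement vectors must have the same length")
    return sum(abs(p - g) for p, g in zip(probe, gallery))
```

A smaller distance means a closer match, so identification would pick the gallery entry with the smallest L1 distance to the probe.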

Experimental results are similar to those reported in [1]. Rank-1 identification rates range from 86 to 98%, depending on whether a single or multiple probe images of the same subject are used, respectively. Corresponding equal error rates are 5.8 and 3.5%. The benefit of the approach in [2] is that the algorithm can withstand moderate variations in hand geometry, thus allowing for contact-free operation.

Malassiotis et al. [2] conclude that, given the current

results, biometric systems that exploit 3D hand

geometry would be more suitable for low-security applications

such as personalization of services and attendance

control, where user-friendliness is prioritized

over accuracy. However, there is another possible ap-

plication in systems combining several biometrics. In

particular, the combination of the 3D face modality with

3D finger geometry was shown to offer high

accuracy while remaining relatively unobtrusive.

Woodard et al. [3] compared the recognition per-

formance of 3D face, 3D ear, and 3D finger surface as

well as their combination. The original 93% obtained

using 3D face geometry was improved to 97% when

this was combined with the other two modalities.

Tsalakanidou et al. [4] also combined 2D+3D face

recognition with 3D finger geometry recognition, in

the presence of several variations in shape and appearance

of the face and hand. According to their application

scenario, the 3D sensor first captures images of the

user’s face; the user is then asked to place a hand

in front of the face, and another set of images is acquired.

The scores obtained using facial and hand features,

respectively, are normalized and fused to provide a single

score on which identification/verification is based. An

equal error rate of 0.82% and a rank-1 identification

rate of 100% were reported for a test set

comprising 17,285 pairs of face and hand images of

50 subjects depicting significant variations.
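The text does not state which normalization or fusion rule was used, so the following is only a generic sketch of score-level fusion. Min-max normalization, the weighted-sum rule, the equal weights, and the example scores are all assumptions chosen for illustration and should not be read as the method of [4].

```python
import numpy as np

def min_max_normalize(scores, lo, hi):
    """Map raw scores to [0, 1] using bounds (lo, hi) estimated beforehand, e.g. on training data."""
    scores = np.asarray(scores, dtype=float)
    return np.clip((scores - lo) / (hi - lo), 0.0, 1.0)

def fuse(face_scores, hand_scores, face_bounds, hand_bounds, w_face=0.5):
    """Weighted sum of normalized similarity scores (higher means more similar)."""
    f = min_max_normalize(face_scores, *face_bounds)
    h = min_max_normalize(hand_scores, *hand_bounds)
    return w_face * f + (1.0 - w_face) * h

# Hypothetical similarity scores of one probe against three gallery identities.
face_scores = [0.2, 0.9, 0.4]     # face matcher, assumed range 0..1
hand_scores = [30.0, 80.0, 75.0]  # hand matcher, assumed range 0..100
fused = fuse(face_scores, hand_scores, face_bounds=(0.0, 1.0), hand_bounds=(0.0, 100.0))

# Identification picks the gallery identity with the highest fused score;
# verification would instead compare the claimed identity's fused score with a threshold.
best_identity = int(np.argmax(fused))
```

Normalizing first matters because the two matchers produce scores on different scales; without it, the matcher with the larger numeric range would dominate the sum.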

The above results validate our original claim that

3D face geometry + 3D finger geometry may provide

both high accuracy and user acceptance while sharing

the same sensor for data acquisition.

Challenges and Prospects

Biometric authentication/identification using 3D finger

geometry is a very recent addition to the compendium

of 3D biometrics. Although the potential of this

technique has already been demonstrated, several research

challenges have to be addressed before commercial

applications using this modality emerge.

Finger Geometry, 3D. Figure 1 (a) Color and (b) range

image in the hand geometry acquisition setup of [2].

Performance of techniques based on 3D finger geometry

depends much more on the quality of range data

than is the case for 3D face recognition. Although some of the fine

detail on the finger surface may be captured using high-

end (and therefore very expensive) 3D scanners, this is

not the case with low-cost systems. Such detail (e.g., the

wrinkles of the skin) may be alternatively detected if

associated brightness images are used. In this case, 3D

information may be used to facilitate the localization of

the finger and knuckles, and 2D images may be subsequently

used to extract the skin folding patterns. Also,

neither of the two studies in the literature uses the thumb,

which, however, seems to exhibit larger variability

from subject to subject than the rest of the fingers.

Further research is also needed to address the problem

of the variability in the shape and appearance

depicted in the hand images. Future techniques

should be able to deal with significant finger bending,

partial finger occlusion, and rotation of the hand with

respect to the camera, and also be generic enough to

cope with different hand sizes and fingers deformed

by accident or aging.

In summary, 3D finger biometrics retain the benefits

of traditional 2D hand geometry biometrics, especially

with respect to privacy preservation, while

demonstrating similar or better performance. In addi-

tion, 3D finger biometrics may be applied with less

strict constraints on the placement of the hand and the

environment, which makes them suitable for a larger

range of low to medium security applications.

Since correlation of finger geometry features with

other discriminative features of the human body is

known to be very low, 3D finger geometry may be

efficiently combined with other biometrics in a multimodal

system. In this case, this technology may be

applied to high security scenarios.

Related Entries

▶ 3D-Based Face Recognition

▶ Hand Geometry

References

1. Woodard, D.L., Flynn, P.J.: Finger surface as a biometric identifier.

Comput. Vision Image Understand. 100, 357–384 (2005)

2. Malassiotis, S., Aifanti, N., Strintzis, M.G.: Personal Authentica-

tion Using 3-D Finger Geometry. IEEE Trans. Inform. Forens.

Secur. 1(1), 12–21 (2006)

3. Woodard, D.L., Faltemier, T.C., Yan, P., Flynn, P.J., Bowyer, K.W.:

A Comparison of 3D Biometric Modalities. In: Proc. Comput.

Vision Pattern Recogn. Workshop, pp. 57–60 (2006)

4. Tsalakanidou, F., Malassiotis, S., Strintzis, M.G.: A 3D Face and

Hand Biometric System for Robust User-Friendly Authentica-

tion. Pattern Recogn. Lett. 28(16), 2238–2249 (2007)

Finger Pattern Spectral Data

Set of spectral components derived from a fingerprint

image that may be processed (e.g., by cropping and/or

down-sampling).

▶ Finger Data Interchange Format, Standardization

Finger Vein

HISAO OGATA, MITSUTOSHI HIMAGA

Hitachi-Omron Terminal Solutions, Corp.

Owari-asahi City, Aichi, Japan

Definition

Finger veins are hidden under the skin where red blood

cells are flowing. In biometrics, the term vein does not

entirely correspond to the terminology of medical sci-

ence. Its network patterns are used for authenticating

the identity of a person, in which the approximately

0.3–1.0 mm thick vein is visible by

▶ near infrared

rays. In this definition, the term finger includes not

only index, middle, ring, and little fingers, but also the

thumb.

Introduction

Blood vessels are not exposed, and their network patterns

are normally impossible to see within the

range of visible light wavelengths. The veins that constitute

the network patterns, approximately 0.3–1.0 mm thick,

are visualized by near infrared rays. Figure 1

shows a visualized finger vein pattern image. It is well

known that hemoglobin absorbs near infrared rays

more than other substances that comprise the human
