Li S.Z., Jain A.K. (eds.) Encyclopedia of Biometrics


training sample, and others form the testing set. The respective recognition rates based on the different databases are presented in Table 1. From the experimental results, it can be seen that:

1. When the testing image set includes images under varying illumination, HE can be used to improve the recognition performance compared with using no preprocessing procedure. However, the improvement is very slight, or even absent, in some cases; for the Yale database, for example, the recognition rate is unchanged (Table 1).

Illumination Compensation. Figure 4 Some experimental results based on the Yale database. (a) original images, (b) images processed by the HE, (c) images processed by the BHE, (d) images processed by the introduced algorithm.

Illumination Compensation. Figure 5 Some experimental results based on the AR database. (a) original images, (b) images processed by the HE, (c) images processed by the BHE, (d) images processed by the introduced algorithm.

2. Both BHE and the introduced algorithm improve the recognition rates significantly. BHE yields an improvement of 29.9 to 45.4 percentage points, and the introduced algorithm yields an improvement of 53.3 to 62.6 percentage points. In other words, both methods are useful for eliminating the effect of uneven illumination on face recognition. In addition, the introduced algorithm achieves the best performance of all the methods used in the experiment.

3. The BHE method is very simple and does not require any prior knowledge. Its computational burden is far lower than that of the traditional local contrast enhancement methods [5]. The main reason is that all the pixels within a block are equalized at once, whereas the adaptive block enhancement method equalizes only a single pixel at a time. Nevertheless, as with the traditional local contrast enhancement methods, noise is amplified by this process.

4. If the images processed by HE are used to estimate the illumination category, the recognition rates on the different databases are lower than when the illumination map is used. This is because the variations between images are caused not only by illumination but also by other factors, such as age, gender, race, and makeup. The illumination map removes the distinctive personal information as far as possible while keeping the illumination information unchanged. Therefore, the illumination category can be estimated more accurately, and a more suitable illumination model is selected.
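Item 3 describes block-wise equalization. It can be sketched in Python as shown here. This is a minimal illustration under stated assumptions, not the BHE of the original algorithm. It assumes 8-bit grayscale images, non-overlapping square blocks, and plain histogram equalization inside each block. The block size of 16 and the function names `equalize` and `block_equalize` are hypothetical. Each block is remapped with a single lookup table, so the cost is one histogram per block rather than one per pixel.

```python
import numpy as np

def equalize(patch: np.ndarray, levels: int = 256) -> np.ndarray:
    """Histogram-equalize an 8-bit array using its normalized cumulative histogram."""
    hist = np.bincount(patch.ravel(), minlength=levels)
    cdf = np.cumsum(hist) / patch.size                   # normalized cumulative histogram in (0, 1]
    lut = np.round(cdf * (levels - 1)).astype(np.uint8)  # one lookup table for the whole patch
    return lut[patch]

def block_equalize(img: np.ndarray, block: int = 16) -> np.ndarray:
    """Hypothetical BHE sketch: equalize each non-overlapping block x block tile independently."""
    out = np.empty_like(img)
    rows, cols = img.shape
    for r in range(0, rows, block):
        for c in range(0, cols, block):
            tile = img[r:r + block, c:c + block]
            out[r:r + block, c:c + block] = equalize(tile)  # all pixels of the tile at once
    return out
```

The test image has two halves that differ only by a constant brightness offset. Block-wise equalization maps both halves to the same output, whereas a single global equalization does not. Practical implementations often overlap the blocks and blend the results to avoid visible seams between blocks; this sketch omits that step.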

The facial images reconstructed with the introduced algorithm look very natural. They show a large visual improvement and smooth lighting. The effect of uneven lighting, including shadows, is almost eliminated. However, glasses and mustaches are not Lambertian surfaces. If an image contains them, some side effects may appear under certain light source models. For instance, the glasses may disappear or the mustache may become faint.

Summary

This entry has discussed a model-based method that can be used for illumination compensation in face recognition. For a query image, the illumination category is estimated first, followed by shape normalization. The corresponding lighting model is then used to compensate for uneven illumination. Next, the reconstructed texture is mapped backwards from the reference shape to the original shape, which builds an image under normal illumination. This lighting compensation approach is useful for face recognition when faces are under varying illumination, and it can also be used for face reconstruction. More importantly, images of the query subject are not required for training. The introduced algorithm adopts a 2D face shape model to handle the different geometries, or shapes, of human faces. This makes a more reliable and exact reconstruction of the face possible: the reconstructed face is under normal illumination and looks more natural. Experimental results showed that preprocessing the faces with the lighting compensation algorithm greatly improves the recognition rate.

Related Entries

▶ Face Recognition, Overview

References

1. Adini, Y., Moses, Y., Ullman, S.: Face recognition: the problem of compensating for changes in illumination direction. IEEE T. Pattern Anal. 19(7), 721–732 (1997)

2. Turk, M., Pentland, A.: Eigenfaces for recognition. J. Cognitive Neurosci. 3, 71–86 (1991)

3. Bartlett, M.S., Movellan, J.R., Sejnowski, T.J.: Face recognition by independent component analysis. IEEE T. Neural Networ. 13(6), 1450–1464 (2002)

4. Yale University [Online]. Available at: http://cvc.yale.edu/projects/yalefacesB/yalefacesB.html

5. Pizer, S.M., Amburn, E.P.: Adaptive histogram equalization and its variations. Comput. Vision Graph. 39, 355–368 (1987)

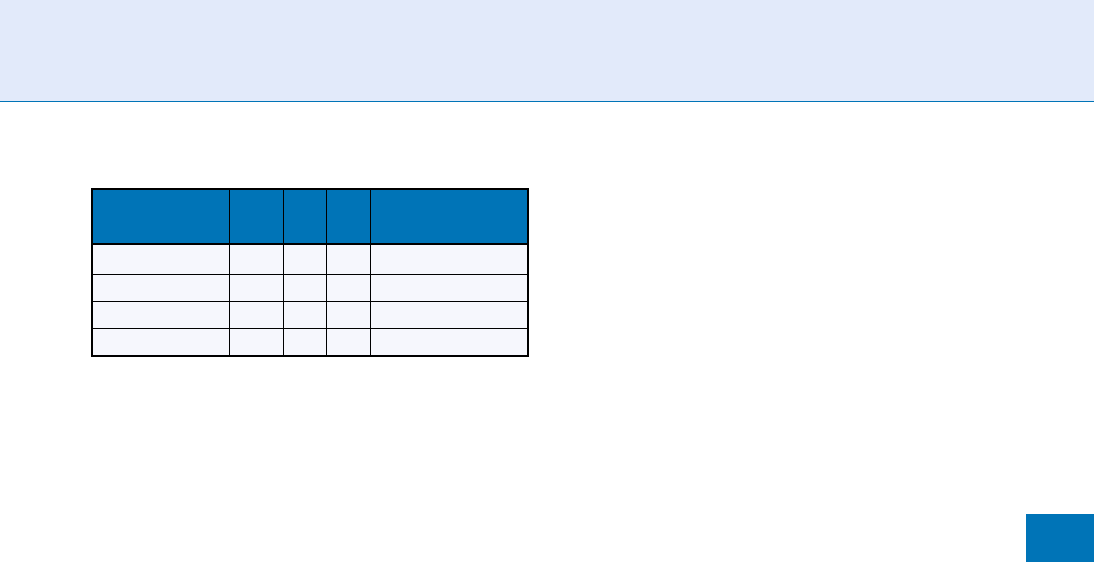

Illumination Compensation. Table 1 Face recognition results using different preprocessing methods

| Recognition rate (%) | None | HE | BHE | The introduced method |
|---|---|---|---|---|
| YaleB | 43.4 | 61.4 | 77.5 | 99.5 |
| Yale | 36.7 | 36.7 | 80.0 | 90.0 |
| AR | 25.9 | 37.7 | 71.3 | 81.8 |
| Combined | 30.1 | 32.2 | 60.0 | 92.7 |
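The improvements quoted in item 2 can be recomputed from Table 1 as a quick check. Each is the difference, in percentage points, between a method's rate and the "None" column. The short Python sketch shown here copies the table values; the dictionary layout and the `gains` helper are illustrative only.

```python
# Table 1 recognition rates (%), copied from the table.
rates = {
    "YaleB":    {"None": 43.4, "HE": 61.4, "BHE": 77.5, "Introduced": 99.5},
    "Yale":     {"None": 36.7, "HE": 36.7, "BHE": 80.0, "Introduced": 90.0},
    "AR":       {"None": 25.9, "HE": 37.7, "BHE": 71.3, "Introduced": 81.8},
    "Combined": {"None": 30.1, "HE": 32.2, "BHE": 60.0, "Introduced": 92.7},
}

def gains(method):
    """Improvement over no preprocessing, in percentage points, rounded to one decimal."""
    return {db: round(r[method] - r["None"], 1) for db, r in rates.items()}

for m in ("HE", "BHE", "Introduced"):
    g = gains(m)
    print(f"{m:>10}: min {min(g.values()):5.1f}  max {max(g.values()):5.1f}  {g}")
```

The output reproduces the ranges in item 2: 29.9 to 45.4 for BHE and 53.3 to 62.6 for the introduced method. It also shows that HE leaves the Yale rate unchanged.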


6. Zhu, J., Liu, B., Schwartz, S.C.: General illumination correction and its application to face normalization. In: Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing, vol. 3, pp. 133–136, Hong Kong, China (2003)

7. Xie, X., Lam, K.M.: Face recognition under varying illumination based on a 2D face shape model. Pattern Recogn. 38(2), 221–230 (2005)

8. Xie, X., Lam, K.M.: An efficient illumination normalization method for face recognition. Pattern Recogn. Lett. 27(6), 609–617 (2006)

9. Zhao, J., Su, Y., Wang, D., Luo, S.: Illumination ratio image: synthesizing and recognition with varying illuminations. Pattern Recogn. Lett. 24(15), 2703–2710 (2003)

10. Liu, C., Wechsler, H.: A shape- and texture-based enhanced fisher classifier for face recognition. IEEE T. Image Process. 10(4), 598–608 (2001)

11. Goshtasby, A.: Piecewise cubic mapping functions for image registration. Pattern Recogn. 20(5), 525–533 (1987)

12. Belhumeur, P.N., Hespanha, J.P., Kriegman, D.J.: Eigenfaces vs. Fisherfaces: recognition using class specific linear projection. IEEE T. Pattern Anal. 19(7), 711–720 (1997)

13. Yale University [Online]. Available at: http://cvc.yale.edu/projects/yalefaces/yalefaces.html

14. Martinez, A.M., Benavente, R.: The AR face database, CVC technical report #24 (1998)

Illumination Normalization

▶ Illumination Compensation

Image Acquisition

▶ Image Formation

Image Capture

▶ Biometric Sample Acquisition

Image Classification

▶ Image Pattern Recognition

Image Enhancement

The use of computer algorithms to improve the quality of an image by giving it higher contrast or making it less blurred or less noisy.

▶ Fingerprint Recognition, Overview

▶ Skull, Forensic Evidence of

Image Formation

XIAOMING PENG

College of Automation, University of Electronic Science and Technology of China, Chengdu, Sichuan, China

Synonym

Image acquisition

Definition

Image formation is the process in which three-dimensional (3D) scene points are projected onto two-dimensional (2D) image plane locations, both geometrically and optically. It involves two parts. The first part is the geometry (derived from the camera model assumed in the imaging process), which determines where in the image plane the projection of a scene point will be located. The other part of image formation is related to radiometry and measures the brightness of a point in the image plane as a function of illumination and surface properties.

Introduction

Common visual images result from light intensity variations across a two-dimensional plane. However, light is not the only source used in imaging. To understand how vision might be modeled computationally and replicated on a computer, it is important to understand the image acquisition process. Understanding image formation is also a prerequisite for solving some complex computer vision tasks, such as ▶ camera calibration and shape from shading.

There are many types of imaging devices, ranging from biological vision systems (e.g., animal eyes) to video cameras and medical imaging machines (e.g., a Magnetic Resonance Imaging (MRI) scanner). The mechanisms of image creation generally vary across imaging systems, so this entry cannot cover all of them. Instead, the author confines the discussion of the image formation process to a widely used type of camera, the television camera in the visual spectrum. Readers interested in image formation for other types of imaging devices are referred to [1] for medical image formation, [2] for synthetic aperture radar (SAR) image formation, [3] for infrared image formation, and [4] for acoustic image formation. The image formation process of a television camera involves two parts. The first part is the camera geometry, which determines where the projection of a scene point will be located in the image plane. The other part measures the amount of light energy received by individual pixels, which results from the interaction between the various scene surface materials and light sources.

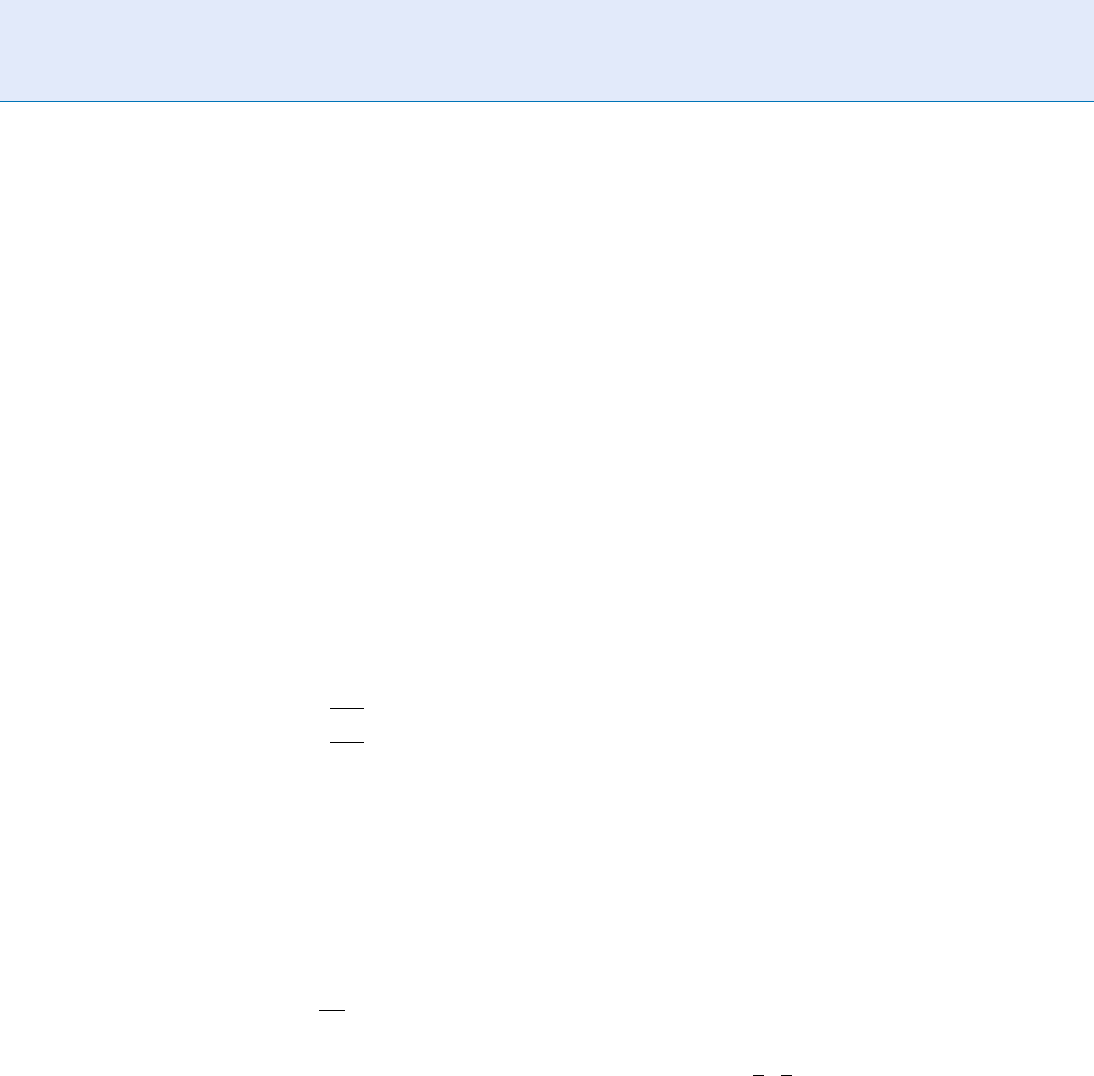

The Perspective Projection Camera Geometry

Commonly used geometric camera models include the perspective camera model, the weak-perspective camera model, the affine camera model, and the orthographic camera model. Among them, the perspective camera model is the most popular. In particular, the pinhole camera model is an idealized model that defines perspective projection: rays of light pass through a "pinhole" and form an inverted image of the object on the image plane. The geometry of the device is depicted in Fig. 1.

Image Formation. Figure 1 The perspective projection camera geometry.

In Fig. 1, the optical center $C$ coincides with the pinhole. The dotted vertical line perpendicular to the image plane is the optical axis, and its intersection with the image plane is the principal point $U_0$. The focal length $f$ is the distance between the optical center $C$ and the principal point $U_0$. (It is assumed that the camera is focused at infinity.) The projection is formed by an optical ray. The ray is reflected from a 3D scene point $\mathbf{X} = (x, y, z)^T$ (where $T$ denotes transpose), passes through the optical center $C$, and hits the 2D image plane at the point $\mathbf{U} = (u, v)^T$. Note that Fig. 1 uses three coordinate systems. The scene point $\mathbf{X}$ is expressed in the world coordinate system, which generally does not coincide with the camera coordinate system. The camera coordinate system has the optical center $C$ as its origin, and its Z-axis is aligned with the optical axis. The two coordinate systems can be aligned by a Euclidean transformation consisting of a $3 \times 3$ rotation matrix $R$ and a $3 \times 1$ translation vector $\mathbf{t}$. The projected point $\mathbf{U}$ can be expressed in pixels in the image plane coordinate system, whose origin is assumed to be the lower left corner of the image plane. Writing $\mathbf{X} = (x, y, z)^T$ in homogeneous form as $\tilde{\mathbf{X}} = (x, y, z, 1)^T$, the coordinates of $\mathbf{U}$ are given by [5]

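$$u = \frac{\mathbf{m}_1 \cdot \tilde{\mathbf{X}}}{\mathbf{m}_3 \cdot \tilde{\mathbf{X}}}, \qquad v = \frac{\mathbf{m}_2 \cdot \tilde{\mathbf{X}}}{\mathbf{m}_3 \cdot \tilde{\mathbf{X}}} \tag{1}$$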

where $\mathbf{m}_1$, $\mathbf{m}_2$, and $\mathbf{m}_3$ are the transposed rows of the $3 \times 4$ perspective projection matrix $M = K\,[R \mid \mathbf{t}]$, and "$\cdot$" denotes the dot product operation.

The $3 \times 3$ matrix $K$ in the perspective projection matrix $M$ contains the intrinsic parameters of the camera and can be expressed as

$$K = \begin{bmatrix} \alpha & -\alpha \cot\theta & u_0 \\ 0 & \dfrac{\beta}{\sin\theta} & v_0 \\ 0 & 0 & 1 \end{bmatrix}, \tag{2}$$

where $\alpha$ and $\beta$ are the scale factors of the image plane (in units of the focal length $f$), $\theta$ is the skew (the angle between the two image axes), and $(u_0, v_0)^T$ are the coordinates of the principal point. These five intrinsic parameters can be obtained through camera calibration.
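Equations (1) and (2) translate directly into a few lines of NumPy. This sketch is illustrative only. The function names and the example intrinsic values (α = β = 800, θ = 90°, principal point (320, 240)) are assumptions chosen for the demonstration, not values from the text, and θ is taken in radians.

```python
import numpy as np

def intrinsic_matrix(alpha, beta, theta, u0, v0):
    """K from Eq. (2): scale factors alpha, beta, skew angle theta (radians), principal point (u0, v0)."""
    return np.array([
        [alpha, -alpha / np.tan(theta), u0],
        [0.0,   beta / np.sin(theta),   v0],
        [0.0,   0.0,                    1.0],
    ])

def project(K, R, t, X):
    """Project a 3D world point X with Eq. (1), using M = K [R | t]."""
    M = K @ np.hstack([R, t.reshape(3, 1)])   # 3 x 4 perspective projection matrix
    X_h = np.append(X, 1.0)                   # homogeneous coordinates (x, y, z, 1)
    m1, m2, m3 = M                            # the three rows of M
    return np.array([m1 @ X_h / (m3 @ X_h), m2 @ X_h / (m3 @ X_h)])

K = intrinsic_matrix(800.0, 800.0, np.pi / 2, 320.0, 240.0)
R, t = np.eye(3), np.zeros(3)                 # world frame coincides with the camera frame
print(project(K, R, t, np.array([0.1, -0.2, 2.0])))
```

With zero skew (θ = 90°), Eq. (1) reduces to u = αx/z + u₀ and v = βy/z + v₀. For the example point this gives approximately (360, 160).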

Although the pinhole camera model is mathematically convenient, it does not hold exactly for real cameras equipped with real lenses. A more realistic model of real lenses includes radial distortion. Radial distortion is a type of aberration that bends light rays more or less than in the ideal case, so the image formed is distorted to some degree. Once the image has been undistorted, the camera projection can be formulated as a perspective projection.
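The text does not commit to a particular distortion model. As an assumption, this sketch uses the widely used two-coefficient polynomial radial model, x_d = x(1 + k₁r² + k₂r⁴), in normalized image coordinates. It undoes the distortion by fixed-point iteration. The coefficients and function names are hypothetical. After undistortion, Eq. (1) applies to the corrected points.

```python
import numpy as np

def distort(xy, k1, k2):
    """Apply two-coefficient polynomial radial distortion to normalized points (N x 2)."""
    r2 = np.sum(xy**2, axis=1, keepdims=True)          # squared radius of each point
    return xy * (1.0 + k1 * r2 + k2 * r2**2)

def undistort(xy_d, k1, k2, iters=50):
    """Invert `distort` by fixed-point iteration: x = x_d / (1 + k1 r^2 + k2 r^4)."""
    xy = xy_d.copy()                                   # start from the distorted points
    for _ in range(iters):
        r2 = np.sum(xy**2, axis=1, keepdims=True)
        xy = xy_d / (1.0 + k1 * r2 + k2 * r2**2)
    return xy
```

Fixed-point iteration converges quickly when the distortion is mild, as it is near the image center. For strong distortion, a Newton step or a calibrated lookup table is a common alternative.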

The Radiometric Aspects that Determine the Brightness of an Image Point

A television camera measures the amount of light energy received by individual pixels, which results from the interaction among various materials and various light sources. The measured value is informally called brightness (or gray level). Radiometry is the branch of physics that deals with measuring the flow and transfer of radiant energy. It is a useful tool for relating the brightness of a point in the image plane to the radiant energy the point receives. Two radiometric quantities, radiance and irradiance, are used in the following explanations. Radiance is defined as the power of light emitted from a unit surface area into a unit solid angle. The unit of radiance is W m⁻² sr⁻¹ (watts per square meter per steradian). Irradiance is defined as the power of light that an image-capturing device receives per unit effective area of the camera. The gray levels of image pixels are quantized estimates of image irradiance. The unit of irradiance is W m⁻² (watts per square meter).

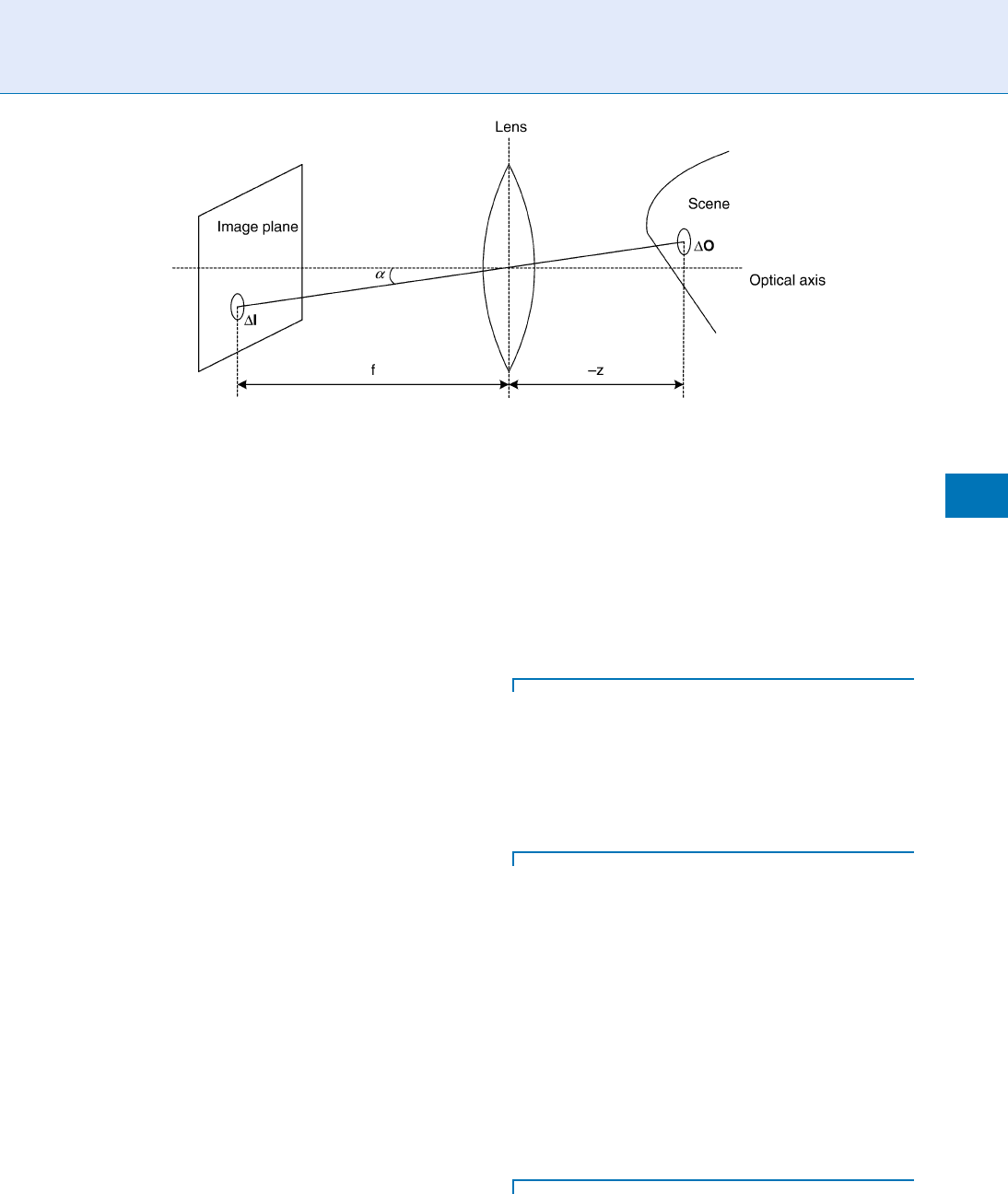

Consider the relationship between the irradiance $E$ measured in an infinitesimal image patch $\Delta I$ and the radiance $L$ produced by an infinitesimal scene patch $\Delta O$ (Fig. 2). In Fig. 2, a lens with focal length $f$ is placed at the coordinate origin, and the infinitesimal scene patch $\Delta O$ is at distance $z$ from the lens. The off-axis angle $\alpha$ is the angle between the optical axis and the line connecting $\Delta O$ with $\Delta I$. As given in [6],

$$E = L\,\frac{\pi}{4}\left(\frac{d}{f}\right)^{2}\cos^{4}\alpha, \tag{3}$$

where $d$ is the diameter of the lens. Equation (3) shows that the image irradiance is proportional to the scene radiance. It also shows that the irradiance falls off as $\cos^4\alpha$ away from the optical axis.
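Equation (3) is easy to evaluate directly. In the Python sketch shown here, the function name is hypothetical and angles are in radians. The ratio d/f is the reciprocal of the lens f-number. The cos⁴α factor makes off-axis image points darker even when the scene radiance is uniform.

```python
import math

def image_irradiance(L, d, f, alpha):
    """Eq. (3): E = L * (pi / 4) * (d / f)**2 * cos(alpha)**4, with alpha in radians."""
    return L * (math.pi / 4.0) * (d / f) ** 2 * math.cos(alpha) ** 4

on_axis = image_irradiance(1.0, 1.0, 2.0, 0.0)                    # f/2 lens, on the optical axis
off_axis = image_irradiance(1.0, 1.0, 2.0, math.radians(30.0))    # same lens, 30 degrees off axis
print(on_axis, off_axis / on_axis)
```

At α = 30°, the factor cos⁴α equals 9/16. The off-axis irradiance is therefore about 56% of its on-axis value.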

Image irradiance results from light reflected by scene objects, so the ability of different materials to reflect light must be described. The most general model for this purpose is the bidirectional reflectance distribution function (BRDF). The BRDF describes the brightness of an elementary surface patch for a specific material, light source direction, and viewer direction. For most practically applicable surfaces, the BRDF remains constant if the elementary surface patch rotates about its surface normal. A Lambertian surface (also called an ideal diffuse surface) reflects light energy in all directions with the same radiance. Its BRDF is $\rho/\pi$, where $\rho$ is the albedo of the surface.
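Multiplying the Lambertian BRDF ρ/π by the irradiance a surface patch receives gives the patch's outgoing radiance. This sketch assumes a single distant point source that would deliver irradiance E₀ to a patch facing it directly. The patch therefore receives E₀ cos θᵢ, where θᵢ is the angle between the surface normal and the light direction. The function name and parameters are illustrative.

```python
import numpy as np

def lambertian_radiance(albedo, normal, light_dir, source_irradiance):
    """Radiance leaving a Lambertian patch: (albedo / pi) * E0 * max(0, cos(theta_i)).

    The result is the same for every viewing direction, so no viewer direction is needed.
    """
    n = np.asarray(normal, dtype=float)
    n = n / np.linalg.norm(n)                    # unit surface normal
    s = np.asarray(light_dir, dtype=float)
    s = s / np.linalg.norm(s)                    # unit direction towards the light source
    cos_i = max(0.0, float(n @ s))               # no light reaches a patch facing away
    return albedo / np.pi * source_irradiance * cos_i
```

Feeding this radiance into Eq. (3) chains the two radiometric steps, from surface to lens to pixel. The single distant source is only a simplifying assumption.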

Summary

Mechanisms of image formation vary across different types of imaging devices. This entry has explained the image formation process of the television camera both geometrically and optically. The projected location of a 3D scene point on the 2D image plane depends on the camera geometry. The brightness of an image pixel is determined by the interaction among various materials and various light sources.

Related Entries

▶ Camera models

▶ Radiometric calibration

References

1. Webb, S.: The Physics of Medical Imaging. Taylor & Francis, London (1988)

2. Burns, B.L., Cordaro, J.T.: SAR image formation algorithm that compensates for the spatially variant effects of antenna motion. Proc. SPIE 2230, 14–24 (1994)

3. Foulkes, P.W.: Towards infrared image understanding. Ph.D. Thesis, Department of Engineering Science, Oxford University (1991)

4. Murino, V., Trucco, A.: A confidence-based approach to enhancing underwater acoustic image formation. IEEE Trans. Image Process. 8(2), 270–285 (1999)

5. Forsyth, D.A., Ponce, J.: Computer Vision: A Modern Approach. Prentice Hall, New Jersey (2003)

6. Sonka, M., Hlavac, V., Boyle, R.: Image Processing, Analysis, and Machine Vision, 2nd edn. Brooks/Cole, California (1998)

Image Formation Process

▶ Face Sample Synthesis

Image Morphology

Morphological image processing is a theoretical model for digital image processing built upon lattice theory and topology. Morphological operators or filters rely only on the relative ordering of pixel values, not on their numerical values. They are especially suited to processing binary and grayscale images.

▶ Footwear Recognition

Image Pattern Classification

▶ Image Pattern Recognition

Image Formation. Figure 2 The relationship between image irradiance E and scene radiance L.


Image Pattern Recognition

JIAN YANG, JINGYU YANG

School of Computer Science and Technology, Nanjing University of Science and Technology, Nanjing, China

Synonyms

Image classification; Image pattern classification; Image recognition

Definition

Image pattern recognition is the problem of how to recognize image patterns. An image pattern recognition system generally consists of four parts:

- a camera that acquires the image samples to be classified;
- an image preprocessor that improves the quality of the images;
- a feature extraction mechanism that extracts discriminative features from the images for recognition;
- a classification scheme that classifies the image samples based on the extracted features.

Introduction

Images are the most important patterns we perceive every day. Many biometric patterns, such as faces, fingerprints, palmprints, hands, irises, and ears, are captured as images. Image pattern recognition is therefore a fundamental problem in pattern recognition, particularly in biometrics. An image pattern recognition task generally includes four steps: image acquisition, image preprocessing, image feature extraction, and classification, as shown in Fig. 1.

Image acquisition is the process of acquiring digital images from devices used primarily to capture still images, such as digital cameras, scanners, or video frame grabbers. It is the first, elementary step of an image pattern recognition task. The quality of the acquired images strongly affects the performance of the whole image pattern recognition system.

Image preprocessing is a common term for operations on images at the lowest level of abstraction. Its objective is to improve the image data, either by removing unwanted areas from the original image or by enhancing image features that are important for further processing. For biometric image recognition, image preprocessing generally involves two important aspects: image cropping and image enhancement.

Image feature extraction is the core problem of image pattern recognition, because finding the most discriminative features of images is key to solving pattern classification problems. Our visual system has a special ability to find the most discriminative features. Given an image, we grasp its most important features at a glance, and based on only these few features, we can recognize the image immediately the next time. A direct approach to image pattern recognition is to match two images based on their pixel values. This approach uses the pixel values of an image as features, without any feature extraction. Because image patterns are typically high-dimensional, it generally has three weaknesses:

1. classification takes more time;
2. the storage requirement of the recognition system increases;
3. it may suffer from the so-called "curse of dimensionality" and achieve unsatisfactory recognition performance.

Classification is the task of classifying the image samples based on the extracted features and assigning a class label to each image. A classifier needs to be designed or trained for this task. Many existing classifiers are available, but choosing among them is a difficult problem in practice. A more reliable approach is classifier combination, which combines several classifiers to achieve good, robust performance.

Image Preprocessing

In the context of biometrics, two image preprocessing steps deserve particular attention: image cropping and image enhancement. Image cropping refers to removing the unwanted areas of an image to accentuate the subject matter. For biometric images, the subject matter is the biometric object itself. For example, given a face image as shown in Fig. 2, the only concern is the face, since other parts, such as clothes and background, are of little use for face recognition. Proper cropping preserves the face for recognition. Figure 2 shows an example of image cropping. Image cropping can be done manually or automatically. Automatic image cropping is generally performed by an object detection algorithm designed specifically to find the object of interest.

Image Pattern Recognition. Figure 1 The general process of image pattern recognition.

Image enhancement is the improvement of digital image quality for visual inspection or machine analysis, without knowledge of the source of degradation. For biometric recognition, a primary role of image enhancement is to alleviate the effects of illumination. Image enhancement techniques can be divided into two broad categories. Spatial domain methods operate directly on pixels. Frequency domain methods operate on the ▶ Fourier transform of an image. A widely used spatial domain method is ▶ histogram equalization. It aims to enhance the contrast of an image by using the normalized cumulative histogram as the gray-scale mapping function [1]. Figure 2 shows the result of histogram equalization on an image. Note that many image enhancement methods are problem-oriented: a method that works well in one case may be completely inadequate in another. For biometric applications, a useful criterion for choosing an image enhancement method is whether it ultimately improves the recognition performance.
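The two preprocessing steps discussed above can be sketched in Python. The first crops the image to the region of interest. The second applies histogram equalization with the normalized cumulative histogram as the mapping. The bounding box is assumed to come from some object detector, which is not shown. Images are assumed to be 8-bit grayscale, and the function names are hypothetical.

```python
import numpy as np

def crop(img, box):
    """Keep only the region of interest; box = (top, left, height, width), e.g. from a detector."""
    top, left, h, w = box
    return img[top:top + h, left:left + w]

def histogram_equalize(img, levels=256):
    """Map gray levels through the normalized cumulative histogram (8-bit input)."""
    hist = np.bincount(img.ravel(), minlength=levels)
    cdf = np.cumsum(hist) / img.size                        # normalized cumulative histogram
    mapping = np.round(cdf * (levels - 1)).astype(np.uint8)
    return mapping[img]

def preprocess(img, box):
    """Crop first, so the background does not influence the equalization mapping."""
    return histogram_equalize(crop(img, box))
```

Cropping before equalizing matters. A large uniform background would otherwise dominate the histogram and waste most of the output gray levels. In the test, a low-contrast square (gray levels 100 to 107) on a black background is cropped and stretched to the range 32 to 255.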

Image Feature Extraction

Broadly speaking, two categories of features can be extracted from an image: holistic features and local features. Image feature extraction generally involves a transformation from the input image space to the feature space. Holistic features are extracted through a holistic transformation, whereas local features are derived through a local transformation.

Principal component analysis (PCA) and Fisher linear discriminant analysis (LDA) are two classical holistic transformation techniques. Both are widely applied in biometrics [2, 3]. However, PCA and LDA are essentially techniques based on 1D vector patterns. Before they can be applied to 2D image patterns, the 2D image matrices must be mapped into 1D pattern vectors by concatenating their columns or rows. The resulting pattern vectors generally lie in a high-dimensional space. For example, an image with a spatial resolution of 128 × 128 results in a 16,384-dimensional vector space. Computing the eigenvectors of the covariance matrix in such a space is very time-consuming. The singular value decomposition (SVD) technique [2] effectively reduces computation when the training sample size is much smaller than the dimensionality of the images. However, it does not help much when the training sample size becomes large.
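The computational shortcut rests on a simple identity. Let A be the d × N matrix of centered training vectors. If v is an eigenvector of the small N × N matrix AᵀA, then Av is an eigenvector of the large d × d matrix AAᵀ with the same eigenvalue, since AAᵀ(Av) = A(AᵀAv). A minimal NumPy sketch follows; the function name is hypothetical. It is equivalent to taking a thin SVD of the centered data and pays off only when N is much smaller than d, as noted above.

```python
import numpy as np

def pca_small_sample(X, k):
    """PCA for N samples of dimension d with N << d.

    X: (N, d) matrix of vectorized images. Returns the mean vector and the
    top-k principal axes as a (d, k) matrix with orthonormal columns.
    """
    mean = X.mean(axis=0)
    A = (X - mean).T                      # d x N centered data
    gram = A.T @ A                        # N x N instead of d x d
    vals, vecs = np.linalg.eigh(gram)     # eigenvalues in ascending order
    order = np.argsort(vals)[::-1][:k]    # indices of the k largest eigenvalues
    U = A @ vecs[:, order]                # map back: eigenvectors of A A^T
    U /= np.linalg.norm(U, axis=0)        # normalize each axis to unit length
    return mean, U
```

For 10 training images of 128 × 128 pixels, the eigenproblem shrinks from 16,384 × 16,384 to 10 × 10. The cost of the Gram matrix, however, grows with N², which is why the shortcut loses its advantage for large training sets.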

Compared with PCA, the two-dimensional PCA

method (2DPCA) [4] is a more efficient technique

for dealing with 2D image pattern, as 2DPCA works

on matrices rather than on vectors. Therefore, 2DPCA

does not transform an image into a vector, but rather,

it constructs an image covariance matrix directly from

the original image matrices. In contrast to the covari-

ance matrix of PCA, the size of the image covariance

matrix of 2DPCA is much smaller. For example, if the

image size is 128 128, the image covariance matrix of

2DPCA is still 128 128, regardless of the training

sample size. As a result, 2DPCA has a remarkable

computational advantage over PCA. In addition,

Image Pattern Recognition. Figure 2 Image cropping and histogram equalization.

Image Pattern Recognition

I

727

I

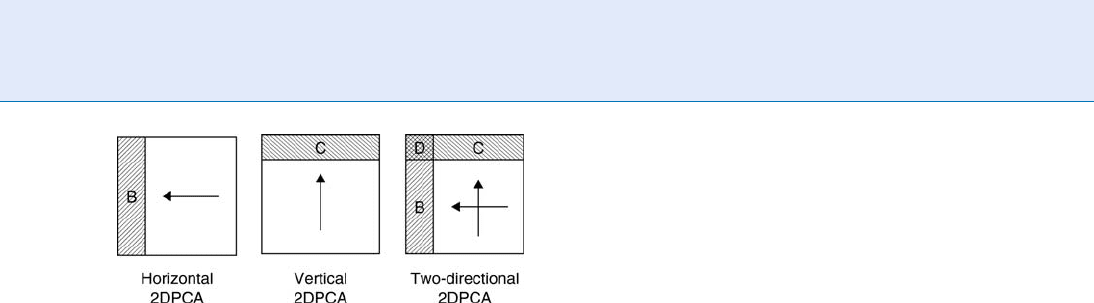

2DPCA can be performed on images horizontally and

vertically. The horizontal 2DPCA is invariant to verti-

cal image translations and vertical mirror imaging, and

the vertical 2DPCA is invariant to horizontal image

translations and horizontal mirror imaging. 2DPCA is

therefore less sensitive to imprecise object detection

and image cropping, and can improve the performance

of PCA for image recognition [5]. Following the deri-

vation of 2DPCA, some two-dimensional LDA

(2DLDA) versions were developed and used for

image feature extraction [6, 7](Fig. 3).
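Here is a minimal NumPy sketch of 2DPCA as described above. The image covariance matrix G = (1/M) Σⱼ (Aⱼ − Ā)ᵀ(Aⱼ − Ā) is built directly from the m × n image matrices. G is therefore n × n no matter how many training images M there are. Each image A is represented by the feature matrix Y = AX. The function names and the number of retained axes k are illustrative assumptions. Because G sums over image rows, reordering the rows (a vertical flip or a cyclic vertical shift) leaves it unchanged. This is the kind of invariance the text attributes to the horizontal 2DPCA.

```python
import numpy as np

def two_d_pca(images, k):
    """2DPCA sketch. images: (M, m, n) stack of image matrices.

    Returns (X, G): X is the n x k projection matrix whose columns are the top-k
    eigenvectors of the n x n image covariance matrix G.
    """
    centered = images - images.mean(axis=0)
    # G[i, l] = (1/M) * sum over images j and rows r of A_j[r, i] * A_j[r, l]
    G = np.einsum("jri,jrl->il", centered, centered) / len(images)
    vals, vecs = np.linalg.eigh(G)
    X = vecs[:, np.argsort(vals)[::-1][:k]]      # k leading eigenvectors
    return X, G

def features(A, X):
    """Feature matrix Y = A X (m x k) for one m x n image A."""
    return A @ X
```

Applying the same idea to the transposed images gives the vertical variant. Projecting on both sides yields the two-directional transform illustrated in Fig. 3.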

Gabor wavelet transformation [8] and local binary patterns (LBP) [9] are two representative local transformation techniques. A Gabor wavelet (filter) is defined by a two-dimensional Gabor function. Daugman proposed this function to model the spatial summation properties of the receptive fields of simple cells in the visual cortex [8]. Convolving an image with a bank of Gabor filters at various scales and orientations produces a set of Gabor features. Gabor features have proved very effective for biometric image recognition because they are insensitive to illumination variations. However, the Gabor feature space is generally very high-dimensional, since multiple scales and orientations generate many filters. A feature extraction method, such as PCA or LDA, is therefore usually applied afterwards to reduce the dimension further. Compared with the Gabor wavelet transformation, LBP is a relatively new local descriptor method. It is commonly combined with a spatially enhanced histogram for image feature extraction [10].
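The basic LBP operator and a spatially enhanced histogram can be sketched as follows. This is a simplified version under stated assumptions. It uses the original 3 × 3, 8-neighbor operator with 256 bins, without the uniform-pattern mapping or circular interpolated neighborhoods often used in practice. The 4 × 4 grid and the function names are hypothetical. Each bit records only whether a neighbor is at least as bright as the center. The codes are therefore unchanged by any monotonically increasing gray-level transformation.

```python
import numpy as np

def lbp_codes(img):
    """Basic 3x3 LBP: compare the 8 neighbors with the center pixel and pack the results into a byte."""
    img = img.astype(np.int32)
    h, w = img.shape
    center = img[1:-1, 1:-1]
    # The 8 neighbors, clockwise from the top-left; each contributes one bit.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros_like(center)
    for bit, (dr, dc) in enumerate(offsets):
        neighbor = img[1 + dr:h - 1 + dr, 1 + dc:w - 1 + dc]
        codes |= (neighbor >= center).astype(np.int32) << bit
    return codes

def spatial_lbp_histogram(img, grid=(4, 4)):
    """Concatenate the 256-bin LBP histograms of grid cells (a spatially enhanced histogram)."""
    codes = lbp_codes(img)
    row_groups = np.array_split(np.arange(codes.shape[0]), grid[0])
    col_groups = np.array_split(np.arange(codes.shape[1]), grid[1])
    hists = [np.bincount(codes[np.ix_(r, c)].ravel(), minlength=256)
             for r in row_groups for c in col_groups]
    return np.concatenate(hists)
```

The regional histograms keep coarse spatial layout that a single global histogram would discard. The resulting vector, 256 bins per cell, is what the classifier compares.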

Classification

After image feature extraction, one or more classifiers must be designed or chosen. The nearest neighbor (1-NN) classifier is one of the most widely used classifiers because of its simplicity and effectiveness. Cover and Hart laid the theoretical foundation of the 1-NN classifier. In 1967 they showed [11] that, as the training sample size approaches infinity, the error rate of the NN classifier is bounded above by twice the Bayes error rate. The K-NN classifier, a generalization of the 1-NN classifier, was presented subsequently [12]. In practice, the performance of the NN classifier depends on the representational capacity of the prototypes as well as on how many prototypes are available. A nearest feature line classifier was proposed to enhance the representational capacity of a limited set of prototypes and was applied to face biometrics [13].
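The 1-NN/K-NN rule and the nearest feature line idea can be contrasted in a short Python sketch. It assumes Euclidean distance, NumPy arrays of feature vectors and labels, and at least two prototypes per class for the feature-line variant. The function names are hypothetical. A feature line through two prototypes of a class lets the classifier interpolate and extrapolate between them, which is how it enlarges the representational capacity of a small prototype set.

```python
import numpy as np
from collections import Counter

def knn_predict(train_X, train_y, query, k=1):
    """K-NN (k=1 gives 1-NN): majority label among the k closest prototypes."""
    dists = np.linalg.norm(train_X - query, axis=1)
    nearest = np.argsort(dists, kind="stable")[:k]
    return Counter(train_y[i] for i in nearest).most_common(1)[0][0]

def nfl_predict(train_X, train_y, query):
    """Nearest feature line: smallest distance from the query to a line through two same-class prototypes."""
    best_label, best_dist = None, np.inf
    for label in np.unique(train_y):
        P = train_X[train_y == label]
        for i in range(len(P)):
            for j in range(i + 1, len(P)):
                direction = P[j] - P[i]
                if not direction.any():          # identical prototypes define no line
                    continue
                mu = (query - P[i]) @ direction / (direction @ direction)  # position along the line
                dist = np.linalg.norm(query - (P[i] + mu * direction))
                if dist < best_dist:
                    best_label, best_dist = label, dist
    return best_label
```

In the test data, the query lies far along the line through the two class-0 prototypes. 1-NN assigns it to class 1, whose prototypes happen to be closer, while the feature line places it in class 0. Checking every pair of prototypes makes the feature-line rule quadratic in the number of prototypes per class.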

Support vector machines (SVMs) [14] have been a very popular classification method over the last decade. An SVM seeks a separating hyperplane that maximizes the margin between the two classes of data. The margin is the perpendicular distance between two parallel hyperplanes. Each of these hyperplanes is determined by the sample points of one class that are closest to the separating hyperplane; these points are called support vectors. Intuitively, the larger the margin, the lower the ▶ generalization error of the classifier.
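The margin-maximization idea can be illustrated with a small linear SVM in Python. This sketch rests on several assumptions. It minimizes the standard soft-margin objective (λ/2)‖w‖² + mean hinge loss by plain full-batch subgradient descent. That simple solver replaces the quadratic-programming formulations described in [14]. The hyperparameters and function names are arbitrary. For a hyperplane w·x + b = 0, the two margin hyperplanes w·x + b = ±1 lie 2/‖w‖ apart, so a smaller ‖w‖ means a larger margin.

```python
import numpy as np

def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=1000):
    """Soft-margin linear SVM by full-batch subgradient descent on the regularized hinge loss.

    Minimizes lam/2 * ||w||^2 + mean(max(0, 1 - y_i (w . x_i + b))), labels y_i in {-1, +1}.
    """
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        violated = y * (X @ w + b) < 1          # points inside the margin or misclassified
        grad_w = lam * w - (y[violated, None] * X[violated]).sum(axis=0) / len(X)
        grad_b = -y[violated].sum() / len(X)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def margin_width(w):
    """Distance between the hyperplanes w.x + b = +1 and w.x + b = -1."""
    return 2.0 / np.linalg.norm(w)
```

Only the points that violate the margin contribute to the update, which mirrors the role of the support vectors. Nonlinear SVMs replace the dot products with a kernel, which this sketch does not cover.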

No classifier is perfect; each has its own advantages and weaknesses. To achieve good, robust classification performance, multiple classifiers can be combined in practice. Once the set of classifiers has been determined, the remaining problem is to design or choose a proper combination scheme. Many classifier combination schemes, including voting, the sum rule, bagging, and boosting, have been proposed and summarized in the literature [15].
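Two of the simplest combination schemes listed above take only a few lines: majority voting over predicted labels, and the sum rule over per-class scores. The sketch assumes class labels are encoded as integers 0, 1, 2, and so on. For the sum rule, it assumes each classifier outputs comparable posterior-like scores. The function names are hypothetical. Bagging and boosting are not shown, because they also change how the individual classifiers are trained.

```python
import numpy as np

def majority_vote(labels):
    """labels: (n_classifiers, n_samples) predicted class indices. Ties go to the smallest index."""
    labels = np.asarray(labels)
    n_classes = labels.max() + 1
    votes = np.stack([(labels == c).sum(axis=0) for c in range(n_classes)])  # (n_classes, n_samples)
    return votes.argmax(axis=0)

def sum_rule(scores):
    """scores: (n_classifiers, n_samples, n_classes) posterior-like outputs; add them, then take the argmax."""
    return np.asarray(scores).sum(axis=0).argmax(axis=1)
```

The sum rule uses the classifiers' confidence, not just their decisions. A confident classifier can therefore overturn a less confident one, as in the second test case.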

Summary

This entry provides a general view of image pattern recognition. The process of image pattern recognition includes four steps: image acquisition, image preprocessing, image feature extraction, and classification. For image preprocessing, the entry focuses on image cropping and image enhancement, two important steps in dealing with biometric images. For image feature extraction, holistic and local methods are outlined, with an emphasis on the 2DPCA method, which was designed for two-dimensional image patterns. For classification, the nearest neighbor classifier, the support vector machine, and classifier combination methods have been introduced.

Image Pattern Recognition. Figure 3 Illustration of the horizontal 2DPCA transform, the vertical 2DPCA transform, and the 2DPCA transform in both directions.

Related Entries

▶ Dimensionality Reduction

▶ Feature Extraction

▶ Image Formation

▶ Local Feature Filters

References

1. Russ, J.C.: The Image Processing Handbook (4th edn.). Baker & Taylor Press, Charlotte, NC (2002)

2. Turk, M., Pentland, A.: Eigenfaces for recognition. J. Cogn. Neurosci. 3(1), 71–86 (1991)

3. Belhumeur, P.N., Hespanha, J.P., Kriegman, D.J.: Eigenfaces vs. Fisherfaces: recognition using class specific linear projection. IEEE Trans. Pattern Anal. Machine Intell. 19(7), 711–720 (1997)

4. Yang, J., Zhang, D., Frangi, A.F., Yang, J.-y.: Two-dimensional PCA: a new approach to face representation and recognition. IEEE Trans. Pattern Anal. Machine Intell. 26(1), 131–137 (2004)

5. Yang, J., Liu, C.: Horizontal and vertical 2DPCA-based discriminant analysis for face verification on a large-scale database. IEEE Trans. Inf. Forensics Security. 2(4), 781–792 (2007)

6. Yang, J., Yang, J.-y., Frangi, A.F., Zhang, D.: Uncorrelated projection discriminant analysis and its application to face image feature extraction. Intern. J. Pattern Recognit. Artif. Intell. 17(8), 1325–1347 (2003)

7. Ye, J., Janardan, R., Li, Q.: Two-dimensional linear discriminant analysis. In: Proceedings of the Annual Conference on Advances in Neural Information Processing Systems (NIPS'04), Vancouver, BC, Canada (2004)

8. Daugman, J.G.: Uncertainty relations for resolution in space, spatial frequency, and orientation optimized by two-dimensional visual cortical filters. J. Opt. Soc. Am. A. 2, 1160–1169 (1985)

9. Ahonen, T., Hadid, A., Pietikäinen, M.: Face description with local binary patterns: application to face recognition. IEEE Trans. Pattern Anal. Machine Intell. 28(12), 2037–2041 (2006)

10. Liu, C., Wechsler, H.: Gabor feature based classification using the enhanced fisher linear discriminant model for face recognition. IEEE Trans. Image Process. 11(4), 467–476 (2002)

11. Cover, T.M., Hart, P.E.: Nearest neighbor pattern classification. IEEE Trans. Inf. Theory. 13(1), 21–27 (1967)

12. Fukunaga, K.: Introduction to Statistical Pattern Recognition (2nd edn.). Academic Press, New York (1990)

13. Li, S.Z., Lu, J.: Face recognition using the nearest feature line method. IEEE Trans. Neural Netw. 10(2), 439–443 (1999)

14. Burges, C.J.C.: A tutorial on support vector machines for pattern recognition. Data Min. Knowl. Disc. 2, 121–167 (1998)

15. Jain, A.K., Duin, R.P.W., Mao, J.: Statistical pattern recognition: a review. IEEE Trans. Pattern Anal. Machine Intell. 22(1), 4–37 (2000)

16. Generalization error. http://en.wikipedia.org/wiki/Generalization_error

Image Recognition

▶ Image Pattern Recognition

Image Regeneration from Templates

▶ Template Security

Image Resolution

Image resolution describes the detail an image holds. Higher resolution means more image detail. When applied to digital images, this term usually means pixel resolution, which is a pixel count in digital imaging.

▶ Skin Texture

Image Segmentation

Image segmentation is a technique that partitions a given input image into several regions. The pixels in each region share common features, such as similar texture, similar colors, or membership in a semantically meaningful object. The goal of image segmentation is to map every pixel of an image to a group that is meaningful to humans. Image segmentation is most often used to locate objects of interest and to find the boundaries between objects.
