Wooldridge J. Introductory Econometrics: A Modern Approach (Basic Text - 3d ed.)

Подождите немного. Документ загружается.

the other betas) by IV. What do we have to assume about condis in each

equation?

16.6 Consider a linear probability model for whether employers offer a pension plan

based on the percentage of workers belonging to a union, as well as other factors:

pension b

0

b

1

percunion b

2

avgage b

3

avgeduc

b

4

percmale b

5

percmarr u

1

.

(i) Why might percunion be jointly determined with pension?

(ii) Suppose that you can survey workers at firms and collect information

on workers’ families. Can you think of information that can be used to

construct an IV for percunion?

(iii) How would you test whether your variable is at least a reasonable IV

candidate for percunion?

16.7 For a large university, you are asked to estimate the demand for tickets to women’s

basketball games. You can collect time series data over 10 seasons, for a total of about

150 observations. One possible model is

lATTEND

t

b

0

b

1

lPRICE

t

b

2

WINPERC

t

b

3

RIVAL

t

b

4

WEEKEND

t

b

5

t u

t

,

where PRICE

t

is the price of admission, probably measured in real terms—say, deflating

by a regional consumer price index—WINPERC

t

is the team’s current winning percent-

age, RIVAL

t

is a dummy variable indicating a game against a rival, and WEEKEND

t

is

a dummy variable indicating whether the game is on a weekend. The l denotes natural

logarithm, so that the demand function has a constant price elasticity.

(i) Why is it a good idea to have a time trend in the equation?

(ii) The supply of tickets is fixed by the stadium capacity; assume this has

not changed over the 10 years. This means that quantity supplied does not

vary with price. Does this mean that price is necessarily exogenous in the

demand equation? (Hint: The answer is no.)

(iii) Suppose that the nominal price of admission changes slowly—say, at

the beginning of each season. The athletic office chooses price based

partly on last season’s average attendance, as well as last season’s team

success. Under what assumptions is last season’s winning percentage

(SEASPERC

t1

) a valid instrumental variable for lPRICE

t

?

(iv) Does it seem reasonable to include the (log of the) real price of men’s bas-

ketball games in the equation? Explain. What sign does economic theory

predict for its coefficient? Can you think of another variable related to

men’s basketball that might belong in the women’s attendance equation?

(v) If you are worried that some of the series, particularly lATTEND and

lPRICE,have unit roots, how might you change the estimated equation?

(vi) If some games are sold out, what problems does this cause for estimating

the demand function? (Hint: If a game is sold out, do you necessarily

observe the true demand?)

576 Part 3 Advanced Topics

16.8 How big is the effect of per-student school expenditures on local housing values? Let

HPRICE be the median housing price in a school district and let EXPEND be per-student

expenditures. Using panel data for the years 1992, 1994, and 1996, we postulate the model

lHPRICE

it

u

t

b

1

lEXPEND

it

b

2

lPOLICE

it

b

3

lMEDINC

it

b

4

PROPTAX

it

a

i1

u

it1

,

where POLICE

it

is per capita police expenditures, MEDINC

it

is median income, and

PROPTAX

it

is the property tax rate; l denotes natural logarithm. Expenditures and hous-

ing price are simultaneously determined because the value of homes directly affects the

revenues available for funding schools.

Suppose that, in 1994, the way schools were funded was drastically changed: rather

than being raised by local property taxes, school funding was largely determined at the

state level. Let lSTATEALL

it

denote the log of the state allocation for district i in year t,

which is exogenous in the preceding equation, once we control for expenditures and a dis-

trict fixed effect. How would you estimate the b

j

?

COMPUTER EXERCISES

C16.1 Use SMOKE.RAW for this exercise.

(i) A model to estimate the effects of smoking on annual income (perhaps

through lost work days due to illness, or productivity effects) is

log(income) b

0

b

1

cigs b

2

educ b

3

age b

4

age

2

u

1

,

where cigs is number of cigarettes smoked per day, on average. How

do you interpret b

1

?

(ii) To reflect the fact that cigarette consumption might be jointly deter-

mined with income, a demand for cigarettes equation is

cigs g

0

g

1

log(income) g

2

educ g

3

age g

4

age

2

g

5

log(cigpric) g

6

restaurn u

2

,

where cigpric is the price of a pack of cigarettes (in cents), and

restaurn is a binary variable equal to unity if the person lives in a state

with restaurant smoking restrictions. Assuming these are exogenous to

the individual, what signs would you expect for g

5

and g

6

?

(iii) Under what assumption is the income equation from part (i) identified?

(iv) Estimate the income equation by OLS and discuss the estimate of b

1

.

(v) Estimate the reduced form for cigs. (Recall that this entails regressing

cigs on all exogenous variables.) Are log(cigpric) and restaurn signif-

icant in the reduced form?

(vi) Now, estimate the income equation by 2SLS. Discuss how the estimate

of b

1

compares with the OLS estimate.

(vii) Do you think that cigarette prices and restaurant smoking restrictions

are exogenous in the income equation?

Chapter 16 Simultaneous Equations Models 577

C16.2 Use MROZ.RAW for this exercise.

(i) Reestimate the labor supply function in Example 16.5, using log(hours)

as the dependent variable. Compare the estimated elasticity (which is

now constant) to the estimate obtained from equation (16.24) at the

average hours worked.

(ii) In the labor supply equation from part (i), allow educ to be endogenous

because of omitted ability. Use motheduc and fatheduc as IVs for educ.

Remember, you now have two endogenous variables in the equation.

(iii) Test the overidentifying restrictions in the 2SLS estimation from part

(ii). Do the IVs pass the test?

C16.3 Use the data in OPENNESS.RAW for this exercise.

(i) Because log(pcinc) is insignificant in both (16.22) and the reduced

form for open,drop it from the analysis. Estimate (16.22) by OLS and

IV without log(pcinc). Do any important conclusions change?

(ii) Still leaving log(pcinc) out of the analysis, is land or log(land ) a bet-

ter instrument for open? (Hint: Regress open on each of these sepa-

rately and jointly.)

(iii) Now, return to (16.22). Add the dummy variable oil to the equation and

treat it as exogenous. Estimate the equation by IV. Does being an oil

producer have a ceteris paribus effect on inflation?

C16.4 Use the data in CONSUMP.RAW for this exercise.

(i) In Example 16.7, use the method from Section 15.5 to test the single

overidentifying restriction in estimating (16.35). What do you conclude?

(ii) Campbell and Mankiw (1990) use second lags of all variables as IVs

because of potential data measurement problems and informational

lags. Reestimate (16.35), using only gc

t2

, gy

t2

, and r3

t2

as IVs. How

do the estimates compare with those in (16.36)?

(iii) Regress gy

t

on the IVs from part (ii) and test whether gy

t

is sufficiently

correlated with them. Why is this important?

C16.5 Use the Economic Report of the President (2005 or later) to update the data in

CONSUMP.RAW, at least through 2003. Reestimate equation (16.35). Do any important

conclusions change?

C16.6 Use the data in CEMENT.RAW for this exercise.

(i) A static (inverse) supply function for the monthly growth in cement

price (gprc) as a function of growth in quantity (gcem) is

gprc

t

a

1

gcem

t

b

0

b

1

gprcpet b

2

feb

t

… b

12

dec

t

u

t

s

,

where gprcpet (growth in the price of petroleum) is assumed to be

exogenous and feb,…,dec are monthly dummy variables. What signs

do you expect for a

1

and b

1

? Estimate the equation by OLS. Does the

supply function slope upward?

578 Part 3 Advanced Topics

(ii) The variable gdefs is the monthly growth in real defense spending in

the United States. What do you need to assume about gdefs for it to be

a good IV for gcem? Test whether gcem is partially correlated with

gdefs. (Do not worry about possible serial correlation in the reduced

form.) Can you use gdefs as an IV in estimating the supply function?

(iii) Shea (1993) argues that the growth in output of residential (gres) and

nonresidential (gnon) construction are valid instruments for gcem. The

idea is that these are demand shifters that should be roughly uncorre-

lated with the supply error u

t

s

. Test whether gcem is partially correlated

with gres and gnon; again, do not worry about serial correlation in the

reduced form.

(iv) Estimate the supply function, using gres and gnon as IVs for gcem.

What do you conclude about the static supply function for cement?

[The dynamic supply function is, apparently, upward sloping; see Shea

(1993).]

C16.7 Refer to Example 13.9 and the data in CRIME4.RAW.

(i) Suppose that, after differencing to remove the unobserved effect, you

think log(polpc) is simultaneously determined with log(crmrte); in

particular, increases in crime are associated with increases in police

officers. How does this help to explain the positive coefficient on

log(polpc) in equation (13.33)?

(ii) The variable taxpc is the taxes collected per person in the county. Does

it seem reasonable to exclude this from the crime equation?

(iii) Estimate the reduced form for log(polpc) using pooled OLS, includ-

ing the potential IV, log(taxpc). Does it look like log(taxpc) is a

good IV candidate? Explain.

(iv) Suppose that, in several of the years, the state of North Carolina

awarded grants to some counties to increase the size of their county

police force. How could you use this information to estimate the effect

of additional police officers on the crime rate?

C16.8 Use the data set in FISH.RAW, which comes from Graddy (1995), to do this exer-

cise. The data set is also used in Computer Exercise C12.9. Now, we will use it to esti-

mate a demand function for fish.

(i) Assume that the demand equation can be written, in equilibrium for

each time period, as

log(totqty

t

) a

1

log(avgprc

t

) b

10

b

11

mon

t

b

12

tues

t

b

13

wed

t

b

14

thurs

t

u

t1

,

so that demand is allowed to differ across days of the week. Treating

the price variable as endogenous, what additional information do we

need to consistently estimate the demand-equation parameters?

(ii) The variables wave2

t

and wave3

t

are measures of ocean wave heights

over the past several days. What two assumptions do we need to make

in order to use wave2

t

and wave3

t

as IVs for log(avgprc

t

) in estimating

the demand equation?

Chapter 16 Simultaneous Equations Models 579

(iii) Regress log(avgprc

t

) on the day-of-the-week dummies and the two

wave measures. Are wave2

t

and wave3

t

jointly significant? What is the

p-value of the test?

(iv) Now, estimate the demand equation by 2SLS. What is the 95% confi-

dence interval for the price elasticity of demand? Is the estimated elas-

ticity reasonable?

(v) Obtain the 2SLS residuals, uˆ

t1

. Add a single lag, uˆ

t1,1

in estimating

the demand equation by 2SLS. Remember, use uˆ

t1,1

as its own instru-

ment. Is there evidence of AR(1) serial correlation in the demand equa-

tion errors?

(vi) Given that the supply equation evidently depends on the wave

variables, what two assumptions would we need to make in order to

estimate the price elasticity of supply?

(vii) In the reduced form equation for log(avgprc

t

), are the day-of-the-week

dummies jointly significant? What do you conclude about being able

to estimate the supply elasticity?

C16.9 For this exercise, use the data in AIRFARE.RAW, but only for the year 1997.

(i) A simple demand function for airline seats on routes in the United

States is

log(passen) b

10

a

1

log(fare) b

11

log(dist) b

12

[log(dist)]

2

u

1

,

where passen is average passengers per day, fare is average airfare, and

dist is the route distance (in miles). If this is truly a demand function,

what should be the sign of a

1

?

(ii) Estimate the equation from part (i) by OLS. What is the estimated price

elasticity?

(iii) Consider the variable concen,which is a measure of market concen-

tration. (Specifically, it is the share of business accounted for by

the largest carrier.) Explain in words what we must assume to treat con-

cen as exogenous in the demand equation.

(iv) Now assume concen is exogenous to the demand equation. Estimate the

reduced form for log(fare) and confirm that concen has a positive (par-

tial) effect on log(fare).

(v) Estimate the demand function using IV. Now what is the estimate price

elasticity of demand? How does it compare with the OLS estimate?

(vi) Using the IV estimates, describe how demand for seats depends on

route distance.

C16.10 Use the entire panel data set in AIRFARE.RAW for this exercise. The demand

equation in a simultaneous equations unobserved effects model is

log(passen

it

) u

t1

a

1

log(fare

it

) a

i1

u

it1

,

where we absorb the distance variables into a

i1

.

580 Part 3 Advanced Topics

(i) Estimate the demand function using fixed effects, being sure to include

year dummies to account for the different intercepts. What is the esti-

mated elasticity?

(ii) Use fixed effects to estimate the reduced form

log(fare

it

) u

t2

p

21

concen

it

c

i2

v

it2

.

Perform the appropriate test to ensure that concen

it

can be used as an

IV for log(fare

it

).

(iii) Now estimate the demand function using the fixed effects transforma-

tion along with IV, as in equation (16.42). Now what is the estimated

elasticity? Is it statistically significant?

Chapter 16 Simultaneous Equations Models 581

Limited Dependent Variable Models

and Sample Selection Corrections

I

n Chapter 7, we studied the linear probability model, which is simply an application of

the multiple regression model to a binary dependent variable. A binary dependent vari-

able is an example of a limited dependent variable (LDV). An LDV is broadly defined

as a dependent variable whose range of values is substantively restricted. A binary vari-

able takes on only two values, zero and one. We have seen several other examples of lim-

ited dependent variables: participation percentage in a pension plan must be between zero

and 100, the number of times an individual is arrested in a given year is a nonnegative

integer, and college grade point average is between zero and 4.0 at most colleges.

Most economic variables we would like to explain are limited in some way, often because

they must be positive. For example, hourly wage, housing price, and nominal interest rates

must be greater than zero. But not all such variables need special treatment. If a strictly pos-

itive variable takes on many different values, a special econometric model is rarely necessary.

When y is discrete and takes on a small number of values, it makes no sense to treat it as

an approximately continuous variable. Discreteness of y does not in itself mean that lin-

ear models are inappropriate. However, as we saw in Chapter 7 for binary response, the

linear probability model has certain drawbacks. In Section 17.1, we discuss logit and pro-

bit models, which overcome the shortcomings of the LPM; the disadvantage is that they

are more difficult to interpret.

Other kinds of limited dependent variables arise in econometric analysis, especially

when the behavior of individuals, families, or firms is being modeled. Optimizing behav-

ior often leads to a corner solution response for some nontrivial fraction of the popula-

tion. That is, it is optimal to choose a zero quantity or dollar value, for example. During

any given year, a significant number of families will make zero charitable contributions.

Therefore, annual family charitable contributions has a population distribution that is spread

out over a large range of positive values, but with a pileup at the value zero. Although a

linear model could be appropriate for capturing the expected value of charitable contribu-

tions, a linear model will likely lead to negative predictions for some families. Taking the

natural log is not possible because many observations are zero. The Tobit model, which we

cover in Section 17.2, is explicitly designed to model corner solution dependent variables.

Another important kind of LDV is a count variable, which takes on nonnegative inte-

ger values. Section 17.3 illustrates how Poisson regression models are well suited for mod-

eling count variables.

In some cases, we observe limited dependent variables due to data censoring, a topic

we introduce in Section 17.4. The general problem of sample selection, where we observe

a nonrandom sample from the underlying population, is treated in Section 17.5.

Limited dependent variable models can be used for time series and panel data, but

they are most often applied to cross-sectional data. Sample selection problems are usually

confined to cross-sectional or panel data. We focus on cross-sectional applications in this

chapter. Wooldridge (2002) presents these problems in the context of panel data models

and provides many more details for cross-sectional and panel data applications.

17.1 Logit and Probit Models for Binary Response

The linear probability model is simple to estimate and use, but it has some drawbacks that

we discussed in Section 7.5. The two most important disadvantages are that the fitted prob-

abilities can be less than zero or greater than one and the partial effect of any explanatory

variable (appearing in level form) is constant. These limitations of the LPM can be over-

come by using more sophisticated binary response models.

In a binary response model, interest lies primarily in the response probability

P(y 1x) P(y 1x

1

,x

2

,…,x

k

), (17.1)

where we use x to denote the full set of explanatory variables. For example, when y is an

employment indicator, x might contain various individual characteristics such as educa-

tion, age, marital status, and other factors that affect employment status, including a binary

indicator variable for participation in a recent job training program.

Specifying Logit and Probit Models

In the LPM, we assume that the response probability is linear in a set of parameters,

j

;

see equation (7.27). To avoid the LPM limitations, consider a class of binary response

models of the form

P(y 1x) G(

0

1

x

1

…

k

x

k

) G(

0

x

), (17.2)

where G is a function taking on values strictly between zero and one: 0 G(z) 1, for

all real numbers z. This ensures that the estimated response probabilities are strictly

between zero and one. As in earlier chapters, we write x

1

x

1

…

k

x

k

.

Various nonlinear functions have been suggested for the function G in order to make

sure that the probabilities are between zero and one. The two we will cover here are used

in the vast majority of applications (along with the LPM). In the logit model, G is the

logistic function:

G(z) exp(z)/[1 exp(z)] (z), (17.3)

Chapter 17 Limited Dependent Variable Models and Sample Selection Corrections 583

which is between zero and one for all real numbers z. This is the cumulative distribution

function for a standard logistic random variable. In the probit model, G is the standard

normal cumulative distribution function (cdf), which is expressed as an integral:

G(z) (z)

z

(v)dv, (17.4)

where

(z) is the standard normal density

(z) (2

)

1/2

exp(z

2

/2). (17.5)

This choice of G again ensures that (17.2) is strictly between zero and one for all values

of the parameters and the x

j

.

The G functions in (17.3) and (17.4) are both increasing functions. Each increases most

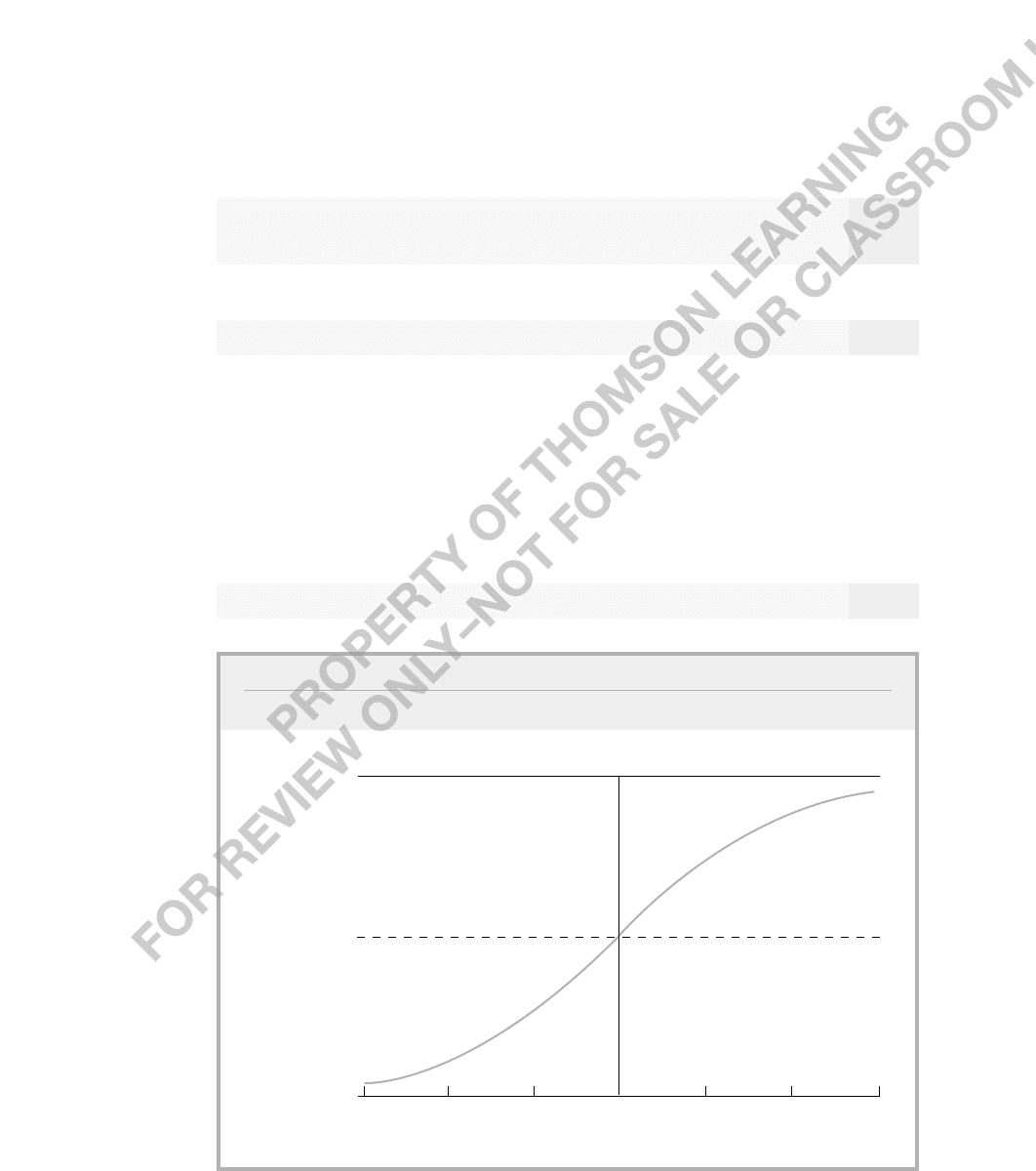

quickly at z 0, G(z) S 0 as z S , and G(z) S 1 as z S . The logistic function is

plotted in Figure 17.1. The standard normal cdf has a shape very similar to that of the

logistic cdf.

Logit and probit models can be derived from an underlying latent variable model.

Let y* be an unobserved, or latent,variable, determined by

y*

0

x

e, y 1[y* 0], (17.6)

584 Part 3 Advanced Topics

FIGURE 17.1

Graph of the logistic function G(z) exp(z)/[1 exp(z)].

G(z) exp(z)/[1 exp(z)]

3

1

.5

0

3

2 1

0

1

2

z

where we introduce the notation 1[] to define a binary outcome. The function 1[] is called

the indicator function,which takes on the value one if the event in brackets is true, and

zero otherwise. Therefore, y is one if y* 0, and y is zero if y* 0. We assume that e is

independent of x and that e either has the standard logistic distribution or the standard nor-

mal distribution. In either case, e is symmetrically distributed about zero, which means that

1 G(z) G(z) for all real numbers z. Economists tend to favor the normality assump-

tion for e,which is why the probit model is more popular than logit in econometrics. In

addition, several specification problems, which we touch on later, are most easily analyzed

using probit because of properties of the normal distribution.

From (17.6) and the assumptions given, we can derive the response probability for y:

P(y 1x) P(y* 0x) P[e (

0

x

)x]

1 G[(

0

x

)] G(

0

x

),

which is exactly the same as (17.2).

In most applications of binary response models, the primary goal is to explain the

effects of the x

j

on the response probability P(y 1x). The latent variable formulation

tends to give the impression that we are primarily interested in the effects of each x

j

on

y*. As we will see, for logit and probit, the direction of the effect of x

j

on E(y*x)

0

x

and on E(yx) P(y 1x) G(

0

x

) is always the same. But the latent

variable y* rarely has a well-defined unit of measurement. (For example, y* might be the

difference in utility levels from two different actions.) Thus, the magnitudes of each

j

are

not, by themselves, especially useful (in contrast to the linear probability model). For most

purposes, we want to estimate the effect of x

j

on the probability of success

P(y 1x), but this is complicated by the nonlinear nature of G().

To find the partial effect of roughly continuous variables on the response probability,

we must rely on calculus. If x

j

is a roughly continuous variable, its partial effect on

p(x) P(y 1x) is obtained from the partial derivative:

g(

0

x

)

j

,whereg(z) (z).

(17.7)

Because G is the cdf of a continuous random variable, g is a probability density function.

In the logit and probit cases, G() is a strictly increasing cdf, and so g(z) 0 for all z.

Therefore, the partial effect of x

j

on p(x) depends on x through the positive quantity g(

0

x

), which means that the partial effect always has the same sign as

j

.

Equation (17.7) shows that the relative effects of any two continuous explanatory vari-

ables do not depend on x: the ratio of the partial effects for x

j

and x

h

is

j

/

h

. In the typical

case that g is a symmetric density about zero, with a unique mode at zero, the largest effect

occurs when

0

x

0. For example, in the probit case with g(z)

(z), g(0)

(0)

1/

2

.40. In the logit case, g(z) exp(z)/[1 exp(z)]

2

, and so g(0) .25.

If, say, x

1

is a binary explanatory variable, then the partial effect from changing x

1

from

zero to one, holding all other variables fixed, is simply

G(

0

1

2

x

2

…

k

x

k

) G(

0

2

x

2

…

k

x

k

).

(17.8)

dG

dz

∂p(x)

∂x

j

Chapter 17 Limited Dependent Variable Models and Sample Selection Corrections 585