Li S.Z., Jain A.K. (eds.) Encyclopedia of Biometrics

Подождите немного. Документ загружается.

Localization Inaccuracy

▶ Face Misalignment Problem

Logical Access Control, Client-Based

Most platforms and peripherals that come w ith em-

bedded fingerprint readers include software to access

the local PC and applicati ons. These applications may

include biometric-based access to the PC, pre-boot

authentication, full disk encryption, Windows logon,

and a general password manager application to facili-

tate the use of biometric for other applications and

websites. Such a suite of applications protects the spe-

cific PC on which it is deployed and makes pers onal

access to data more secure, convenient, and fun. Com-

panies such as Dell, Lenovo, Microsoft, and Hewlett-

Packard ship platforms and peripherals are pre-loaded

with such capability. However, these are end-user uti-

lities with the scope of use only on the local PC. As a

result, they may be challenging and costly to manage if

deployed widely in an enterprise since each user will

need to setup, enroll her biometric, and configure the

appropriate policy, all by herself. Usually the user is

given the option to use the biometric system as a cool

individual convenience, rather than enforced by an

enterprise-wide authentication policy.

▶ Access Control, Logical

Logical Access Control,

Client-Server-Based

The client-server-based logical access control solutions

typically limit the flexibility given to the end-user and

instead focus on the needs of the organization and the

system administrator to deploy, enroll users’ biometric

credentials into the enterprise directory, and centrally

configure enterprise-wide policies. An enterprise-wide

policy, however, drives stronger requirements for the

reliability, security, and interoperability of the biomet-

ric authentication. If it is a busin ess policy that every-

one in the organization must use the biometric system

for authentication, the reliability of the biometric sys-

tem must be higher than a client-side-only solution

where the user can opt-in to use the biometric system

just for convenience. A server-based logical access con-

trol solution generally needs to be interoperable with

data coming from many different biometric readers

since not every platform in the organization will use

the same model of the biometric reader. Interoperabil-

ity can be accomplished at either the enrollment tem-

plate level or the biometric image level. Lastly, since a

server-based solution typically stores biometric cre-

dentials in a central database, the security model of

the whole chain from the reader to the server must be

considered to protect against hackers and maintain

user privacy. However, unlike government deploy-

ments that store the user’s actual biometric image(s)

for archival purposes, a biometric solutio n used for

enterprise authentication typically stores only the bio-

metric enrollment templates.

▶ Access Control, Logical

Logico-Linear Operator

An operation in signal processing that brid ges the gulf

between linear operations, such as filtering, and the

logical calculus of Boolean operators such as AND,

OR, and XOR. In doing so, a logico-linear operator

serves as a kind of signal-to-symbol converter. The input

to the operator is a continuous signal such as a sound

waveform or an image, upon which a linear operation

is performed such as computing some derivative, or

convolving with some filter. The output of the linear

operation is converted into a logical state by, for exam-

ple, comparing to a threshold, noting its sign, quantiz-

ing its phase or its modulus if complex, or some more

abstract binary class ification. The resulting Boolean

quantity can be used as a logical operand for purposes

such as detecting similarity and differences (XOR),

motion between image frames (XOR), region growing

(OR), masking (AND) of some data by other data,

950

L

Localization Inaccuracy

descriptions of complexity or of graph structure, veto-

ing (NAND), machine learning, and so forth. Iris

encoding and recognition is performed through the

use of logico-linear operators.

▶ Iris Encoding and Recognition using Gabor

Wavelets

Logon, Password Management

▶ Access Control, Logical

Luminance

In photometry, luminance is a measure of the density

of illumination describing the amount of light that

passes through or is emitted from a particular area,

and falls within a given solid angle. In the context of

color perception, luminance indicates the perceived

brightness (or lightness) of a given color. In color

spaces that separate the luminance in a separate chan-

nel (such as the Y channel in the YUV color space), the

luminance channel of an image is equivalent to a gray

level version of that image.

▶ Gait Recognition, Motion Analysis for

Luminance

L

951

L

M

Machine-Generated Fingerprint

Classes

Fingerprints are grouped based on some similarity

criteria in the feature space. Fingerprint groups are

formed by machine learning from finge rprint samples

in an unsuper vised manner such as clustering and

binning. Such fingerprint groups are called machine-

generated fingerprint classes. The goal of partitioning

the database into machine-generated fingerprint clas-

ses is to divide the fingerprint population into as many

classes as possible while maximizing the possibility of

placing the fingerprints of a same finger into a same

class in a consistent and reliable way.

▶ Fingerprint Classification

Machine-Learning

A type of algorithm that learns from past experience to

make decisions.

▶ Incremental Learning

▶ Palmprint Matching

Magnification

In optical imaging, the ratio of the dimensions of the

image created by the optical system to the dimensions of

the object that is imaged. The ratio can be less than one.

▶ Iris Device

Mahalanobis Distance

The Mahalanobis distance is based on the covariance

among variables in the feature vectors which are com-

pared. It has the advantage of utilizing group means

and variances for each variable and the problems

of scale and correlation inherent in the Euclidean dis-

tance are no longer an issue. When using Euclidean

distance, the set of points equidistant from a given

location is a sphere. The Mahalanobis distanc e stretches

this sphere to correct the respective scales of different

variables and to account for correlation among variables.

▶ Hand Shape

▶ Signature Matching

Malicious-code-free Operating

System

▶ Tamper-proof Operati ng System

Manifold

Manifold is a non-empty subset M of R

N

such that the

neighborhood of every point p 2 M resembles a Eu-

clidean space. A smooth manifold is associated with a set

of homeomorphisms that map points from open subsets

around every point p to points in open subsets in R

m

,

where

m

is the intrinsic dimensionality of the manifold.

▶ Gait Recognition, Motion Analysis for

▶ Manifold Learning

▶ Non-linear Techniques for Dimension Reduction

#

2009 Springer Science+Business Media, LLC

Manifold Embedding

Any manifold is embedded in an Euclidean space, e.g.,

a sphere in the 3D world is a two-dimensional mani-

fold embedded in a three-dimensional space.

▶ Gait Recognition, Motion Analysis for

Manifold Learning

PHILIPPOS MORDOHAI

1

,GE

´

RARD MEDIONI

2

1

Stevens Institute of Technology, PA, USA

2

University of Southern California, Los Angeles,

CA, USA

Definition

Manifold learning is the process of estimating the

structure of a

▶ manifold from a set of samples, also

referred to as obser vations or instances, taken from the

manifold. It is a subfield of machine learning that

operates in continuous domains and learns from

observations that are represented as points in a Euclid-

ean space, referred to as the

▶ ambient space. This type

of learning, to Mitchell, is termed instance-based or

memory-based learning [1]. The goal of such learning

is to discover the underlying relationships between

observations, on the assumption that they lie in a limited

part of the space, typically a manifold, the

▶ intrinsic

dimensionality of a manifold of which is an indication

of the degrees of freedom of the underlying system.

Introduction

Manifold learning has attracted considerable attention

of the machine learning community, due to a wide

spectrum of applications in domains such as pattern

recognition, data mining, biometrics, function approx-

imation and visualization. If the manifolds are linear,

techniques such as the Principal Component Analysis

(PCA) [2] and Multi-Dimensional Scaling (MDS) [3]

are very effective in discovering the subspace in which

the data lie. Recently, a number of new algorithms that

not only advances the state of the art, but are also

capable of learning nonlinear manifolds in spaces of

very high dimensionality have been reported in the

literature. These include locally linear embedding

(LLE) [4], Isomap [5] and the charting algorithm [6].

They aim at reducing the dimensionality of the input

space in a way that preserves certain geometric or

statistical properties of the data. Isomap, for instance,

preserves the

▶ geodesic distances between all points as

the manifold is ‘‘unfolded’’ and mapped to a space of

lower dimension.

Given a set of observations, which are represented

as vectors, the typical steps of processing are as follows:

Intrinsic dimensionality estimation

Learning structure of the manifold

Dimensionality reduction to remove redundant

dimensions, preserving the learned manifold

structure.

Not all algorithms perform all steps. For instance, LLE

[4] and the Laplacian eigenmaps algorithm [7] require

an estimate of the dimensionality to be provided

externally. The method proposed by Mordohai and

Medioni [8], which is based on tensor voting, does

not reduce the dimensionality of the space, but per-

forms all operations in the original ambient space.

Recent methods used for these tasks are discussed

in this essay. Dimensionality reduction is described in

conjunction with manifold learning since it is often

closely tied with the selected manifold learning algo-

rithm. In addition, research on manifold learning with

applications in biometrics is highlighted.

Intrinsic Dimensionality Estimation

Bruske and Sommer [9] who proposed an approach an

optimally topology preserving map (OTPM) is con-

structed for a subset of the data. Principal Component

Analysis (PCA) is then performed for each node of

the OTPM on the assumption that the underlying

structure of the data is locally line ar. The average of

the number of significant singular values at the nodes

is the estimate of the intrinsic dimensionality.

Ke

´

gl [10] estimated the capacity dimension of

a manifold, which is equal to the topological dimen-

sion and does not depend on the distribution of

the data, using an efficient approximation based on

packing numbers. The algorithm takes into account

954

M

Manifold Embedding

dimensionality variations with scale and is based on a

geometric property of the data. This approach differs

from the PCA-related methods that employ succes-

sive projections to increasingly higher-dimensional sub-

spaces until a certain percentage of the data is explained.

Raginsky and Lazebnik [11] described a family of

dimensionality estimators based on the concept of

quantization dimension. The family is parameterized

by the distortion exponent and includes Ke

´

gl’s method

[10] when the distortion exponent tends to infinity. The

authors demonstrated that small values of the distortion

exponent yield estimators that are more robust to noise.

Costa and Hero [12] estimated the intrinsic dimen-

sion of the manifold and the entropy of the samples.

Using geodesic-minimal-spanning trees, the method,

like Isomap [5], considers global properties of the adja-

cency graph and thus produces a single global estimate.

The radius of the spheres is selected in such a way

that they contain enough points and that the density of

the data contained in them can be assumed constant.

These requirements tend to underestimate of the dimen-

sionality when it is very high.

The method proposed by Mordohai and Medioni

[14] obtains estimates of local intrinsic dimensionality

at the point level. Tensor voting enables the estimation

of the normal subspace of the most salient manifold

passing through each point. The normal subspace is

estimated locally by collecting at each point votes from

its neighbors. Tensor voting is a pairwise operation in

which all points act as voters casting votes to their

neighbors and as receivers collecting votes from their

neighbors . These votes encode geometric information

on the dimensionality and orientation of the local

subspace of the receiver on the assumption that the

voter and receiver belong to the same structure (mani-

fold). The dimensionality of the estimated normal

subspace is given by the maximum gap in the eigenva-

lues of a second order tensor that represents the accu-

mulated votes at the point. The intrinsic dimensionality

of the manifold at the point under consideration is

computed as the dimensionality of the ambient space

minus that of the normal subspace.

Manifold Learning and Dimensionality

Reduction

Scho

¨

lkopf et al. [15] proposed the underlying as-

sumption o f is that if the data lie on a locally linear,

low-dimensional manifold, then each point can be

reconstructed from its neighbors with appropriate

weights. These weights should be the same in a low-

dimensional space, the dimensionality of which is greater

than or equal to the intrinsic dimensionality of the man-

ifold. The LLE algorithm computes the basis of such

a low-dimensional space. The dimensionality of the

embedding, however, has to be given as a parameter,

since it cannot always be estimated from the data. More-

over, the output is an embedding of the given data, but

not a mapping from the ambient to the

▶ embedding

space. The LLE is not isometric and often fails by

mapping distant points close to each other.

Isomap, an extension of the MDS, developed by

Tenenbaum et al. [5] uses geodesic instead of Euclide-

an distances and can thus be applied to nonlinear

manifolds. The geodesic distances between points are

approximated by graph distances. MDS is then applied

to the geodesic distances to compute an embedding

that preserves the property of points to be close or far

away from each oth er. Isomap can handle points not

in the original dataset, and perform interpolation, but

it is limited to convex datase ts.

The Laplacian eigenmaps algorithm, developed by

Belkin and Niyogi [7] computes the normalized graph

Laplacian of the adjacency graph of the input data,

which is an approximation of the Laplace-Beltrami

operator, on the manifold. It exploits locality preserv-

ing properties that were first observed in the field of

clustering. The Laplacian eigenmaps algorithm can be

viewed as a generalization of LLE, since the two be-

come identical when the weights of the graph are

chosen according to the criterion of the latter. As in

the case of the LLE, the dimensionality of the manifold

also has to be provided, the computed embeddings are

not isometric and a mapping between the two spaces

is not produced.

Donoho and Grimes [16] proposed the Hessian

LLE (HLLE), an approach similar to the Laplacian

eigenmaps. It computes the Hessian instead of the

Laplacian of the graph. The authors alleged that the

Hessian is better suited than the Laplacian for detect-

ing linear patches on the manifold. The major contri-

bution of this approach is that it proposes a global,

isometric method, which, unlike the Isomap, can be

applied to non-convex datasets. The need to estimate

second derivatives from possibly noisy, discrete data

makes the algorithm more sensitive to noise than the

others approaches.

Manifold Learning

M

955

M

Other related research includes the charting

algorithm of Brand [6], which computes a pseudo-

invertible mapping of the data as well as the intrinsic

dimensionality of the manifold. The dimensionality is

estimated by examining the rate of growth of the num-

ber of points contained in hyper-spheres as a function of

the radius. Linear patches, areas of curvature and noise

can be correctly classified using the proposed measure.

At a subsequent stage, a global coordinate system for

the embedding is defined. This produces a mapping

between the input space and the embedding space.

Weinberger and Saul [17] developed Semidefinite

Embedding (SDE) which addresses manifold learning

by enforcing local isometry . The lengths of the sides of

triangles formed by neighboring points are preserved

during the embedding. These constraints can be

expressed in terms of pairwise distances and the optimal

embedding can be found by semidefinite programming.

The method is computationally demanding, but can reli-

ably estimate the underlying dimensionality of the inputs

by locating the largest gap between the eigen values of the

Gram matrix of the outputs. As in the case of the authors’

approach , this estimate does not require a threshold.

The method for manifold learning described [8]by

Mordohai and Medioni [8] is based on inferring the

geometric properties of the manifold locally via tensor

voting. An estimate of the local tangent space allows one

to traverse the manifold estimating geodesic distances

between points and generating novel observations on the

manifold. In this method it is not necessary that the

manifold is differentiable, or even connected. It can

process data from intersecting manifolds with different

dimensionality and is very robust to outliers. Unlike

most of the other approaches, the authors did not per-

form dimensionality reduction, but conducted all opera-

tions in the ambient space instead. If dimensionality

reduction is desired for visualization or memory saving,

any technique can be applied after this.

Applications

There are two main areas of application of manifold

learning techniques in biometrics: estimation of the

degrees of freedom of the data and visualization.

Given labeled data, the degrees of freedom can be

separated into those that are related to the identity of

the subject and those that are due to other factors, such

as pose. Visualization is enabled by reducing the

dimensionality of the data to two or three to generate

datasets suitable for display. This can be achieved by

selecting the most relevant dimensions of the manifold

and mapping them to a linear 2-D or 3-D space.

An example of both visualization and estimation of

the important mode s of variability of face images has

been discussed by [4]. The input is a set of images of the

face of a single person undergoing expression and view-

point changes. The images are vectorized, that is the

pixels of each 28*20 image are stacked to form a 560-D

vector, and used as observations. LLE is able to deter-

mine the two most dominant degrees of freedom which

are related to head pose and expression variations.

Embedding the manifold from the 560-D ambient

space to a 2-D space provides a visualization in which

similar images appear close to each other. Similar experi-

ments have been described in Tenenbaum et al. [5].

Prince and Elder [18] addressed the issue of face

recognition from a manifold learning perspective by

creating invariance to degrees of freedom that do not

depend on identity. They labeled these degrees of free-

dom, namely, pose and illumination, ‘‘nuisance para-

meters’’ and were able to isolate their effects using a

training dataset in which the value of the nuisance para-

meters is known and each individual has at least two

different values of each nuisance parameter. The images

are converted to 32 32 and subsequently to 1024-D

vectors. Varying a nuisance parameter generates a mani-

fold, which has little value for recognition. Therefore,

once these manifolds are learned, their observations are

mapped to a single point, which corresponds to the

identity of the imaged person, in a new space.

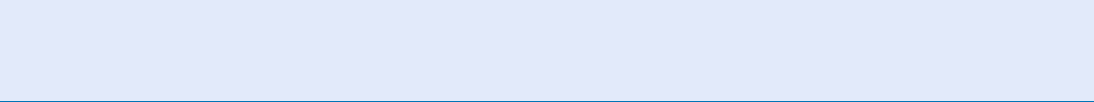

Liao and Medioni [19] studied face tracking and

expression inference from video sequences using tensor

voting to learn manifolds that corr espond to basic expres-

sions, such as smile and surprise. During training, land-

mark points are tracked in the video sequence and their

3-D positions are obtained using a 3-D model of the head.

Facial deformation manifolds are learned from labeled

sequences of the basic expressions. A parameter that cor-

responds to the magnitude of the expression is estimated

for each frame. During testing, the observation vector is

the position of the landmarks and the goal is to jointly

estimate head pose and the magnitude of each expression.

This is accomplished by computing the probability that

the observation was generated by each manifold. The

posterior probability is inferred using a combination

model of all manifolds. Some results of deformable track-

ing and expression inference are presented in Fig. 1.

956

M

Manifold Learning

Summary

Manifold learning techniques have attracted consider-

able attention in the last few years, because of their

ability to untangle information in high dimensional

spaces and reveal the degrees of freedom of the under-

lying process. This essay presents an overview of the

state of the ar t in intrinsic dimensionality estimation

and manifold learning. These algorithms can be de-

ployed in the field of biometrics, where high dimension-

al data exist in large volumes, to discover and learn the

dimensionality and local structure of manifolds formed

by biometric measurements. Different observations on

the manifold are due to variations in identity or other

factors, which may be unimportant for many applica-

tions. Given training data, in which variations between

samples have been labeled according to the factor that

caused them, manifold learning techniques can estimate

a mapping from a measurement to identity, pose, facial

expression or any other variable of interest.

Related Entries

▶ Kernel Methods

▶ Machine-Learning

▶ Classifier Design

▶ Probability Distribution

References

1. Mitchell, T.: Machine Learning. McGraw-Hill, New York (1997)

2. Jolliffe, I.: Principal Component Analysis. Springer, New York

(1986)

3. Cox, T., Cox, M.: Multidimensional Scaling. Chapman & Hall,

London (1994)

4. Roweis, S., Saul, L.: Nonlinear dimensionality reduction by

locally linear embedding. Science 290, 2323–2326 (2000)

5. Tenenbaum, J., de Silva, V., Langford, J.: A global geometric

framework for nonlinear dimensionality reduction. Science

290, 2319–2323 (2000)

6. Brand, M.: Charting a manifold. In: Advances in Neural Infor-

mation Processing Systems 15, pp. 961–968. MIT, Cambridge,

MA (2003)

7. Belkin, M., Niyogi, P.: Laplacian eigenmaps for dimensionality

reduction and data representation. Neural Comput. 15(6),

1373–1396 (2003)

8. Mordohai, P., Medioni, G.: Tensor Voting: A Perceptual Organi-

zation Approach to Computer Vision and Machine Learning.

Morgan & Claypool, San Rafael, CA (2006)

9. Bruske, J., Sommer, G.: Intrinsic dimensionality estimation with

optimally topology preserving maps. IEEE Trans. Pattern Analy.

Mach. Intell. 20(5), 572–575 (1998)

10. Ke

´

gl, B.: Intrinsic dimension estimation using packing numbers.

In: Advances in Neural Information Processing Systems 15,

pp. 681–688. MIT, Cambridge, MA (2003)

Manifold Learning. Figure 1 Top row: Some frames from test video sequences [19]. Middle row: Visualization of the

positions of the landmarks that show the estimated pose as well as the estimated deformation that corresponds to the

inferred magnitude of each expression. Bottom row: Probability of basic expressions. Since each frame corresponds to a

single expression, only one model in the mixture has a high probability.

Manifold Learning

M

957

M

11. Raginsky, M., Lazebnik, S.: Estimation of intrinsic dimensional-

ity using high-rate vector quantization. In: Advances in Neural

Information Processing Systems 18, pp. 1105–1112. MIT,

Cambridge, MA (2006)

12. Costa, J., Hero, A.: Geodesic entropic graphs for dimension and

entropy estimation in manifold learning. IEEE Trans. Signal

Process 52(8), 2210–2221 (2004)

13. Levina, E., Bickel, P.: Maximum likelihood estimation of intrin-

sic dimension. In: Advances in Neural Information Processing

Systems 17, pp. 777–784. MIT, Cambridge, MA (2005)

14. Mordohai, P., Medioni, G.: Unsupervised dimensionality esti-

mation and manifold learning in high-dimensional spaces by

tensor voting. International Joint Conference on Artificial Intel-

ligence (2005)

15. Scho

¨

lkopf, B., Smola, A., Mu

¨

ller, K.R.: Nonlinear component

analysis as a kernel eigenvalue problem. Neural Comput. 10(5),

1299–1319 (1998)

16. Donoho, D., Grimes, C.: Hessian eigenmaps: new tools for

nonlinear dimensionality reduction. In: Proceedings of National

Academy of Science, pp. 5591–5596 (2003)

17. Weinberger, K.Q., Saul, L.K.: Unsupervised learning of image

manifolds by semidefinite programming. Int. J. Comput. Vis.

70(1), 77–90 (2006)

18. Prince, S., Elder, J.: Creating invariance to ‘nuisance parameters’

in face recognition. In: International Conference on Computer

Vision and Pattern Recognition, II: pp. 446–453 (2005)

19. Liao, W.K., Medioni, G.: 3D face tracking and expression inference

from a 2D sequence using manifold learning. In: International

Conference on Computer Vision and Pattern Recognition (2008)

Manual Annotation

The manual annotation or description of an outsole

involves a trained professional assigning a number of

predefined pattern descriptors to the tread pattern.

The palette of available descriptors is usually quite

small and somewhat general or abstract in interpreta-

tion. For example, there may be descriptor terms such

as wavy, linked, curved, zig zag, circular, simple geo-

metric, and complex. This makes the annotation task

quite subjective and inconsistent and hence must be

complete by trained professionals.

▶ Footwear Recognition

Margin Classifier

▶ Support Vector Machine

Markerless 3D Human Motion

Capture from Images

P. F UA

EPFL, IC-CVLab, Lausanne, Switzerland

Synonyms

Motion recovery 3D; Video-based motion capture

Definition

Markerless human motion capture from images entails

recovering the successive 3D poses of a human body

moving in front of one or more cameras, which should

be achieved without additional sensors or markers

to be worn by the person. The 3D poses are usually

expressed in terms of the joint angles of a kinematic

model including an articulated skeleton and volumet-

ric primitives designed to approximate the body shape.

They can be used to analyze, modify, and re-synthesize

the motion. As no two people move in exactly the same

way, they also constitute a signature that can be used

for identification purposes.

Introduction

Understanding and recording human and other verte-

brate motion from images is a longstanding interest.

In its modern form, it goes back at least to Edward

Muybridge [1] and Etienne-Jules Marey [2] in the

nineteenth centur y. They can be considered as the pre-

cursors of human motion and animal locomotion

analysis from video. Muybridge used a battery of pho-

tographic cameras while Marey designed an early

‘‘video camera’’ to capture motions such as the one of

Fig. 1a. In addition to creating beautiful pictures, they

pioneered image-based motion capture, motion anal-

ysis, and motion measurements.

Today, more than 100 years later, automating this

process remains an elusive goal because humans

have a complex ar ticulated geometry overlaid with

deformable tissues, skin, and loose clothing. They

move constantly, and their motion is often rapid,

complex, and self-occluding. Commercially avail-

able

▶ motion capture systems are cumbersome or

958

M

Manual Annotation

expensive or both because they rely on infra-red

or magnetic sensors, lasers, or targets that must be

worn by the subject. Furthermore, they usually work

best in controlled environments. Markerless video-

based systems have the potential to address these

problems but, until recently, they have not been reli-

able enough to be used practically. This situation

is now changing and they are fast becoming an attrac-

tive alternative.

Video-based motion capture is comparatively simpler

if multiple calibrated cameras can be used simultaneously .

In particular, if camera motion and background scenes

are controlled, it is easy to extract the body outlines.

These techniques can be very effective and commercial

systems are now available. By contrast, in natural scenes

with cluttered backgrounds and significant depth vari-

ation, the problem remains very challenging, especially

when a single camera is used. However, it is worth

addressing because solving it will result in solutions

far easier to deploy and more generally applicable

than the existing ones.

Success will make it possible to routinely use video-

based motion capture to recognize people and charac-

terize their motion for biometric purposes. It will also

make our interaction with computers, able to perceive

our gestures much more natural; allow the quantitati ve

analysis of the movements ranging from those of ath-

letes at sports events to those of patients whose

locomotive skills are impaired; useful to capture mo-

tion sequences outside the laboratory for realistic ani-

mation and sy nthesis purposes; make possible the

analysis of people’s motion in a surveillance context;

or facilitate the indexing of visual media. In short, it

has many potential mass-market applications.

Methodology

This section briefly reviews the range of techniques

that have be en developed to overcome the difficulties

inherent to 3D body motion modeling from images.

This modeling is usually done by recovering the joint

angles of a

▶ kinematic model that represents the

subject’s body, as shown in Fig. 1d. The author dis-

tinguishes between multi-camera and single-camera

techniques because the former are more robust but

require much more elaborate setups, which are not

necessarily appropriate for biometrics applications.

This section also discusses the use of

▶ pose and

motion mode ls, which have proved very effective at

disambiguating difficult situations. For all the techni-

ques introduced a few representative papers are listed.

However, the author does not attempt to be exhaustive

to prevent the reference list of this essay from contain-

ing several hundred entries. For a more extensive

analysis, please refer [3, 4].

Markerless 3D Human Motion Capture from Images. Figure 1 Two centuries of video-based motion capture.

(a) Chronophotography by Marey at the end of the nineteenth century [2]. (b) Multi-camera setup early in the twenty-first

century with background images at the top and subject’s body outline overlaid in white at the bottom [3]. (c)Video

sequence with overlaid body outlines and corresponding visual hulls [3]. (d) Articulated skeleton matched to the visual hulls [3].

Markerless 3D Human Motion Capture from Images

M

959

M