Tanenbaum A. Computer Networks

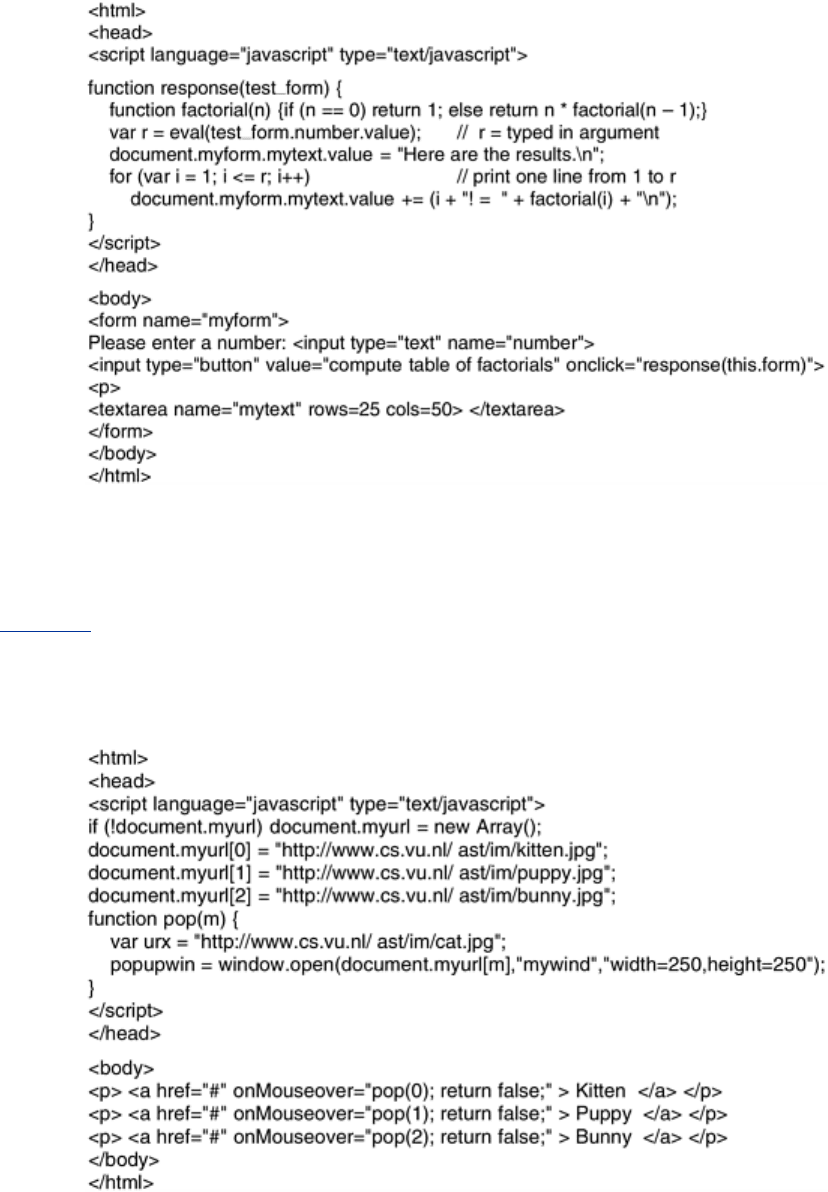

JavaScript can also track mouse motion over objects on the screen. Many JavaScript Web

pages have the property that when the mouse cursor is moved over some text or image,

something happens. Often the image changes or a menu suddenly appears. This kind of

behavior is easy to program in JavaScript and leads to lively Web pages. An example is given

in Fig. 7-39.

Figure 7-39. An interactive Web page that responds to mouse movement.

JavaScript is not the only way to make Web pages highly interactive. Another popular method
is through the use of applets. These are small Java programs that have been compiled into
machine instructions for a virtual computer called the JVM (Java Virtual Machine). Applets
can be embedded in HTML pages (between <applet> and </applet>) and interpreted by
JVM-capable browsers. Because Java applets are interpreted rather than directly executed, the
Java interpreter can prevent them from doing Bad Things. At least in theory. In practice, applet
writers have found a nearly endless stream of bugs in the Java I/O libraries to exploit.

Microsoft's answer to Sun's Java applets was allowing Web pages to hold ActiveX controls,

which are programs compiled to Pentium machine language and executed on the bare

hardware. This feature makes them vastly faster and more flexible than interpreted Java

applets because they can do anything a program can do. When Internet Explorer sees an

ActiveX control in a Web page, it downloads it, verifies its identity, and executes it. However,

downloading and running foreign programs raises security issues, which we will address in

Chap. 8.

Since nearly all browsers can interpret both Java programs and JavaScript, a designer who

wants to make a highly-interactive Web page has a choice of at least two techniques, and if

portability to multiple platforms is not an issue, ActiveX in addition. As a general rule,

JavaScript programs are easier to write, Java applets execute faster, and ActiveX controls run

fastest of all. Also, since all browsers implement exactly the same JVM but no two browsers

implement the same version of JavaScript, Java applets are more portable than JavaScript

programs. For more information about JavaScript, there are many books, each with many

(often > 1000) pages. A few examples are (Easttom, 2001; Harris, 2001; and McFedries,

2001).

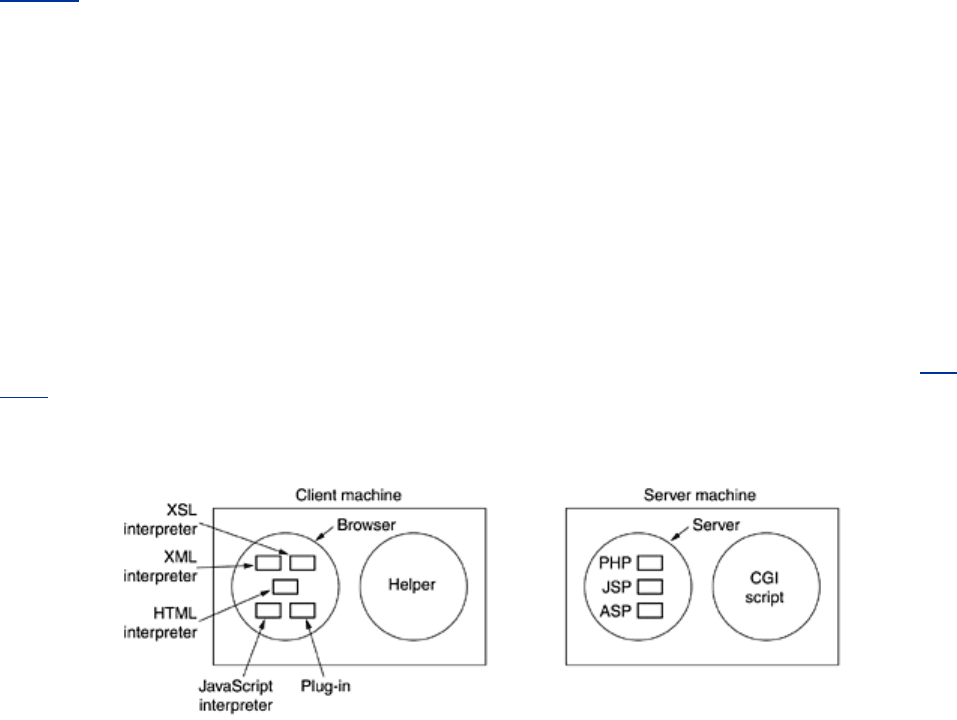

Before leaving the subject of dynamic Web content, let us briefly summarize what we have

covered so far. Complete Web pages can be generated on-the-fly by various scripts on the

server machine. Once they are received by the browser, they are treated as normal HTML

pages and just displayed. The scripts can be written in Perl, PHP, JSP, or ASP, as shown in

Fig. 7-40.

Figure 7-40. The various ways to generate and display content.

Dynamic content generation is also possible on the client side. Web pages can be written in

XML and then converted to HTML according to an XSL file. JavaScript programs can perform

arbitrary computations. Finally, plug-ins and helper applications can be used to display content

in a variety of formats.

7.3.4 HTTP—The HyperText Transfer Protocol

The transfer protocol used throughout the World Wide Web is HTTP (HyperText Transfer
Protocol). It specifies what messages clients may send to servers and what responses they

get back in return. Each interaction consists of one ASCII request, followed by one RFC 822

MIME-like response. All clients and all servers must obey this protocol. It is defined in RFC

2616. In this section we will look at some of its more important properties.

Connections

The usual way for a browser to contact a server is to establish a TCP connection to port 80 on

the server's machine, although this procedure is not formally required. The value of using TCP

is that neither browsers nor servers have to worry about lost messages, duplicate messages,

long messages, or acknowledgements. All of these matters are handled by the TCP

implementation.

In HTTP 1.0, after the connection was established, a single request was sent over and a single

response was sent back. Then the TCP connection was released. In a world in which the typical

Web page consisted entirely of HTML text, this method was adequate. Within a few years, the

average Web page contained large numbers of icons, images, and other eye candy, so

establishing a TCP connection to transport a single icon became a very expensive way to

operate.

This observation led to HTTP 1.1, which supports persistent connections. With them, it is

possible to establish a TCP connection, send a request and get a response, and then send

additional requests and get additional responses. By amortizing the TCP setup and release over

multiple requests, the relative overhead due to TCP is much less per request. It is also possible

to pipeline requests, that is, send request 2 before the response to request 1 has arrived.
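The effect of a persistent connection can be observed with a small sketch. It is only an illustration under stated assumptions: the server is a throwaway one started on the loopback interface with Python's standard `http.server`, and the names `DemoHandler`, `fetch_all`, `run_demo`, and `seen_client_ports` are invented for this example. The client sends its requests one after another on the same connection; pipelining is not shown.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Client TCP port numbers seen by the server, one entry per request handled.
seen_client_ports = []


class DemoHandler(BaseHTTPRequestHandler):
    # Answering as HTTP/1.1 with a Content-Length lets the connection stay open.
    protocol_version = "HTTP/1.1"

    def do_GET(self):
        seen_client_ports.append(self.client_address[1])
        body = f"you asked for {self.path}\n".encode("ascii")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demonstration quiet


def fetch_all(host, port, paths):
    """Fetch several paths over one TCP connection, one request at a time."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    results = []
    try:
        for path in paths:
            conn.request("GET", path)
            response = conn.getresponse()
            # The body must be read completely before the next request is sent.
            results.append((response.status, response.read()))
    finally:
        conn.close()
    return results


def run_demo():
    """Start a local server, fetch two objects, and shut the server down."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), DemoHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        port = server.server_address[1]
        return fetch_all("127.0.0.1", port, ["/page.html", "/icon.gif"])
    finally:
        server.shutdown()
        server.server_close()
```

If both requests arrive from the same client port, they traveled over the same TCP connection, so the setup and release cost was paid only once.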

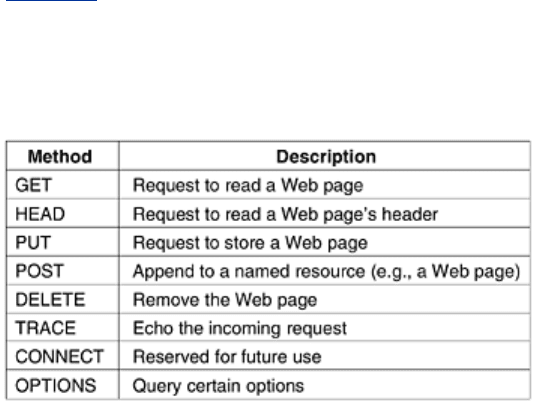

Methods

Although HTTP was designed for use in the Web, it has been intentionally made more general
than necessary with an eye to future object-oriented applications. For this reason, operations,
called methods, other than just requesting a Web page are supported. This generality is what
permitted SOAP to come into existence. Each request consists of one or more lines of ASCII
text, with the first word on the first line being the name of the method requested. The built-in
methods are listed in Fig. 7-41. For accessing general objects, additional object-specific
methods may also be available. The names are case sensitive, so GET is a legal method but
get is not.
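As a minimal sketch of how a server might take apart the first line of a request, consider the following. The function name `parse_request_line` is an assumption made for this example, and rejecting every method outside the built-in set is a simplification, since object-specific methods may also exist.

```python
# The eight built-in method names discussed in this section.
BUILT_IN_METHODS = {
    "GET", "HEAD", "PUT", "POST", "DELETE", "TRACE", "CONNECT", "OPTIONS",
}


def parse_request_line(line):
    """Split 'METHOD target HTTP/x.y' into (method, target, version)."""
    parts = line.rstrip("\r\n").split(" ")
    if len(parts) != 3:
        raise ValueError(f"malformed request line: {line!r}")
    method, target, version = parts
    # Method names are case sensitive, so the name is deliberately
    # compared as is, without converting it to upper case first.
    if method not in BUILT_IN_METHODS:
        raise ValueError(f"unknown method: {method!r}")
    if not version.startswith("HTTP/"):
        raise ValueError(f"bad protocol version: {version!r}")
    return method, target, version
```

With this sketch, `GET /index.html HTTP/1.1` is accepted, whereas the same line beginning with `get` raises an error.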

Figure 7-41. The built-in HTTP request methods.

The GET method requests the server to send the page (by which we mean object, in the most
general case, but in practice normally just a file). The page is suitably encoded in MIME. The
vast majority of requests to Web servers are GETs. The usual form of GET is

GET filename HTTP/1.1

where filename names the resource (file) to be fetched and 1.1 is the protocol version being
used.

The HEAD method just asks for the message header, without the actual page. This method can
be used to get a page's time of last modification, to collect information for indexing purposes,
or just to test a URL for validity.

The PUT method is the reverse of GET: instead of reading the page, it writes the page. This
method makes it possible to build a collection of Web pages on a remote server. The body of
the request contains the page. It may be encoded using MIME, in which case the lines
following the PUT might include Content-Type and authentication headers, to prove that the
caller indeed has permission to perform the requested operation.

Somewhat similar to PUT is the POST method. It, too, bears a URL, but instead of replacing the
existing data, the new data is "appended" to it in some generalized sense. Posting a message
to a newsgroup or adding a file to a bulletin board system are examples of appending in this
context. In practice, neither PUT nor POST is used very much.

DELETE does what you might expect: it removes the page. As with PUT, authentication and
permission play a major role here. There is no guarantee that DELETE succeeds, since even if
the remote HTTP server is willing to delete the page, the underlying file may have a mode that
forbids the HTTP server from modifying or removing it.

The TRACE method is for debugging. It instructs the server to send back the request. This
method is useful when requests are not being processed correctly and the client wants to know
what request the server actually got.

The CONNECT method is not currently used. It is reserved for future use.

The OPTIONS method provides a way for the client to query the server about its properties or
those of a specific file.

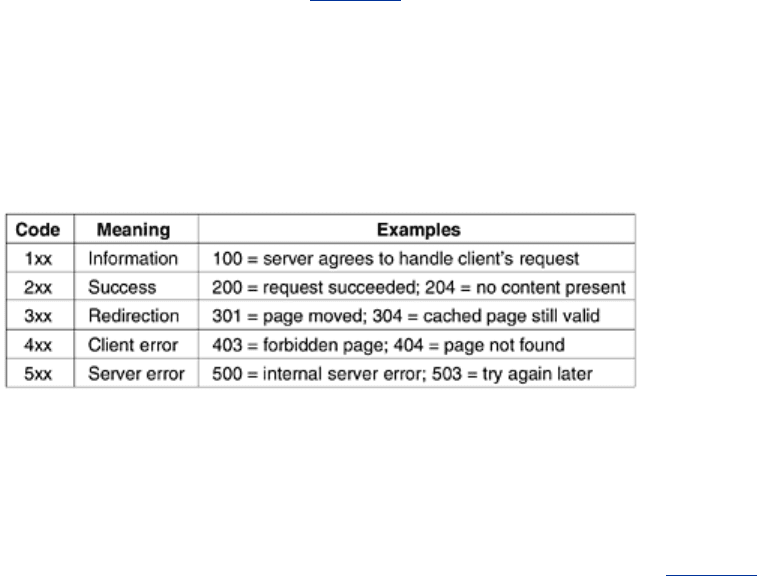

Every request gets a response consisting of a status line, and possibly additional information

(e.g., all or part of a Web page). The status line contains a three-digit status code telling

whether the request was satisfied, and if not, why not. The first digit is used to divide the

responses into five major groups, as shown in Fig. 7-42. The 1xx codes are rarely used in

practice. The 2xx codes mean that the request was handled successfully and the content (if

any) is being returned. The 3xx codes tell the client to look elsewhere, either using a different

URL or in its own cache (discussed later). The 4xx codes mean the request failed due to a

client error such as an invalid request or a nonexistent page. Finally, the 5xx errors mean the

server itself has a problem, either due to an error in its code or to a temporary overload.
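Because the group is determined by the first digit alone, a client can classify a status code it has never seen before. A minimal sketch follows; the function name `status_group` and the exact wording of the labels are choices made for this example.

```python
# Meaning of the first digit of a three-digit status code.
STATUS_GROUPS = {
    1: "information",
    2: "success",
    3: "redirection",
    4: "client error",
    5: "server error",
}


def status_group(code):
    """Return the response group for a three-digit HTTP status code."""
    if not 100 <= code <= 599:
        raise ValueError(f"not a valid HTTP status code: {code}")
    return STATUS_GROUPS[code // 100]
```

For example, `status_group(404)` yields `"client error"` and `status_group(304)` yields `"redirection"`.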

Figure 7-42. The status code response groups.

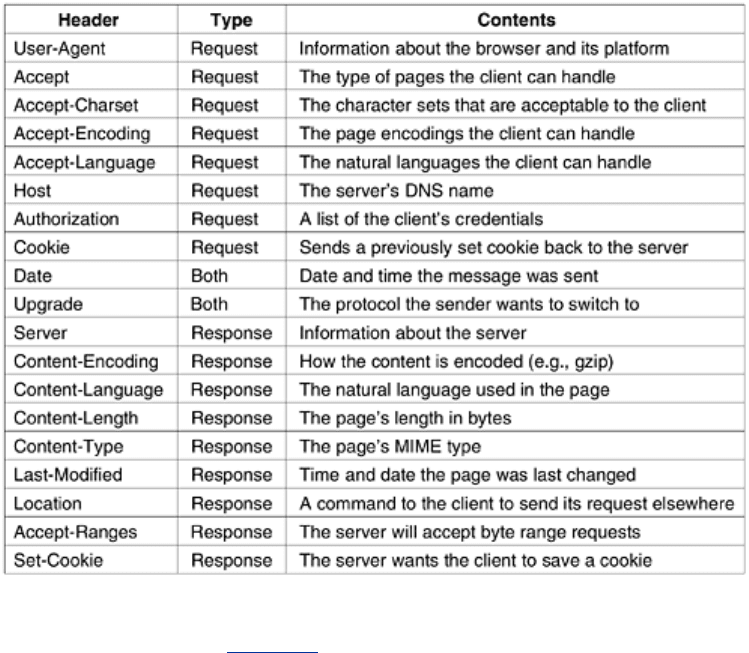

Message Headers

The request line (e.g., the line with the GET method) may be followed by additional lines with
more information. They are called request headers. This information can be compared to the
parameters of a procedure call. Responses may also have response headers. Some headers
can be used in either direction. A selection of the most important ones is given in Fig. 7-43.

Figure 7-43. Some HTTP message headers.

The User-Agent header allows the client to inform the server about its browser, operating
system, and other properties. In Fig. 7-34 we saw that the server magically had this
information and could produce it on demand in a PHP script. This header is used by the client
to provide the server with the information.

The four Accept headers tell the server what the client is willing to accept in the event that it
has a limited repertoire of what is acceptable. The first header specifies the MIME types that
are welcome (e.g., text/html). The second gives the character set (e.g., ISO-8859-5 or
Unicode-1-1). The third deals with compression methods (e.g., gzip). The fourth indicates a
natural language (e.g., Spanish). If the server has a choice of pages, it can use this information
to supply the one the client is looking for. If it is unable to satisfy the request, an error code is
returned and the request fails.

The Host header names the server. It is taken from the URL. This header is mandatory. It is
used because some IP addresses may serve multiple DNS names and the server needs some
way to tell which host to hand the request to.
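The way a server that answers for several DNS names on one IP address might use the Host header can be sketched as follows. The function `select_site`, the host names, and the directory names are all hypothetical, and the sketch ignores complications such as IPv6 address literals.

```python
def select_site(raw_request, sites):
    """Return the entry of `sites` named by the request's Host header.

    `raw_request` is the request text with CRLF line endings; `sites`
    maps lower-case DNS names to whatever identifies each hosted site.
    """
    header_lines = raw_request.split("\r\n")[1:]  # skip the request line
    for line in header_lines:
        if line == "":
            break  # the blank line marks the end of the headers
        name, _, value = line.partition(":")
        # Header names are not case sensitive, unlike method names.
        if name.strip().lower() == "host":
            host = value.strip().lower().split(":")[0]  # drop any port
            return sites[host]
    raise ValueError("request has no Host header")


# Two hypothetical sites sharing one server.
SITES = {
    "www.example.com": "/srv/example-com",
    "www.example.org": "/srv/example-org",
}
```

Without the header, both sites would receive identical-looking requests for `/index.html`, and the server could not tell them apart.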

The Authorization header is needed for pages that are protected. In this case, the client may
have to prove it has a right to see the page requested. This header is used for that case.

Although cookies are dealt with in RFC 2109 rather than RFC 2616, they also have two
headers. The Cookie header is used by clients to return to the server a cookie that was
previously sent by some machine in the server's domain.

The Date header can be used in both directions and contains the time and date the message
was sent. The Upgrade header is used to make it easier to make the transition to a future
(possibly incompatible) version of the HTTP protocol. It allows the client to announce what it
can support and the server to assert what it is using.

Now we come to the headers used exclusively by the server in response to requests. The first
one, Server, allows the server to tell who it is and some of its properties if it wishes.

The next four headers, all starting with Content-, allow the server to describe properties of the

page it is sending.

The Last-Modified header tells when the page was last modified. This header plays an
important role in page caching.

The Location header is used by the server to inform the client that it should try a different URL.
This can be used if the page has moved or to allow multiple URLs to refer to the same page
(possibly on different servers). It is also used for companies that have a main Web page in the
com domain, but which redirect clients to a national or regional page based on their IP address
or preferred language.

If a page is very large, a small client may not want it all at once. Some servers will accept
requests for byte ranges, so the page can be fetched in multiple small units. The Accept-Ranges
header announces the server's willingness to handle this type of partial page request.

The second cookie header, Set-Cookie, is how servers send cookies to clients. The client is
expected to save the cookie and return it on subsequent requests to the server.

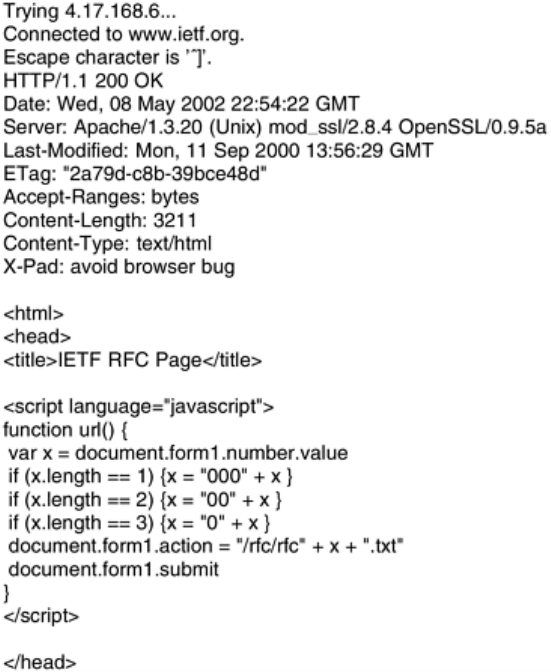

Example HTTP Usage

Because HTTP is an ASCII protocol, it is quite easy for a person at a terminal (as opposed to a

browser) to directly talk to Web servers. All that is needed is a TCP connection to port 80 on

the server. Readers are encouraged to try this scenario personally (preferably from a UNIX

system, because some other systems do not return the connection status). The following

command sequence will do it:

telnet www.ietf.org 80 >log
GET /rfc.html HTTP/1.1
Host: www.ietf.org

close

This sequence of commands starts up a telnet (i.e., TCP) connection to port 80 on IETF's Web
server, www.ietf.org. The result of the session is redirected to the file log for later inspection.
Then comes the GET command naming the file and the protocol. The next line is the
mandatory Host header. The blank line is also required. It signals the server that there are no
more request headers. The close command instructs the telnet program to break the
connection.
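The same exchange can be carried out from a short program instead of telnet. The sketch below is an illustration rather than a finished client: the function names are invented here, and the `Connection: close` header is an addition not present in the telnet session, included so that the server ends the connection after its reply and the read loop terminates.

```python
import socket


def build_get_request(host, path):
    """Return the bytes of a minimal HTTP/1.1 GET request."""
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host}",        # the mandatory Host header
        "Connection: close",    # addition: ask the server to close afterwards
        "",                     # the required blank line ending the headers
        "",
    ]
    # Lines in an HTTP request are terminated by carriage return + line feed.
    return "\r\n".join(lines).encode("ascii")


def fetch(host, path, port=80):
    """Send the request over TCP and return everything the server sends."""
    with socket.create_connection((host, port), timeout=10) as sock:
        sock.sendall(build_get_request(host, path))
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # an empty read means the server closed the connection
                break
            chunks.append(data)
    return b"".join(chunks)
```

Calling `fetch("www.ietf.org", "/rfc.html")` would contact the same server as the telnet session; what comes back depends on how that site is configured today.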

The log can be inspected using any editor. It should start out similarly to the listing in
Fig. 7-44, unless IETF has changed it recently.

Figure 7-44. The start of the output of www.ietf.org/rfc.html.

The first three lines are output from the telnet program, not from the remote site. The line

beginning HTTP/1.1 is IETF's response saying that it is willing to talk HTTP/1.1 with you. Then

come a number of headers and then the content. We have seen all the headers already except
for ETag, which is a unique page identifier related to caching, and X-Pad, which is nonstandard
and probably a workaround for some buggy browser.

7.3.5 Performance Enhancements

The popularity of the Web has almost been its undoing. Servers, routers, and lines are

frequently overloaded. Many people have begun calling the WWW the World Wide Wait. As a

consequence of these endless delays, researchers have developed various techniques for

improving performance. We will now examine three of them: caching, server replication, and

content delivery networks.

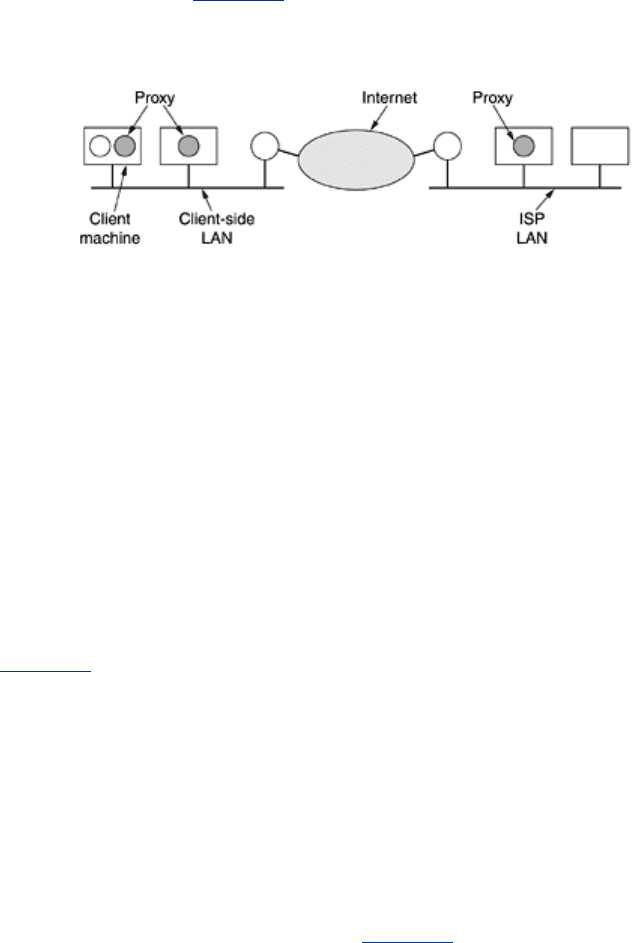

Caching

A fairly simple way to improve performance is to save pages that have been requested in case
they are used again. This technique is especially effective with pages that are visited a great
deal, such as www.yahoo.com and www.cnn.com. Squirreling away pages for subsequent use
is called caching. The usual procedure is for some process, called a proxy, to maintain the
cache. To use caching, a browser can be configured to make all page requests to a proxy
instead of to the page's real server. If the proxy has the page, it returns the page immediately.
If not, it fetches the page from the server, adds it to the cache for future use, and returns it
to the client that requested it.
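The behavior of such a proxy can be captured in a few lines. In this sketch the class name `CachingProxy` is invented, the function passed in as `fetch_from_server` stands in for a real network fetch, and pages are never evicted, which sidesteps the staleness question taken up later in this section.

```python
class CachingProxy:
    """Return pages from a local cache, fetching each one at most once."""

    def __init__(self, fetch_from_server):
        self._fetch = fetch_from_server  # callable: url -> page contents
        self._cache = {}
        self.hits = 0
        self.misses = 0

    def get(self, url):
        if url in self._cache:
            self.hits += 1
            return self._cache[url]      # page is cached: return it at once
        self.misses += 1
        page = self._fetch(url)          # otherwise go to the real server,
        self._cache[url] = page          # remember the page for future use,
        return page                      # and hand it to the client
```

The ratio of `hits` to total requests is the hit rate referred to when cache effectiveness is discussed.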

Two important questions related to caching are as follows:

1. Who should do the caching?

2. How long should pages be cached?

There are several answers to the first question. Individual PCs often run proxies so they can

quickly look up pages previously visited. On a company LAN, the proxy is often a machine

shared by all the machines on the LAN, so if one user looks at a certain page and then another

one on the same LAN wants the same page, it can be fetched from the proxy's cache. Many

ISPs also run proxies, in order to speed up access for all their customers. Often all of these

caches operate at the same time, so requests first go to the local proxy. If that fails, the local

proxy queries the LAN proxy. If that fails, the LAN proxy tries the ISP proxy. The latter must

succeed, either from its cache, a higher-level cache, or from the server itself. A scheme

involving multiple caches tried in sequence is called hierarchical caching. A possible
implementation is illustrated in Fig. 7-45.

Figure 7-45. Hierarchical caching with three proxies.
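A chain of caches of this kind might be modeled as shown below. This is a sketch under assumptions: the class `Cache`, the proxy names, and the stand-in `origin_server` function are all invented, and each level simply asks the next one up when it does not hold the page.

```python
class Cache:
    """One level in a chain of caches; `parent` is the next level up."""

    def __init__(self, name, parent=None, origin=None):
        if (parent is None) == (origin is None):
            raise ValueError("give exactly one of parent or origin")
        self.name = name
        self.parent = parent
        self.origin = origin  # only the top-level cache talks to the server
        self.pages = {}

    def get(self, url):
        """Return (page, name of the place that supplied it)."""
        if url in self.pages:
            return self.pages[url], self.name
        if self.parent is not None:
            page, source = self.parent.get(url)
        else:
            page, source = self.origin(url), "origin server"
        self.pages[url] = page  # keep a copy at this level as well
        return page, source


def origin_server(url):
    """Stand-in for the real Web server."""
    return f"<html>contents of {url}</html>"


# Two PCs on one LAN, whose proxy in turn uses the ISP's proxy.
isp = Cache("ISP proxy", origin=origin_server)
lan = Cache("LAN proxy", parent=isp)
pc_a = Cache("local proxy A", parent=lan)
pc_b = Cache("local proxy B", parent=lan)
```

When the first PC requests a page, the request travels all the way to the server; when the second PC on the same LAN asks for it, the LAN proxy already has a copy.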

The question of how long pages should be cached is a bit trickier. Some pages should not be cached at all. For

example, a page containing the prices of the 50 most active stocks changes every second. If it

were to be cached, a user getting a copy from the cache would get stale (i.e., obsolete) data.

On the other hand, once the stock exchange has closed for the day, that page will remain valid

for hours or days, until the next trading session starts. Thus, the cacheability of a page may

vary wildly over time.

The key issue with determining when to evict a page from the cache is how much staleness

users are willing to put up with (since cached pages are kept on disk, the amount of storage

consumed is rarely an issue). If a proxy throws out pages quickly, it will rarely return a stale

page but it will also not be very effective (i.e., have a low hit rate). If it keeps pages too long,

it may have a high hit rate but at the expense of often returning stale pages.

There are two approaches to dealing with this problem. The first one uses a heuristic to guess

how long to keep each page. A common one is to base the holding time on the Last-Modified
header (see Fig. 7-43). If a page was modified an hour ago, it is held in the cache for an hour.

If it was modified a year ago, it is obviously a very stable page (say, a list of the gods from

Greek and Roman mythology), so it can be cached for a year with a reasonable expectation of

it not changing during the year. While this heuristic often works well in practice, it does return

stale pages from time to time.
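Expressed as code, the heuristic holds a page for as long as it had gone unmodified when it was fetched. The function names below are assumptions made for this sketch.

```python
from datetime import datetime, timedelta, timezone


def holding_time(last_modified, fetched_at):
    """Hold a page for as long as it had been unchanged when fetched."""
    age_at_fetch = fetched_at - last_modified
    # Guard against clock skew making the page appear to be from the future.
    return max(age_at_fetch, timedelta(0))


def is_fresh(last_modified, fetched_at, now):
    """True while the cached copy is still within its holding time."""
    return now - fetched_at < holding_time(last_modified, fetched_at)
```

A page last modified one hour before it was fetched is served from the cache for the following hour and is treated as expired after that.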

The other approach is more expensive but eliminates the possibility of stale pages by using
special features of RFC 2616 that deal with cache management. One of the most useful of
these features is the If-Modified-Since request header, which a proxy can send to a server. It
specifies the page the proxy wants and the time the cached page was last modified (from the
Last-Modified header). If the page has not been modified since then, the server sends back a
short Not Modified message (status code 304 in Fig. 7-42), which instructs the proxy to use
the cached page. If the page has been modified since then, the new page is returned. While
this approach always requires a request message and a reply message, the reply message will
be very short when the cache entry is still valid.
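The server's side of this exchange can be sketched as follows. The function `origin_respond` and its argument layout are invented for illustration; the date handling uses Python's standard `email.utils` helpers, and times are assumed to be whole seconds in UTC because HTTP dates carry no finer resolution.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime


def origin_respond(page_body, page_modified, request_headers):
    """Answer a GET, honoring If-Modified-Since if the request carries it.

    Returns (status code, response headers, body).
    """
    headers = {"Last-Modified": format_datetime(page_modified, usegmt=True)}
    since = request_headers.get("If-Modified-Since")
    if since is not None and page_modified <= parsedate_to_datetime(since):
        # Unchanged: a short reply with no body tells the proxy
        # that its cached copy is still good.
        return 304, headers, b""
    return 200, headers, page_body
```

A proxy would copy the `Last-Modified` value from the first reply into the `If-Modified-Since` header of its later checks.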

These two approaches can easily be combined. For the first ∆T after fetching the page, the
proxy just returns it to clients asking for it. After the page has been around for a while, the
proxy uses If-Modified-Since messages to check on its freshness. Choosing ∆T invariably
involves some kind of heuristic, depending on how long ago the page was last modified.
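One possible form of the combined policy is sketched below. The choice of ∆T as a fixed fraction of the page's age at fetch time, and the value 0.1 in particular, are assumptions made purely for illustration; the text does not prescribe a formula.

```python
from datetime import datetime, timedelta, timezone

# Assumed tuning knob: trust a page for this fraction of the time it had
# gone unmodified when it was fetched. The value is illustrative only.
FRESHNESS_FRACTION = 0.1


def delta_t(last_modified, fetched_at):
    """Heuristic period during which the cached copy is served unchecked."""
    age_at_fetch = max(fetched_at - last_modified, timedelta(0))
    return age_at_fetch * FRESHNESS_FRACTION


def next_action(last_modified, fetched_at, now):
    """Decide how the proxy should handle a request for a cached page."""
    if now - fetched_at <= delta_t(last_modified, fetched_at):
        return "serve from cache"
    return "revalidate with If-Modified-Since"
```

With these numbers, a page that was ten days old when fetched is served directly for about a day and checked with the server on each request after that.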

Web pages containing dynamic content (e.g., generated by a PHP script) should never be

cached since the parameters may be different next time. To handle this and other cases, there

is a general mechanism for a server to instruct all proxies along the path back to the client not

to use the current page again without verifying its freshness. This mechanism can also be used

for any page expected to change quickly. A variety of other cache control mechanisms are also

defined in RFC 2616.

Yet another approach to improving performance is proactive caching. When a proxy fetches a

page from a server, it can inspect the page to see if there are any hyperlinks on it. If so, it can

issue requests to the relevant servers to preload the cache with the pages pointed to, just in

case they are needed. This technique may reduce access time on subsequent requests, but it

may also flood the communication lines with pages that are never needed.
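The inspection step might look like the sketch below, which collects the hyperlinks on a page so that a proxy could request them ahead of time. The names are invented for this example, and the `limit` parameter is an assumed safeguard against the flooding problem just mentioned; actually fetching the pages is left out.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect the targets of the <a href=...> tags on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page's own URL.
                self.links.append(urljoin(self.base_url, value))


def links_to_prefetch(base_url, html_text, limit=5):
    """Return up to `limit` distinct link targets, in page order."""
    collector = LinkCollector(base_url)
    collector.feed(html_text)
    unique = list(dict.fromkeys(collector.links))  # drop repeats, keep order
    return unique[:limit]
```

A proxy could pass each returned URL to its normal fetch routine while the user is still reading the first page.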

Clearly, Web caching is far from trivial. A lot more can be said about it. In fact, entire books

have been written about it, for example (Rabinovich and Spatscheck, 2002; and Wessels,
2001). But it is time for us to move on to the next topic.

Server Replication

Caching is a client-side technique for improving performance, but server-side techniques also

exist. The most common approach that servers take to improve performance is to replicate

their contents at multiple, widely-separated locations. This technique is sometimes called

mirroring.

A typical use of mirroring is for a company's main Web page to contain a few images along

with links for, say, the company's Eastern, Western, Northern, and Southern regional Web

sites. The user then clicks on the nearest one to get to that server. From then on, all requests

go to the server selected.

Mirrored sites are generally completely static. The company decides where it wants to place

the mirrors, arranges for a server in each region, and puts more or less the full content at each

location (possibly omitting the snow blowers from the Miami site and the beach blankets from

the Anchorage site). The choice of sites generally remains stable for months or years.

Unfortunately, the Web has a phenomenon known as flash crowds in which a Web site that

was previously an unknown, unvisited, backwater all of a sudden becomes the center of the

known universe. For example, until Nov. 6, 2000, the Florida Secretary of State's Web site,

www.dos.state.fl.us, was quietly providing minutes of the meetings of the Florida State cabinet

and instructions on how to become a notary in Florida. But on Nov. 7, 2000, when the U.S.

Presidency suddenly hinged on a few thousand disputed votes in a handful of Florida counties,

it became one of the top five Web sites in the world. Needless to say, it could not handle the

load and nearly died trying.

What is needed is a way for a Web site that suddenly notices a massive increase in traffic to

automatically clone itself at as many locations as needed and keep those sites operational until

the storm passes, at which time it shuts many or all of them down. To have this ability, a site

needs an agreement in advance with some company that owns many hosting sites, saying that

it can create replicas on demand and pay for the capacity it actually uses.

An even more flexible strategy is to create dynamic replicas on a per-page basis depending on

where the traffic is coming from. Some research on this topic is reported in (Pierre et al.,

2001; and Pierre et al., 2002).

Content Delivery Networks

The brilliance of capitalism is that somebody has figured out how to make money from the

World Wide Wait. It works like this. Companies called CDNs (Content Delivery Networks)
talk to content providers (music sites, newspapers, and others that want their content easily

and rapidly available) and offer to deliver their content to end users efficiently for a fee. After

the contract is signed, the content owner gives the CDN the contents of its Web site for

preprocessing (discussed shortly) and then distribution.

Then the CDN talks to large numbers of ISPs and offers to pay them well for permission to

place a remotely-managed server bulging with valuable content on their LANs. Not only is this

a source of income, but it also provides the ISP's customers with excellent response time for

getting at the CDN's content, thereby giving the ISP a competitive advantage over other ISPs

that have not taken the free money from the CDN. Under these conditions, signing up with a

CDN is kind of a no-brainer for the ISP. As a consequence, the largest CDNs have more than

10,000 servers deployed all over the world.

With the content replicated at thousands of sites worldwide, there is clearly great potential for

improving performance. However, to make this work, there has to be a way to redirect the

client's request to the nearest CDN server, preferably one colocated at the client's ISP. Also,

this redirection must be done without modifying DNS or any other part of the Internet's

standard infrastructure. A slightly simplified description of how Akamai, the largest CDN, does

it follows.

The whole process starts when the content provider hands the CDN its Web site. The CDN then

runs each page through a preprocessor that replaces all the URLs with modified ones. The

working model behind this strategy is that the content provider's Web site consists of many

pages that are tiny (just HTML text), but that these pages often link to large files, such as

images, audio, and video. The modified HTML pages are stored on the content provider's

server and are fetched in the usual way; it is the images, audio, and video that go on the

CDN's servers.
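A preprocessor of this general kind could be sketched as follows. Everything specific here is hypothetical: the CDN host name `cdn.example.net`, the layout of the modified URLs, the list of file extensions treated as large media, and the decision to rewrite only relative links are assumptions for illustration, not a description of what any real CDN does.

```python
import re

# File types assumed to be large enough to be worth moving to the CDN.
MEDIA_EXTENSIONS = (".gif", ".jpg", ".png", ".mp3", ".mpg", ".mov")

# Hypothetical CDN server name, used only for this illustration.
CDN_HOST = "cdn.example.net"

# Matches href="..." and src="..." attributes.
ATTR_URL = re.compile(r'(?P<attr>\b(?:href|src))="(?P<url>[^"]+)"', re.IGNORECASE)


def rewrite_for_cdn(html_text, origin_host):
    """Point relative links to media files at the CDN; leave the rest alone."""

    def replace(match):
        url = match.group("url")
        is_media = url.lower().endswith(MEDIA_EXTENSIONS)
        if "://" in url or not is_media:
            return match.group(0)  # HTML pages stay on the provider's server
        path = url.lstrip("/")
        new_url = f"http://{CDN_HOST}/{origin_host}/{path}"
        return f'{match.group("attr")}="{new_url}"'

    return ATTR_URL.sub(replace, html_text)
```

Including the provider's host name in the rewritten path is one simple way for a shared CDN server to tell different customers' files apart.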

To see how this scheme actually works, consider Furry Video's Web page of Fig. 7-46(a). After
preprocessing, it is transformed to Fig. 7-46(b) and placed on Furry Video's server as
www.furryvideo.com/index.html.

Figure 7-46. (a) Original Web page. (b) Same page after transformation.
