Birge J.R., Louveaux F. Introduction to Stochastic Programming


344 8 Evaluating and Approximating Expectations

where $h_i$ is independently uniformly distributed on $[0, 1]$ for $i = 1, 2$.

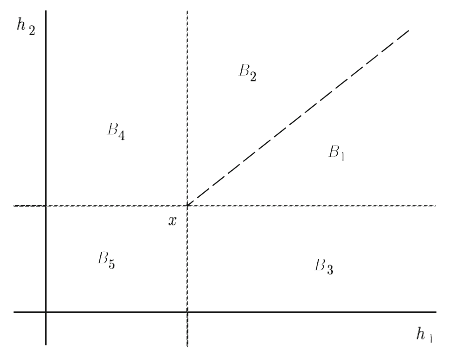

Fig. 1 Optimal basis regions of Example 1.

The optimal basis regions for the solution of this problem are illustrated in Figure 1.

Here, the optimal bases are $B_1$ corresponding to $(y_1^+, y_3)$, $B_2$ corresponding to $(y_2^+, y_3)$, $B_3$ corresponding to $(y_1^+, y_2^-)$, $B_4$ corresponding to $(y_1^-, y_2^+)$, and $B_5$ corresponding to $(y_1^-, y_2^-)$, with dual multipliers $\pi_1 = (1, 0)^T$, $\pi_2 = (0, 1)^T$, $\pi_3 = (1, -1)^T$, $\pi_4 = (-1, 1)^T$, and $\pi_5 = (-1, -1)^T$, respectively. Figure 1 shows the regions in which each of these bases is optimal.

We let $p_i = P(\Omega_i)$ for $i = 3, 4, 5$. To make the calculations somewhat simpler, we divide $\Omega_1$ and $\Omega_2$ into two sections each, depending on $x$, as $\Omega_1 = \Omega_{10}(x) \cup \Omega_{11}(x)$ and $\Omega_2 = \Omega_{20}(x) \cup \Omega_{21}(x)$, where

$$
\begin{aligned}
\Omega_{10}(x) &= \{\omega \mid \omega \in \Omega_1,\ x_1 \le h_1(\omega) \le x_1 + \min(1 - x_1, 1 - x_2)\}, \\
\Omega_{11}(x) &= \{\omega \mid \omega \in \Omega_1,\ x_1 + \min(1 - x_1, 1 - x_2) < h_1(\omega) \le 1\}, \\
\Omega_{20}(x) &= \{\omega \mid \omega \in \Omega_2,\ x_2 \le h_2(\omega) \le x_2 + \min(1 - x_1, 1 - x_2)\}, \\
\Omega_{21}(x) &= \{\omega \mid \omega \in \Omega_2,\ x_2 + \min(1 - x_1, 1 - x_2) < h_2(\omega) \le 1\},
\end{aligned}
$$

with corresponding integrals of $h$ over these regions given by $\bar h_{10}(x)$, $\bar h_{11}(x)$, $\bar h_{20}(x)$, and $\bar h_{21}(x)$, respectively. In this way, $\Omega_{10}(x)$ and $\Omega_{20}(x)$ are symmetric around the diagonal $x_1 = x_2$, and one of $\Omega_{11}(x)$ and $\Omega_{21}(x)$ corresponds to a rectangular region of positive probability if $1 \ge x_2 > x_1$ or $1 \ge x_1 > x_2$.

With these definitions, we can then write $Q(x)$ for Example 1 as

$$
Q(x) = \sum_{i=1}^{2} \sum_{j=0}^{1} \pi_i^T \big(\bar h_{ij}(x) - Tx\big) + \sum_{i=3}^{5} \pi_i^T \big(\bar h_i(x) - Tx\big). \tag{1.6}
$$

Finding the value of $\bar h$ for each region then yields the following expression (Exercise 2):

8.1 Direct Solutions with Multiple Integration 345

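$$
\begin{aligned}
Q(x) = \tfrac{1}{2}\Big(& x_1 + x_1^2 + x_2 - 4x_1x_2 + x_1^2 x_2 + x_2^2 + x_1 x_2^2 + 2(1 - x_2)^2 \max[0, -x_1 + x_2] \\
&+ \max[0, x_1 - x_2]\big(2(1 - x_1)^2\big) + \tfrac{4}{3}\big(\min[1 - x_1, 1 - x_2]\big)^3\Big),
\end{aligned}
\tag{1.7}
$$

for any $x \in [0, 1]^2$.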
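As a quick numerical sanity check of (1.7) at the point $\bar x = (0.3, 0.3)^T$ used later in Section 8.2, the Python sketch below evaluates the closed form and compares it with a midpoint-rule integration of the second-stage value. The text does not spell out $T$ or the second-stage program, so the sketch assumes $T = I$ and $Q(x, h) = \max_i \pi_i^T (h - x)$ over the five dual multipliers listed above; this assumed form reproduces the extreme-point values quoted in Section 8.2. The names `recourse`, `q_closed`, and `q_midpoint` are our own, and the comparison is made only at $\bar x$.

```python
# Sketch: check formula (1.7) for Example 1 at x_bar = (0.3, 0.3).
# Assumption (ours, not stated in the text): T = I and
# Q(x, h) = max_i pi_i^T (h - x) over the multipliers of bases B_1..B_5,
# with h uniform on [0, 1]^2.

PI = [(1.0, 0.0), (0.0, 1.0), (1.0, -1.0), (-1.0, 1.0), (-1.0, -1.0)]


def recourse(x, h):
    """Assumed second-stage value Q(x, h)."""
    d0, d1 = h[0] - x[0], h[1] - x[1]
    return max(p0 * d0 + p1 * d1 for p0, p1 in PI)


def q_closed(x):
    """Expected recourse Q(x) from the closed form (1.7)."""
    x1, x2 = x
    total = x1 + x1**2 + x2 - 4 * x1 * x2 + x1**2 * x2 + x2**2 + x1 * x2**2
    total += 2 * (1 - x2) ** 2 * max(0.0, x2 - x1)
    total += max(0.0, x1 - x2) * 2 * (1 - x1) ** 2
    total += (4.0 / 3.0) * min(1 - x1, 1 - x2) ** 3
    return 0.5 * total


def q_midpoint(x, n=200):
    """Midpoint-rule estimate of E[Q(x, h)] on an n-by-n grid over [0, 1]^2."""
    step = 1.0 / n
    total = 0.0
    for i in range(n):
        for j in range(n):
            total += recourse(x, ((i + 0.5) * step, (j + 0.5) * step))
    return total * step * step


if __name__ == "__main__":
    x_bar = (0.3, 0.3)
    print(f"closed form (1.7): {q_closed(x_bar):.4f}")
    print(f"midpoint rule:     {q_midpoint(x_bar):.4f}")
```

The grid size `n` trades run time for accuracy; because $Q(x, \cdot)$ is piecewise linear, the midpoint rule is already accurate to several digits on a modest grid.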

In general, the regions $\Omega_i$ are polyhedral (Exercise 4), which, as in Example 1, yields direct integration procedures when these regions are simple enough to have explicit integration formulas. Unfortunately, this is often not the case for the $\Omega_i$ regions that are common in stochastic programs with recourse. As Exercise 2 demonstrates, even in the simple case of uniform distributions, the expectations over different regions depend on the relative values of the components of $x$ and may require significant computation to find exactly.

In problems with probabilistic constraints, however, deterministic equivalents can sometimes be created, for example when the data are normally distributed as in Theorem 3.18. In general, though, efficient computation requires some form of approximation.

In the following sections, we explore several methods for approximating the value function and its subgradient in stochastic programming. The basic approaches are either approximations with known error bounds or approximations based on Monte Carlo procedures that may have associated confidence intervals. In the remainder of this chapter and in Chapter 10, we explore bounding approaches, while in Chapter 9 we also consider methods based on sampling.

Exercises

1. The principle of Gaussian quadrature is to find points and weights on those points that yield the correct integral over all polynomials of a certain degree. For example, we can solve for points $\xi_1, \xi_2$ and weights $p_1, p_2$ so that we have a probability distribution ($p_1 + p_2 = 1$) that matches the mean ($p_1 \xi_1 + p_2 \xi_2 = \bar\xi$), the second moment ($p_1 \xi_1^2 + p_2 \xi_2^2 = \bar\xi^{(2)}$), and the third moment ($p_1 \xi_1^3 + p_2 \xi_2^3 = \bar\xi^{(3)}$). Solve this for a uniform distribution on $[0, 1]$ to yield the two points 0.211 and 0.789, each with probability 0.5.

   (a) Verify that this distribution matches the expectation of any polynomial up to degree three over $[0, 1]$.

   (b) Consider a piecewise linear function $f$ with two linear pieces and $0 \le f(\xi) \le 1$ for $0 \le \xi \le 1$. How large a relative error can the Gaussian quadrature points give? Can you use two other points that are better?

2. Derive the expression of $Q(x)$ for Example 1 in (1.7) using (1.6).

3. Verify that $Q(x)$ for Example 1 is convex on $[0, 1]^2$ using (1.7).

4. Show that each region $\Omega_i$ is polyhedral.
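For part (a) of Exercise 1, a short numerical check may help. The two points are the two-point Gauss-Legendre nodes mapped to $[0, 1]$, namely $1/2 \mp 1/(2\sqrt{3})$. The Python sketch below (an illustration of ours; the helper names are hypothetical) compares the two-point rule with the exact uniform moments $E[\xi^k] = 1/(k+1)$ and shows that exactness stops at degree three.

```python
import math

# Two-point Gauss-Legendre rule mapped from [-1, 1] to [0, 1].
NODES = (0.5 - 0.5 / math.sqrt(3.0), 0.5 + 0.5 / math.sqrt(3.0))
WEIGHTS = (0.5, 0.5)


def quadrature(f):
    """Approximate E[f(xi)] for xi uniform on [0, 1] with the two-point rule."""
    return sum(w * f(t) for w, t in zip(WEIGHTS, NODES))


def moment_errors(max_degree):
    """Return |rule - exact| for E[xi^k], k = 0..max_degree, xi ~ U[0, 1]."""
    errors = []
    for k in range(max_degree + 1):
        approx = quadrature(lambda t, k=k: t**k)
        errors.append(abs(approx - 1.0 / (k + 1)))
    return errors


if __name__ == "__main__":
    print("nodes:", [round(t, 3) for t in NODES])
    for k, err in enumerate(moment_errors(4)):
        print(f"degree {k}: error {err:.2e}")
```

The degree-four error is $1/5 - 7/36 = 1/180$, which is why the rule is exact only up to cubics.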


8.2 Discrete Bounding Approximations

The most common approach to approximation in stochastic programming is to find a discrete set of realizations of relatively low cardinality that represents a good approximation of the true underlying distribution, or of whatever is known about this distribution. The basic procedures are extensions of Jensen's inequality [1906] (a generalization of the midpoint approximation) and of an inequality due to Edmundson [1956] and Madansky [1959], the Edmundson-Madansky inequality (a generalization of the trapezoidal approximation). For functions convex in $\xi$, Jensen's inequality provides a lower bound, while the Edmundson-Madansky inequality provides an upper bound. Significant refinements of these bounds appear in Huang, Ziemba, and Ben-Tal [1977], Kall and Stoyan [1982], and Frauendorfer [1988b].

We refer to a general integrand $g(x, \xi)$. Our goal is to bound $E(g(x)) = E_\xi[g(x, \xi)] = \int_\Xi g(x, \xi)\,P(d\xi)$. The basic idea is to partition the support $\Xi$ into a number of different regions (analogous to intervals in one-dimensional integration) and to apply bounds in each of those regions. We let the partition of $\Xi$ be $\mathcal{S}^\nu = \{S^l, l = 1, \dots, \nu\}$. Define $\xi^l = E[\xi \mid S^l]$ and $p^l = P[\xi \in S^l]$. The basic lower bounding result is the following.

Theorem 1. Suppose that $g(x, \cdot)$ is convex for all $x \in D$. Then

$$
E(g(x)) \ge \sum_{l=1}^{\nu} p^l g(x, \xi^l). \tag{2.1}
$$

Proof: Write $E(g(x))$ as

$$
\begin{aligned}
E(g(x)) &= \sum_{l=1}^{\nu} \int_{S^l} g(x, \xi)\,P(d\xi) \\
&= \sum_{l=1}^{\nu} p^l E[g(x, \xi) \mid S^l] \\
&\ge \sum_{l=1}^{\nu} p^l g\big(x, E[\xi \mid S^l]\big),
\end{aligned}
\tag{2.2}
$$

where the last inequality follows from Jensen's inequality: the expectation of a convex function of some argument is always greater than or equal to the function evaluated at the expectation of its argument, i.e., $E(g(\xi)) \ge g(E(\xi))$ (see Exercise 2).

This result applies directly to approximating $Q(x)$ by $Q^\nu(x) = \sum_{l=1}^{\nu} p^l Q(x, \xi^l)$. The approximating distribution $P^\nu$ is the discrete distribution with atoms, i.e., points $\xi^l$ of probability $p^l > 0$ for $l = 1, \dots, \nu$. By choosing $\mathcal{S}^{\nu+1}$ so that each $S^l \in \mathcal{S}^{\nu+1}$ is completely contained in some $S^{l'} \in \mathcal{S}^\nu$, the approximations actually improve, i.e.,


$$
E(g(x)) \ge E^{\nu+1}(g(x)) \ge E^{\nu}(g(x)). \tag{2.3}
$$
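To make Theorem 1 and (2.3) concrete, the sketch below computes the partition lower bound $\sum_l p^l Q(x, \xi^l)$ for Example 1. It assumes (the text does not state this explicitly) that $T = I$, that $Q(x, h) = \max_i \pi_i^T (h - x)$ over the five multipliers of Example 1, and that $h$ is uniform on $[0, 1]^2$, so each rectangular cell has probability equal to its area and conditional mean at its center. The cell format and function names are our own. With the split at $h_1 = 0.65$ used later in the continued example, the bound rises from $0.2$ to $0.31375$, consistent with (2.3).

```python
# Sketch of the partition (Jensen) lower bound (2.1) for Example 1.
# Assumptions (ours): T = I, Q(x, h) = max_i pi_i^T (h - x), h ~ U([0, 1]^2).

PI = [(1.0, 0.0), (0.0, 1.0), (1.0, -1.0), (-1.0, 1.0), (-1.0, -1.0)]


def recourse(x, h):
    """Assumed second-stage value Q(x, h)."""
    return max(p0 * (h[0] - x[0]) + p1 * (h[1] - x[1]) for p0, p1 in PI)


def jensen_bound(x, cells):
    """Sum of p^l * Q(x, xi^l) over rectangular cells ((a1, b1), (a2, b2)).

    For h uniform on [0, 1]^2, p^l is the cell area and xi^l = E[h | S^l]
    is the cell center.
    """
    total = 0.0
    for (a1, b1), (a2, b2) in cells:
        prob = (b1 - a1) * (b2 - a2)
        center = (0.5 * (a1 + b1), 0.5 * (a2 + b2))
        total += prob * recourse(x, center)
    return total


if __name__ == "__main__":
    x_bar = (0.3, 0.3)
    whole = [((0.0, 1.0), (0.0, 1.0))]
    split = [((0.0, 0.65), (0.0, 1.0)), ((0.65, 1.0), (0.0, 1.0))]
    lb1, lb2 = jensen_bound(x_bar, whole), jensen_bound(x_bar, split)
    print(lb1, lb2)  # refining the partition cannot decrease the bound
```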

Various methods can achieve convergence in distribution of the $P^\nu$ to $P$. An example is given in Exercise 2.

In general, the goal of refining the partition from $\nu$ to $\nu + 1$ is to achieve as great an improvement as possible. We will describe the basic approaches; more details appear in Birge and Wets [1986], Frauendorfer and Kall [1988], and Birge and Wallace [1986]. Three basic decisions are required: choosing the cell $S^{\nu^*} \in \mathcal{S}^\nu$ in which to make the partition, choosing the direction in which to split $S^{\nu^*}$, and choosing the point at which to make the split.

The reader should note that this section contains notation specific to bounding procedures. To keep the notation manageable, we reuse some symbols from previous sections, including $a$ and $b$ for endpoints of rectangular regions and $c$ for points within these intervals at which to subdivide the region. For ease of exposition, suppose that the sets $S^l$ are all rectangular, defined by $[a_1^l, b_1^l] \times \cdots \times [a_N^l, b_N^l]$.

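The most basic refinement scheme for $l = \nu^*$ is to find $i^*$ and $c_{i^*}^l$ so that $S^l(\nu)$ splits into

$$
\begin{aligned}
S^l(\nu+1) &= [a_1^l, b_1^l] \times \cdots \times [a_{i^*}^l, c_{i^*}^l] \times \cdots \times [a_N^l, b_N^l] \quad \text{and} \\
S^{\nu+1}(\nu+1) &= [a_1^l, b_1^l] \times \cdots \times [c_{i^*}^l, b_{i^*}^l] \times \cdots \times [a_N^l, b_N^l].
\end{aligned}
$$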

If we also have an upper bound $UB(S^l) \ge E[g(x, \xi) \mid \xi \in S^l]$ for each cell $S^l$, then the most likely choice for $S^{\nu^*}$ is the cell that maximizes $p^l\big(UB(S^l) - g(x, \xi^l)\big)$, which bounds the error attributable to the approximation on $S^l$. Reducing this greatest partition error appears to offer the most hope of reducing the error in the $\nu + 1$ approximation.
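The splitting rule and the cell-selection criterion above can be sketched as follows. Cells are tuples of coordinate intervals; `split_cell` performs the two-piece split at $c_{i^*}^l$, and `select_cell` returns the cell maximizing $p^l\big(UB(S^l) - g(x, \xi^l)\big)$ for caller-supplied functions. All names are hypothetical. For the demonstration we assume (not stated in the text) that $T = I$, $Q(x, h) = \max_i \pi_i^T (h - x)$ over the multipliers of Example 1, and $h$ uniform on $[0, 1]^2$; as $UB(S^l)$ we use the equal-weight average over the cell's vertices, which is the Edmundson-Madansky bound described later in this section when the components are independent and uniform.

```python
import itertools

# Dual multipliers pi_1..pi_5 of Example 1.
PI = [(1.0, 0.0), (0.0, 1.0), (1.0, -1.0), (-1.0, 1.0), (-1.0, -1.0)]


def recourse(x, h):
    """Assumed second-stage value Q(x, h) = max_i pi_i^T (h - x), i.e. T = I."""
    return max(p0 * (h[0] - x[0]) + p1 * (h[1] - x[1]) for p0, p1 in PI)


def split_cell(cell, i_star, c):
    """Split [a_1,b_1] x ... x [a_N,b_N] at c in coordinate i_star."""
    a, b = cell[i_star]
    if not a < c < b:
        raise ValueError("split point must lie strictly inside [a, b]")
    lower, upper = list(cell), list(cell)
    lower[i_star] = (a, c)
    upper[i_star] = (c, b)
    return tuple(lower), tuple(upper)


def select_cell(cells, prob, center_value, upper_bound):
    """Return the index maximizing p^l (UB(S^l) - g(x, xi^l)) and all scores."""
    scores = [prob(s) * (upper_bound(s) - center_value(s)) for s in cells]
    best = max(range(len(cells)), key=lambda k: scores[k])
    return best, scores


def cell_prob(cell):
    """P(h in cell) for h uniform on [0, 1]^N: the cell volume."""
    vol = 1.0
    for a, b in cell:
        vol *= b - a
    return vol


def center_value(cell, x):
    """g(x, xi^l) with xi^l the conditional mean, i.e. the cell center."""
    return recourse(x, tuple(0.5 * (a + b) for a, b in cell))


def vertex_average(cell, x):
    """Equal-weight average over the cell's vertices (E-M bound, uniform case)."""
    vertices = list(itertools.product(*cell))
    return sum(recourse(x, v) for v in vertices) / len(vertices)


if __name__ == "__main__":
    x_bar = (0.3, 0.3)
    cells = split_cell(((0.0, 1.0), (0.0, 1.0)), 0, 0.65)
    best, scores = select_cell(
        cells,
        cell_prob,
        lambda s: center_value(s, x_bar),
        lambda s: vertex_average(s, x_bar),
    )
    print("cells:", cells)
    print("refine cell", best, "scores:", scores)
```

Passing the bound and probability as functions keeps `select_cell` independent of the particular upper bound and distribution.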

The direction choice is less clear. The general idea is to choose a direction in

which the function g is “most nonlinear”. The use of subgradient (dual price)

information for this process was discussed in Birge and Wets [1986]. Frauendor-

fer and Kall [1988] improved on this and reported good results by considering

all 2

m+1

pairs, (

α

j

,

β

j

) , of vertices of S

l

,where

α

j

=(

γ

l

1

,...,a

l

i

,...,

γ

l

N

) and

β

j

=(

γ

l

1

,...,b

l

i

,...,

γ

l

N

) with

γ

l

i

= a

l

i

or b

l

i

.Given x , they assume a dual vector,

π

α

j

,at Q(x,

α

j

) and

π

β

j

at Q(x,

β

j

) . Because these represent subgradients of the

recourse function Q(x, ·) ,wehave Q(x,

β

j

) −(Q(x,

α

j

)+

π

T

α

j

(

β

j

−

α

j

)) =

ε

1

j

≥ 0

and Q(x,

α

j

)−(Q(x,

β

j

)+

π

T

β

j

(

α

j

−

β

j

)) =

ε

2

j

≥0 . They then choose k

∗

that max-

imizes min{

ε

1

k

,

ε

2

k

} over k .Theylet i

∗

be i such that

α

k

∗

and

β

k

∗

differ in the

i th coordinate. The position c

i

∗

is then chosen so that Q(x,

β

k

∗

)+

π

T

β

k

∗

(c

i

∗

−b

i

∗

)=

Q(x,

α

k

∗

)+

π

T

α

k

∗

(c

i

∗

−a

i

∗

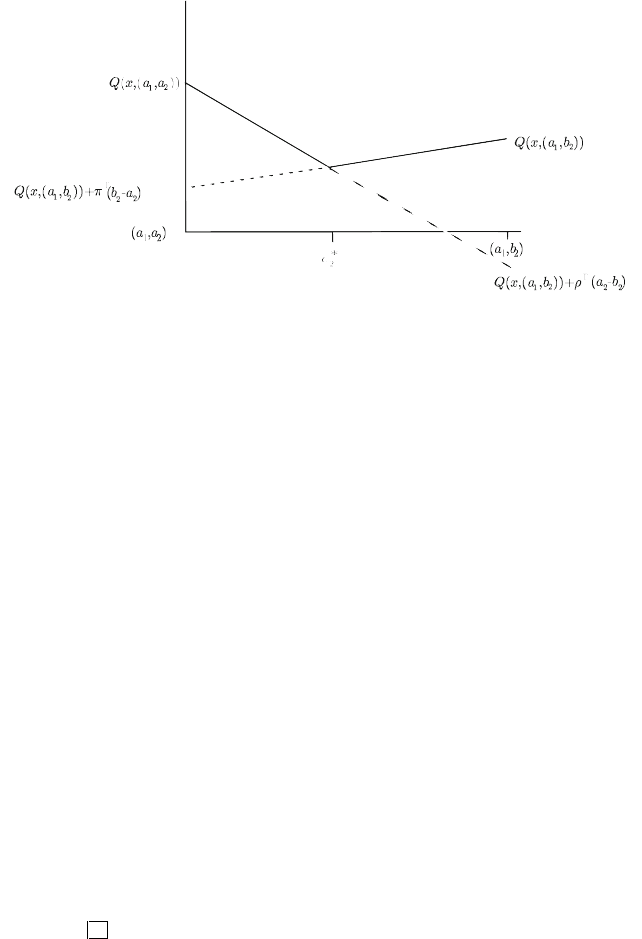

) . (See Figure 2, where we use

π

for the subgradient at

(a

1

,b

2

) and

ρ

for the subgradient at (a

1

,a

2

) .) The general idea is then to choose

the direction that yields the maximum of the minimum of linearization errors in each

direction.
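The Frauendorfer-Kall rule can be sketched in Python for a rectangular cell. The sketch enumerates pairs of vertices that differ in exactly one coordinate (edges of the box), computes $\epsilon^1$ and $\epsilon^2$ from vertex subgradients, picks the edge with the largest $\min\{\epsilon^1, \epsilon^2\}$, and solves the intersection equation for $c_{i^*}$. For the demonstration it assumes (not stated in the text) that $T = I$ and $Q(x, h) = \max_i \pi_i^T (h - x)$ over the multipliers of Example 1, taking as subgradient at a vertex the first maximizing $\pi_i$; at $(1, 1)$ this selects $(1, 0)$. Function names are hypothetical.

```python
import itertools

PI = [(1.0, 0.0), (0.0, 1.0), (1.0, -1.0), (-1.0, 1.0), (-1.0, -1.0)]


def recourse(x, h):
    """Assumed Q(x, h) = max_i pi_i^T (h - x)."""
    return max(sum(p * (hk - xk) for p, hk, xk in zip(pi, h, x)) for pi in PI)


def subgradient(x, h):
    """A subgradient of Q(x, .) at h: the first maximizing pi_i (ties -> lowest i)."""
    values = [sum(p * (hk - xk) for p, hk, xk in zip(pi, h, x)) for pi in PI]
    return PI[values.index(max(values))]


def linearization_errors(x, cell):
    """(min(eps1, eps2), i, alpha, beta) for every edge of a rectangular cell."""
    results = []
    for alpha, beta in itertools.combinations(itertools.product(*cell), 2):
        diff = [k for k in range(len(cell)) if alpha[k] != beta[k]]
        if len(diff) != 1:
            continue  # not an edge of the box
        pa, pb = subgradient(x, alpha), subgradient(x, beta)
        qa, qb = recourse(x, alpha), recourse(x, beta)
        eps1 = qb - (qa + sum(p * (b - a) for p, a, b in zip(pa, alpha, beta)))
        eps2 = qa - (qb + sum(p * (a - b) for p, a, b in zip(pb, alpha, beta)))
        results.append((min(eps1, eps2), diff[0], alpha, beta))
    return results


def split_point(x, alpha, beta, i):
    """c solving Q(beta) + pb_i (c - b_i) = Q(alpha) + pa_i (c - a_i)."""
    pa, pb = subgradient(x, alpha), subgradient(x, beta)
    gap = pb[i] - pa[i]
    if gap == 0:
        return None  # parallel linearizations: no unique intersection
    numerator = recourse(x, alpha) - recourse(x, beta) + pb[i] * beta[i] - pa[i] * alpha[i]
    return numerator / gap


if __name__ == "__main__":
    x_bar = (0.3, 0.3)
    edges = linearization_errors(x_bar, ((0.0, 1.0), (0.0, 1.0)))
    err, i_star, alpha, beta = max(edges)  # edge with the largest min-error
    print(err, i_star, alpha, beta, split_point(x_bar, alpha, beta, i_star))
```

Because `itertools.product` lists the lower endpoint first, the first vertex of each edge pair plays the role of $\alpha_j$ (coordinate $a_i$) and the second that of $\beta_j$ (coordinate $b_i$).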

Fig. 2 Choosing the direction according to the maximum of the minimum linearization errors.

Refinement schemes clearly depend on having upper bounds available. These bounds are generally based on convexity properties of $g$ and the ability to express each $\xi$ in terms of the extreme points. The fundamental result is the following theorem, which also appears in Birge and Wets [1986]. In the following, we use $P$ as the measure on $\Omega$ instead of $\Xi$ because we wish to obtain a different measure derived from this domain. In context, this change should not cause confusion. We also let $\operatorname{ext}\Xi$ be the set of extreme points of $\operatorname{co}\Xi$, and we let $\mathcal{E}$ be a Borel field on $\operatorname{ext}\Xi$, in this case the collection of all subsets of $\operatorname{ext}\Xi$.

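Theorem 2. Suppose that $\xi \mapsto g(x, \xi)$ is convex and $\Xi$ is compact. For all $\xi \in \Xi$, let $\phi(\xi, \cdot)$ be a probability measure on $(\operatorname{ext}\Xi, \mathcal{E})$ such that

$$
\int_{e \in \operatorname{ext}\Xi} e\,\phi(\xi, de) = \xi, \tag{2.4}
$$

and $\omega \mapsto \phi(\xi(\omega), A)$ is measurable for all $A \in \mathcal{E}$. Then

$$
E(g(x)) \le \int_{e \in \operatorname{ext}\Xi} g(x, e)\,\lambda(de), \tag{2.5}
$$

where $\lambda$ is the probability measure on $\mathcal{E}$ defined by

$$
\lambda(A) = \int_\Omega \phi(\xi(\omega), A)\,P(d\omega). \tag{2.6}
$$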

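Proof: Because $g$ is convex in $\xi$ and, by (2.4), $\xi$ is the mean of the probability measure $\phi(\xi, \cdot)$, Jensen's inequality gives, for any such $\phi$,

$$
g(x, \xi) \le \int_{e \in \operatorname{ext}\Xi} g(x, e)\,\phi(\xi, de). \tag{2.7}
$$

Substituting $\xi(\omega)$ for $\xi$ and integrating with respect to $P$, we obtain the result in (2.5).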

This result states that if we can choose the appropriate $\phi$ and find $\lambda$, we can produce an upper bound. The key is to make the calculation of $\lambda$ as simple as possible. Of course, the cardinality of $\operatorname{ext}\Xi$ may also play a role in the computability of the bound.


One way to reduce the cardinality of the supporting extreme points is simply to choose the extreme point that has the highest value as an upper bound. Let this upper bound be

$$
UB^{\max}(x) = \sup_{e \in \operatorname{ext}\Xi} g(x, e) \ge \int_{e \in \operatorname{ext}\Xi} g(x, e)\,\lambda(de) \ge E(g(x)),
$$

which follows from Theorem 2 regardless of the particular $\lambda$. While $UB^{\max}$ may only involve a single extreme point, it is often a poor bound (see the result from Exercise 3). Its calculation also often involves evaluating all the extreme points to maximize the convex function $g(x, \cdot)$.

In general, bounds built on the result in Theorem 2 construct the probability measure $\lambda$ so that each extreme point $e_j$ of $\Xi$ has some weight, $p_j = \lambda(e_j)$. The following bounds, described in more detail in Birge and Wets [1986], find these weights in various cases. The first is general but involves some optimization. The second involves simplicial regions, and the third uses rectangular regions.

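Because $\lambda$ is constructed to be consistent with the distribution of $\xi$, we must have that

$$
\begin{aligned}
\int_\Omega \xi(\omega)\,P(d\omega) &= \int_\Omega \int_{e \in \operatorname{ext}\Xi} e\,\phi(\xi(\omega), de)\,P(d\omega) \\
&= \int_{e \in \operatorname{ext}\Xi} e \int_\Omega \phi(\xi(\omega), de)\,P(d\omega) \\
&= \int_{e \in \operatorname{ext}\Xi} e\,\lambda(de).
\end{aligned}
\tag{2.8}
$$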

Hence, $\lambda \in \mathcal{P} = \{\mu \mid \mu \text{ is a probability measure on } \mathcal{E} \text{ and } E_\mu[e] = \bar\xi\}$. The next upper bound, originally suggested by Madansky [1960] and extended by Gassmann and Ziemba [1986], builds on this idea by finding an upper bound through a linear program that maximizes the objective expectation over all probability measures in $\mathcal{P}$.

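We write this bound as $UB^{\text{mean}}$, where, with $e_1, \dots, e_K$ denoting the extreme points of $\Xi$,

$$
\begin{aligned}
UB^{\text{mean}}(x) = \max_{p_1, \dots, p_K}\ & \sum_{k=1}^{K} p_k\, g(x, e_k) \\
\text{s.t.}\ & \sum_{k=1}^{K} p_k e_k = \bar\xi, \\
& \sum_{k=1}^{K} p_k = 1, \quad p_k \ge 0,\ k = 1, \dots, K.
\end{aligned}
\tag{2.9}
$$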

As we shall see in Section 8.5, the probability measure that optimizes the linear program in (2.9) is the solution of a moment problem in which only the first moment is known. Another interpretation of this bound is that it represents the worst possible outcome if only the mean of the random variable is known. Optimizing with this bound, therefore, brings some form of risk avoidance if no other distribution information is available.
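For the small vertex sets in this chapter's examples, (2.9) can be solved without an LP library. A basic feasible solution of (2.9) has at most $N + 1$ positive weights, since its $N + 1$ equality rows are the mean conditions plus $\sum_k p_k = 1$. The sketch below, which assumes NumPy and uses a hypothetical function name, therefore enumerates subsets of $N + 1$ extreme points, solves for their weights, keeps nonnegative solutions, and takes the best objective. This enumeration is exponential in general and is meant only as an illustration; larger problems call for an LP solver. The vertex values in the demonstration are those reported for Example 1 in the continued example below.

```python
import itertools

import numpy as np


def ub_mean(vertices, values, mean, tol=1e-12):
    """Solve LP (2.9) by enumerating bases of N + 1 extreme points.

    vertices: the K extreme points e_k (each of length N); values: g(x, e_k);
    mean: xi_bar. Returns (optimal value, weight vector p).
    """
    pts = np.asarray(vertices, dtype=float)
    vals = np.asarray(values, dtype=float)
    n = pts.shape[1]
    rhs = np.append(np.asarray(mean, dtype=float), 1.0)
    best_val, best_p = -np.inf, None
    for subset in itertools.combinations(range(len(pts)), n + 1):
        idx = list(subset)
        # N rows for sum_k p_k e_k = xi_bar, plus one row for sum_k p_k = 1.
        A = np.vstack([pts[idx].T, np.ones(n + 1)])
        try:
            p_sub = np.linalg.solve(A, rhs)
        except np.linalg.LinAlgError:
            continue  # affinely dependent points: not a basis
        if np.all(p_sub >= -tol):
            val = float(vals[idx] @ p_sub)
            if val > best_val:
                best_val = val
                best_p = np.zeros(len(pts))
                best_p[idx] = p_sub
    return best_val, best_p


if __name__ == "__main__":
    # Q(x_bar, e) at the corners of [0, 1]^2, as reported for Example 1.
    corners = [(0, 0), (1, 0), (0, 1), (1, 1)]
    q_vals = [0.6, 1.0, 1.0, 0.7]
    ub1, weights = ub_mean(corners, q_vals, (0.5, 0.5))
    print("UB_mean_1:", ub1, "UB_max_1:", max(q_vals), "weights:", weights)

    # After the split at h_1 = 0.65 (cell probabilities 0.65 and 0.35).
    c1, _ = ub_mean([(0, 0), (0.65, 0), (0, 1), (0.65, 1)], [0.6, 0.65, 1.0, 0.7], (0.325, 0.5))
    c2, _ = ub_mean([(0.65, 0), (1, 0), (0.65, 1), (1, 1)], [0.65, 1.0, 0.7, 0.7], (0.825, 0.5))
    print("UB_mean_2:", 0.65 * c1 + 0.35 * c2)
```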

Assuming that the dimension of $\operatorname{co}\Xi$ is $N$, Carathéodory's theorem states that $\bar\xi$ must be expressible as a convex combination of at most $N + 1$ points in $\operatorname{ext}\Xi$. Finding these $N + 1$ points may, however, again involve computations for the values at all extreme points. The number of such extreme point representations may be large if $\Xi$ is, for example, rectangular, but small if, for example, $\Xi$ is a simplex, i.e., the convex hull of $N + 1$ points $\xi^i$, $i = 1, \dots, N + 1$, such that the vectors $\xi^i - \xi^1$ are linearly independent for $i > 1$. In that case, the representation of interior points is, in fact, unique, and the $p_j$ are called the barycentric coordinates of $\bar\xi$.

Although $\Xi$ may not be simplicial itself, it is often possible to extend $Q(x, \cdot)$ from $\Xi$ to some simplex $\Sigma$ including $\Xi$. The bound obtained with this approach is written $UB^\Sigma$. In this bound, the number of points used in the evaluation remains one more than the dimension of the affine hull of $\Xi$. Frauendorfer [1989, 1992] gives more details about this form of approximation and various methods for its refinement.
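The barycentric weights that define $UB^\Sigma$ solve a square linear system: $N$ rows for $\sum_j p_j \xi^j = \bar\xi$ and one row for $\sum_j p_j = 1$. The NumPy sketch below (function names ours) computes them and reproduces the simplicial bound of the continued Example 1, with $\Sigma = \operatorname{co}\{(0,0), (2,0), (0,2)\}$, $\bar h = (0.5, 0.5)$, and the vertex values $0.6$, $2$, and $2$ used there.

```python
import numpy as np


def barycentric(simplex, point):
    """Barycentric coordinates of `point` with respect to N + 1 simplex vertices."""
    verts = np.asarray(simplex, dtype=float)       # shape (N + 1, N)
    A = np.vstack([verts.T, np.ones(len(verts))])  # N + 1 equations
    rhs = np.append(np.asarray(point, dtype=float), 1.0)
    weights = np.linalg.solve(A, rhs)
    if np.any(weights < -1e-12):
        raise ValueError("point lies outside the simplex")
    return weights


def ub_simplex(simplex, values, mean):
    """Upper bound sum_j lambda(e_j) g(x, e_j) with barycentric weights of the mean."""
    return float(barycentric(simplex, mean) @ np.asarray(values, dtype=float))


if __name__ == "__main__":
    sigma = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0)]
    print(barycentric(sigma, (0.5, 0.5)))  # weights on the three vertices
    print(ub_simplex(sigma, [0.6, 2.0, 2.0], (0.5, 0.5)))
```

Because the weights depend only on the simplex and $\bar\xi$, they can be computed once and reused for every $x$; only the vertex values change.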

Often, $\Xi$ is given as a rectangular region. In this case, the number of extreme points is $2^N$. The number of simplices containing $\bar\xi$ may also be exponential in $N$. With relatively complete information about the correlations among random variables, however, bounds can be obtained that assign to each extreme point of $\Xi$ (or of an enclosing rectangular region) a weight that does not depend on the value of $x$. This attribute is quite beneficial in algorithms where $x$ may change frequently as an optimal solution is sought.

The basic bounds for rectangular regions follow Edmundson and Madansky, for which the name Edmundson-Madansky (E-M) bound is used. They begin with the trapezoidal type of approximation on an interval. Here, if $\Xi = [a, b]$, we can easily construct $\phi(\xi, \cdot)$ in Theorem 2 as $\phi(\xi, a) = \pi(\xi)$ and $\phi(\xi, b) = 1 - \pi(\xi)$, where $\pi(\xi) = \frac{b - \xi}{b - a}$. Integrating over $\omega$, we obtain

$$
\lambda(a) = \int_\Omega \phi(\xi(\omega), a)\,P(d\omega) = \int_\Omega \frac{b - \xi(\omega)}{b - a}\,P(d\omega) = \frac{b - \bar\xi}{b - a}. \tag{2.10}
$$

We then also have

λ

(b)=

¯

ξ

−a

b−a

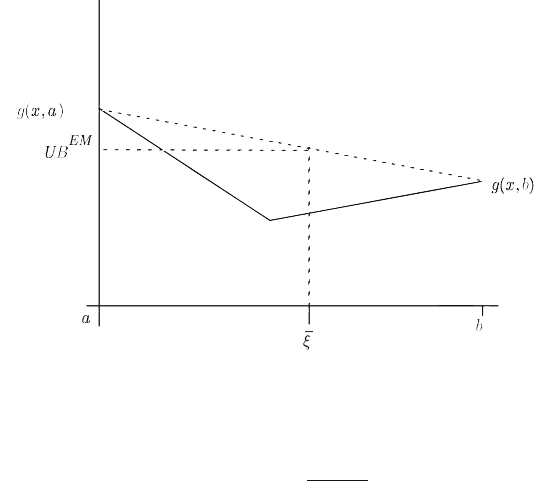

. The bound obtained is UB

EM

(x)=

λ

(a)g(x,a)+

λ

(b)g(x,b) ≥ E(g(x)) . Observe in Figure 3 that this bound represents approximat-

ing the integrand g(x,·) with the values formed as convex combinations of extreme

point values. This is the same procedure as in trapezoidal approximation for nu-

merical integration except that the endpoint weights may change for nonuniform

probability distributions.

Fig. 3 Example of the Edmundson-Madansky bound on an interval.

The E-M bound on an interval extends easily to multiple dimensions, where $\Xi = [a_1, b_1] \times \cdots \times [a_N, b_N]$, if either $g(x, \cdot)$ is separable in the components of $\xi$, in which case the bound is applied in each component separately, or the components of $\xi$ are stochastically independent. In the latter case, the bound is developed in each component $i = 1$ to $N$ in order, so that the full bound for independent $\xi_i$ contains the product of all combinations of each interval bound, i.e.,

$$
UB^{EM\text{-}I}(x) = \sum_{e \in \operatorname{ext}\Xi} \prod_{i=1}^{N} \frac{|a_i + b_i - e_i - \bar\xi_i|}{b_i - a_i}\, g(x, e), \tag{2.11}
$$

where $\Xi$ is again assumed polyhedral. Each factor is the interval weight from (2.10): $(b_i - \bar\xi_i)/(b_i - a_i)$ if $e_i = a_i$ and $(\bar\xi_i - a_i)/(b_i - a_i)$ if $e_i = b_i$.
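The E-M weights of (2.10) and their product form (2.11) for independent components are easy to compute. The Python sketch below (helper names ours) assumes a rectangle with $a_i < b_i$ and independent components with means $\bar\xi_i$. The first demonstration uses an illustrative distribution of our own choosing, density $2t$ on $[0, 1]$ (mean $2/3$, $E[\xi^2] = 1/2$) with $g(\xi) = \xi^2$, to show unequal endpoint weights and the ordering Jensen $\le$ exact $\le$ E-M; the second reproduces $UB_1^{EM}$ and $UB_2^{EM}$ of the continued Example 1 from the vertex values reported there.

```python
import itertools
from fractions import Fraction


def em_weight(e_i, a_i, b_i, mean_i):
    """lambda(e_i) from (2.10): weight on endpoint e_i of [a_i, b_i]."""
    if e_i == a_i:
        return (b_i - mean_i) / (b_i - a_i)
    return (mean_i - a_i) / (b_i - a_i)


def em_bound(g, box, mean):
    """Edmundson-Madansky bound (2.11) for independent components on a box."""
    total = 0
    for e in itertools.product(*box):
        weight = 1
        for e_i, (a_i, b_i), m_i in zip(e, box, mean):
            weight *= em_weight(e_i, a_i, b_i, m_i)
        total += weight * g(e)
    return total


def square(e):
    """g(xi) = xi^2 for a one-dimensional point e = (xi,)."""
    return e[0] ** 2


if __name__ == "__main__":
    # One dimension, density 2t on [0, 1]: E-M bound, exact value, Jensen bound.
    box1 = [(Fraction(0), Fraction(1))]
    mean1 = [Fraction(2, 3)]
    print(em_bound(square, box1, mean1), Fraction(1, 2), square(mean1))

    # Example 1: Q(x_bar, e) at cell corners, as reported in the text.
    q = {(0, 0): 0.6, (0, 1): 1.0, (1, 0): 1.0, (1, 1): 0.7,
         (0.65, 0): 0.65, (0.65, 1): 0.7}
    ub1 = em_bound(lambda e: q[e], [(0, 1), (0, 1)], [0.5, 0.5])
    ub2 = (0.65 * em_bound(lambda e: q[e], [(0, 0.65), (0, 1)], [0.325, 0.5])
           + 0.35 * em_bound(lambda e: q[e], [(0.65, 1), (0, 1)], [0.825, 0.5]))
    print(ub1, ub2)
```

Using `Fraction` in the one-dimensional case keeps the weights $1/3$ and $2/3$ exact, so the three values can be compared without rounding.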

Example 1 (continued)

We return again to Example 1 and suppose an initial solution $\bar x = (0.3, 0.3)^T$. From (1.7), $Q(\bar x) = 0.466$. Our initial lower bound using the mean of the random vector is then the Jensen lower bound, $LB_1 = Q(\bar x, \xi = \bar h = (0.5, 0.5)^T) = 0.2$.

The upper bounds can be found using the values at the extreme points of the support of $h$. These values are $Q(\bar x, (0,0)^T) = 0.6$, $Q(\bar x, (0,1)^T) = 1.0$, $Q(\bar x, (1,0)^T) = 1.0$, and $Q(\bar x, (1,1)^T) = 0.7$. For $UB_1^{\max}(\bar x)$, we must take the highest of these values; hence, $UB_1^{\max}(\bar x) = 1.0$. For $UB_1^{\text{mean}}$, notice that $\bar h = (1/2)(1, 0)^T + (1/2)(0, 1)^T$; so $UB_1^{\text{mean}}(\bar x) = UB_1^{\max}(\bar x) = 1.0$. For $UB_1^{EM}$, each extreme point is weighted equally, so $UB_1^{EM}(\bar x) = (1/4)(1 + 1 + 0.7 + 0.6) = 0.825$.

For the simplicial approximation, let $\Sigma = \operatorname{co}\{(0,0), (2,0), (0,2)\}$, which includes the support of $h$. In this case, the weights on the extreme points are $\lambda(0,0) = 0.5$ and $\lambda(2,0) = \lambda(0,2) = 0.25$. Since $Q(\bar x, (2,0)^T) = Q(\bar x, (0,2)^T) = 2$, the resulting upper bound is $UB^\Sigma(\bar x) = 0.5(0.6) + 0.25(2) + 0.25(2) = 1.3$.

To refine the bounds, we consider the dual multipliers at each extreme point. At $(0,0)$, they are $(-1,-1)$. At $(1,0)$, they are $(1,-1)$. At $(0,1)$, they are $(-1,1)$. At $(1,1)$, both bases $B_1$ and $B_2$ are optimal, so the multipliers are $(0,1)$, $(1,0)$, or any convex combination. The linearization error along the line segment from $(0,0)$ to $(1,0)$ is the minimum of $Q(\bar x, (1,0)^T) - [Q(\bar x, (0,0)^T) + (-1,-1)(1,0)^T] = 1 - (0.6 - 1) = 1.4$ and $Q(\bar x, (0,0)^T) - [Q(\bar x, (1,0)^T) + (1,-1)(-1,0)^T] = 0.6 - (1 - 1) = 0.6$. Hence, the minimum error on $(0,0)$ to $(1,0)$ is 0.6. Similarly, for $(0,0)$ to $(0,1)$, the error is 0.6. From $(1,0)$ to $(1,1)$, the minimum error is 0.3 if the $(1,0)$ subgradient is used at $(1,1)$; with that choice, the minimum error on $(0,1)$ to $(1,1)$ is $\min\{1 - (0.7 - 1), 0.7 - (1 - 1)\} = 0.7$. Thus, the maximum of these errors over the edges of the region is 0.7, attained on the edge from $(0,1)$ to $(1,1)$.

To find the value of $c_1^*$ to split the interval $[a_1 = 0, b_1 = 1]$, we need to find where $Q(\bar x, (0,1)^T) - c_1^* = Q(\bar x, (1,1)^T) + (c_1^* - 1)$, or where $1 - c_1^* = 0.7 - 1 + c_1^*$, i.e., where $c_1^* = 0.65$. We obtain two regions, $S_1 = [0, 0.65] \times [0, 1]$ and $S_2 = [0.65, 1] \times [0, 1]$, with $p_1 = 0.65$ and $p_2 = 0.35$.

The Jensen lower bound is now $LB_2 = 0.65\,Q(\bar x, (0.325, 0.5)^T) + 0.35\,Q(\bar x, (0.825, 0.5)^T) = 0.65(0.2) + 0.35(0.525) = 0.31375$. The upper bounds are $UB_2^{\max}(\bar x) = 0.65(1) + 0.35(1) = 1$, $UB_2^{\text{mean}}(\bar x) = 0.65(0.5)(1 + 0.65) + 0.35(0.5)(1 + 0.7) = 0.83375$, and $UB_2^{EM}(\bar x) = 0.65(0.25)(1 + 0.7 + 0.65 + 0.6) + 0.35(0.25)(0.7 + 0.7 + 1 + 0.65) = 0.74625$. (The simplicial bound is not given because we have split the region into rectangular parts.) Exercise 3 asks for these computations to continue until the lower and upper bounds are within 10% of each other.

Exercises

1. For Example 1 with $\bar x = (0.1, 0.7)^T$, compute $Q(\bar x)$, the Jensen lower bound, and the upper bounds $UB^{\text{mean}}$, $UB^{\max}$, $UB^{EM}$, and $UB^\Sigma$.

2. Prove Jensen's inequality, $E(g(\xi)) \ge g(E(\xi))$, by taking an expectation of the points on a supporting hyperplane to $g(\xi)$ at $g(E(\xi))$.

3. Follow the splitting rules for Example 1 until the Edmundson-Madansky upper and Jensen lower bounds are within 10% of each other. Compare $UB^{EM}$ to $UB^{\max}$ on each step.

8.3 Using Bounds in Algorithms

The bounds in Section 8.2 can be used in algorithms in a variety of ways. We describe three basic procedures in this section: (1) uses of lower bounds in the L-shaped method with stopping criteria provided by upper bounds; (2) uses of upper bounds in generalized programming with stopping rules given by lower bounds; and (3) uses of the dual formulation in the separable convex hull function. The first two approaches are described in Birge [1983], while the last is taken from Birge and Wets [1989].

The L-shaped method as described in Chapter 5 is based on iteratively providing a lower bound on the recourse objective, $Q(x)$. If a lower bound, $Q^L(x)$, is used in place of $Q(x)$, then clearly, for any support $E^L x + e^L$, if $Q^L(x) \ge E^L x + e^L$, then $Q(x) \ge E^L x + e^L$. Thus, any cuts generated on a lower bounding approximation of $Q(x)$ remain valid throughout a procedure that refines that lower bounding approximation. This observation leads to the following algorithm. We suppose that $Q_j^L(x)$ and $Q_j^U(x)$ are lower and upper bounding approximations such that $\lim_{j \to \infty} Q_j^L(x) = Q(x)$ and $\lim_{j \to \infty} Q_j^U(x) = Q(x)$. We suppose that $P_j^L$ is the $j$th lower bounding approximation measure, so that $Q_j^L(x) = \int_\Omega Q_j^L(x, \xi)\,P_j^L(d\omega)$.

To simplify the algorithm in the following, we assume that all feasibility cuts are generated separately in (3.2) below before the sequential bounding procedure begins (which generally can be accomplished by first considering all extreme points of the domain of the random variables).

L-Shaped Method with Sequential Bounding Approximations

Step 0. Set $r = s = \nu = k = 0$.

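Step 1. Set $\nu = \nu + 1$. Solve the linear program (3.1)–(3.3):

$$
\begin{aligned}
\min\ & z = c^T x + \theta \\
\text{s.t.}\ & Ax = b, \qquad (3.1) \\
& D_\ell x \ge d_\ell, \quad \ell = 1, \dots, r, \qquad (3.2) \\
& E_\ell x + \theta \ge e_\ell, \quad \ell = 1, \dots, s, \qquad (3.3) \\
& x \ge 0,\ \theta \in \Re.
\end{aligned}
$$

Let $(x^\nu, \theta^\nu)$ be an optimal solution. If no constraint (3.3) is present, $\theta$ is set equal to $-\infty$ and is ignored in the computation.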

Step 2. Find $Q_j^L(x^\nu) = \int_\Omega Q_j^L(x^\nu, \xi)\,P_j^L(d\omega)$, the $j$th lower bounding approximation. Suppose $-(\pi^\nu(\xi))^T T \in \partial_x Q_j^L(x^\nu, \xi)$ (the simplex multipliers associated with the optimal solution of the recourse problem). Define

$$
E_{s+1} = \int_\Omega (\pi^\nu(\xi))^T T\,P_j^L(d\omega) \tag{3.4}
$$

and

$$
e_{s+1} = \int_\Omega (\pi^\nu(\xi))^T h\,P_j^L(d\omega). \tag{3.5}
$$

Let $w^\nu = e_{s+1} - E_{s+1} x^\nu = Q_j^L(x^\nu)$. If $\theta^\nu \ge w^\nu$, $x^\nu$ is optimal relative to the lower bound; go to Step 3. Otherwise, set $s = s + 1$ and return to Step 1.

Step 3. Find $Q_j^U(x^\nu) = \int_\Omega Q_j^U(x^\nu, \xi)\,P_j^U(d\omega)$, the $j$th upper bounding approximation. If $\theta^\nu \ge Q_j^U(x^\nu)$, stop; $x^\nu$ is optimal. Otherwise, refine the lower and upper bounding approximations from $\nu$ to $\nu + 1$. Let $\nu = \nu + 1$. Go to Step 2.
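For a discrete lower-bounding measure such as the Jensen measure of Section 8.2, the integrals in (3.4) and (3.5) are finite sums over atoms. The Python sketch below (names ours) computes $E_{s+1}$, $e_{s+1}$, and $w^\nu$ for Example 1 under assumptions not stated in the text: $T = I$ and $Q(x, h) = \max_i \pi_i^T (h - x)$ over the five multipliers of Example 1, with the first maximizing $\pi_i$ taken as $\pi^\nu(\xi)$ at each atom. It does not implement the master problem (3.1)–(3.3), which would need an LP solver. At $x^\nu = (0.3, 0.3)^T$, $w^\nu$ matches the Jensen bounds 0.2 and 0.31375 reported in Section 8.2, and the cut lies below $Q_j^L$ at every $x$ because each atom's multiplier is dual feasible.

```python
PI = [(1.0, 0.0), (0.0, 1.0), (1.0, -1.0), (-1.0, 1.0), (-1.0, -1.0)]


def dual_multiplier(x, h):
    """pi(xi) at atom h: first maximizer of pi_i^T (h - x) (assumes T = I)."""
    values = [p0 * (h[0] - x[0]) + p1 * (h[1] - x[1]) for p0, p1 in PI]
    return PI[values.index(max(values))]


def optimality_cut(x, atoms):
    """E_{s+1} and e_{s+1} of (3.4)-(3.5) for atoms [(prob, h), ...] and T = I."""
    E = [0.0, 0.0]
    e = 0.0
    for prob, h in atoms:
        pi = dual_multiplier(x, h)
        E[0] += prob * pi[0]  # pi^T T with T = I
        E[1] += prob * pi[1]
        e += prob * (pi[0] * h[0] + pi[1] * h[1])
    return tuple(E), e


def lower_bound(x, atoms):
    """Q^L(x) = sum of prob * max_i pi_i^T (h - x) over the atoms."""
    return sum(
        prob * max(p0 * (h[0] - x[0]) + p1 * (h[1] - x[1]) for p0, p1 in PI)
        for prob, h in atoms
    )


if __name__ == "__main__":
    x_nu = (0.3, 0.3)
    atoms = [(0.65, (0.325, 0.5)), (0.35, (0.825, 0.5))]  # two-cell Jensen measure
    E, e = optimality_cut(x_nu, atoms)
    w = e - (E[0] * x_nu[0] + E[1] * x_nu[1])
    print("E:", E, "e:", e, "w:", w, "Q_L:", lower_bound(x_nu, atoms))
```

The resulting cut $\theta \ge e_{s+1} - E_{s+1} x$ is what Step 2 adds as constraint (3.3).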