Davidson K.R., Donsig A.P. Real Analysis with Real Applications


16.7 Subdifferentials and Directional Derivatives 593

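To show that $f$ is differentiable, it suffices to show that $f(a) + \langle x, \nabla f(a)\rangle$ approximates $f(a + x)$ to first order for $x$ near $0$ or, equivalently, that $g(x)/\|x\|$ tends to $0$ as $\|x\|$ tends to $0$, where $g(x) = f(a + x) - f(a) - \langle x, \nabla f(a)\rangle$. We use $e_1, \dots, e_n$ for the standard basis of $\mathbb{R}^n$.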

The fact that the $n$ partial derivatives exist means that for $1 \le i \le n$,

$$0 = \lim_{h\to 0} \frac{f(a + he_i) - f(a) - h\,\frac{\partial f}{\partial x_i}(a)}{h} = \lim_{h\to 0} \frac{f(a + he_i) - f(a) - \langle he_i, \nabla f(a)\rangle}{h} = \lim_{h\to 0} \frac{g(he_i)}{h}.$$

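Fix an $\varepsilon > 0$ and choose $r > 0$ so small that $|g(he_i)| \le \varepsilon|h|/n$ for $|h| \le r$ and $1 \le i \le n$. Take $x = (x_1, \dots, x_n)$ with $\|x\| \le r/n$. Then, using the convexity of $g$ and $|nx_i| \le n\|x\| \le r$,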

$$g(x) = g\Big(\frac{1}{n}\sum_{i=1}^n nx_ie_i\Big) \le \frac{1}{n}\sum_{i=1}^n g(nx_ie_i) \le \frac{1}{n}\sum_{i=1}^n \varepsilon|x_i| \le \varepsilon\|x\|.$$

Now

$$0 = g(0) = g\Big(\frac{x + (-x)}{2}\Big) \le \frac{1}{2}g(x) + \frac{1}{2}g(-x).$$

Thus $g(x) \ge -g(-x) \ge -\varepsilon\|x\|$. Therefore, $|g(x)| \le \varepsilon\|x\|$ on $B_{r/n}(0)$. As $\varepsilon > 0$ is arbitrary, this proves that $f$ is differentiable at $a$.

Assuming (2) that $f$ is differentiable at $a$, the function $f(a) + \langle x, \nabla f(a)\rangle$ approximates $f(a + x)$ to first order for $x$ near $0$, with error $g(x)$ satisfying $\lim_{\|x\|\to 0} g(x)/\|x\| = 0$.

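Compute

$$f'(a;d) = \lim_{h\to 0^+} \frac{f(a + hd) - f(a)}{h} = \lim_{h\to 0^+} \frac{h\langle d, \nabla f(a)\rangle + g(hd)}{h} = \langle d, \nabla f(a)\rangle + \lim_{h\to 0^+} \frac{g(hd)}{h} = \langle d, \nabla f(a)\rangle,$$

where the last limit vanishes because $\|hd\| = h\|d\|$ and $g(x)/\|x\| \to 0$.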

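Theorem 16.7.7 now says that $s \in \partial f(a)$ if and only if $\langle d, s\rangle \le \langle d, \nabla f(a)\rangle$ for all $d \in \mathbb{R}^n$. Equivalently, $\langle d, s - \nabla f(a)\rangle \le 0$ for all $d \in \mathbb{R}^n$. But unless $s = \nabla f(a)$, the left-hand side is a nonzero linear function of $d$ and so takes all real values. Therefore, $\partial f(a) = \{\nabla f(a)\}$.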

Finally, suppose that (3) holds and $\partial f(a) = \{s\}$. Then by Corollary 16.7.9, $f'(a;d) = \langle d, s\rangle$. In particular, $f'(a;-e_i) = -f'(a;e_i)$ and thus

$$\langle e_i, s\rangle = \lim_{h\to 0^+} \frac{f(a + he_i) - f(a)}{h} = \lim_{h\to 0^-} \frac{f(a + he_i) - f(a)}{h} = \frac{\partial f}{\partial x_i}(a).$$

Hence the partial derivatives of $f$ are defined at $a$, which proves (1). ∎
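A quick numerical illustration of the last step (a sketch, not part of the proof). It uses the hypothetical convex function $f(x, y) = |x| + y^2$, which does not appear in the text, and compares forward and backward difference quotients along $e_1$. At $(0, 0)$ they are $1$ and $-1$, so $\partial f/\partial x$ does not exist there and, by the theorem, $f$ is not differentiable at the origin. At $(1, 0)$ they agree. Finite differences only suggest the one-sided limits, and the step size `h` is an arbitrary choice.

```python
def f(x, y):
    # Hypothetical convex test function f(x, y) = |x| + y**2.
    return abs(x) + y * y


def one_sided_quotients(a, i, h=1e-6):
    """Forward and backward difference quotients of f at a along the basis vector e_i.

    For convex f these approximate f'(a; e_i) and -f'(a; -e_i); the partial
    derivative in coordinate i exists exactly when the two limits agree.
    """
    e = [0.0, 0.0]
    e[i] = 1.0
    fa = f(*a)
    forward = (f(a[0] + h * e[0], a[1] + h * e[1]) - fa) / h
    backward = (fa - f(a[0] - h * e[0], a[1] - h * e[1])) / h
    return forward, backward


print(one_sided_quotients((0.0, 0.0), 0))  # (1.0, -1.0): no partial derivative in x
print(one_sided_quotients((1.0, 0.0), 0))  # both quotients close to 1
```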

594 Convexity and Optimization

16.7.12. EXAMPLE. Let $Q$ be a positive definite $n \times n$ matrix, and let $q \in \mathbb{R}^n$ and $c \in \mathbb{R}$. Minimize the quadratic function $f(x) = \langle x, Qx\rangle + \langle x, q\rangle + c$.

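We compute the directional derivative, using $\langle x, Qd\rangle = \langle Qx, d\rangle$ since $Q$ is symmetric:

$$\begin{aligned}
f'(x;d) &= \lim_{h\to 0^+} \frac{f(x + hd) - f(x)}{h} \\
&= \lim_{h\to 0^+} \frac{\langle x + hd, Q(x + hd)\rangle + \langle x + hd, q\rangle - \langle x, Qx\rangle - \langle x, q\rangle}{h} \\
&= \lim_{h\to 0^+} \frac{1}{h}\big(\langle hd, Qx\rangle + \langle x, Qhd\rangle + \langle hd, Qhd\rangle + \langle hd, q\rangle\big) \\
&= \lim_{h\to 0^+} \big(\langle d, Qx\rangle + \langle Qx, d\rangle + h\langle d, Qd\rangle + \langle d, q\rangle\big) = \langle d, q + 2Qx\rangle.
\end{aligned}$$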

Thus $\nabla f(x) = q + 2Qx$. We solve $\nabla f(x) = 0$ to obtain the unique minimizer $x = -\frac{1}{2}Q^{-1}q$ with minimum value $f(-\frac{1}{2}Q^{-1}q) = -\frac{1}{4}\langle q, Q^{-1}q\rangle + c$.
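A minimal numerical sketch of this solution, assuming a $2 \times 2$ case so that $Q^{-1}$ can be written out by hand. The matrix $Q$, vector $q$ and constant $c$ below are hypothetical values chosen for illustration, and the helper names (`matvec`, `dot`, `quadratic`, `minimize_quadratic_2x2`) are ours. The code computes $x = -\frac{1}{2}Q^{-1}q$ and the value $c - \frac{1}{4}\langle q, Q^{-1}q\rangle$, and checks that $\nabla f(x) = q + 2Qx$ vanishes there.

```python
def matvec(Q, x):
    return [sum(Q[i][j] * x[j] for j in range(len(x))) for i in range(len(Q))]


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def quadratic(Q, q, c, x):
    """The quadratic f(x) = <x, Qx> + <x, q> + c."""
    return dot(x, matvec(Q, x)) + dot(x, q) + c


def minimize_quadratic_2x2(Q, q, c):
    """Minimizer -1/2 Q^{-1} q and minimum value c - 1/4 <q, Q^{-1} q> for a 2x2 Q."""
    det = Q[0][0] * Q[1][1] - Q[0][1] * Q[1][0]
    Q_inv = [[Q[1][1] / det, -Q[0][1] / det], [-Q[1][0] / det, Q[0][0] / det]]
    Q_inv_q = matvec(Q_inv, q)
    x_star = [-0.5 * t for t in Q_inv_q]
    return x_star, c - 0.25 * dot(q, Q_inv_q)


Q = [[2.0, 1.0], [1.0, 3.0]]  # hypothetical symmetric positive definite matrix
q = [1.0, -4.0]
c = 5.0
x_star, value = minimize_quadratic_2x2(Q, q, c)
grad = [qi + 2 * gi for qi, gi in zip(q, matvec(Q, x_star))]  # q + 2Qx, should vanish
print(x_star, value, quadratic(Q, q, c, x_star), grad)  # approximately [-0.7, 0.9] and 2.85
```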

This problem can also be solved using linear algebra. The spectral theorem for Hermitian matrices states that there is an orthonormal basis $v_1, \dots, v_n$ of eigenvectors that diagonalizes $Q$. Thus there are positive eigenvalues $d_1, \dots, d_n$ so that $Qv_i = d_iv_i$. Write $q$ in this basis as $q = q_1v_1 + \cdots + q_nv_n$. We also write a generic vector $x$ as $x = x_1v_1 + \cdots + x_nv_n$. Then

$$f(x) = c + \sum_{i=1}^n \big(d_ix_i^2 + q_ix_i\big) = c_0 + \sum_{i=1}^n d_i(x_i - a_i)^2,$$

where $a_i = -q_i/(2d_i)$ and $c_0 = c - \sum_{i=1}^n d_ia_i^2$.

Now observe by inspection that the minimum is achieved when $x_i = a_i$. To complete the circle, note that

$$x = \sum_{i=1}^n a_iv_i = -\frac{1}{2}\sum_{i=1}^n \frac{q_i}{d_i}v_i = -\frac{1}{2}Q^{-1}q$$

and the minimum value is

$$f(x) = c_0 = c - \sum_{i=1}^n d_i\Big(\frac{q_i}{2d_i}\Big)^2 = c - \sum_{i=1}^n \frac{q_i^2}{4d_i} = c - \frac{1}{4}\langle q, Q^{-1}q\rangle.$$
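The eigenbasis computation can also be sketched numerically. This is an illustration under stated assumptions, not the general method: it handles only a $2 \times 2$ symmetric matrix $Q = \begin{pmatrix} p & r \\ r & s \end{pmatrix}$ with $r \ne 0$, using closed-form eigenpairs, and the data ($p = 2$, $r = 1$, $s = 3$, $q = (1, -4)$, $c = 5$) are hypothetical. It forms $a_i = -q_i/(2d_i)$, $c_0 = c - \sum_i d_ia_i^2$ and $x = \sum_i a_iv_i$. For these data a hand computation gives $-\frac{1}{2}Q^{-1}q = (-0.7, 0.9)$ and $c - \frac{1}{4}\langle q, Q^{-1}q\rangle = 2.85$.

```python
import math


def eig_sym_2x2(p, r, s):
    """Eigenpairs (d_i, v_i) of the symmetric matrix [[p, r], [r, s]], assuming r != 0."""
    mean = (p + s) / 2
    radius = math.hypot((p - s) / 2, r)
    pairs = []
    for lam in (mean - radius, mean + radius):
        v = (lam - s, r)  # (Q - lam I) v = 0 because (p - lam)(s - lam) = r**2
        norm = math.hypot(*v)
        pairs.append((lam, (v[0] / norm, v[1] / norm)))
    return pairs


def minimize_via_eigenbasis(p, r, s, q, c):
    """Complete the square in an orthonormal eigenbasis; returns sum a_i v_i and c_0."""
    x = [0.0, 0.0]
    c0 = c
    for d_i, v in eig_sym_2x2(p, r, s):
        q_i = q[0] * v[0] + q[1] * v[1]  # coordinate of q along v_i
        a_i = -q_i / (2 * d_i)
        c0 -= d_i * a_i ** 2             # c_0 = c - sum_i d_i a_i^2
        x[0] += a_i * v[0]
        x[1] += a_i * v[1]
    return x, c0


# Hypothetical data: Q = [[2, 1], [1, 3]], q = (1, -4), c = 5.
x, c0 = minimize_via_eigenbasis(2.0, 1.0, 3.0, [1.0, -4.0], 5.0)
print(x, c0)  # approximately [-0.7, 0.9] and 2.85
```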

We finish this section with two of the calculus rules for subgradients. The

proofs are very similar, so we prove the first and leave the second as an exercise.

16.7.13. THEOREM. Suppose that $f_1, \dots, f_k$ are convex functions on $\mathbb{R}^n$ and set $f(x) = \max\{f_1(x), \dots, f_k(x)\}$. For $a \in \mathbb{R}^n$, set $J(a) = \{j : f_j(a) = f(a)\}$. Then $\partial f(a) = \operatorname{conv}\{\partial f_j(a) : j \in J(a)\}$.

PROOF. By Theorem 16.6.2, each $f_j$ is continuous. Thus there is an $\varepsilon > 0$ so that $f_j(x) < f(x)$ for all $\|x - a\| < \varepsilon$ and all $j \notin J(a)$. So for $x \in B_\varepsilon(a)$, $f(x) = \max\{f_j(x) : j \in J(a)\}$. Fix $d \in \mathbb{R}^n$ and note that $f(a + hd)$ depends only


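on $f_j(a + hd)$ for $j \in J(a)$ when $|h| < \varepsilon/\|d\|$. Thus, using Corollary 16.7.9 and $f_j(a) = f(a)$ for $j \in J(a)$,

$$\begin{aligned}
f'(a;d) &= \lim_{h\to 0^+} \frac{f(a + hd) - f(a)}{h} = \lim_{h\to 0^+} \max_{j\in J(a)} \frac{f_j(a + hd) - f_j(a)}{h} \\
&= \max_{j\in J(a)} f_j'(a;d) = \max_{j\in J(a)} \sup\{\langle d, s_j\rangle : s_j \in \partial f_j(a)\} \\
&= \sup\Big\{\langle d, s\rangle : s \in \bigcup_{j\in J(a)} \partial f_j(a)\Big\} = \sup\big\{\langle d, s\rangle : s \in \operatorname{conv}\{\partial f_j(a) : j \in J(a)\}\big\}.
\end{aligned}$$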

This shows that $f'(a;\cdot)$ is the support function of the compact convex set $\operatorname{conv}\{\partial f_j(a) : j \in J(a)\}$. However, by Corollary 16.7.9, $f'(a;\cdot)$ is the support function of $\partial f(a)$. So by the Support Function Lemma (Lemma 16.7.8), these two sets are equal. ∎
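For affine pieces the theorem can be checked directly. In the sketch below each $f_j(x) = \langle s_j, x\rangle + b_j$ is affine (a hypothetical choice made for illustration), so $\partial f_j(a) = \{s_j\}$ and the theorem gives $\partial f(a) = \operatorname{conv}\{s_j : j \in J(a)\}$, whose support function is $d \mapsto \max_{j\in J(a)} \langle d, s_j\rangle$. The code compares this formula for $f'(a;d)$ with a forward difference quotient. The helper names are ours, and the code indexes pieces from $0$.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def f_max(S, b, x):
    """f(x) = max_j f_j(x) with affine pieces f_j(x) = <s_j, x> + b_j."""
    return max(dot(s, x) + bj for s, bj in zip(S, b))


def active_set(S, b, a, tol=1e-12):
    """J(a) = {j : f_j(a) = f(a)}."""
    fa = f_max(S, b, a)
    return [j for j in range(len(S)) if abs(dot(S[j], a) + b[j] - fa) <= tol]


def f_prime_formula(S, b, a, d):
    """f'(a; d) as the support function of conv{s_j : j in J(a)}: the max over active j."""
    return max(dot(d, S[j]) for j in active_set(S, b, a))


def f_prime_numeric(S, b, a, d, h=1e-7):
    """Forward difference quotient approximating f'(a; d)."""
    ah = [ai + h * di for ai, di in zip(a, d)]
    return (f_max(S, b, ah) - f_max(S, b, a)) / h


# Hypothetical pieces x, -x, y and the constant -1/2; the first three are active at the origin.
S = [(1.0, 0.0), (-1.0, 0.0), (0.0, 1.0), (0.0, 0.0)]
b = [0.0, 0.0, 0.0, -0.5]
a = (0.0, 0.0)
print(active_set(S, b, a))  # [0, 1, 2]
for d in [(1.0, 2.0), (-3.0, 1.0), (0.5, -1.0)]:
    print(d, f_prime_formula(S, b, a, d), f_prime_numeric(S, b, a, d))
```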

16.7.14. THEOREM. Suppose that $f_1$ and $f_2$ are convex functions on $\mathbb{R}^n$ and $\lambda_1$ and $\lambda_2$ are positive real numbers. Then for $a \in \mathbb{R}^n$,

$$\partial(\lambda_1f_1 + \lambda_2f_2)(a) = \lambda_1\partial f_1(a) + \lambda_2\partial f_2(a).$$
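In one variable the sum rule can be checked by hand, since for a convex function on $\mathbb{R}$ the subdifferential at a point is the closed interval between its left and right derivatives. The sketch below uses the hypothetical choice $f_1(x) = |x|$, $f_2(x) = |x - 1|$, $\lambda_1 = 2$, $\lambda_2 = 1/2$ at $a = 0$, where $\partial f_1(0) = [-1, 1]$ and $\partial f_2(0) = \{-1\}$. It compares $\lambda_1\partial f_1(0) + \lambda_2\partial f_2(0) = [-5/2, 3/2]$ with numerically estimated one-sided derivatives of $\lambda_1f_1 + \lambda_2f_2$.

```python
def one_sided_derivatives(f, a, h=1e-7):
    """Approximate the left and right derivatives of a convex f: R -> R at a."""
    left = (f(a) - f(a - h)) / h
    right = (f(a + h) - f(a)) / h
    return left, right


def scaled_interval_sum(lam1, I1, lam2, I2):
    """Minkowski sum lam1*I1 + lam2*I2 of closed intervals, for lam1, lam2 > 0."""
    return (lam1 * I1[0] + lam2 * I2[0], lam1 * I1[1] + lam2 * I2[1])


lam1, lam2 = 2.0, 0.5


def f(x):
    # Hypothetical example: f = lam1 * |x| + lam2 * |x - 1|.
    return lam1 * abs(x) + lam2 * abs(x - 1.0)


# At a = 0 the subdifferential of |x| is [-1, 1] and that of |x - 1| is {-1}.
predicted = scaled_interval_sum(lam1, (-1.0, 1.0), lam2, (-1.0, -1.0))
print(predicted, one_sided_derivatives(f, 0.0))  # (-2.5, 1.5) and approximately (-2.5, 1.5)
```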

Exercises for Section 16.7

A. Show that $f'(a;d)$ is sublinear in $d$: $f'(a;\lambda d + \mu e) \le \lambda f'(a;d) + \mu f'(a;e)$ for all $d, e \in \mathbb{R}^n$ and $\lambda, \mu \in [0, \infty)$.

B. Suppose that $f$ is a convex function on a convex subset $A \subset \mathbb{R}^n$. If $a, b \in A$ and $s \in \partial f(a)$, $t \in \partial f(b)$, show that $\langle s - t, a - b\rangle \ge 0$.

C. Give an example of a convex set $A \subset \mathbb{R}^n$, a convex function $f$ on $A$ and a point $a \in A$ such that $\partial f(a)$ is empty.

D. Compute the subdifferential for the norm $\|x\|_\infty = \max\{|x_1|, \dots, |x_n|\}$.

E. Let $g$ be a convex function on $\mathbb{R}$, and define a function on $\mathbb{R}^2$ by $G(x, y) = g(x) - y$.

(a) Compute the directional derivatives of the function $G$ at a point $(a, b)$.

(b) Use Theorem 16.7.7 to evaluate $\partial G(a, b)$. Check your answer against Example 16.7.4.

F. Prove Theorem 16.7.14 (compute $\partial f(a)$ when $f = \lambda_1f_1 + \lambda_2f_2$) as follows:

(a) Show that $f'(a;d) = \lambda_1f_1'(a;d) + \lambda_2f_2'(a;d)$.

(b) Apply Corollary 16.7.9 to both sides.

(c) Show that $\partial f(a) = \lambda_1\partial f_1(a) + \lambda_2\partial f_2(a)$.

G. Given convex functions $f$ on $\mathbb{R}^n$ and $g$ on $\mathbb{R}^m$, define $h$ on $\mathbb{R}^n \times \mathbb{R}^m$ by $h(x, y) = f(x) + g(y)$. Show that $\partial h(x, y) = \partial f(x) \times \partial g(y)$.

HINT: $h = F + G$, where $F(x, y) = f(x)$ and $G(x, y) = g(y)$.

H. Suppose $S$ is any nonempty subset of $\mathbb{R}^n$. We may still define the support function of $S$ by $\sigma_S(x) = \sup\{\langle s, x\rangle : s \in S\}$, but it may sometimes take the value $+\infty$.

(a) If $A = \overline{\operatorname{conv}}(S)$, show that $\sigma_S = \sigma_A$.

(b) Show that $\sigma_S$ is convex.

(c) Show that $\sigma_S$ is finite valued everywhere if and only if $S$ is bounded.


I. Consider a convex function $f$ on a convex set $A \subset \mathbb{R}^n$ and $a \ne b \in \operatorname{ri}(A)$.

(a) Define $g$ on $[0, 1]$ by $g(\lambda) = f(\lambda a + (1 - \lambda)b)$. If $x_\lambda = \lambda a + (1 - \lambda)b$, then show that $\partial g(\lambda) = \{\langle m, a - b\rangle : m \in \partial f(x_\lambda)\}$.

(b) Use the Convex Mean Value Theorem (Exercise 16.5.K) and part (a) to show that there are $\lambda \in (0, 1)$ and $s \in \partial f(x_\lambda)$ so that $f(b) - f(a) = \langle s, b - a\rangle$.

J. Define a local subgradient of a convex function $f$ on a convex set $A \subset \mathbb{R}^n$ at $a \in A$ to be a vector $s$ so that $f(x) \ge f(a) + \langle x - a, s\rangle$ for all $x \in A \cap B_r(a)$ for some $r > 0$. Show that if $s$ is a local subgradient, then it is a subgradient in the usual sense.

K. (a) If $f_k$ are convex functions on a convex subset $A \subset \mathbb{R}^n$ converging pointwise to $f$ and $s_k \in \partial f_k(x_0)$ converge to $s$, prove that $s \in \partial f(x_0)$.

(b) Show that in general, it is not true that every $s \in \partial f(x_0)$ is obtained as such a limit by considering $f_k(x) = \sqrt{x^2 + 1/k}$ on $\mathbb{R}$.

L. Let $h = f \mathbin{\square} g$ be the infimal convolution of two convex functions $f$ and $g$ on $\mathbb{R}^n$.

(a) Suppose that there are points $x_0 = x_1 + x_2$ such that $h(x_0) = f(x_1) + g(x_2)$. Prove that $\partial h(x_0) = \partial f(x_1) \cap \partial g(x_2)$.

HINT: $s \in \partial h(x_0) \iff f(y) + g(z) \ge f(x_1) + g(x_2) + \langle s, y + z - x_0\rangle$ for all $y, z$. Take $y = x_1$ or $z = x_2$.

(b) If (a) holds and $g$ is differentiable, show that $h$ is differentiable at $x_0$.

HINT: Theorem 16.7.11

M. Moreau–Yosida. Let $f$ be a convex function on an open convex subset $A$ of $\mathbb{R}^n$. For $k \ge 1$, define $f_k(x) = f \mathbin{\square} (k\|\cdot\|^2)(x) = \inf_y \big(f(y) + k\|x - y\|^2\big)$.

(a) Show that $f_k \le f_{k+1} \le f$.

(b) Show that $x_0$ is a minimizer for $f$ if and only if it is a minimizer for every $f_k$.

HINT: If $f(x_0) = f(x_1) + \varepsilon$, find $r > 0$ so that $f(x) \ge f(x_1) + \varepsilon/2$ on $B_r(x_0)$.

(c) Prove that $f_k$ converges to $f$.

HINT: If $L$ is a Lipschitz constant on some ball about $x_0$, estimate $f_k$ inside and outside $B_{L/\sqrt{k}}(x_0)$ separately.

(d) Prove that $f_k$ is differentiable for all $k \ge 1$.

HINT: Use the previous exercise.

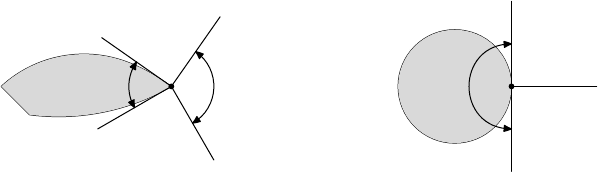

16.8. Tangent and Normal Cones

In this section, we study two special cones associated to a convex subset of $\mathbb{R}^n$. We develop only a small portion of their theory, since our purpose is to set the stage for our minimization results, and our results are all related to that specific goal.

16.8.1. DEFINITION. Consider a convex set $A \subset \mathbb{R}^n$ and $a \in A$. Define the cone $C_A(a) = \mathbb{R}_+(A - a)$ generated by $A - a$. The tangent cone to $A$ at $a$ is the closed cone $T_A(a) = \overline{C_A(a)} = \overline{\mathbb{R}_+(A - a)}$. The normal cone to $A$ at $a$ is defined to be $N_A(a) = \{s \in \mathbb{R}^n : \langle s, x - a\rangle \le 0 \text{ for all } x \in A\}$.

It is routine to verify that $T_A(a)$ and $N_A(a)$ are closed cones. The cone $C_A(a)$ is only used as a tool for working with $T_A(a)$. Notice that $\langle s, x - a\rangle \le 0$ implies

16.8 Tangent and Normal Cones 597

that $\langle s, \lambda(x - a)\rangle \le 0$ for all $\lambda > 0$. Thus $s \in N_A(a)$ satisfies $\langle s, d\rangle \le 0$ for all $d \in C_A(a)$. Since the inner product is continuous, the inequality also holds for $d \in T_A(a)$.

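[Figure 16.6: Two examples of tangent and normal cones. Shown are a set $A$ with the translated cones $a + T_A(a)$ and $a + N_A(a)$ at a point $a$, and a set $B$ with $b + T_B(b)$ and $b + N_B(b)$ at a point $b$.]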

16.8.2. EXAMPLE. As a motivating example, let

$$A = \{(x, y) : x \ge 0,\ y > 0,\ x^2 + y^2 < 1\} \cup \{(0, 0)\} \subset \mathbb{R}^2.$$

Then $C_A((0, 0)) = \{(x, y) : x \ge 0,\ y > 0\} \cup \{(0, 0)\}$, so the tangent cone is $T_A((0, 0)) = \{(x, y) : x, y \ge 0\}$. At the boundary points $(0, y)$ for $y \in (0, 1)$, $C_A((0, y)) = T_A((0, y)) = \{(u, v) : u \ge 0\}$. Finally, at points $(x, y) \in \operatorname{int} A$, $C_A((x, y)) = T_A((x, y)) = \mathbb{R}^2$.

The normal cone gets smaller as the tangent cone increases in size. Here we have $N_A((0, 0)) = \{(a, b) : a, b \le 0\}$, $N_A((0, y)) = \{(a, 0) : a \le 0\}$ for $y \in (0, 1)$ and $N_A((x, y)) = \{0\}$ for $(x, y) \in \operatorname{int} A$.

You may find it useful to draw pictures like Figure 16.6 for various points in A.
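The normal cones in this example can also be sanity-checked by sampling. The sketch below (the helper names are ours) tests the defining inequality $\langle s, x - a\rangle \le 0$ at grid points of $A$. A finite sample can only refute membership in $N_A(a)$, never prove it, so this is an illustration rather than a verification.

```python
def in_A(x, y):
    """Membership in A = {x >= 0, y > 0, x^2 + y^2 < 1} together with the origin."""
    return (x, y) == (0.0, 0.0) or (x >= 0 and y > 0 and x * x + y * y < 1)


def sample_A(step=0.05):
    """Grid points of [0, 1]^2 that lie in A."""
    n = round(1 / step)
    grid = [(i * step, j * step) for i in range(n + 1) for j in range(n + 1)]
    return [p for p in grid if in_A(*p)]


def passes_normal_test(s, a, points):
    """Necessary condition for s in N_A(a): <s, x - a> <= 0 at every sampled x."""
    return all(s[0] * (x - a[0]) + s[1] * (y - a[1]) <= 1e-12 for x, y in points)


pts = sample_A()
print(passes_normal_test((-1.0, -2.0), (0.0, 0.0), pts))  # True: lies in {(a, b) : a, b <= 0}
print(passes_normal_test((1.0, 0.0), (0.0, 0.0), pts))    # False
print(passes_normal_test((-1.0, 0.0), (0.0, 0.5), pts))   # True: lies in {(a, 0) : a <= 0}
print(passes_normal_test((-1.0, 0.1), (0.0, 0.5), pts))   # False: second entry must vanish
```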

Let us formalize the procedure that produced the normal cone.

16.8.3. DEFINITION. Given a nonempty subset $A$ of $\mathbb{R}^n$, the polar cone of $A$, denoted $A^\circ$, is $A^\circ = \{s \in \mathbb{R}^n : \langle a, s\rangle \le 0 \text{ for all } a \in A\}$.

It is easy to verify that $A^\circ$ is a closed cone. It is evident from the previous definition that $N_A(a) = T_A(a)^\circ$.

We need the following consequence of the Separation Theorem.

16.8.4. BIPOLAR THEOREM. If $C$ is a closed convex cone in $\mathbb{R}^n$, then $C^{\circ\circ} = C$.

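PROOF. From the definition, $C^{\circ\circ} = \{d : \langle d, s\rangle \le 0 \text{ for all } s \in C^\circ\}$. This clearly includes $C$. Conversely, suppose that $x \notin C$. Applying the Separation Theorem (Theorem 16.3.3) to the closed convex set $C$, there is a vector $s$ and scalar $\alpha$ so that $\langle c, s\rangle \le \alpha$ for all $c \in C$ and $\langle x, s\rangle > \alpha$. Since $C = \mathbb{R}_+C$, the set of values $\{\langle c, s\rangle : c \in C\}$ is a cone in $\mathbb{R}$. Since this set is bounded above by $\alpha$, it is either $\mathbb{R}_-$ or $\{0\}$; in particular, $\alpha \ge 0$. Hence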


$\langle c, s\rangle \le 0 \le \alpha < \langle x, s\rangle$ for $c \in C$. Consequently, $s$ belongs to $C^\circ$. Therefore, $x$ is not in $C^{\circ\circ}$. So $C^{\circ\circ} = C$. ∎

Since $N_A(a) = T_A(a)^\circ$, we obtain the following:

16.8.5. COROLLARY. Let $A$ be a convex subset of $\mathbb{R}^n$ and $a \in A$. Then the normal and tangent cones at $a$ are polar to each other, namely $N_A(a) = T_A(a)^\circ$ and $T_A(a) = N_A(a)^\circ$.

16.8.6. EXAMPLE. Let $s_1, \dots, s_m$ be vectors in $\mathbb{R}^n$. Consider the convex polyhedron given as $A = \{x \in \mathbb{R}^n : \langle x, s_j\rangle \le r_j,\ 1 \le j \le m\}$. What are the tangent and normal cones at $a \in A$?

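Fix $a \in A$. Set $J(a) = \{j : \langle a, s_j\rangle = r_j\}$. For example, this set is empty if $a \in \operatorname{int}(A)$ and every $s_j$ is nonzero. Then

$$\begin{aligned}
C_A(a) &= \{d = t(x - a) : \langle x, s_j\rangle \le r_j \text{ for all } j \text{ and some } t \ge 0\} \\
&= \{d : \langle d/t + a, s_j\rangle \le r_j \text{ for all } j \text{ and some } t > 0\} \\
&= \{d : \langle d, s_j\rangle \le t(r_j - \langle a, s_j\rangle) \text{ for all } j \text{ and some } t \ge 0\}.
\end{aligned}$$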

If $r_j - \langle a, s_j\rangle > 0$, this is no constraint, since $t$ may be taken as large as we like; so $C_A(a) = \{d : \langle d, s_j\rangle \le 0,\ j \in J(a)\}$. This is closed, and thus $T_A(a) = C_A(a)$.

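Note that $\{d : \langle d, s\rangle \le 0\}^\circ = \mathbb{R}_+s$. Indeed, $(\mathbb{R}_+s)^\circ = \{d : \langle d, s\rangle \le 0\}$, so the result follows from the Bipolar Theorem. Now Exercise 16.8.J tells us that

$$N_A(a) = \Big(\bigcap_{j\in J(a)} \{d : \langle d, s_j\rangle \le 0\}\Big)^\circ = \sum_{j\in J(a)} \mathbb{R}_+s_j = \operatorname{cone}\{s_j : j \in J(a)\}.$$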

Indeed, without the exercise, we can see that

$$\operatorname{cone}\{s_j : j \in J(a)\}^\circ = \{d : \langle d, s_j\rangle \le 0,\ j \in J(a)\} = T_A(a).$$

Therefore, by Corollary 16.8.5 and the Bipolar Theorem (a finitely generated cone is closed),

$$N_A(a) = T_A(a)^\circ = \operatorname{cone}\{s_j : j \in J(a)\}^{\circ\circ} = \operatorname{cone}\{s_j : j \in J(a)\}.$$
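For a concrete polyhedron the recipe reduces to checking active constraints. The sketch below assumes the hypothetical data $s_1 = (1, 0)$, $s_2 = (0, 1)$, $s_3 = (-1, 0)$, $s_4 = (0, -1)$ and $r = (1, 1, 0, 0)$, so that $A$ is the unit square. The code indexes constraints from $0$. It computes $J(a)$, tests $d \in T_A(a)$ via $\langle d, s_j\rangle \le 0$ for $j \in J(a)$, and tests $s \in N_A(a)$ directly from the definition, which for a polytope only needs the vertices because $x \mapsto \langle s, x - a\rangle$ is affine.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


# Hypothetical polyhedron: the unit square [0, 1]^2 written as <x, s_j> <= r_j.
S = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.0, -1.0)]
r = [1.0, 1.0, 0.0, 0.0]
VERTICES = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]


def active(a, tol=1e-12):
    """J(a) = {j : <a, s_j> = r_j}."""
    return [j for j in range(len(S)) if abs(dot(a, S[j]) - r[j]) <= tol]


def in_tangent_cone(a, d):
    """d is in T_A(a) iff <d, s_j> <= 0 for every j in J(a)."""
    return all(dot(d, S[j]) <= 0 for j in active(a))


def in_normal_cone(a, s):
    """s is in N_A(a) iff <s, x - a> <= 0 on A; for a polytope the vertices suffice."""
    return all(dot(s, (v[0] - a[0], v[1] - a[1])) <= 1e-12 for v in VERTICES)


a = (1.0, 1.0)
print(active(a))                         # [0, 1]
print(in_tangent_cone(a, (-1.0, -2.0)))  # True
print(in_tangent_cone(a, (1.0, -1.0)))   # False
print(in_normal_cone(a, (2.0, 3.0)))     # True: 2 s_0 + 3 s_1 lies in cone{s_0, s_1}
print(in_normal_cone(a, (1.0, -1.0)))    # False
```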

We need to compute the tangent and normal cones for a convex set A given as

the sublevel set of a convex function.

16.8.7. LEMMA. Let $A$ be a compact convex subset of $\mathbb{R}^n$ that does not contain the origin $0$. Then the cone $\mathbb{R}_+A$ is closed.


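PROOF. Suppose that $a_k \in A$ and $\lambda_k \ge 0$ and that $c = \lim_{k\to\infty} \lambda_ka_k$ is a point in $\overline{\mathbb{R}_+A}$. From the compactness of $A$, we deduce that there is a subsequence $(k_i)$ so that $a_0 = \lim_{i\to\infty} a_{k_i}$ exists in $A$. Because $\|a_0\| \ne 0$,

$$\lambda_0 := \frac{\|c\|}{\|a_0\|} = \lim_{i\to\infty} \frac{\|\lambda_{k_i}a_{k_i}\|}{\|a_{k_i}\|} = \lim_{i\to\infty} \lambda_{k_i}.$$

Therefore, $c = \lim_{i\to\infty} \lambda_{k_i}a_{k_i} = \lambda_0a_0$ belongs to $\mathbb{R}_+A$. ∎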

16.8.8. THEOREM. Let $g$ be a convex function on $\mathbb{R}^n$, and let $A$ be the convex sublevel set $\{x : g(x) \le 0\}$. Assume that there is a point $x$ with $g(x) < 0$. If $a \in \mathbb{R}^n$ satisfies $g(a) = 0$, then

$$T_A(a) = \{d \in \mathbb{R}^n : g'(a;d) \le 0\} \quad\text{and}\quad N_A(a) = \mathbb{R}_+\partial g(a).$$

PROOF. Let $C = \{d \in \mathbb{R}^n : g'(a;d) \le 0\}$, which is a closed cone. Suppose that $d \in A - a$. Then $[a, a + d]$ is contained in $A$ and thus $g(a + hd) - g(a) \le 0$ for $0 < h \le 1$. So $g'(a;d) \le 0$ and hence $d \in C$. As $C$ is a closed cone, it follows that $C$ contains $\overline{\mathbb{R}_+(A - a)} = T_A(a)$.

Choose $x \in A$ with $g(x) < 0$, and set $d = x - a$. Then

$$g'(a;d) = \inf_{h>0} \frac{g(a + hd) - g(a)}{h} \le \frac{g(a + d) - g(a)}{1} < 0.$$

Hence by Lemma 16.6.5, $\operatorname{int} C = \{d : g'(a;d) < 0\}$ is nonempty and moreover $C = \overline{\operatorname{int} C}$.

Let $d \in \operatorname{int} C$. Since $g'(a;d) < 0$, there is some $h > 0$ so that $g(a + hd) < 0$. Consequently, $a + hd$ belongs to $A$ and $d \in \mathbb{R}_+(A - a) \subset T_A(a)$. So $\operatorname{int} C$ is a subset of $T_A(a)$. Thus $C = \overline{\operatorname{int} C}$ is contained in $T_A(a)$, and the two cones agree.

By Corollary 16.7.9, $g'(a;d) = \sup\{\langle d, s\rangle : s \in \partial g(a)\}$. Thus $d \in T_A(a)$ if and only if $\langle d, s\rangle \le 0$ for all $s \in \partial g(a)$; that is, $T_A(a)$ is by definition the polar cone $\partial g(a)^\circ$. Hence by the Bipolar Theorem (Theorem 16.8.4), the polar $N_A(a)$ of $T_A(a)$ is the closed cone generated by $\partial g(a)$. Note that $0 \notin \partial g(a)$ because $a$ is not a minimizer of $g$ (Proposition 16.7.2). Therefore, by Lemma 16.8.7, $N_A(a)$ is just $\mathbb{R}_+\partial g(a)$. ∎
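As an illustration of the theorem, take the hypothetical constraint $g(x) = \|x\|^2 - 1$ on $\mathbb{R}^2$, so $A$ is the closed unit disc and $g(0) < 0$ supplies the required point. At the boundary point $a = (0.6, 0.8)$, $g$ is differentiable, so $\partial g(a) = \{2a\}$, $T_A(a) = \{d : \langle d, a\rangle \le 0\}$ and $N_A(a) = \mathbb{R}_+a$. The sketch compares $g'(a;d) = \langle d, 2a\rangle$ with a difference quotient and reports whether $d \in T_A(a)$. The step size `h` is an arbitrary choice.

```python
def g(x):
    # Hypothetical constraint function: A = {g <= 0} is the closed unit disc and g(0) = -1 < 0.
    return x[0] ** 2 + x[1] ** 2 - 1.0


def g_prime_numeric(a, d, h=1e-7):
    """Forward difference approximation of g'(a; d)."""
    return (g((a[0] + h * d[0], a[1] + h * d[1])) - g(a)) / h


a = (0.6, 0.8)               # g(a) = 0 up to rounding, so a is a boundary point of A
grad = (2 * a[0], 2 * a[1])  # g is differentiable, so its subdifferential at a is {grad}


def g_prime(d):
    """g'(a; d) = <d, grad g(a)>."""
    return grad[0] * d[0] + grad[1] * d[1]


for d in [(-1.0, 0.0), (0.0, -1.0), (1.0, 1.0), (0.3, -0.1)]:
    # By Theorem 16.8.8, d lies in T_A(a) exactly when g'(a; d) <= 0.
    print(d, g_prime(d), g_prime_numeric(a, d), g_prime(d) <= 0)
```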

Exercises for Section 16.8

A. Show that $T_A(a)$ and $N_A(a)$ are closed convex cones.

B. For a point $v \in \mathbb{R}^n$, show that $v \in N_A(a)$ if and only if $P_A(a + v) = a$.

C. If $C$ is a closed cone, show that $T_C(0) = C$ and $N_C(0) = C^\circ$.

D. Suppose that $A \subset \mathbb{R}^n$ is convex and $f$ is a convex function on $A$. Prove that $x_0 \in A$ is a minimizer for $f$ in $A$ if and only if $f'(x_0;d) \ge 0$ for all $d \in T_A(x_0)$.


E. Let $f(x, y) = (x - y^2)(x - 2y^2)$. Show that $(0, 0)$ is not a minimizer of $f$ on $\mathbb{R}^2$ yet $f'((0, 0);d) \ge 0$ for all $d \in \mathbb{R}^2$. Why does this not contradict the previous exercise?

F. If $C_1 \subset C_2$, show that $C_2^\circ \subset C_1^\circ$.

G. If $A$ is a subspace of $\mathbb{R}^n$, show that $A^\circ$ is the orthogonal complement of $A$.

H. Suppose that $a_1, \dots, a_r$ are vectors in $\mathbb{R}^n$. Compute $\operatorname{conv}(\{a_1, \dots, a_r\})^\circ$.

I. If $A$ is a convex subset of $\mathbb{R}^n$, show that $A^\circ = \{0\}$ if and only if $0 \in \operatorname{int}(A)$.

HINT: Use the Separation Theorem and Support Theorem.

J. Suppose that $C_1$ and $C_2$ are closed cones in $\mathbb{R}^n$.

(a) Show that $(C_1 + C_2)^\circ = C_1^\circ \cap C_2^\circ$.

(b) Show that $(C_1 \cap C_2)^\circ = \overline{C_1^\circ + C_2^\circ}$.

HINT: Use the Bipolar Theorem and part (a).

K. Given a convex function $f$ on $\mathbb{R}^n$, define $g$ on $\mathbb{R}^{n+1}$ by $g(x, r) = f(x) - r$ and $A = \operatorname{epi}(f) = \{(x, r) : f(x) \le r\}$. Use Theorem 16.8.8 to verify

(a) $T_A\big((x, f(x))\big) = \{(d, p) : f'(x;d) \le p\}$,

(b) $\operatorname{int} T_A\big((x, f(x))\big) = \{(d, p) : f'(x;d) < p\}$, and

(c) $N_A\big((x, f(x))\big) = \mathbb{R}_+\big[\partial f(x) \times \{-1\}\big]$.

(d) For $n = 1$, explain the last equation geometrically.

L. For a convex subset $A \subset \mathbb{R}^n$, show that the following are equivalent for $x \in A$:

(1) $x \in \operatorname{ri}(A)$.

(2) $T_A(x)$ is a subspace.

(3) $N_A(x)$ is a subspace.

(4) $y \in N_A(x)$ implies that $-y \in N_A(x)$.

M. (a) Suppose $C \subset \mathbb{R}^n$ is a closed convex cone and $x \notin C$. Show that $y \in C$ is the closest vector to $x$ if and only if $x - y \in C^\circ$ and $\langle y, x - y\rangle = 0$.

HINT: Recall Theorem 16.3.1. Expand $\|x - y\|^2$.

(b) Hence deduce that $x = P_C(x) + P_{C^\circ}(x)$.

N. Give an example of two closed cones in $\mathbb{R}^3$ whose sum is not closed.

HINT: Let $C_i = \operatorname{cone}\{(x, y, 1) : (x, y) \in A_i\}$, where $A_1$ and $A_2$ come from Exercise 16.2.G(c).

O. A polyhedral cone in $\mathbb{R}^n$ is a set $A\mathbb{R}^m_+ = \{Ax : x \in \mathbb{R}^m_+\}$ for some matrix $A$ mapping $\mathbb{R}^m$ into $\mathbb{R}^n$. Show that $(A\mathbb{R}^m_+)^\circ = \{y \in \mathbb{R}^n : A^ty \le 0\}$, where $A^t$ is the transpose of $A$ and $z \le 0$ means $z_i \le 0$ for $1 \le i \le m$.

P. Suppose $A \subset \mathbb{R}^n$ and $B \subset \mathbb{R}^m$ are convex sets. If $(a, b) \in A \times B$, then show that $T_{A\times B}(a, b) = T_A(a) \times T_B(b)$ and $N_{A\times B}(a, b) = N_A(a) \times N_B(b)$.

Q. Suppose that $A_1$ and $A_2$ are convex sets and $a \in A_1 \cap A_2$.

(a) Show that $T_{A_1\cap A_2}(a) \subset T_{A_1}(a) \cap T_{A_2}(a)$.

(b) Give an example where this inclusion is proper.

HINT: Find a convex set $A$ in $\mathbb{R}^2$ such that the positive $y$-axis $Y_+$ is contained in $T_A(0)$ but $A \cap Y_+ = \{(0, 0)\}$.

16.9 Constrained Minimization 601

16.9. Constrained Minimization

The goal of this section is to characterize the minimizers of a convex function subject to constraints that limit the domain of the function to a convex set. Generally, this convex set is not explicitly described but is given as the intersection of sublevel sets. That is, we are only interested in minimizers in some specified convex set. The first theorem characterizes such minimizers abstractly, using the normal cone of the constraint set and the subdifferentials of the function. If the constraint is given as the intersection of sublevel sets of convex functions, these conditions may be described explicitly in terms of subgradients analogous to the Lagrange multiplier conditions of multivariable calculus. Finally, we present another characterization in terms of saddlepoints.

We will only consider convex functions that are defined on all of $\mathbb{R}^n$, rather than a convex subset. This is not as restrictive as it might seem. Exercise 16.9.H will guide you through a proof that any convex function satisfying a Lipschitz condition on a convex set $A$ extends to a convex function on all of $\mathbb{R}^n$. There are convex functions that cannot be extended. For example, $f(x) = -\sqrt{x - x^2}$ on $[0, 1]$ is convex, but cannot be extended to all of $\mathbb{R}$ because the derivative blows up at $0$ and $1$.

We begin with the problem of minimizing a convex function $f$ defined on $\mathbb{R}^n$ over a convex subset $A$. A point $x$ in $A$ is called a feasible point.

16.9.1. THEOREM. Suppose that $A \subset \mathbb{R}^n$ is convex and that $f$ is a convex function on $\mathbb{R}^n$. Then the following are equivalent for $a \in A$:

(1) $a$ is a minimizer for $f|_A$.

(2) $f'(a;d) \ge 0$ for all $d \in T_A(a)$.

(3) $0 \in \partial f(a) + N_A(a)$.

PROOF. First assume (3) that $0 \in \partial f(a) + N_A(a)$; so there is a vector $s \in \partial f(a)$ such that $-s \in N_A(a)$. Recall that $N_A(a) = \{v : \langle x - a, v\rangle \le 0 \text{ for all } x \in A\}$.

Hence $\langle x - a, s\rangle \ge 0$ for $x \in A$. Now use the fact that $s \in \partial f(a)$ to obtain

$$f(x) \ge f(a) + \langle x - a, s\rangle \ge f(a).$$

Therefore, $a$ is a minimizer for $f$ on $A$. So (3) implies (1).

Assume (1) that $a$ is a minimizer for $f$ on $A$. Let $x \in A$ and set $d = x - a$. Then $[a, x] = \{a + hd : 0 \le h \le 1\}$ is contained in $A$. So $f(a + hd) \ge f(a)$ for $h \in [0, 1]$ and thus

$$f'(a;d) = \lim_{h\to 0^+} \frac{f(a + hd) - f(a)}{h} \ge 0.$$

Because $f'(a;\cdot)$ is positively homogeneous and is nonnegative on $A - a$, it follows that $f'(a;d) \ge 0$ for $d$ in the cone $\mathbb{R}_+(A - a)$. But $f'(a;\cdot)$ is defined on all of $\mathbb{R}^n$, and hence is continuous by Theorem 16.6.2. Therefore, $f'(a;\cdot) \ge 0$ on the closure $T_A(a) = \overline{\mathbb{R}_+(A - a)}$. This establishes (2).


By Theorem 16.7.3, since $a$ is in the interior of $\mathbb{R}^n$, the subdifferential $\partial f(a)$ is a nonempty compact convex set. Thus the sum $\partial f(a) + N_A(a)$ is closed and convex by Exercise 16.2.G. Suppose that (3) fails: $0 \notin \partial f(a) + N_A(a)$. Then we may apply the Separation Theorem 16.3.3 to produce a vector $d$ and scalar $\alpha$ so that

$$\sup\{\langle s + n, d\rangle : s \in \partial f(a),\ n \in N_A(a)\} \le \alpha < \langle 0, d\rangle = 0.$$

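It must be the case that $\langle n, d\rangle \le 0$ for $n \in N_A(a)$, for if $\langle n, d\rangle > 0$, then, since $\lambda n \in N_A(a)$ for all $\lambda > 0$,

$$\langle s + \lambda n, d\rangle = \langle s, d\rangle + \lambda\langle n, d\rangle > 0$$

for very large $\lambda$, contradicting the previous inequality. Therefore, $d$ belongs to $N_A(a)^\circ = T_A(a)$ by Corollary 16.8.5.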

Now take $n = 0$ and apply Corollary 16.7.9 to compute

$$f'(a;d) = \sup\{\langle s, d\rangle : s \in \partial f(a)\} \le \alpha < 0.$$

Thus (2) fails. Contrapositively, (2) implies (3). ∎

Theorem 16.9.1 is a fundamental and very useful result. In particular, condition (3) does not depend on where $a$ is in the set $A$. For example, if $a$ is an interior point of $A$, then $N_A(a) = \{0\}$ and this theorem reduces to Proposition 16.7.2. Given that all we know about the constraint set is that it is convex, this theorem is the best we can do. However, when the constraints are described in other terms, such as the sublevel sets of convex functions, then we can find more detailed characterizations of the optimal solutions.
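A one-dimensional sketch of Theorem 16.9.1, with hypothetical data: minimize $f(x) = (x - 2)^2$ over $A = [0, 1]$. Here $\partial f(a) = \{2(a - 2)\}$, $N_A(1) = [0, \infty)$, $N_A(0) = (-\infty, 0]$ and $N_A(a) = \{0\}$ for $0 < a < 1$, while $T_A(a)$ is generated by whichever of the directions $\pm 1$ point into $A$. The code checks conditions (2) and (3) at a few points; both hold only at $a = 1$, which is indeed the minimizer. The helper names are ours.

```python
INF = float("inf")


def f_prime(x):
    # Derivative of the hypothetical objective f(x) = (x - 2)**2; the subdifferential is {f_prime(x)}.
    return 2.0 * (x - 2.0)


def normal_cone(a, lo=0.0, hi=1.0):
    """N_A(a) for the interval A = [lo, hi], returned as (left end, right end)."""
    if not lo <= a <= hi:
        raise ValueError("a must lie in A")
    if a == hi:
        return (0.0, INF)
    if a == lo:
        return (-INF, 0.0)
    return (0.0, 0.0)


def tangent_generators(a, lo=0.0, hi=1.0):
    """Unit directions generating T_A(a): a ray at an endpoint, all of R inside."""
    dirs = []
    if a > lo:
        dirs.append(-1.0)
    if a < hi:
        dirs.append(1.0)
    return dirs


def condition_2(a):
    """f'(a; d) = f'(a) * d >= 0 on T_A(a); by positive homogeneity the generators suffice."""
    return all(f_prime(a) * d >= 0 for d in tangent_generators(a))


def condition_3(a):
    """0 in {f'(a)} + N_A(a), i.e. -f'(a) lies in N_A(a)."""
    left, right = normal_cone(a)
    return left <= -f_prime(a) <= right


print([(a, condition_2(a), condition_3(a)) for a in (0.0, 0.5, 1.0)])
```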

16.9.2. DEFINITION. By a convex program, we mean the ingredients of a minimization problem involving convex functions. Precisely, we have a convex function $f$ on $\mathbb{R}^n$ to be minimized. The set over which $f$ is to be minimized is not given explicitly but instead is determined by constraint conditions of the form $g_i(x) \le 0$, where $g_1, \dots, g_r$ are convex functions. The associated problem is

$$\text{Minimize } f(x) \quad\text{subject to constraints } g_1(x) \le 0, \dots, g_r(x) \le 0.$$

We call $a \in \mathbb{R}^n$ a feasible vector for the convex program if $a$ satisfies the constraints, that is, $g_i(a) \le 0$ for $i = 1, \dots, r$. A solution $a \in \mathbb{R}^n$ of this problem is called an optimal solution for the convex program, and $f(a)$ is the optimal value. The set over which $f$ is minimized is the convex set $A = \bigcap_{1\le i\le r} \{x : g_i(x) \le 0\}$.

The $r$ functional constraints may be combined to obtain $A = \{x : g(x) \le 0\}$, where $g(x) = \max\{g_i(x) : 1 \le i \le r\}$. The function $g$ is also convex. This is useful for technical reasons, but in practice the individual functions $g_i$ may be better behaved (for example, they may be differentiable while $g$ is not). It is better to be able to express optimality conditions in terms of the $g_i$ themselves.
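A small sketch of why the individual $g_i$ can be preferable, using the hypothetical constraints $g_1(x) = x - 1$ and $g_2(x) = -x - 1$ on $\mathbb{R}$. Both are differentiable and the feasible set $\{g \le 0\}$ is $[-1, 1]$, but $g = \max\{g_1, g_2\} = |x| - 1$ has a corner at $x = 0$, where the two functions tie. The one-sided difference quotients below make the corner visible.

```python
def g1(x):
    return x - 1.0   # hypothetical smooth constraint g1(x) <= 0, i.e. x <= 1


def g2(x):
    return -x - 1.0  # hypothetical smooth constraint g2(x) <= 0, i.e. x >= -1


def g(x):
    """Combined constraint g = max(g1, g2), which here equals |x| - 1."""
    return max(g1(x), g2(x))


def one_sided(fun, x, h=1e-7):
    """Left and right difference quotients of fun at x."""
    return (fun(x) - fun(x - h)) / h, (fun(x + h) - fun(x)) / h


print([g(x) <= 0 for x in (-2.0, -1.0, 0.0, 0.5, 1.5)])  # [False, True, True, True, False]
print(one_sided(g1, 0.0), one_sided(g2, 0.0))             # each pair nearly equal
print(one_sided(g, 0.0))                                  # about (-1, 1): g has a corner at 0
```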

In order to solve this problem, we need to impose some sort of regularity condition on the constraints that allows us to use our results about sublevel sets.