Davidson K.R., Donsig A.P. Real Analysis with Real Applications


16.9 Constrained Minimization 603

16.9.3. DEFINITION. A convex program satisfies Slater’s condition if there is a point $x \in \mathbb{R}^n$ so that $g_i(x) < 0$ for $i = 1, \dots, r$. Such a point is called a strictly feasible point or a Slater point.

16.9.4. KARUSH–KUHN–TUCKER THEOREM.

Consider a convex program that satisfies Slater’s condition. Then $a \in \mathbb{R}^n$ is an optimal solution if and only if there is a vector $w = (w_1, \dots, w_r) \in \mathbb{R}^r$ with $w_j \ge 0$ for $1 \le j \le r$ so that

$$
\begin{aligned}
&0 \in \partial f(a) + w_1 \partial g_1(a) + \cdots + w_r \partial g_r(a),\\
&g_j(a) \le 0, \quad w_j g_j(a) = 0 \quad \text{for } 1 \le j \le r.
\end{aligned}
\tag{KKT}
$$

The relations (KKT) are called the Karush–Kuhn–Tucker conditions. If $a$ is an optimal solution, the vectors $w \in \mathbb{R}^r_+$ that satisfy (KKT) are called the (Lagrange) multipliers.

A slight variant on these conditions for differentiable functions was given in

a 1951 paper by Kuhn and Tucker and was labeled with their names. Years later,

it came to light that they also appeared in Karush’s unpublished Master’s thesis of

1939, and so Karush’s name was added.

This definition of multipliers appears to depend on which optimal point $a$ is used. However, the set of multipliers is in fact independent of $a$; see Exercise 16.9.E.

PROOF. We introduce the function $g(x) = \max\{g_i(x) : 1 \le i \le r\}$ as mentioned previously. Then the feasible set becomes

$$A = \{x \in \mathbb{R}^n : g_i(x) \le 0,\ 1 \le i \le r\} = \{x \in \mathbb{R}^n : g(x) \le 0\}.$$

Slater’s condition guarantees that the set $\{x : g(x) < 0\}$ is nonempty. Hence by Lemma 16.6.5, this is the interior of $A$.

Assume that $a \in A$ is an optimal solution. In particular, $a$ is feasible, so $g_j(a) \le 0$ for all $j$. By Theorem 16.9.1, $0 \in \partial f(a) + N_A(a)$. When $a$ is an interior point, $N_A(a) = \{0\}$ and so $0 \in \partial f(a)$. Set $w_j = 0$ for $1 \le j \le r$, and the conditions (KKT) are satisfied. Otherwise $a$ is a boundary point of $A$, so $g(a) = 0$.

The hypotheses of Theorem 16.8.8 are satisfied, and so $N_A(a) = \mathbb{R}_+ \partial g(a)$. When the subdifferential of $g$ is computed using Theorem 16.7.13, it is found to be $\partial g(a) = \operatorname{conv}\{\partial g_j(a) : j \in J(a)\}$, where $J(a) = \{j : g_j(a) = 0\}$. We claim that

$$N_A(a) = \Bigl\{ \sum_{j \in J(a)} w_j s_j : w_j \ge 0,\ s_j \in \partial g_j(a) \Bigr\}.$$

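Indeed, every element of $\partial g(a)$ has this form, and multiplication by a nonnegative scalar preserves it. Conversely, if $w = \sum_{j \in J(a)} w_j \ne 0$, then $\sum_{j \in J(a)} \frac{w_j}{w} s_j$ belongs to $\operatorname{conv}\{\partial g_j(a) : j \in J(a)\}$, and so $\sum_{j \in J(a)} w_j s_j$ belongs to $\mathbb{R}_+ \partial g(a)$; if $w = 0$, the sum is $0$, which also lies in $\mathbb{R}_+ \partial g(a)$.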

Therefore, the condition $0 \in \partial f(a) + N_A(a)$ may be restated as follows: there are $s \in \partial f(a)$, and $s_j \in \partial g_j(a)$ and $w_j \ge 0$ for $j \in J(a)$, such that $s + \sum_{j \in J(a)} w_j s_j = 0$. By definition, $g_j(a) = g(a) = 0$ for $j \in J(a)$, whence $w_j g_j(a) = 0$. For all other $j$, we set $w_j = 0$, and (KKT) is satisfied.

604 Convexity and Optimization

Conversely, suppose that (KKT) holds. Since $g_j(a) \le 0$ for all $j$, $a$ is a feasible point. The conditions $w_j g_j(a) = 0$ mean that $w_j = 0$ for $j \notin J(a)$. If $a$ is strictly feasible, $J(a)$ is the empty set. In this event, (KKT) reduces to $0 \in \partial f(a)$, and since $N_A(a) = \{0\}$ at the interior point $a$, this says that $0 \in \partial f(a) + N_A(a)$. On the other hand, when $g(a) = 0$, we saw that the (KKT) condition is equivalent to $0 \in \partial f(a) + N_A(a)$. In both cases, Theorem 16.9.1 implies that $a$ is an optimal solution. ∎

Notice that if $f$ and each $g_i$ are differentiable, then the first part of (KKT) becomes

$$0 = \nabla f(a) + w_1 \nabla g_1(a) + \cdots + w_r \nabla g_r(a),$$

which is more commonly written as a system of equations, linear in the multipliers $w_j$:

$$0 = \frac{\partial f}{\partial x_i}(a) + w_1 \frac{\partial g_1}{\partial x_i}(a) + \cdots + w_r \frac{\partial g_r}{\partial x_i}(a) \quad \text{for } 1 \le i \le n.$$

This is known as a Lagrange multiplier problem, so we adopt the same terminology here.
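As a small numerical sketch of these equations, the Python code below checks (KKT) for a hypothetical convex program that is not from the text: minimize $f(x_1, x_2) = (x_1 - 2)^2 + (x_2 - 1)^2$ subject to $g_1(x_1, x_2) = x_1 + x_2 - 2 \le 0$. Because the stationarity equations are linear in $w_1$, the multiplier at a candidate point can be found by a one-variable least-squares solve. All function names are illustrative only.

```python
# Hypothetical convex program (illustration only, not from the text):
#   minimize   f(x1, x2) = (x1 - 2)^2 + (x2 - 1)^2
#   subject to g1(x1, x2) = x1 + x2 - 2 <= 0
def grad_f(x1, x2):
    return (2.0 * (x1 - 2.0), 2.0 * (x2 - 1.0))

def g1(x1, x2):
    return x1 + x2 - 2.0

def grad_g1(x1, x2):
    return (1.0, 1.0)

def multiplier_from_stationarity(x1, x2):
    """Least-squares solution w of grad f + w * grad g1 = 0 (linear in w):
    w = -<grad f, grad g1> / |grad g1|^2."""
    gf, gg = grad_f(x1, x2), grad_g1(x1, x2)
    return -(gf[0] * gg[0] + gf[1] * gg[1]) / (gg[0] ** 2 + gg[1] ** 2)

def kkt_holds(x1, x2, w, tol=1e-12):
    """Check stationarity, feasibility, complementary slackness, and w >= 0."""
    gf, gg = grad_f(x1, x2), grad_g1(x1, x2)
    stationary = all(abs(gf[i] + w * gg[i]) <= tol for i in range(2))
    feasible = g1(x1, x2) <= tol
    complementary = abs(w * g1(x1, x2)) <= tol
    return stationary and feasible and complementary and w >= 0

a = (1.5, 0.5)   # candidate: projection of (2, 1) onto the half-plane x1 + x2 <= 2
w = multiplier_from_stationarity(*a)
print(w, kkt_holds(*a, w))
```

This prints `1.0 True`. Since $(0, 0)$ is a Slater point, Theorem 16.9.4 then certifies that $a = (3/2, 1/2)$ is optimal with multiplier $w_1 = 1$. At the feasible point $(1, 1)$, by contrast, no multiplier makes the gradient equation hold, and `kkt_holds` returns `False`.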

These conditions can be used to solve concrete optimization problems in much

the same way as in multivariable calculus. Their greatest value for applications

is in understanding minimization problems, which can lead to the development of

efficient numerical algorithms.

16.9.5. DEFINITION. Given a convex program, define the Lagrangian of this system to be the function $L$ on $\mathbb{R}^n \times \mathbb{R}^r$ given by

$$L(x, y) = f(x) + y_1 g_1(x) + \cdots + y_r g_r(x).$$

Next, we recall the definition of a saddlepoint from multivariable calculus.

There are several equivalent conditions for saddlepoints given in the Exercises.

16.9.6. DEFINITION. Suppose that $X$ and $Y$ are sets and $L$ is a real-valued function on $X \times Y$. A point $(x_0, y_0) \in X \times Y$ is a saddlepoint for $L$ if

$$L(x_0, y) \le L(x_0, y_0) \le L(x, y_0) \quad \text{for all } x \in X,\ y \in Y.$$
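The definition can be tested by brute force when $X$ and $Y$ are finite. The Python sketch below returns every pair satisfying the two inequalities; the function name `saddlepoints` and both example functions are illustrative choices, not from the text.

```python
def saddlepoints(L, X, Y):
    """All (x0, y0) in X x Y with L(x0, y) <= L(x0, y0) <= L(x, y0)
    for every x in X and y in Y (X and Y must be finite)."""
    found = []
    for x0 in X:
        for y0 in Y:
            v = L(x0, y0)
            if all(L(x0, y) <= v for y in Y) and all(v <= L(x, y0) for x in X):
                found.append((x0, y0))
    return found

grid = [i / 4 for i in range(-8, 9)]   # -2.0, -1.75, ..., 2.0

# L(x, y) = x^2 - y^2 is convex in x and concave in y: the origin is a saddlepoint.
print(saddlepoints(lambda x, y: x * x - y * y, grid, grid))

# L(x, y) = x * y on the two-point set {-1, 1}: no saddlepoint exists.
print(saddlepoints(lambda x, y: x * y, [-1, 1], [-1, 1]))
```

The first call prints `[(0.0, 0.0)]` and the second prints `[]`.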

We shall be interested in saddlepoints of the Lagrangian over the set $\mathbb{R}^n \times \mathbb{R}^r_+$. We restrict the $y$ variables to the positive orthant $\mathbb{R}^r_+$ because the (KKT) conditions require nonnegative multipliers.

16.9.7. THEOREM. Consider a convex program that admits an optimal solution and satisfies Slater’s condition. Then $a$ is an optimal solution and $w$ a multiplier for the program if and only if $(a, w)$ is a saddlepoint for its Lagrangian function $L(x, y) = f(x) + y_1 g_1(x) + \cdots + y_r g_r(x)$ on $\mathbb{R}^n \times \mathbb{R}^r_+$. The value $L(a, w)$ at any saddlepoint equals the optimal value of the program.


PROOF. First suppose that $L(a, y) \le L(a, w)$ for all $y \in \mathbb{R}^r_+$. Observe that

$$\sum_{j=1}^r (y_j - w_j) g_j(a) = L(a, y) - L(a, w) \le 0.$$

Since each $y_j$ may be taken to be arbitrarily large, this forces $g_j(a) \le 0$ for each $j$. So $a$ is a feasible point. Also, taking $y_j = 0$ and $y_i = w_i$ for $i \ne j$ yields $-w_j g_j(a) \le 0$. Since $w_j \ge 0$ and $g_j(a) \le 0$, this quantity is also nonnegative, and we deduce that $w_j g_j(a) = 0$. So

$$L(a, w) = f(a) + \sum_j w_j g_j(a) = f(a).$$

Now turn to the condition $L(a, w) \le L(x, w)$ for all $x \in \mathbb{R}^n$. This means that $h(x) = L(x, w) = f(x) + \sum_j w_j g_j(x)$ has a global minimum at $a$. By Proposition 16.7.2, $0 \in \partial h(a)$. We may compute $\partial h(a)$ using Theorem 16.7.14. So $0 \in \partial f(a) + \sum_{j=1}^r w_j \partial g_j(a)$. This establishes the (KKT) conditions, and thus $a$ is a minimizer for the convex program and $w$ is a multiplier.

Conversely, suppose that $a$ and $w$ satisfy (KKT). Then $g_j(a) \le 0$ because $a$ is feasible, and $w_j g_j(a) = 0$ for $1 \le j \le r$. So $w_j = 0$ except for $j \in J(a)$. Thus

$$L(a, y) - L(a, w) = \sum_{j \notin J(a)} y_j g_j(a) \le 0 \quad \text{for all } y \in \mathbb{R}^r_+.$$

The other part of (KKT), combined with Theorem 16.7.14, states that the function $h(x) = L(x, w)$ has $0 \in \partial h(a)$. Thus by Proposition 16.7.2, $a$ is a minimizer for $h$. That is, $L(a, w) \le L(x, w)$ for all $x \in \mathbb{R}^n$. So $L$ has a saddlepoint at $(a, w)$. ∎

If we had a multiplier $w$ for the convex program, then to solve the convex program it would be enough to solve the unconstrained minimization problem

$$\inf\{L(x, w) : x \in \mathbb{R}^n\}.$$

This shows one important property of multipliers: they turn constrained optimization problems into unconstrained ones. In order to use multipliers in this way, we need a method for finding multipliers without first solving the convex program. This problem is addressed in the next section.
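To see Theorem 16.9.7 at work, the Python sketch below uses a hypothetical program that is not from the text: minimize $f(x_1, x_2) = (x_1 - 2)^2 + (x_2 - 1)^2$ subject to $g_1(x_1, x_2) = x_1 + x_2 - 2 \le 0$. Its minimizer is $a = (3/2, 1/2)$, with multiplier $w = 1$. Random sampling can only search for violations of the saddlepoint inequalities, so this is a sanity check rather than a proof. The sample ranges and the seed are arbitrary choices.

```python
import random

def lagrangian(x1, x2, y):
    # L(x, y) = f(x) + y * g1(x) for the hypothetical program
    return (x1 - 2.0) ** 2 + (x2 - 1.0) ** 2 + y * (x1 + x2 - 2.0)

a, w = (1.5, 0.5), 1.0
value = lagrangian(*a, w)   # equals f(a) = 1/2, because g1(a) = 0

rng = random.Random(0)      # fixed seed for reproducibility
# Left inequality: L(a, y) <= L(a, w) for sampled y >= 0.
left_ok = all(lagrangian(*a, rng.uniform(0.0, 100.0)) <= value + 1e-12
              for _ in range(10_000))
# Right inequality: L(a, w) <= L(x, w) for sampled x, i.e. a minimizes
# the unconstrained function x -> L(x, w).
right_ok = all(value <= lagrangian(rng.uniform(-10, 10), rng.uniform(-10, 10), w) + 1e-12
               for _ in range(10_000))
print(value, left_ok, right_ok)
```

This prints `0.5 True True`. Completing the square gives $L(x, 1) = (x_1 - 3/2)^2 + (x_2 - 1/2)^2 + 1/2$, so minimizing $L(\cdot, w)$ with no constraints at all returns $a$ and the optimal value $1/2$.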

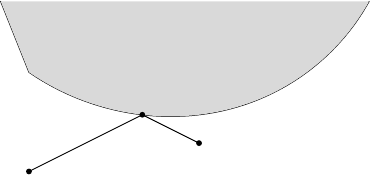

16.9.8. EXAMPLE. Let $g$ be a convex function on $\mathbb{R}$ and fix two points $p = (x_p, y_p)$ and $q = (x_q, y_q)$ in $\mathbb{R}^2$. Minimize the sum of the distances to $p$ and $q$ over $A = \operatorname{epi}(g) = \{(x, y) : G(x, y) \le 0\}$, where $G(x, y) = g(x) - y$, as indicated in Figure 16.7.

FIGURE 16.7. Minimizing the sum of two distances. (Figure labels: $p$, $q$, $v$, $\operatorname{epi}(g)$.)


Then the function of $v = (x, y) \in \mathbb{R}^2$ to be minimized is

$$f(v) = \|v - p\| + \|v - q\| = \sqrt{(x - x_p)^2 + (y - y_p)^2} + \sqrt{(x - x_q)^2 + (y - y_q)^2}.$$

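Using Example 16.7.10, we may compute that

$$
\partial f(v) =
\begin{cases}
B_1(0) + \dfrac{p - q}{\|p - q\|} & \text{if } v = p,\\[2mm]
B_1(0) - \dfrac{p - q}{\|p - q\|} & \text{if } v = q,\\[2mm]
\dfrac{v - p}{\|v - p\|} + \dfrac{v - q}{\|v - q\|} & \text{if } v \ne p, q.
\end{cases}
$$

Note that $0 \in \partial f(v)$ if and only if $v = p$, or $v = q$, or the two vectors $v - p$ and $v - q$ point in opposite directions, namely $v \in [p, q]$. This is obvious geometrically.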

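To make the problem more interesting, let us assume that $A$ does not intersect the line segment $[p, q]$. The (KKT) conditions at the point $v = (x, y)$ become

$$g(x) \le y, \qquad w \ge 0, \qquad w\bigl(g(x) - y\bigr) = 0,$$
$$0 \in \partial f(v) + w\,\partial G(v).$$

If $w = 0$, the second line would say that $0 \in \partial f(v)$, forcing $v \in [p, q]$, which is impossible for $v \in A$. So $w > 0$, and the first line reduces to saying that $v = (x, g(x))$ lies in the boundary of $A$. Alternatively, we could observe that $N_A(v) = \{0\}$ when $v \in \operatorname{int} A$, and thus $0 \notin \partial f(v) + N_A(v)$, whence the minimum occurs on the boundary.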

At a point $x$, we know that $\partial g(x) = [D^- g(x), D^+ g(x)]$. Thus by Example 16.7.4, for $v = (x, g(x))$,

$$\partial G(v) = \bigl\{(s, -1) : s \in [D^- g(x), D^+ g(x)]\bigr\}.$$

So by Theorem 16.8.8,

$$N_A(v) = \bigl\{(st, -t) : s \in [D^- g(x), D^+ g(x)],\ t \ge 0\bigr\}.$$

Thus the second statement of (KKT) says that the sum of the two unit vectors in the directions $p - v$ and $q - v$ is an element of $N_A(v)$. Geometrically, this means that $p - v$ and $q - v$ make the same angle on opposite sides of some normal vector. In particular, if $g$ is differentiable at $x$, then $N_A(v) = \mathbb{R}_+(g'(x), -1)$ is the ray of outward normals to the tangent line at $v$. So the geometric condition is just that the angles to the tangent (from opposite sides) made by $[p, v]$ and $[q, v]$ are equal. In physics, this is the well-known law: the angle of incidence equals the angle of reflection. This fact for a light beam reflecting off a surface is explained by Fermat’s Principle that a beam of light will follow the fastest path (hence the shortest path) between two points.

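However, this criterion works just as well when $g$ is not differentiable. For example, take $g(x) = |x|$ and points $p = (-1, -1)$ and $q = (2, 0)$. Then

$$
\partial g(x) =
\begin{cases}
-1 & \text{if } x < 0,\\
[-1, 1] & \text{if } x = 0,\\
+1 & \text{if } x > 0.
\end{cases}
$$

We will verify the (KKT) condition at the point $0 = (0, 0)$. First observe that $\partial G(0) = [-1, 1] \times \{-1\}$. So

$$N_A(0) = \mathbb{R}_+\bigl(\partial g(0) \times \{-1\}\bigr) = \{(st, -t) : s \in [-1, 1],\ t \ge 0\} = \{(u, t) : t \le -|u|\},$$

which is the quarter-plane $-A$. Now

$$\partial f(0) = \frac{(1, 1)}{\sqrt{2}} + \frac{(-2, 0)}{2} = \Bigl(\frac{1}{\sqrt{2}} - 1,\ \frac{1}{\sqrt{2}}\Bigr),$$

so $-\partial f(0) = \bigl(1 - \frac{1}{\sqrt{2}},\ -\frac{1}{\sqrt{2}}\bigr)$ lies in $N_A(0)$, because $1 - \frac{1}{\sqrt{2}} \le \frac{1}{\sqrt{2}}$. Explicitly, taking $s = \sqrt{2} - 1 \in [-1, 1]$,

$$\Bigl(\frac{1}{\sqrt{2}} - 1,\ \frac{1}{\sqrt{2}}\Bigr) + \frac{1}{\sqrt{2}}\bigl(\sqrt{2} - 1,\ -1\bigr) = (0, 0).$$

So $w = 1/\sqrt{2}$ and $v = (0, 0)$ satisfy (KKT), and thus $(0, 0)$ is the minimizer.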
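The computation at the origin can be double-checked numerically. The Python sketch below (it uses `math.dist`, available since Python 3.8) evaluates the (KKT) residual $\nabla f(0) + w(s, -1)$ with $w = 1/\sqrt{2}$ and $s = \sqrt{2} - 1$. As an independent and admittedly crude check, it also scans a grid on the boundary $y = |x|$ of $A$, where the minimum must lie, for the smallest value of $f$. The grid range and spacing are arbitrary choices.

```python
import math

p = (-1.0, -1.0)
q = (2.0, 0.0)

def f(x, y):
    """Sum of the distances from v = (x, y) to p and q."""
    return math.dist((x, y), p) + math.dist((x, y), q)

def grad_f(x, y):
    """Gradient of f at v != p, q: sum of the unit vectors (v - p)/|v - p| and (v - q)/|v - q|."""
    dp, dq = math.dist((x, y), p), math.dist((x, y), q)
    return ((x - p[0]) / dp + (x - q[0]) / dq,
            (y - p[1]) / dp + (y - q[1]) / dq)

# (KKT) residual at v = (0, 0): grad f(0) + w * (s, -1), where (s, -1) is in dG(0) for s in [-1, 1].
w = 1 / math.sqrt(2)
s = math.sqrt(2) - 1
gx, gy = grad_f(0.0, 0.0)
residual = (gx + w * s, gy - w)

# Crude independent check: scan the boundary y = |x| of A = epi(|x|) for -3 <= x <= 3.
xs = [i / 1000 for i in range(-3000, 3001)]
best_x = min(xs, key=lambda x: f(x, abs(x)))

print(residual, -1 <= s <= 1, best_x)
```

The residual is zero up to rounding error, $s$ lies in $[-1, 1]$, and the scan returns `best_x == 0.0`, in agreement with the example.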

Exercises for Section 16.9

A. Minimize $x^2 + y^2 - 4x - 6y$ subject to $x \ge 0$, $y \ge 0$, and $x^2 + y^2 \le 4$.

B. Minimize $ax + by$ subject to $x \ge 1$ and $\sqrt{xy} \ge K$. Here $a$, $b$, and $K$ are positive constants.

C. Minimize $\dfrac{1}{x} + \dfrac{4}{y} + \dfrac{9}{z}$ subject to $x + y + z = 1$ and $x, y, z > 0$.

HINT: Find the Lagrange multipliers.

D. Suppose $(x_1, y_1)$ and $(x_2, y_2)$ are saddlepoints of a real-valued function $p$ on $X \times Y$.

(a) Show that $(x_1, y_2)$ and $(x_2, y_1)$ are also saddlepoints.

(b) Show that $p$ takes the same value at all four points.

(c) Prove that the set of saddlepoints of $p$ has the form $A \times B$ for $A \subset X$ and $B \subset Y$.

E. (a) Use the previous exercise to show that the set of multipliers for a convex program does not depend on the choice of optimal point.

(b) Show that the set of multipliers is a closed convex subset of $\mathbb{R}^r_+$.

(c) Show that the set of saddlepoints for the Lagrangian is a closed convex rectangle $A \times M$, where $A$ is the set of optimal solutions and $M$ is the set of multipliers.

F. Given a real-valued function $p$ on $X \times Y$, define functions $\alpha$ on $X$ and $\beta$ on $Y$ by $\alpha(x) = \sup\{p(x, y) : y \in Y\}$ and $\beta(y) = \inf\{p(x, y) : x \in X\}$. Show that for $(x_0, y_0) \in X \times Y$ the following are equivalent:

(1) $(x_0, y_0)$ is a saddlepoint for $p$.

(2) $p(x_0, y) \le p(x, y_0)$ for all $x \in X$ and all $y \in Y$.

(3) $\alpha(x_0) = p(x_0, y_0) = \beta(y_0)$.

(4) $\alpha(x_0) \le \beta(y_0)$.

G. Let $g$ be a convex function on $\mathbb{R}$ and let $p = (x_p, y_p) \in \mathbb{R}^2$. Find a criterion for the closest point to $p$ in $A = \operatorname{epi}(g)$.

(a) What is the function $f$ to be minimized? Find $\partial f(v)$.

(b) What is the constraint function $G$? Compute $\partial G(v)$.

(c) Write down the (KKT) conditions.

(d) Simplify these conditions and interpret them geometrically.

H. Suppose that $A$ is an open convex subset of $\mathbb{R}^n$ and $f$ is a convex function on $A$ that is Lipschitz with constant $L$. Construct a convex function $g$ on $\mathbb{R}^n$ extending $f$:

(a) Show that if $a \in A$ and $v \in \partial f(a)$, then $\|v\| \le L$.

HINT: Check the proof of Theorem 16.7.3.

(b) For $x \in \mathbb{R}^n$ and $a, b \in A$ and $s \in \partial f(a)$, show that

$$f(b) + L\|x - b\| \ge f(a) + \langle s, x - a\rangle.$$

(c) Define $g$ on $\mathbb{R}^n$ by $g(x) = \inf\{f(a) + L\|x - a\| : a \in A\}$. Show that $g(x) > -\infty$ for $x \in \mathbb{R}^n$ and that $g(a) = f(a)$ for $a \in A$.

(d) Show that $g$ is convex.

I. Let $f$ and $g_1, \dots, g_m$ be $C^1$ functions on $\mathbb{R}^n$. The problem is to minimize $f$ over the set $A = \{x : g_j(x) \le 0,\ 1 \le j \le m\}$. Let $J(x) = \{j : g_j(x) = 0\}$. Prove that a feasible point $x_0$ is a local minimum if and only if there are constants $\lambda_i$, not all $0$, so that

$$\lambda_0 \nabla f(x_0) + \sum_{j \in J(x_0)} \lambda_j \nabla g_j(x_0) = 0.$$

HINT: Let $g(x) = \max\{f(x) - f(x_0),\ g_j(x) : j \in J(x_0)\}$. Compute $\partial g(x_0)$ by Theorem 16.7.13. Use Exercise 16.3.J to deduce that $g'(x_0; d) \ge 0$ for all $d$ if and only if $0 \in \operatorname{conv}\{\nabla f(x_0), \nabla g_j(x_0) : j \in J(x_0)\}$.

J. Duffin’s duality gap. Let $b \ge 0$, and consider the convex program:

Minimize $f(x, y) = e^{-y}$ subject to $g(x, y) = \sqrt{x^2 + y^2} - x \le b$ in $\mathbb{R}^2$.

(a) Find the feasible region. For which $b$ is Slater’s condition satisfied?

(b) Solve the problem. When is the minimum attained?

(c) Show that the solution is not continuous in $b$.

K. An alternative approach to solving minimization problems is to eliminate the constraint set $g_i(x) \le 0$ and instead modify $f$ by adding a term $h(g_i(x))$, where $h$ is an increasing function with $h(y) = 0$ for $y \le 0$. The quantity $h(g_i(x))$ is called a penalty, and this approach is the penalty method. Assume that $f$ and each $g_i$ are continuous functions on $\mathbb{R}^n$ but not necessarily convex. Let $h_k(y) = k(\max\{y, 0\})^2$. For each integer $k \ge 1$, we have the minimization problem: Minimize $F_k(x) = f(x) + \sum_{i=1}^r h_k(g_i(x))$ for $x \in \mathbb{R}^n$. Suppose that this minimization problem has a solution $a_k$ and the original has a solution $a$.

(a) Show that $F_k(a_k) \le F_{k+1}(a_{k+1}) \le f(a)$.

(b) Show that $\lim_{k \to \infty} \sum_{i=1}^r h_k(g_i(a_k)) = 0$.

(c) If $a_0$ is the limit of a subsequence of $(a_k)$, show that it is a minimizer.

(d) If $f(x) \to \infty$ as $\|x\| \to \infty$, deduce that the minimization problem has a solution.
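The penalty method of Exercise K is easy to try numerically. The Python sketch below applies it to a hypothetical program that is not from the text: $f(x) = (x_1 - 2)^2 + (x_2 - 1)^2$ with the single constraint $g_1(x) = x_1 + x_2 - 2 \le 0$, whose solution is $a = (3/2, 1/2)$ with $f(a) = 1/2$. Each $F_k$ is minimized by plain gradient descent with step $1/(2 + 4k)$, the reciprocal of a Lipschitz constant of $\nabla F_k$ for this particular example. The choice of solver is ours, not the book's.

```python
def F(k, x1, x2):
    """Penalized objective F_k(x) = f(x) + h_k(g1(x)), with h_k(t) = k * max(t, 0)^2."""
    return (x1 - 2.0) ** 2 + (x2 - 1.0) ** 2 + k * max(x1 + x2 - 2.0, 0.0) ** 2

def penalty_minimize(k, iters=20_000):
    """Minimize F_k by gradient descent from (0, 0) with step 1/(2 + 4k)."""
    x1, x2 = 0.0, 0.0
    step = 1.0 / (2.0 + 4.0 * k)
    for _ in range(iters):
        viol = max(x1 + x2 - 2.0, 0.0)          # amount by which g1(x) <= 0 fails
        d1 = 2.0 * (x1 - 2.0) + 2.0 * k * viol  # partial derivative of F_k in x1
        d2 = 2.0 * (x2 - 1.0) + 2.0 * k * viol  # partial derivative of F_k in x2
        x1, x2 = x1 - step * d1, x2 - step * d2
    return x1, x2

for k in (1, 10, 100):
    a_k = penalty_minimize(k)
    print(k, a_k, F(k, *a_k))
```

Solving by hand for this example gives $a_k = (2 - t_k, 1 - t_k)$ with $t_k = k/(2k + 1)$ and $F_k(a_k) = k/(2k + 1)$, so the printed values increase toward $f(a) = 1/2$ while $a_k \to a$, consistent with parts (a) to (c). For large $k$ the step size shrinks like $1/k$, which reflects the poor conditioning of $F_k$.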

16.10. The Minimax Theorem

In addition to the Lagrangian of the previous section, saddlepoints play a central role in various other optimization problems. For example, they arise in game theory and mathematical economics. Our purpose in this section is to examine the mathematics that leads to the existence of a saddlepoint under quite general hypotheses. Examination of a typical saddlepoint in $\mathbb{R}^3$ shows that the cross sections in the $xz$-plane are convex functions while the cross sections in the $yz$-plane are concave. See Figure 16.8. It is this trade-off that gives the saddle its characteristic shape. Hence we make the following definition:

16.10.1. DEFINITION. A function $p(x, y)$ defined on $X \times Y$ is called convex–concave if $p(\cdot, y)$ is a convex function of $x$ for each fixed $y \in Y$ and $p(x, \cdot)$ is a concave function of $y$ for each fixed $x \in X$.

16.10 The Minimax Theorem 609

FIGURE 16.8. A typical saddlepoint.

The term minimax comes from comparing two interesting quantities:

$$p_* = \sup_{y \in Y} \inf_{x \in X} p(x, y) \quad\text{and}\quad p^* = \inf_{x \in X} \sup_{y \in Y} p(x, y),$$

which are the maximin and minimax, respectively. These quantities make sense for any function $p$. Moreover, for $x_1 \in X$ and $y_1 \in Y$,

$$\inf_{x \in X} p(x, y_1) \le p(x_1, y_1) \le \sup_{y \in Y} p(x_1, y).$$

Take the supremum of the left-hand side over $y_1 \in Y$ to get $p_* \le \sup_{y \in Y} p(x_1, y)$, since the right-hand side does not depend on $y_1$. Then take the infimum over all $x_1 \in X$ to obtain $p_* \le p^*$.

Suppose that there is a saddlepoint $(\bar x, \bar y)$, that is, $p(\bar x, y) \le p(\bar x, \bar y) \le p(x, \bar y)$ for all $x \in X$ and $y \in Y$. Then

$$p_* \ge \inf_{x \in X} p(x, \bar y) \ge p(\bar x, \bar y) \ge \sup_{y \in Y} p(\bar x, y) \ge p^*.$$

Thus the existence of a saddlepoint shows that $p_* = p^*$.
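For finite sets, $p_*$ and $p^*$ can be computed directly, and the inequality $p_* \le p^*$ can be strict when there is no saddlepoint. The Python sketch below uses the game of matching pennies, a standard illustration not taken from the text: on $X = Y = \{0, 1\}$, the payoff $p(x, y) = 1$ if $x = y$ and $-1$ otherwise gives $p_* = -1 < 1 = p^*$. Allowing mixed strategies $s, t \in [0, 1]$ (the probabilities of choosing $0$) replaces $p$ by the expected payoff $(2s - 1)(2t - 1)$, which is bilinear and hence convex–concave, and on a grid containing $1/2$ the gap closes. The grid is an arbitrary choice; exact fractions avoid rounding.

```python
from fractions import Fraction

def maximin(p, X, Y):
    # p_* = sup over y of inf over x of p(x, y)
    return max(min(p(x, y) for x in X) for y in Y)

def minimax(p, X, Y):
    # p^* = inf over x of sup over y of p(x, y)
    return min(max(p(x, y) for y in Y) for x in X)

# Pure strategies: x is chosen by the minimizer, y by the maximizer.
def pure(x, y):
    return 1 if x == y else -1

# Mixed strategies: expected payoff when 0 is played with probabilities s and t.
def mixed(s, t):
    return (2 * s - 1) * (2 * t - 1)

grid = [Fraction(i, 10) for i in range(11)]
print(maximin(pure, [0, 1], [0, 1]), minimax(pure, [0, 1], [0, 1]))
print(maximin(mixed, grid, grid), minimax(mixed, grid, grid))
```

The script prints `-1 1` and then `0 0`; in the mixed case $(1/2, 1/2)$ is a saddlepoint, as the compact Minimax Theorem 16.10.3 guarantees for convex–concave functions on $[0, 1] \times [0, 1]$.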

We will use the following variant of Exercise 16.6.J.

16.10.2. LEMMA. Let $f_1, \dots, f_r$ be convex functions on a convex subset $X$ of $\mathbb{R}^n$. For $c \in \mathbb{R}$, the following are equivalent:

(1) There is no point $x \in X$ satisfying $f_j(x) < c$ for $1 \le j \le r$.

(2) There exist $\lambda_j \ge 0$ so that $\sum_j \lambda_j = 1$ and $\sum_j \lambda_j f_j \ge c$ on $X$.

PROOF. If (1) is false and $f_j(x_0) < c$ for $1 \le j \le r$, then $\sum_j \lambda_j f_j(x_0) < c$ for all choices of $\lambda_j \ge 0$ with $\sum_j \lambda_j = 1$. Hence (2) is also false.

Conversely, assume that (1) is true. Define

$$Y = \{y \in \mathbb{R}^r : y_j > f_j(x) \text{ for } 1 \le j \le r \text{ and some } x \in X\}.$$

This set is open and convex (left as Exercise 16.10.B). By hypothesis, the point $z = (c, c, \dots, c) \in \mathbb{R}^r$ is not in $Y$. Depending on whether $z$ belongs to the closure $\overline{Y}$ or not, we apply either the Support Theorem (Theorem 16.3.7) or the Separation Theorem (Theorem 16.3.3) to obtain a hyperplane that separates $z$ from $Y$. That is, there is a nonzero vector $h = (h_1, \dots, h_r)$ in $\mathbb{R}^r$ and $\alpha \in \mathbb{R}$ so that $\langle y, h\rangle < \alpha \le \langle z, h\rangle$ for all $y \in Y$. By continuity, $\langle y, h\rangle \le \alpha$ for all $y \in \overline{Y}$.

We claim that each coefficient $h_j \le 0$. Indeed, for any $x \in X$, the closure $\overline{Y}$ contains $\bigl(f_1(x), \dots, f_r(x)\bigr) + t e_j$ for any $t \ge 0$, where $e_j$ is a standard basis vector for $\mathbb{R}^r$. Thus $\sum_{i=1}^r h_i f_i(x) + t h_j \le \alpha$ for all $t \ge 0$, which implies that $h_j \le 0$.

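Define $\lambda_j = h_j / H$, where $H = \sum_{j=1}^r h_j$; since $h \ne 0$ and each $h_j \le 0$, we have $H < 0$. Then $\lambda_j \ge 0$ and $\sum_j \lambda_j = 1$. Restating the separation for $\bigl(f_1(x), \dots, f_r(x)\bigr) \in \overline{Y}$ and dividing by $H < 0$, we obtain

$$\sum_{j=1}^r \lambda_j f_j(x) \ge \frac{\alpha}{H} \ge \frac{\langle z, h\rangle}{H} = \sum_{j=1}^r c\lambda_j = c.$$

So (2) holds. ∎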

Now we establish our saddlepoint result. First we assume compactness. We

will remove it later, at the price of adding a mild additional requirement.

16.10.3. MINIMAX THEOREM (COMPACT CASE).

Let $X$ be a compact convex subset of $\mathbb{R}^n$ and let $Y$ be a compact convex subset of $\mathbb{R}^m$. If $p$ is a convex–concave function on $X \times Y$, then $p$ has a nonempty compact convex set of saddlepoints.

PROOF. For each $y \in Y$, define a convex function on $X$ by $p_y(x) = p(x, y)$. For each $c > p_*$, define $A_{y,c} = \{x \in X : p_y(x) \le c\}$. This is a compact convex subset of $X$, and it is nonempty because $\inf_{x \in X} p_y(x) \le p_* < c$.

For any finite set of points $y_1, \dots, y_r$ in $Y$, we claim that $A_{y_1,c} \cap \cdots \cap A_{y_r,c}$ is nonempty. If not, then there is no point $x$ so that $p_{y_j}(x) < c$ for $1 \le j \le r$. So by Lemma 16.10.2, there would be scalars $\lambda_i \ge 0$ with $\sum_i \lambda_i = 1$ so that $\sum_i \lambda_i p_{y_i} \ge c$ on $X$. Set $\tilde y = \sum_{i=1}^r \lambda_i y_i$, which lies in $Y$ because $Y$ is convex. As $p$ is concave in $y$,

$$c \le \sum_{i=1}^r \lambda_i p(x, y_i) \le p(x, \tilde y) \quad \text{for all } x \in X.$$

Consequently, $c \le \min_{x \in X} p(x, \tilde y) \le p_*$, which is a contradiction.

Let $\{y_i : i \ge 1\}$ be a dense subset of $Y$, and set $c_n = p_* + 1/n$. Then the set $A_n = \bigcap_{i=1}^n A_{y_i, c_n}$ is nonempty, closed, and convex. Since $c_{n+1} < c_n$, it is clear that $A_n$ contains $A_{n+1}$, and thus this is a decreasing sequence of nonempty compact sets in $\mathbb{R}^n$. By Cantor’s Intersection Theorem (Theorem 4.4.7), the set $A = \bigcap_{n \ge 1} A_n$ is a nonempty compact set. (It is also convex, as the reader can easily verify.)

Let $\bar x \in A$. Then $\bar x \in A_{y_i, c_n}$ whenever $n \ge i$. Thus $p(\bar x, y_i) \le c_n$ for all $n \ge i$, and letting $n \to \infty$ gives $p(\bar x, y_i) \le p_*$ for every $i \ge 1$. But the set $\{y_i : i \ge 1\}$ is dense in $Y$ and $p$ is continuous, so $p(\bar x, y) \le p_*$ for all $y \in Y$. Therefore,

$$p^* = \inf_{x \in X} \max_{y \in Y} p(x, y) \le \max_{y \in Y} p(\bar x, y) \le p_*.$$


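Since $p_* \le p^*$ is always true, we obtain equality. The function $y \mapsto \min_{x \in X} p(x, y)$ is upper semicontinuous on the compact set $Y$, so we may choose a point $\bar y \in Y$ at which it attains its maximum $p_*$. Then $p(x, \bar y) \ge p_*$ for all $x \in X$, while $p(\bar x, y) \le p_*$ for all $y \in Y$; in particular, $p(\bar x, \bar y) = p_*$. Hence $p(\bar x, y) \le p(\bar x, \bar y) \le p(x, \bar y)$ for all $x \in X$ and $y \in Y$. That is, $(\bar x, \bar y)$ is a saddlepoint for $p$.

By Exercise 16.9.D, the set of saddlepoints is a rectangle $A \times B$. Moreover, the same argument required in Exercise 16.9.E shows that this rectangle is closed and convex. ∎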

Slippery things can happen at infinity if precautions are not taken. However,

the requirements of the next theorem are often satisfied.

16.10.4. MINIMAX THEOREM.

Suppose that $X$ is a closed convex subset of $\mathbb{R}^n$ and $Y$ is a closed convex subset of $\mathbb{R}^m$. Assume that $p$ is convex–concave on $X \times Y$, and in addition assume that

(1) if $X$ is unbounded, then there is a $y_0 \in Y$ so that $p(x, y_0) \to +\infty$ as $\|x\| \to \infty$ for $x \in X$;

(2) if $Y$ is unbounded, then there is an $x_0 \in X$ so that $p(x_0, y) \to -\infty$ as $\|y\| \to \infty$ for $y \in Y$.

Then $p$ has a nonempty compact convex set of saddlepoints.

PROOF. We deal only with the case in which both X and Y are unbounded. The

reader can find a modification that works when only one is unbounded.

By the hypotheses, $\max_{y \in Y} p(x_0, y) = \alpha < \infty$ and $\min_{x \in X} p(x, y_0) = \beta > -\infty$. Clearly, $\beta \le p(x_0, y_0) \le \alpha$. Set

$$X_0 = \{x \in X : p(x, y_0) \le \alpha + 1\} \quad\text{and}\quad Y_0 = \{y \in Y : p(x_0, y) \ge \beta - 1\}.$$

Note that $x_0 \in X_0$ and $y_0 \in Y_0$. Conditions (1) and (2) guarantee that $X_0$ and $Y_0$ are bounded, and thus they are compact and convex. Let $A \times B$ be the set of saddlepoints for the restriction of $p$ to $X_0 \times Y_0$ provided by the compact case.

In particular, let $(\bar x, \bar y)$ be one saddlepoint, and let $c = p(\bar x, \bar y)$ be the critical value. Then

$$\beta \le p(\bar x, y_0) \le p(\bar x, \bar y) = c \le p(x_0, \bar y) \le \alpha.$$

Let $x \in X \setminus X_0$, so that $p(x, y_0) > \alpha + 1$. Now $p(\cdot, y_0)$ is continuous and $p(\bar x, y_0) \le \alpha$, and hence there is a point $x_1$ in $[x, \bar x]$ with $p(x_1, y_0) = \alpha + 1$. So $x_1 \in X_0$ and $x_1 \ne \bar x$. Thus $x_1 = \lambda x + (1 - \lambda)\bar x$ for some $0 < \lambda < 1$. As $p(\cdot, \bar y)$ is convex,

$$c \le p(x_1, \bar y) \le \lambda p(x, \bar y) + (1 - \lambda) p(\bar x, \bar y) = \lambda p(x, \bar y) + (1 - \lambda) c.$$

Hence $p(\bar x, \bar y) = c \le p(x, \bar y)$. Similarly, for every $y \in Y \setminus Y_0$, we may show that $p(\bar x, y) \le c = p(\bar x, \bar y)$. Therefore, $(\bar x, \bar y)$ is a saddlepoint in $X \times Y$. ∎

Now let us see how this applies to the problem of constrained optimization. Consider the convex programming problem: Minimize a convex function $f(x)$ over the closed convex set $X = \{x : g_j(x) \le 0,\ 1 \le j \le r\}$. Suppose that it satisfies Slater’s condition. The Lagrangian $L(x, y) = f(x) + y_1 g_1(x) + \cdots + y_r g_r(x)$ is a convex function of $x$ for each fixed $y \in \mathbb{R}^r_+$, and is a linear function of $y$ for each $x \in \mathbb{R}^n$. So, in particular, $L$ is convex–concave on $\mathbb{R}^n \times \mathbb{R}^r_+$.


Now we also suppose that this problem has an optimal solution. Then we can apply Theorem 16.9.7 and the Karush–Kuhn–Tucker Theorem (Theorem 16.9.4) to guarantee a saddlepoint $(a, w)$ for $L$, and $L(a, w)$ is the optimal value of the convex program. By the arguments of this section, the existence of a saddlepoint means that the optimal value is also obtained as the maximin:

$$f(a) = \min_{x \in X} f(x) = L_* = \max_{y \in \mathbb{R}^r_+} \inf_{x \in \mathbb{R}^n} L(x, y).$$

Define $h(y) = \inf_{x \in \mathbb{R}^n} \bigl(f(x) + y_1 g_1(x) + \cdots + y_r g_r(x)\bigr)$ for $y \in \mathbb{R}^r_+$. While its definition requires an infimum, $h$ gives a new optimization problem, which can be easier to solve. This new problem is called the dual program:

$$\text{Maximize } h(y) \text{ over } y \in \mathbb{R}^r_+.$$
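For a concrete dual, consider a hypothetical program that is not from the text: minimize $f(x) = (x_1 - 2)^2 + (x_2 - 1)^2$ subject to $g_1(x) = x_1 + x_2 - 2 \le 0$. For fixed $y \ge 0$, $L(\cdot, y)$ is a convex quadratic whose gradient $\bigl(2(x_1 - 2) + y,\ 2(x_2 - 1) + y\bigr)$ vanishes at $x = (2 - y/2,\ 1 - y/2)$, which gives $h(y) = y - y^2/2$. The Python sketch below evaluates $h$ this way and maximizes it by a crude grid search over $[0, 3]$, an arbitrary range.

```python
def lagrangian(x1, x2, y):
    # L(x, y) = f(x) + y * g1(x) for the hypothetical program
    return (x1 - 2.0) ** 2 + (x2 - 1.0) ** 2 + y * (x1 + x2 - 2.0)

def h(y):
    """Dual function h(y) = inf_x L(x, y), evaluated at the unique minimizer
    x = (2 - y/2, 1 - y/2) of the convex quadratic L(., y)."""
    x1, x2 = 2.0 - y / 2.0, 1.0 - y / 2.0
    return lagrangian(x1, x2, y)

# Maximize h over y >= 0 by a grid search (here h(y) = y - y^2/2).
ys = [i / 1000 for i in range(3001)]
y_best = max(ys, key=h)
print(y_best, h(y_best))
```

This prints `1.0 0.5`: the dual optimum $y = 1$ is the multiplier of this program, and the dual value $1/2$ equals the primal optimal value $f(3/2, 1/2)$, as Proposition 16.10.5 predicts.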

16.10.5. PROPOSITION. Consider a convex program that admits an optimal

solution and satisfies Slater’s condition. The solutions of the dual program are

exactly the multipliers of the original program, and the optimal value of the dual

program is the same.

PROOF. Suppose $a$ is an optimal solution of the original program and $w$ a multiplier. Then $L(a, y) \le L(a, w) \le L(x, w)$ for all $x \in \mathbb{R}^n$ and $y \in \mathbb{R}^r_+$, since $(a, w)$ is a saddlepoint for the Lagrangian $L$ by Theorem 16.9.7. In particular,

$$h(w) = \inf\{L(x, w) : x \in \mathbb{R}^n\} = L(a, w).$$

Moreover, for any $y \in \mathbb{R}^r_+$, $h(y) \le L(a, y) \le L(a, w) = h(w)$. So $w$ is a solution of the dual problem, and the value is $L(a, w) = L_*$, which equals the value of the original problem.

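Conversely, suppose that $w_0$ is a solution of the dual program. Let $(a, w)$ be a saddlepoint. Then

$$L_* = L(a, w) \ge L(a, w_0) \ge h(w_0) = L_*,$$

where the last equality holds because, by the first part, the maximum value of $h$ is $L_*$. Thus $h(w_0) = L(a, w_0) = L_*$. Therefore, $L(a, w_0) = h(w_0) \le L(x, w_0)$ for all $x \in \mathbb{R}^n$. Also, since $(a, w)$ is a saddlepoint, $L(a, y) \le L(a, w) = L(a, w_0)$ for all $y \in \mathbb{R}^r_+$. Consequently, $(a, w_0)$ is a saddlepoint, and by Theorem 16.9.7, $w_0$ is a multiplier. ∎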

An important fact for computational purposes is that since these two problems have the same answer, we can obtain estimates for the solution by sampling. Suppose that we have points $x_0 \in X$ and $y_0 \in \mathbb{R}^r_+$ so that $f(x_0) - h(y_0) < \varepsilon$. Since $h(y_0) \le L(a, y_0) \le f(a) \le f(x_0)$, the optimal value lies in $[h(y_0), f(x_0)]$, and we have a good estimate for it even if we cannot compute it exactly.
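The bracketing estimate can be illustrated on a hypothetical program not taken from the text: minimize $f(x) = (x_1 - 2)^2 + (x_2 - 1)^2$ subject to $g_1(x) = x_1 + x_2 - 2 \le 0$, whose dual function works out by hand to $h(y) = y - y^2/2$ and whose optimal value is $1/2$. In the Python sketch below, each feasible $x_0$ and each $y_0 \ge 0$ gives an interval $[h(y_0), f(x_0)]$ containing the optimal value; the sample points are arbitrary.

```python
def f(x1, x2):
    # objective of the hypothetical program
    return (x1 - 2.0) ** 2 + (x2 - 1.0) ** 2

def g(x1, x2):
    # single constraint g(x) <= 0
    return x1 + x2 - 2.0

def h(y):
    # dual function inf_x [f(x) + y g(x)], worked out by hand for this program
    return y - y * y / 2.0

def bracket(x0, y0):
    """Return (h(y0), f(x0)); the optimal value lies between these two numbers."""
    assert g(*x0) <= 0 and y0 >= 0, "x0 must be feasible and y0 nonnegative"
    return h(y0), f(*x0)

for x0, y0 in [((1.0, 0.5), 0.5), ((1.4, 0.5), 0.9), ((1.5, 0.5), 1.0)]:
    lower, upper = bracket(x0, y0)
    print(f"optimal value in [{lower:.4f}, {upper:.4f}], gap {upper - lower:.4f}")
```

The three intervals are $[0.375, 1.25]$, $[0.495, 0.61]$, and $[0.5, 0.5]$, so the gap shrinks as the sample points improve, and the last pair certifies the optimal value exactly.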

16.10.6. EXAMPLE. Consider a quadratic programming problem. Let $Q$ be a positive definite $n \times n$ matrix, and let $q \in \mathbb{R}^n$. Also let $A$ be an $m \times n$ matrix and $a \in \mathbb{R}^m$. Minimize the quadratic function $f(x) = \langle x, Qx\rangle + \langle x, q\rangle$ over the region $Ax \le a$.

We can assert before doing any calculation that this minimum will be attained.

This follows from the global version, Example 16.7.12, where it was shown that