Griffiths D. Head First Statistics


you are here 4 371

using the normal distribution ii


372 Chapter 9

Find the probability that the combined weight of the bride and

groom is less than 380 pounds using the following three steps.

1. X is the weight of the bride and Y is the weight of the groom, where X ~ N(150, 400) and Y ~ N(190, 500). With this

information, find the probability distribution for the combined weight of the bride and groom.

We need to find the probability distribution of X + Y. Assuming the bride’s and groom’s weights are independent, we find the mean and variance of X + Y by adding together the means and the variances of the X and Y distributions. This gives us

X + Y ~ N(340, 900)

2. Then, using this distribution, find the standard score of 380 pounds.

Since σ = √900 = 30,

$$z = \frac{(x + y) - \mu}{\sigma} = \frac{380 - 340}{30} = \frac{40}{30} = 1.33 \quad \text{(to 2 decimal places)}$$

3. Finally, use the standard score to find P(X + Y < 380).

If we look 1.33 up in standard normal probability tables, we get a probability of 0.9082. This means that

P(X + Y < 380) = 0.9082

Remember how before we used $z = \frac{x - \mu}{\sigma}$? This time around we’re using the distribution of X + Y, so we use $z = \frac{(x + y) - \mu}{\sigma}$.
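As a cross-check, here is a minimal Python sketch of the same three steps. It assumes Python 3.8 or later for the standard library’s `statistics.NormalDist`, which is parameterised by the standard deviation rather than the variance and which treats two distributions added with `+` as independent. The variable names are illustrative only.

```python
from statistics import NormalDist

# Step 1: X + Y for independent X ~ N(150, 400) and Y ~ N(190, 500).
# NormalDist takes a standard deviation, so pass the square roots of the variances.
bride = NormalDist(mu=150, sigma=400 ** 0.5)
groom = NormalDist(mu=190, sigma=500 ** 0.5)
combined = bride + groom          # means add, variances add
print(combined.mean, combined.variance)

# Step 2: standard score of 380 pounds.
z = (380 - combined.mean) / combined.stdev

# Step 3: P(X + Y < 380), once with z rounded as in a printed table
# and once with the unrounded value.
p_table = NormalDist().cdf(round(z, 2))
p_exact = combined.cdf(380)
print(round(z, 2), round(p_table, 4), round(p_exact, 4))
```

Because `cdf` works from the unrounded standard score of 4/3, `p_exact` comes out a little above the table answer of 0.9082, at roughly 0.909.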


Julie’s matchmaker is at it again. What’s the probability that a man will be at least 5 inches taller

than a woman?

In Statsville, the height of men in inches is distributed as N(71, 20.25), and the height of women

in inches is distributed as N(64, 16).


Let’s use X to represent the height of the men and Y to represent the height of the women. This means that

X ~ N(71, 20.25) and Y ~ N(64, 16).

We need to find the probability that a man is at least 5 inches taller than a woman. This means we need to find

P(X > Y + 5)

or

P(X - Y > 5)

To find the mean and variance of X - Y, we subtract the mean of Y from the mean of X, but we still add the variances together (this again assumes X and Y are independent). This gives us

X - Y ~ N(7, 36.25)

We need to find the standard score of 5 inches. Here σ = √36.25 ≈ 6.02, so

$$z = \frac{(x - y) - \mu}{\sigma} = \frac{5 - 7}{6.02} = -0.33 \quad \text{(to 2 decimal places)}$$

We can use this to find P(X - Y > 5). Looking up -0.33 in standard normal probability tables gives P(X - Y < 5) = 0.3707, so

$$P(X - Y > 5) = 1 - P(X - Y < 5) = 1 - 0.3707 = 0.6293$$
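The same calculation can be sketched in Python, again assuming Python 3.8+ and the standard library’s `statistics.NormalDist`. Subtracting one `NormalDist` from another treats them as independent, so the means subtract while the variances add, matching the reasoning above. Variable names are illustrative.

```python
from statistics import NormalDist

# X: men's heights N(71, 20.25); Y: women's heights N(64, 16), in inches.
men = NormalDist(mu=71, sigma=20.25 ** 0.5)
women = NormalDist(mu=64, sigma=16 ** 0.5)

# Subtracting independent normals: the means subtract, the variances still add.
diff = men - women
print(diff.mean, diff.variance)

# Standard score of 5 inches, then P(X - Y > 5) = 1 - P(X - Y < 5).
z = (5 - diff.mean) / diff.stdev
p_table = 1 - NormalDist().cdf(round(z, 2))  # z rounded to -0.33, as in the tables
p_exact = 1 - diff.cdf(5)                    # unrounded z
print(round(z, 2), round(p_table, 4), round(p_exact, 4))
```

The unrounded result is slightly higher than the table answer of 0.6293 (roughly 0.630), again only because z was rounded to two decimal places before the lookup.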


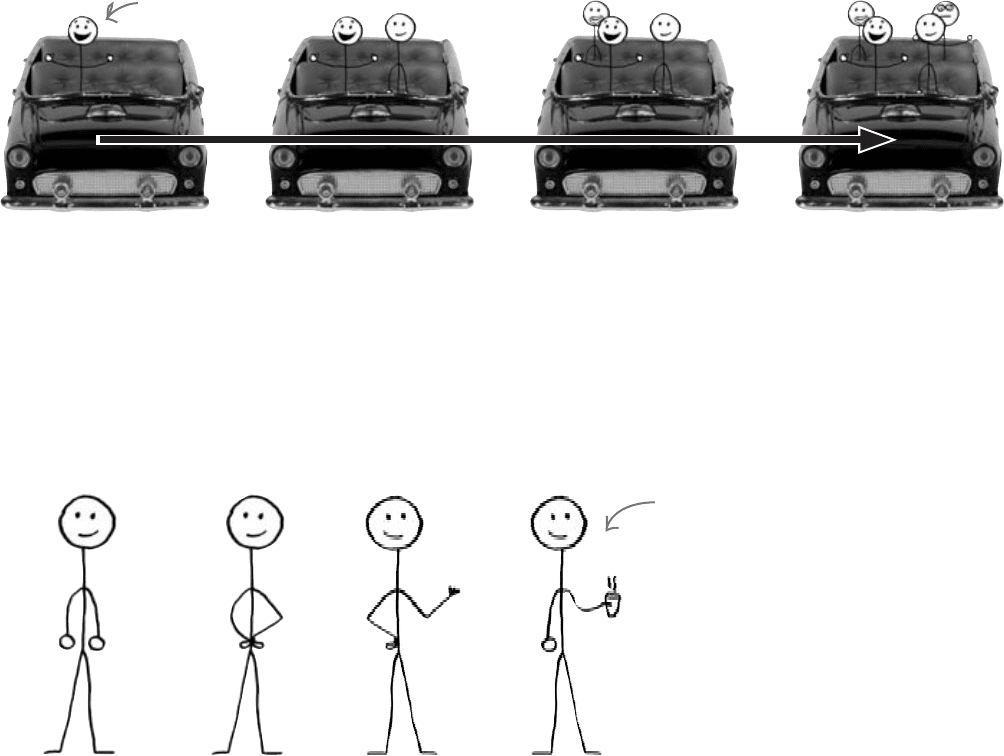

More people want the Love Train

It looks like there’s a good chance that the combined weight of the happy

couple will be less than the maximum the ride can take. But why restrict

the ride to the bride and groom?

Let’s see what happens if we add another car for four more members of

the wedding party. These could be parents, bridesmaids, or anyone else

the bride and groom want along for the ride.

The car will hold a total weight of 800 pounds, and we’ll assume the

weight of an adult in pounds is distributed as

X ~ N(180, 625)

where X represents the weight of an adult. But how can we work out the

probability that the combined weight of four adults will be less than 800

pounds?

Think back to the shortcuts you can use when you calculate expectation

and variance. What’s the difference between independent observations and

linear transformations? What effect does each have on the expectation and

variance? Which is more appropriate for this problem?

Customers are demanding

that we allow more members of the

wedding party to join the ride, and

they’ll pay good money. That’s great, but

will the Love Train be able to handle

the extra load?


Let’s start off by looking at the probability distribution of 4X, where X is

the weight of one adult. Is 4X appropriate for describing the probability

distribution for the weight of 4 people?

4X is actually a linear transform of X: it’s a transformation of X in the form aX + b, where a is equal to 4 and b is equal to 0. This is exactly the same sort of transform as we encountered earlier with discrete probability distributions.

Linear transforms describe underlying changes to the size of the values in the

probability distribution. This means that 4X actually describes the weight of

an individual adult whose weight has been multiplied by 4.

So what’s the distribution of a linear transform?

Suppose you have a linear transform of X in the form aX + b, where $X \sim N(\mu, \sigma^2)$. As X is distributed normally, this means that aX + b is distributed normally too. But what’s the expectation and variance?

Let’s start with the expectation. When we looked at discrete probability

distributions, we found that E(aX + b) = aE(X) + b. Now, X follows a normal

distribution where E(X) = μ, so this gives us E(aX + b) = aμ + b.

We can take a similar approach with the variance. When we looked at discrete probability distributions, we found that $\mathrm{Var}(aX + b) = a^2\,\mathrm{Var}(X)$. We know that Var(X) in this case is given by $\mathrm{Var}(X) = \sigma^2$, so this means that $\mathrm{Var}(aX + b) = a^2\sigma^2$.

Putting both of these together gives us

$$aX + b \sim N(a\mu + b,\ a^2\sigma^2)$$

The new variance is the SQUARE

of a multiplied by the original

variance.

In other words, the new mean becomes aμ + b, and the new variance becomes $a^2\sigma^2$.
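The aX + b rule can be checked with a short Python sketch, assuming Python 3.8+ and the standard library’s `statistics.NormalDist`. Multiplying a `NormalDist` by a constant scales its mean by a and its standard deviation by |a|, so the variance is scaled by a², and adding a constant only shifts the mean. The numbers reuse the adult weight distribution N(180, 625) from the Love Train example.

```python
from statistics import NormalDist

a, b = 4, 0
adult = NormalDist(mu=180, sigma=25)   # X ~ N(180, 625), since sqrt(625) = 25

# Multiplying a NormalDist by a constant scales the mean by a and the
# standard deviation by |a|; adding a constant shifts only the mean.
# So the variance of aX + b is a² times the original variance.
transformed = adult * a + b

print(transformed.mean, transformed.variance)
print(a * 180 + b, a ** 2 * 625)       # the formula: aμ + b and a²σ²
```

Both lines should agree that 4X ~ N(720, 10000). Keep in mind that this describes one adult whose weight is multiplied by 4, not four adults.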

So what about independent observations?

[Figure: panels labeled 1X, 2X and 4X]

The 4X probability

distribution describes

adults whose weights have

been multiplied by 4. The

weight is changed, not the

number of adults.

Linear transforms describe underlying changes in values…

What we wanted was 4 adults,

not 1 adult 4 times actual size.


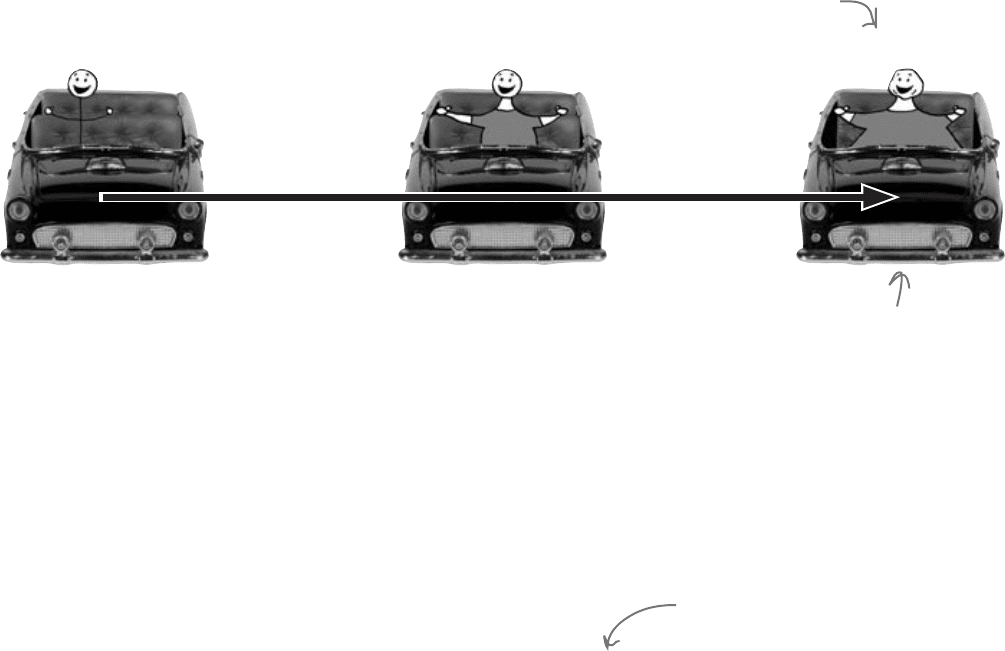

Rather than transforming the weight of each adult, what we really need

to figure out is the probability distribution for the combined weight of

four separate adults. In other words, we need to work out the probability

distribution of four independent observations of X.

The weight of each adult is an observation of X, so this means that the weight of each adult is described by the probability distribution of X. We need to find the probability distribution of four independent observations of X, so this means we need to find the probability distribution of

$$X_1 + X_2 + X_3 + X_4$$

where $X_1$, $X_2$, $X_3$ and $X_4$ are independent observations of X.

Each adult’s weight is an

independent observation of X.

Each adult is an independent

observation of X.

[Figure: X, X + X, X + X + X and X + X + X + X, with the four adults labeled $X_1$, $X_2$, $X_3$ and $X_4$]

…and independent observations describe how many values you have


When we looked at the expectation and variance of independent observations of discrete random variables, we found that

$$E(X_1 + X_2 + \dots + X_n) = nE(X)$$

and

$$\mathrm{Var}(X_1 + X_2 + \dots + X_n) = n\,\mathrm{Var}(X)$$

As you’d expect, these same calculations work for continuous random variables too. This means that if $X \sim N(\mu, \sigma^2)$, then

$$X_1 + X_2 + \dots + X_n \sim N(n\mu,\ n\sigma^2)$$
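A quick sketch of this sum rule, assuming Python 3.8+ and the standard library’s `statistics.NormalDist`. The `+` operator on `NormalDist` always treats its operands as independent, even when the same object is repeated, which is exactly the model of n separate adults drawn from the same distribution. The loop prints the mean and variance for n = 1 to 4.

```python
from functools import reduce
from operator import add
from statistics import NormalDist

adult = NormalDist(mu=180, sigma=25)   # X ~ N(180, 625)

# X1 + X2 + ... + Xn: NormalDist's + treats its operands as independent,
# even when the same object is repeated, which models n separate adults.
for n in range(1, 5):
    total = reduce(add, [adult] * n)
    print(n, total.mean, round(total.variance, 6))  # should match nμ and nσ²
```

The variance grows as n × 625 (625, 1250, 1875, 2500), not as n² × 625 as it would for the linear transform nX.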

Q:

So what’s the difference between

linear transforms and independent

observations?

A: Linear transforms affect the underlying

values in your probability distribution. As

an example, if you have a piece of rope of

a particular length, then applying a linear

transform affects the length of the rope.

Independent observations have to do with

the quantity of things you’re dealing with.

As an example, if you have n independent

observations of a piece of rope, then you’re

talking about n pieces of rope.

In general, if the quantity changes, you’re

dealing with independent observations. If the

underlying values change, then you’re dealing

with a transform.

Q:

Do I really have to know which is

which? What difference does it make?

A: You have to know which is which because it makes a difference in your probability calculations. You calculate the expectation for linear transforms and independent observations in the same way, but there’s a big difference in the way the variance is calculated. If you have n independent observations, then the variance is n times the original. If you transform your probability distribution as aX + b, then your variance becomes $a^2$ times the original.

Q:

Can I have both independent

observations and linear transforms in the

same probability distribution?

A: Yes you can. To work out the probability

distribution, just follow the basic rules for

calculating expectation and variance. You

use the same rules for both discrete and

continuous probability distributions.
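To see the variance difference described in these answers in action, here is a small Monte Carlo sketch in Python using only the standard library. The seed and the number of trials are arbitrary choices, and the simulated means and variances will be close to, not exactly equal to, the theoretical values.

```python
import random
from statistics import fmean, pvariance

random.seed(1)          # arbitrary fixed seed so reruns give the same numbers
N = 100_000             # number of simulated trials
mu, sigma = 180, 25     # X ~ N(180, 625)

# Linear transform: a single adult's weight multiplied by 4.
four_x = [4 * random.gauss(mu, sigma) for _ in range(N)]

# Independent observations: the weights of four separate adults added together.
four_adults = [sum(random.gauss(mu, sigma) for _ in range(4)) for _ in range(N)]

# Both means should be near 4 × 180 = 720, but the variances should be
# near 4² × 625 = 10000 for 4X and only 4 × 625 = 2500 for X1 + X2 + X3 + X4.
print(fmean(four_x), pvariance(four_x))
print(fmean(four_adults), pvariance(four_adults))
```

The means agree, which is why the expectation is calculated the same way in both cases; only the variances differ.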

If $X \sim N(\mu_x, \sigma_x^2)$ and $Y \sim N(\mu_y, \sigma_y^2)$, and X and Y are independent, then

$$X + Y \sim N(\mu_x + \mu_y,\ \sigma_x^2 + \sigma_y^2)$$

$$X - Y \sim N(\mu_x - \mu_y,\ \sigma_x^2 + \sigma_y^2)$$

If $X \sim N(\mu, \sigma^2)$ and a and b are numbers, then

$$aX + b \sim N(a\mu + b,\ a^2\sigma^2)$$

If $X_1, X_2, \dots, X_n$ are independent observations of X where $X \sim N(\mu, \sigma^2)$, then

$$X_1 + X_2 + \dots + X_n \sim N(n\mu,\ n\sigma^2)$$
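The four rules above can be written as plain arithmetic on (mean, variance) pairs, which mirrors the N(μ, σ²) notation directly. This is a minimal sketch; the helper names `add`, `subtract`, `transform` and `independent_sum` are hypothetical, and every rule that combines two variables assumes they are independent. The first four calls reproduce distributions worked out in this chapter.

```python
# Hypothetical helpers: each normal distribution is stored as a
# (mean, variance) pair, matching the N(μ, σ²) notation in the box above.

def add(x, y):
    """X + Y for independent X and Y: means add, variances add."""
    return x[0] + y[0], x[1] + y[1]

def subtract(x, y):
    """X - Y for independent X and Y: means subtract, variances still add."""
    return x[0] - y[0], x[1] + y[1]

def transform(x, a, b):
    """aX + b: mean becomes aμ + b, variance becomes a²σ²."""
    return a * x[0] + b, a ** 2 * x[1]

def independent_sum(x, n):
    """X1 + X2 + ... + Xn for n independent observations of X."""
    return n * x[0], n * x[1]

print(add((150, 400), (190, 500)))      # bride + groom: (340, 900)
print(subtract((71, 20.25), (64, 16)))  # man - woman: (7, 36.25)
print(independent_sum((180, 625), 4))   # four adults: (720, 2500)
print(transform((180, 625), 4, 0))      # one adult scaled by 4: (720, 10000)

# Rules compose: a hypothetical pounds-to-kilograms conversion of the
# four-adult total is a linear transform applied after an independent sum.
kg = transform(independent_sum((180, 625), 4), 0.45359237, 0)
print(kg)
```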


Expectation and variance for independent observations


Let’s solve Dexter’s Love Train dilemma. What’s the probability that the combined weight of 4

adults will be less than 800 pounds? Assume the weight of an adult is distributed as N(180, 625).


If we represent the weight of an adult as X, then X ~ N(180, 625). We need to start by finding

how the weight of 4 adults is distributed. To find the mean and variance of this new distribution,

we multiply the mean and variance of X by 4. This gives us

$$X_1 + X_2 + X_3 + X_4 \sim N(720, 2500)$$

To find $P(X_1 + X_2 + X_3 + X_4 < 800)$, we start by finding the standard score. Here σ = √2500 = 50, so

$$z = \frac{x - \mu}{\sigma} = \frac{800 - 720}{50} = \frac{80}{50} = 1.6$$

Looking this value up in standard normal probability tables gives us a value of 0.9452. This means that

$$P(X_1 + X_2 + X_3 + X_4 < 800) = 0.9452$$
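The Love Train calculation can be reproduced with a short Python sketch, assuming Python 3.8+ and the standard library’s `statistics.NormalDist`. Because 80/50 is exactly 1.6, no rounding of z is needed here, so the result should match the table lookup.

```python
from functools import reduce
from operator import add
from statistics import NormalDist

adult = NormalDist(mu=180, sigma=25)        # X ~ N(180, 625)
total = reduce(add, [adult] * 4)            # X1 + X2 + X3 + X4 ~ N(720, 2500)

z = (800 - total.mean) / total.stdev        # standard score of 800 pounds
p = total.cdf(800)                          # P(X1 + X2 + X3 + X4 < 800)
print(round(z, 2), round(p, 4))
```

Using `reduce` with `operator.add` builds the sum of four independent observations; writing `adult * 4` instead would give the linear transform 4X and a variance four times too large.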
